- Sony is working on a high full well capacity pixel design. The new solution is supposed to improve the full well of very small pixels. Diminishing full well is one of the main limitations of pixel downscaling.

- Kodak's p-type pixel is not meeting the company's QE targets, in spite of many process iterations. W-RGB processing also still leaves some artifacts. To make the sensor more competitive, Kodak recently taped out an improved version of it.

- SuperPix abandons the consumer image sensor market. It looks like the company was unable to capture any meaningful share of the market, so after a few years of trying it ceased its efforts. It's not clear whether the company will continue operating and look for other markets.

- Galaxycore plans to move its production to the Hua Hong NEC foundry. It's not clear whether Galaxycore is completely abandoning its former foundry, SMIC, or just plans to use HH NEC as a second source. HH NEC has no CIS experience so far and is now developing its CIS process together with Galaxycore. By the way, HH NEC is in merger talks with Grace, another China-based foundry. Grace had some image sensor experience in the past, cooperating with Cypress and Japanese companies, but it later discontinued its CIS process development.

- ST did a superb job on 1.4um FSI pixel QE enhancement. The company uses a 65nm process with a metal stack only 1.5um high to achieve industry-leading QE. In fact, ST's FSI results make Omnivision's early switch to BSI look premature.

Tuesday, June 30, 2009

Rumors Galore

A few rumors have been brought to my attention recently. Normally, I publish rumors only if they are independently confirmed by at least one more source. Still, mistakes are possible, so take all the rumors below with a grain of salt:

Panavision and Tower Unveil Fast Linear Sensor

Yahoo: Panavision Imaging and Tower announced production of Panavision’s family of DLIS-2K re-configurable line scan CMOS image sensors. The DLIS-2K sensors were developed using Tower’s Advanced Photo Diode pixel process and pixel IP with Panavision’s patented Imager Architecture. The sensor is claimed to be the world's fastest single port re-configurable linear image sensor.

The DLIS-2K Imager is a Quad Line Sensor with 11-bit A/D, High Dynamic Range, and Correlated Multi-Sampling (CMS) for enhanced sensitivity. The sensor has ambient light subtraction, oversampling, a non-destructive read mode, binning of different integrations, auto-thresholding and a high resolution mode with a 120MHz pixel readout.

The imager uses 4um x 32um pixels with sensitivity exceeding 100 V/lux-s. The sensor is fabricated in a 0.18um process.

Friday, June 26, 2009

Caltech Patent Saga Goes On

Bloomberg reports on new developments in Caltech's CMOS sensor patent infringement suit:

A lawyer for Caltech said the university “reached basic settlement terms” with another party in the case and that court papers may be filed in two to three weeks; the party he refers to is Canon.

- Sony settled the lawsuit. The terms weren’t disclosed in a federal court filing in the case. “Caltech hereby informs the court that it has resolved its dispute” with Sony Electronics and Tokyo-based Sony Corp., the Pasadena, California-based school said in a June 23 filing in Los Angeles.

- Nikon and Panasonic denied Caltech’s infringement claims, said the patents are invalid and accused the school and inventors of misleading the US Patent and Trademark Office in order to obtain the patents.

- Olympus also denied infringing any valid patent and claimed the patents were unenforceable.

- Samsung hasn’t yet responded to the complaint, according to the court docket.

Aptina Sales Up 53% QoQ

Yahoo: Micron reported the quarterly results for its fiscal quarter ended June 4, 2009. Sales of image sensors in the last quarter increased 53% compared to the preceding quarter as a result of a significant increase in unit sales. The company’s gross margin on sales of image sensors was two percent and continues to be negatively impacted by underutilization of dedicated 200mm manufacturing capacity.

The Seeking Alpha earnings call transcript mentions that Aptina's expenses run at around $30+ million in R&D and about $9 million in SG&A (Selling, General & Administrative Expense). The spin-off transaction is expected to be finalized in early to mid-July.

Thursday, June 25, 2009

Dongbu Ships 2MP Sensors To SETi and More

Yahoo: Dongbu announced that it has begun shipping 2MP sensors to SETi. The new chips, processed at the 110nm node, will primarily target 2MP camera phones in developing nations. Dongbu plans to ship 3MP and 5MP versions to SETi by early next year. These future higher resolution versions, also processed at the 110nm node, will use a smaller pixel to compete head-on with 90nm-node CIS chips.

According to Joon Hwang, EVP of Dongbu HiTek, “Price competition for CIS chips is getting fiercer, especially in the Chinese market. To sharpen our competitive edge, we are maximizing useful die per wafer by driving the highest quality levels and minimizing the chip size.” He disclosed that the Dongbu technologists are making good progress toward shrinking the size of the pixel to 1.4um, which will enable its 110nm-node CIS chips to compete directly with 90nm-node CIS chips.
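The link Joon Hwang draws between chip size and useful die per wafer can be made concrete with a common first-order gross-die-per-wafer approximation plus a simple Poisson yield factor. This is only an illustrative sketch: the 200mm wafer diameter, the two die areas and the defect density are hypothetical values, not Dongbu figures, and the model ignores edge exclusion and scribe lines.

```python
import math


def gross_die_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate: wafer area / die area, minus dies lost at the round edge."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def good_die_per_wafer(wafer_diameter_mm: float, die_area_mm2: float,
                       defects_per_cm2: float) -> int:
    """Gross die scaled by a simple Poisson yield, exp(-die area * defect density)."""
    die_area_cm2 = die_area_mm2 / 100.0
    yield_fraction = math.exp(-die_area_cm2 * defects_per_cm2)
    return int(gross_die_per_wafer(wafer_diameter_mm, die_area_mm2) * yield_fraction)


if __name__ == "__main__":
    # Hypothetical die areas (mm^2) for the same sensor before and after a shrink,
    # on an assumed 200mm wafer with an assumed 0.5 defects/cm^2.
    for area in (25.0, 16.0):
        print(area, gross_die_per_wafer(200, area), good_die_per_wafer(200, area, 0.5))
```

In this example, shrinking the die from 25 to 16 mm² raises the gross die count by well over half, and the smaller die also yields slightly better. Both effects work in the direction Hwang describes.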

Dongbu has drawn up plans to serve a broader range of applications such as image sensors for vehicles and CCTVs. The company expects to continue investing in R&D to improve CIS image quality and eventually license its CIS IP to qualified fabless firms worldwide.

Microsoft Confirms 3DV Acquisition

Seeking Alpha: Microsoft confirmed that it acquired 3DV Systems, the maker of 3D image sensors and solutions. One interesting statement in the article is that Microsoft's upcoming gesture control for the Xbox (Project Natal) integrates both 3DV and Primesense solutions.

Update: Globes also writes that Microsoft's Israel "R&D center helped Microsoft in buying the intellectual property of 3DV Systems, and in the wake of that dozens of the company's employees were recruited to work at the development center."

Wednesday, June 24, 2009

CMOSIS Paper On Binning

CMOSIS put online its pixel binning presentation from the Frontiers in Electronic Imaging 2009 Conference held in Munich on June 15-16. The main question is whether CMOS sensors can be as flexible in pixel binning as CCDs while still offering their other functional advantages over CCDs.
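One reason binning comes easily to CCDs is that charge from several pixels can be summed before a single read-out, so read noise is added only once. A CMOS sensor that reads each pixel separately and sums the results digitally adds the read noise of every pixel. The sketch below compares the two cases with a simple shot-noise plus read-noise model; the signal level, read noise and 2x2 bin size are hypothetical, dark current is ignored, and the model is not taken from the CMOSIS paper.

```python
import math


def binned_snr(signal_per_pixel_e: float, read_noise_e: float,
               n_pixels: int, charge_domain: bool) -> float:
    """SNR of an n-pixel bin under shot noise plus read noise (dark current ignored).

    charge_domain=True : charges are summed first and read once (CCD-style).
    charge_domain=False: each pixel is read separately and summed digitally.
    """
    signal = n_pixels * signal_per_pixel_e
    reads = 1 if charge_domain else n_pixels
    noise = math.sqrt(signal + reads * read_noise_e ** 2)  # shot + read noise in quadrature
    return signal / noise


if __name__ == "__main__":
    # Hypothetical low-light case: 10 e- per pixel, 5 e- rms read noise, 2x2 bin.
    print("charge-domain:", round(binned_snr(10, 5, 4, True), 2))
    print("digital:      ", round(binned_snr(10, 5, 4, False), 2))
```

With these numbers, the charge-domain bin reaches an SNR of about 5.0 versus about 3.4 for digital summation. The gap disappears as read noise approaches zero, which is why low read noise matters so much for CMOS binning.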

Tuesday, June 23, 2009

Omnivision Tries to Get Back the Lost VGA Market

Digitimes reports that OmniVision will offer an inexpensive VGA CMOS image sensor, the 7675, in September 2009, trying to recover the global market share it lost to competition from Samsung and Hynix. Digitimes probably meant SETi, Omnivision's chief competitor in the Chinese market, rather than Hynix and Samsung.

Digitimes' sources claim that OmniVision's 7675 has better light sensitivity because its optical format (1/9-inch) is larger than Hynix's 1/10-inch. HP decided to use the 7675 in its low-cost Mini-note family.

The sources expect OmniVision will stop volume production of its 7690 after it launches the 7675.

"Go Get Papers" Search Engine

I've just come across a new paper search engine that offers quite a large collection of CMOS sensor papers.

Novatek Enters Image Sensor Market?

Digitimes writes that, according to its sources, Novatek plans to enter the image sensor market with a 5MP product. Novatek says that it is evaluating the feasibility of designing CIS products, but so far has no operational plans for production.

It looks like Novatek is following in the steps of its bigger competitor Himax, seeking to extend its market beyond LCD drivers. Rumors that Novatek is designing image sensors at TSMC have been circulating for quite a long time. However, most of the rumors I've heard so far said that Novatek's first product would be a VGA sensor for the Chinese market.

Monday, June 22, 2009

Omnivision 3MP in iPhone 3GS

EETimes posted Portelligent's findings from its reverse engineering of the iPhone 3GS: Apple uses an Omnivision 3MP sensor in the phone.

Omnivision Announces 1.75um BSI Sensors

Yahoo: Omnivision introduced its 1.75um OmniBSI family, consisting of the 5MP OV5650 RAW sensor for high resolution mobile phone cameras and the 2MP OV2665 SoC. The new devices combine low-light sensitivity of greater than 1400 mV/(lux-sec) and a 2x improvement in SNR with the industry's lowest stack height for today's ultra-slim mobile phones.

The pixel performance of the 1/3.2" 5MP OV5650 enables high frame rate HD video at 60 fps over a 10-bit parallel port or two-lane MIPI, and the sensor has 256 bytes of on-chip memory available for image tuning. The 1/5" 2MP OV2665 SoC provides a parallel or single-lane MIPI interface and incorporates an ISP with features such as lens correction, auto exposure, auto white balance, and auto flicker.

Both the OV5650 and OV2665 are currently sampling with volume production slated for the second half of calendar 2009.

Friday, June 19, 2009

Multi-Exciton Generation Pixels

"Breakthrough" seems to be a popular word this week. Just a few days after the sCMOS sensor breakthrough, Science Daily and a few other sources report that a team of University of Toronto scientists has created a multi-exciton generation (MEG) light sensor. Until now, no group had collected an electrical current from a device that takes advantage of MEG.

"...the image sensor chips inside cameras collect, at most, one electron's worth of current for every photon (particle of light) that strikes the pixel," says Ted Sargent, professor in University of Toronto's Department of Electrical and Computer Engineering. "Instead generating multiple excitons per photon could ultimately lead to better low-light pictures."

This sounds really interesting. Unfortunately, there are not enough details in this PR to judge the feasibility of this approach for real sensors.

Update: The original article is here. It appears to be quite far from anything practical for mainstream sensors.

Thursday, June 18, 2009

Samsung, Aptina Sensor Shortages

Digitimes: The supply of image sensors from Samsung is currently falling 30-40% short of demand, pushing the company to ramp up CIS production, according to sources at Taiwan camera module makers. The shortages are unlikely to ease until Q3 2009.

Some camera module makers have also seen the supply of Aptina's sensors tighten and immediately turned to place orders with Samsung, expanding Samsung's supply gap, the sources indicated.

LinkedIn Image Sensor Group

It came to my attention that LinkedIn has a CMOS/CCD Image Sensor Group with more than 180 members already. It looks like an impressive list and also a useful place to keep track of people.

Wednesday, June 17, 2009

sCMOS Sensor Presented

Bio Optics World Magazine: A whitepaper presented this week at the Laser World of Photonics Conference and Exhibition (Munich, Germany, June 15-18) describes scientific CMOS (sCMOS) technology. It is said to be based on next-generation image sensor design and fabrication techniques and to be capable of outperforming most scientific imaging devices on the market today. sCMOS is said to simultaneously offer extremely low noise, rapid frame rates, wide dynamic range, high QE, high resolution, and a large field of view.

The development is the result of the combined resources of Andor Technology (Northern Ireland), Fairchild Imaging (United States) and PCO (Germany). The companies have opened an sCMOS web site entirely devoted to the new sensors.

Current scientific imaging technology standards suffer limitations in relation to a strong element of 'mutual exclusivity' between performance parameters, i.e. one can be optimized at the expense of others. sCMOS can be considered unique in its ability to concurrently deliver on many key parameters, whilst eradicating the performance drawbacks that have traditionally been associated with conventional CMOS imagers.

Performance highlights of the first sCMOS technology sensor include:

- Sensor format: 5.5 megapixels (2560(h) x 2160(v))

- Read noise: < 2 e- rms @ 30 frames/s; < 3 e- rms @ 100 frames/s

- Maximum frame rate: 105 fps for rolling shutter, 52.5fps for global shutter modes

- Pixel size: 6.5 um

- Dynamic range: > 16,000:1 (@ 30 fps)

- QE max: 60%

- Read out modes: Rolling and Global shutter (user selectable)
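A quick sanity check of the listed figures: 2560 x 2160 is indeed about 5.5 megapixels, the maximum rolling-shutter frame rate implies an aggregate pixel rate of roughly 580 Mpixel/s, and a 16,000:1 dynamic range corresponds to about 84 dB. The sketch below does only this arithmetic and assumes the conventional 20*log10 definition of dynamic range in dB.

```python
import math

WIDTH_PX, HEIGHT_PX = 2560, 2160  # sensor format from the spec list


def pixel_rate(fps: float) -> float:
    """Aggregate pixel throughput (pixels per second) at a given frame rate."""
    return WIDTH_PX * HEIGHT_PX * fps


def dynamic_range_db(ratio: float) -> float:
    """Dynamic range ratio expressed in dB (20*log10 convention)."""
    return 20 * math.log10(ratio)


if __name__ == "__main__":
    print(f"megapixels: {WIDTH_PX * HEIGHT_PX / 1e6:.2f}")
    print(f"rolling shutter @105 fps: {pixel_rate(105) / 1e6:.0f} Mpix/s")
    print(f"global shutter @52.5 fps: {pixel_rate(52.5) / 1e6:.0f} Mpix/s")
    print(f"dynamic range 16000:1 = {dynamic_range_db(16000):.1f} dB")
```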

As for the implementation details, the sensor has a split readout scheme in which the top and bottom halves of the sensor are read out independently. Each column is coupled to two amplifiers, with high and low gain, and to two ADCs, as in the figure below:

Update: According to the whitepaper, the sensor has <1% non-linearity, which can be corrected down to 0.2%. Antiblooming protection is stated as better than 10,000:1. The charge transfer time is said to be less than 1us, enabling very short exposure times. This again raises the question of charge transfer completeness and image lag.

Update #2: Below is the chip view. The readout occupies a huge area, even considering that there are two separate readouts, at the top and bottom, with double CDS and ADCs in each of them. Why is it so large? Maybe because the noise is suppressed in a brute-force manner by using very large caps?
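A common motivation for a dual-gain column readout like the one described above is extended dynamic range: the high-gain channel gives low read noise in the shadows, the low-gain channel covers the bright end, and the two codes are stitched into one linear value. The whitepaper's actual combination method is not described here, so the following is only a minimal sketch under assumed parameters: the 11-bit ADC full scale, the gain ratio of 30 and the 90% switch-over point are all hypothetical.

```python
from typing import List

ADC_FULL_SCALE = 2047  # hypothetical 11-bit ADC


def merge_dual_gain(high_gain: List[int], low_gain: List[int],
                    gain_ratio: float, switch_fraction: float = 0.9) -> List[float]:
    """Combine per-pixel high- and low-gain ADC codes into one linear value.

    Output is expressed in high-gain ADC units: the high-gain code is used while it
    is safely below full scale; otherwise the low-gain code is rescaled by the
    (assumed, calibrated) high/low gain ratio.
    """
    threshold = switch_fraction * ADC_FULL_SCALE
    merged = []
    for hg, lg in zip(high_gain, low_gain):
        if hg < threshold:
            merged.append(float(hg))        # dim pixel: use the low-noise channel
        else:
            merged.append(lg * gain_ratio)  # bright pixel: high-gain channel near clipping
    return merged


if __name__ == "__main__":
    # Two hypothetical pixels: one dim, one that saturates the high-gain ADC.
    print(merge_dual_gain([120, 2047], [4, 1500], gain_ratio=30.0))  # -> [120.0, 45000.0]
```

A practical implementation would also need per-column gain and offset calibration, and possibly a blended transition region to avoid visible seams. Those details are outside this sketch.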

Tuesday, June 16, 2009

Aptina Paper on Sharpness and Noisiness Perception

SPIE published Aptina's paper on the perceived importance of sharpness and noisiness across the picture area, presented at the 2009 Electronic Imaging Symposium.

Mechanical Shutter Competition

etnews.co.kr reports on the new Samsung 12MP camera phone, which uses a mechanical shutter from the Korean company Sungwoo Electronics.

Until now, the Japanese maker Copal has held 90% of the global mechanical shutter market, a position comparable to HP's in the printer business. Backed by its patents, Copal has dominated shutter manufacturing for digital cameras worldwide. However, it overlooked the mobile phone market. By starting shutter manufacturing for mobile phones, Sungwoo Electronics was able to secure many patents and then manufacture a shutter for 12-megapixel cameras.

Nemotek Prepares Wafer Level Cameras and Lenses Production

EETimes: Nemotek, the Rabat, Morocco-based company readying production of wafer level lenses and cameras, has qualified the first parts at its newly built manufacturing and packaging facility and expects to start shipping devices in the fourth quarter of this year.

Nemotek has to date invested about $40M in a dedicated facility in Rabat, and the total proposed value of the investment over the next three years is $100M, to reach capacities of about 150,000 wafers a year by 2012.

Initially, the capacity is about 17,000 wafer line cameras a year from the 10,000 m2 facility, located in the Rabat Technopolis Park, a hub for technology development in Morocco. The facility includes a certified Class 10 clean room that is said to be the first in Africa.

Monday, June 15, 2009

Kodak Explores The Moon

Yahoo: With the scheduled launch of the Lunar Reconnaissance Orbiter, imaging technology from Kodak will be used to create a comprehensive atlas of the moon’s features and resources to aid in the design of a future manned lunar outpost.

The orbiter’s Wide Angle Camera will provide a “big picture” view of the moon by capturing images with 100-meter resolution. Based on the KODAK KAI-1001 Image Sensor, a one-megapixel device that provides both high sensitivity and high dynamic range, this camera will also use seven different color bands to identify spectral signatures of minerals that may be present on the moon’s surface.

Two Narrow Angle Cameras on the orbiter will capture high-resolution images of the moon’s surface at 0.5 meter-per-pixel using KODAK KLI-5001 Image Sensors, providing the same level of detail as the highest resolution satellite images of Earth commercially available today. As it fulfills its primary mission to map the surface of the moon, the LRO will also fly over landing sites from the historic Apollo missions, allowing these high-resolution cameras to capture the first images of Apollo-era artifacts from lunar orbit.

Sunday, June 14, 2009

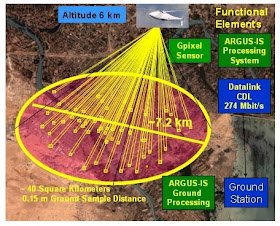

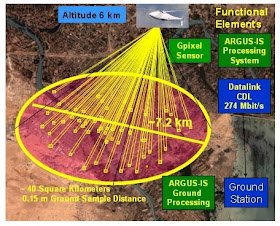

1.8 Gigapixel Sensor Planned

It came to my attention that DARPA's Autonomous Real-time Ground Ubiquitous Surveillance - Imaging System (ARGUS-IS) program plans to develop and use a 1.8 gigapixel video sensor. The gigapixel sensor will be integrated into the A-160 Hummingbird, an unmanned air vehicle, for flight testing and demonstrations. The sensor produces more than 27 gigapixels per second running at a frame rate of 15 Hz. The airborne processing subsystem is modular and scalable, providing more than 10 TeraOPS of processing.

Update: A 1.8GP pixel count translates into a square sensor of roughly 42.4K x 42.4K pixels. Assuming a medium format 6cm x 6cm sensor, the pixel size is about 1.4um. I wonder who can provide this kind of technology to DARPA.
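The estimate in the update is easy to reproduce. The sketch below assumes, as the update does, a single square array on a 6cm x 6cm medium format die; it says nothing about how such a sensor would actually be built. It also confirms that 1.8 gigapixels at 15 Hz matches the quoted 27 gigapixels per second.

```python
import math


def square_sensor(total_pixels: int, side_mm: float):
    """Side length in pixels and pixel pitch (um) of a square array of total_pixels."""
    side_px = math.sqrt(total_pixels)
    pitch_um = side_mm * 1000.0 / side_px
    return side_px, pitch_um


if __name__ == "__main__":
    pixels = 1_800_000_000                                   # 1.8 gigapixels
    side_px, pitch_um = square_sensor(pixels, side_mm=60.0)  # assumed 6cm x 6cm format
    print(f"{side_px:,.0f} px per side, {pitch_um:.2f} um pitch")
    print(f"{pixels * 15 / 1e9:.0f} Gpixel/s at 15 Hz")
```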

Friday, June 12, 2009

Image Sensors at ESSCIRC 2009

ESSCIRC 2009 is to be held on September 14-18, 2009 in Athens, Greece. The conference has two sessions on imaging: "3-D Imaging" and "Optical Sensors and Vision Systems". It looks like 3D imaging is becoming popular if it warrants a session of its own.

Thanks to A.T. for letting me know.

Samsung 1.3MP Sensors Shortages

Digitimes reports that the lead time for Samsung 1.3MP sensor orders has recently grown from one month to three. As a result, Taiwan-based module vendors are experiencing shortages. The sensor is also used in Samsung and Lenovo netbooks, both of which suffer from its short supply.

Thursday, June 11, 2009

ST TSV for CIS Process Presented

Semiconductor International reviews TSV papers from 2009 ECTC (Electronic Components and Technology Conference).

In one of the papers, CEA Leti, Brewer Science and EVG present the use of a temporary thinning carrier in the ST Micro TSV image sensor product. The product relies on 70um-wide TSVs in a wafer thinned to 70um, so the via aspect ratio is 1:1.

Wednesday, June 10, 2009

Cypress Designed BSI Sensor for NEC Toshiba Space Systems

Cypress designed a custom BSI CMOS image sensor for a satellite hyperspectral imaging application from NEC TOSHIBA Space Systems, Ltd. In addition to the BSI, the imager integrates Cypress’s patented global-shutter technology, which enables fast frame rates with the ability to read one frame while the next is being captured. The sensor is arranged in a 1024 column x 256 row configuration. The design includes on-board ADCs and a high-speed digital interface tailored for space applications.

The imager is planned to be in space-qualified flight models by mid-2010. The sensor is expected to be available for other space and military applications after flight qualification is completed.

Tuesday, June 09, 2009

100 Sensors to Every Soldier

Defense News reports on a new idea to equip each soldier with a high resolution imaging system consisting of about 100 tiny 1/16" camera modules and a processor that recombines these 100 low resolution images into a single high resolution one. The system is being developed by Southern Methodist University (SMU) in Dallas and Santa Clara University in California and is funded by DARPA. The researchers also plan to add MEMS mirrors to redirect the cameras to focus on subjects of interest.

If the army adopts this program, it would be quite a significant new market for image sensors.

Friday, June 05, 2009

Panasonic May Delay Tonami Fab Opening

Trading Markets: Panasonic is considering postponing the opening of its image sensor plant in Tonami, Toyama Prefecture, for several months due to poor business outlook for image sensors.

Panasonic is investing 94B yen in the new plant, which had been scheduled to start producing image sensors for digital cameras, camcorders and other products in August.

Thursday, June 04, 2009

Tessera Talks About Sensor Packaging Trends

Solid State Technology published another article by Tessera's Giles Humpston on challenges in camera module designs. Here is the projection on wafer level packaging adoption in camera phone modules:

Pixpolar Presents Its Technology

In response to our discussion, Artto Aurola from Pixpolar commented on the company's MIG operation and its benefits over conventional sensors. To give his comments more visibility, I'm moving them to the front page:

--------------

Hello everybody,

I was asked to comment on this conversation about Pixpolar's Modified Internal Gate (MIG) technology. The MIG technology has excellent low light performance due to its high fill factor, high sensitivity, low noise and long integration time (allowed by multiple CDS read-out, which is enabled by the MIG sensor's non-destructive read-out ability). Therefore the low end of the dynamic range can be extended considerably in MIG sensors. A reasonable value can also be obtained at the high end of the dynamic range; typically it is easier to improve the high end than the low end of the dynamic range in any sensor. In case you want to dive deeper into the technological aspects, you'll find below a summary of the low light benefits of MIG sensors compared to Complementary Metal Oxide Semiconductor (CMOS) Image Sensors (CIS) and to DEpleted Field Effect Transistor [DEPFET, aka Bulk Charge Modulated Device (BCMD) etc.] sensors, and a few words about pixel scaling.

MIG COMPARISON TO CIS IN LOW LIGHT

A critical problem in most digital cameras is the image quality in low light. In order to maximize the low light performance the Signal to Noise Ratio (SNR) needs to be as high as possible, which can be achieved:

1) BY MAXIMIZING CONVERSION AND COLLECTION EFFICIENCY, i.e. by converting as many photons to charges as possible, by collecting these photo-induced charges (signal charges) as efficiently as possible and by transporting these signal charges to the sense node as efficiently as possible. In CIS this is done by incorporating Back Side Illumination (BSI) and micro lenses into the sensor and through the design of the pinned photodiode and the transfer gate. The essentially fully depleted MIG pixel provides a decent potential gradient for signal charge transport throughout the pixel, and up to 100% fill factor in the case of BSI. These aspects are missing in CIS, but the micro lenses compensate for this fairly well.

2) BY MINIMIZING NOISE.

2A) Dark noise. The most prominent dark noise component is the interface-generated dark current, which has been suppressed considerably in CIS by the pinned photodiode structure, so that the only possible source of interface dark noise during integration is the transfer gate. In MIG sensors the interface-generated dark current and the signal charges can be completely separated both during integration and during read-out. The advantage is, however, not decisive.

2B) Read noise:

2B1) Reset noise. This noise component can be avoided in CIS using Correlated Double Sampling (CDS), which requires a four-transistor (4T) CIS pixel architecture. In the MIG pixel, CDS is always possible because the sense node can be completely depleted.

2B2) Sense node capacitance. In CIS [and in Charge Coupled Devices (CCDs)] an external gate configuration is used for read-out wherein the sense node comprises the floating diffusion, the source follower gate and the wire connecting the two. The signal charge is shared between all the three parts instead of being solely located at the source follower gate meaning that CIS suffers from a large sense node stray capacitance degrading the sensitivity. In MIG sensors the signal charge is collected at a single spot (i.e. at the MIG) which is located inside silicon below the channel of a FET (or below the base of a bipolar transistor) meaning that the sense node capacitance is as small as possible enabling high sensitivity.
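The sensitivity argument above comes down to the conversion gain of the sense node, q/C: the smaller the total capacitance the signal charge sits on, the larger the voltage step per electron. The capacitance values below are hypothetical round numbers chosen only to illustrate the scaling; they are not measured CIS or MIG figures.

```python
ELECTRON_CHARGE_C = 1.602176634e-19  # elementary charge in coulombs


def conversion_gain_uv_per_e(sense_node_capacitance_f: float) -> float:
    """Voltage step per collected electron (microvolts) for a given sense node capacitance."""
    return ELECTRON_CHARGE_C / sense_node_capacitance_f * 1e6


if __name__ == "__main__":
    # Hypothetical total sense node capacitances, in farads.
    for label, cap in [("larger node (FD + SF gate + wiring)", 2.0e-15),
                       ("smaller node", 0.5e-15)]:
        print(f"{label}: {conversion_gain_uv_per_e(cap):.0f} uV/e-")
```

Because the noise of the read chain that follows is roughly fixed in volts, a higher conversion gain translates directly into lower input-referred noise in electrons.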

2B3) 1/f noise. It is mainly caused by charge trapping and detrapping at the interface between the silicon and the gate insulator of the source follower transistor. In CIS it can be reduced by CDS and by buried channel source follower transistors. The problem with external gate read-out, however, is that the signal charges always modulate the channel threshold potential through the interface. For this reason, and because of the large sense node stray capacitance, a charge trapped at the interface below the source follower gate always produces a larger (false) signal than a real signal charge at the sense node, which makes read noise reduction in CIS considerably harder.

In MIG sensors the 1/f noise can likewise be reduced by CDS, i.e. by subtracting the result measured while the signal charges are in the sense node (MIG) from the result measured after the sense node has been emptied of signal charges (the sense node can be completely depleted!). A complementary way to reduce 1/f noise in MIG sensors is a buried channel Metal Oxide Semiconductor (MOS) structure with a relatively thin, high-k gate insulator (thin high-k insulators are common in standard MOS transistors) and a relatively deep buried channel. This combination considerably reduces the impact of interface-trapped charge, resulting in very low 1/f noise. In other words, a signal charge at the MIG produces a much larger signal than a charge trapped at the interface because a) the gate-to-silicon distance is much smaller than the gate-to-channel distance, b) the external gate is held at a fixed potential during read-out, c) the sense node stray capacitance is very small, and d) the signal charge does not modulate the channel threshold potential through the interface. Instead of a MIG transistor with a buried channel MOS structure, very low 1/f noise can naturally also be achieved with a JFET or Schottky gate MIG transistor.

2C) Multiple CDS read-out / double MIG. In a double MIG sensor the read noise can be reduced further by reading the integrated charge several times, each time with CDS. Repeated CDS read-out of the same signal charges is possible because the signal charges can be moved back and forth to the sense node (MIG) and because the sense node can be completely depleted, i.e. the signal charges do not mix with other charges present in the sense node. In CIS and CCDs the external gate sense node can never be depleted, and once the signal charges have been transferred to the sense node they can no longer be separated. Therefore multiple CDS read-out is not possible in CIS and CCDs. Multiple CDS read-out is a very efficient way to reduce read noise, since the read noise is reduced by a factor of one over the square root of the number of reads.
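The 1/sqrt(N) scaling can be checked with a small Monte Carlo sketch. It assumes each CDS read adds independent Gaussian (white) noise of the same size. That assumption is the key one: correlated 1/f noise averages down more slowly than this. The 2 e- read noise is a hypothetical value.

```python
import random
import statistics


def averaged_cds_reads(read_noise_e: float, n_reads: int, rng: random.Random) -> float:
    """Average n repeated CDS reads of an unchanged (zero) signal charge."""
    return sum(rng.gauss(0.0, read_noise_e) for _ in range(n_reads)) / n_reads


def effective_read_noise(read_noise_e: float, n_reads: int,
                         trials: int = 20000, seed: int = 1) -> float:
    """Monte Carlo estimate of the RMS noise left after averaging n reads."""
    rng = random.Random(seed)
    results = [averaged_cds_reads(read_noise_e, n_reads, rng) for _ in range(trials)]
    return statistics.pstdev(results)


for n in (1, 4, 16):
    simulated = effective_read_noise(2.0, n)
    predicted = 2.0 / n ** 0.5
    print(n, round(simulated, 2), round(predicted, 2))
```

The simulated column should track the predicted 2.0, 1.0 and 0.5 e- closely, so 16 reads cut the read noise by a factor of four under these assumptions.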

3) BY MAXIMIZING THE INTEGRATION TIME. The integration time is typically limited by subject and/or camera movement. The longest possible integration time is achieved if the signal charge is read as often as possible (e.g. at the maximum reasonable frame rate, reading the signal charge in rolling shutter mode without a separate integration period) and all the measured results are stored in memory. The start and end points of the integration period can then be set afterwards according to the actual camera/subject movement, which can considerably increase the integration time compared with setting it beforehand to a value that gives a decent image success rate. In addition, the stored read-out results facilitate correction of image degradation caused by camera movement (software anti-shake), which further extends the maximum integration time and improves image quality. Finally, the stored results allow different integration times to be used for different image areas, considerably increasing the dynamic range and the image quality.
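A minimal sketch of choosing the integration window afterwards, assuming each pixel delivers a stored stream of cumulative, non-destructive readings (in electrons) at a fixed frame rate. The function names, the sample readings and the 100 e- full well are hypothetical. The integrated signal is simply the difference between the reads at the chosen start and end points, and each pixel may pick its own end point, here the last read before saturation.

```python
from typing import Sequence


def integrated_signal(reads: Sequence[float], start: int, end: int) -> float:
    """Charge collected between two stored non-destructive reads of one pixel."""
    if not 0 <= start < end < len(reads):
        raise ValueError("need 0 <= start < end < len(reads)")
    return reads[end] - reads[start]


def last_unsaturated_index(reads: Sequence[float], full_well: float) -> int:
    """Latest stored read that is still below full well (per-pixel window end)."""
    last = 0
    for i, value in enumerate(reads):
        if value >= full_well:
            break
        last = i
    return last


# Hypothetical cumulative reads (electrons) of one pixel at a fixed frame rate.
reads = [0.0, 12.0, 25.0, 36.0, 49.0, 61.0, 90.0, 140.0]

# Window ended early, e.g. because camera motion was detected after frame 5.
print(integrated_signal(reads, 0, 5))               # 61.0

# Bright pixel: stop just before a hypothetical 100 e- full well is reached.
end = last_unsaturated_index(reads, full_well=100.0)
print(end, integrated_signal(reads, 0, end))        # 6 90.0
```

Dividing each pixel's signal by its own window length would then put pixels with different integration times on a common scale.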

If in CIS the signal charge is read as often as possible, the difference between the read-out results at the selected start and end points is used as the final result, and the sense node is not reset in between, then the final result will contain a lot of interface generated dark current integrated by the sense node and a lot of 1/f noise. If, on the other hand, the interface generated dark noise is removed by CDS at every measurement taken during the afterwards-selected integration period, the read noise of all those measurements adds up (this also applies to CCDs). Therefore in CIS it may be necessary to limit the number of reads to one or at most two. In that case, however, the integration time must be set beforehand and the benefits of multiple read-outs are lost. In particular, adjusting the integration time to subject movement becomes more or less impossible.
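The difference between the two read-out strategies can be sketched with quadrature noise addition, assuming every read carries the same independent read noise and ignoring dark current and 1/f noise. The 2 e- read noise and the 30 sub-frame window are hypothetical. Summing CDS-read sub-frames accumulates noise with the square root of their number, while differencing two stored non-destructive reads involves only two reads however long the window is.

```python
import math


def summed_subframe_read_noise(read_noise_e: float, n_subframes: int) -> float:
    """Destructive CDS read of every sub-frame: independent read noises add in quadrature."""
    return read_noise_e * math.sqrt(n_subframes)


def two_point_read_noise(read_noise_e: float) -> float:
    """Non-destructive read-out: only the start and end reads enter the difference."""
    return read_noise_e * math.sqrt(2)


# Hypothetical 2 e- rms read noise, integration window spanning 30 sub-frames.
print(round(summed_subframe_read_noise(2.0, 30), 2))  # 10.95
print(round(two_point_read_noise(2.0), 2))            # 2.83
```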

The big benefit of the double MIG concept is that for a given pixel one can freely choose the integration time between any two measurements taken within the afterwards-selected start and end points, and the result is free of interface generated dark noise, the read noise does not add up, and 1/f noise is considerably reduced thanks to CDS read-out. (To be precise, the read noise is lowest if the pixel-specific start of integration coincides with pixel reset.) The first two points follow from the fact that non-destructive read-out and elimination of interface generated dark noise take place simultaneously; they also hold for the single MIG pixel. If the 1/f noise in a single MIG pixel is low enough (e.g. with the buried channel MOS approach), the pixel integration time can also be selected independently of other pixels without degrading image quality (higher 1/f noise versus longer integration time), even though CDS is not used.

The above means a profound change, especially for low light digital photography: instead of setting the integration time beforehand, the image can be constructed from a stream of afterwards-selected sub-images taken at the maximum reasonable frame rate. This also improves the ability to correct image quality in software.

MIG COMPARISON TO DEPFET/BCMD

In the DEPFET (aka BCMD) sensor, signal charges are collected in the silicon in an Internal Gate (IG) below the channel of a FET, where they modulate the threshold voltage of the FET. In DEPFET the sense node is the IG, which can be completely depleted, and the read-out is non-destructive. The difference between the MIGFET and the IGFET (DEPFET) is that the current flowing in the MIGFET channel is carried by charges of the same type as the signal charges in the MIG, whereas in the IGFET the channel current and the signal charges in the IG are of opposite type.

Process fluctuations (e.g. small mask misalignments) and/or poor layout design cause small potential wells for signal charges to form in the IG of the DEPFET/IGFET sensor. The threshold potential in the FET channel above these small potential wells is, however, at its maximum (signal charges and channel current are of opposite type). As a result, signal charges are collected first at the locations where the sensor is least sensitive, i.e. the fewer signal charges there are to read, the higher the read noise, which degrades image quality in low light. In other words, the IGFET/DEPFET sensor is vulnerable to process fluctuations, which may reduce the manufacturing yield to an unsatisfactory level.

Another problem of the IGFET/DEPFET sensor is that normal square IGFETs suffer from serious problems originating at the transistor edges, which makes circular IGFETs necessary. The doughnut-shaped IG of the circular transistor increases the capacitance of the IG, i.e. of the sense node, lowering sensitivity, which is also likely to impede low light performance. The circular IGFET structure also significantly complicates moving signal charges back and forth to the IG, so multiple CDS read-out is difficult to perform. Additionally, the large IG is likely to make the device even more prone to process fluctuations.

The benefit of the MIGFET is that the signal charges are always collected under the part of the channel where the threshold voltage is highest (signal charges and channel current are of the same type). This gives the MIGFET tolerance against process fluctuations, and the read noise does not increase as the amount of signal charge decreases. Another benefit is that square MIGFETs do not suffer from edge problems. This allows a minimum-size MIGFET with a small MIG, enabling very high sensitivity. In addition, the square MIGFET considerably facilitates moving signal charges back and forth to the MIG, enabling multiple CDS read-out.

The fewer signal charges there are in the IG, the higher the threshold voltage of the IGFET. This is a problem when read-out is based on current integration: the fewer signal charges in the IG, the smaller the current through the IGFET and the smaller the integrated charge. The smaller the integrated charge, the more the SNR is degraded by shot noise in the integrated charge. In MIG sensors the situation is reversed: the fewer signal charges in the MIG, the lower the threshold voltage of the MIGFET, the larger the current through the MIGFET, and the less the SNR is affected by shot noise in the integrated charge.
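The shot-noise argument follows from Poisson statistics: integrating a current I for a time t collects N = I*t/q carriers with a standard deviation of sqrt(N), so the SNR of the integrated charge is sqrt(N). The sketch below only illustrates that relationship; the read currents and the 1 us integration time are hypothetical.

```python
import math

Q_E = 1.602176634e-19  # elementary charge, C


def integrated_charge_snr(current_a: float, t_int_s: float) -> float:
    """Shot-noise-limited SNR of the charge integrated from a DC read current.

    N = I * t / q carriers arrive with Poisson statistics, so SNR = N / sqrt(N) = sqrt(N).
    """
    n_carriers = current_a * t_int_s / Q_E
    return math.sqrt(n_carriers)


# Hypothetical read currents integrated for 1 us.
print(round(integrated_charge_snr(1e-9, 1e-6), 1))    # 79.0 at 1 nA
print(round(integrated_charge_snr(10e-12, 1e-6), 1))  # 7.9 at 10 pA
```

A hundredfold drop in read current costs a factor of ten in SNR, which is why a read current that shrinks at low signal (IGFET) hurts more than one that grows (MIGFET, per the comment).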

In buried channel circular IGFETs, the charges generated at the interface between the silicon and the gate insulator have no exit and accumulate at the interface between source and drain. Although this accumulated interface charge can be removed during reset, it may increase noise, especially when the amount of signal charge is small and long integration times are used, even if the gate insulator is fairly thin. Square MIGFETs allow a buried channel to be used without interface generated charges accumulating at the silicon/gate insulator interface (the edges of the square transistor provide an exit for them).

Instead of a circular buried channel IGFET, one can use circular JFET or Schottky gate IGFETs, which do not suffer from interface charge accumulation. The JFET-based IGFET is, however, more complex than the other structures (it has more doping layers), and since the IGFET is vulnerable to process fluctuations, the JFET-type IGFET is likely to have the lowest yield. The Schottky gate IGFET, on the other hand, is quite exotic process-wise and may be difficult to incorporate into a CMOS image sensor process.

Probably for the reasons described above, the only IGFET structure that has been studied extensively is the circular surface channel IGFET. In the surface channel IGFET the external gate cannot be used for row selection, because the channel must be kept open continuously (except during reset); otherwise the IG would be flooded with interface generated dark current. This can be worked around by adding at least one selection transistor to the pixel (e.g. with the source of the selection transistor connected to the drain of the IGFET). The drawback of keeping the channel open continuously is that moving signal charges back and forth to the IG becomes even more complicated, hindering multiple CDS read-out. In the surface channel MIGFET the channel can be closed without the interface generated charges mixing with the signal charges in the MIG (there is a potential barrier in between). Therefore the external gate of the surface channel MIGFET can be used for row selection. Additionally, multiple CDS is possible in the surface channel MIGFET, since the external gate can also be used for signal charge transfer.

PIXEL SCALING

Important factors for pixel size in today's BSI image sensors are the manufacturing line width, the SNR and the dynamic range. The manufacturing line width sets the lower limit for the pixel size: a pixel cannot be smaller than the area of its transistors. In practice, the pixel size is determined by low light performance, i.e. by the SNR of the pixel. MIG sensors have an advantage in both respects: the minimum number of transistors per pixel is only one, many features of the MIG transistor enable lower noise than CIS/CCDs, and the integration time can be maximized by setting it afterwards (multiple CDS provided by the double MIG, low 1/f noise provided by the buried channel MIGFET). A very good analogy for the MIGFET sensor is the DEPFET sensor, which holds the world record in noise performance at 0.3 electrons even though it suffers from the problems listed above.
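Why read noise dominates low light SNR can be seen from the common pixel noise model in which photon shot noise, dark shot noise and read noise add in quadrature: SNR = S / sqrt(S + D + r^2), with S and D in electrons and r the rms read noise. This is a generic sketch, not a model taken from the comment; the 5 e- signal and the 2 e- comparison read noise are hypothetical, while 0.3 e- is the figure quoted above.

```python
import math


def pixel_snr(signal_e: float, read_noise_e: float, dark_e: float = 0.0) -> float:
    """SNR of one pixel: photon shot noise, dark shot noise and read noise in quadrature."""
    noise = math.sqrt(signal_e + dark_e + read_noise_e ** 2)
    return signal_e / noise


# 5 signal electrons: 0.3 e- read noise (figure quoted above) vs a hypothetical 2 e-.
print(round(pixel_snr(5.0, 0.3), 2))  # 2.22
print(round(pixel_snr(5.0, 2.0), 2))  # 1.67
```

At 0.3 e- the pixel is essentially shot-noise limited, so further shrinking the pixel loses SNR only through the reduced photon count.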

A smaller pixel size should not, however, compromise the dynamic range of the pixel. The low end of the dynamic range is already optimized in MIG sensors. The small sense node (i.e. MIG) capacitance naturally limits the full well capacity of the MIG somewhat. Again, IGFET/DEPFET/BCMD technology is a good analogy: STMicroelectronics has recently reported better performance in a 1.4 um pixel with the IGFET/DEPFET/BCMD architecture than with a conventional CIS architecture.
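The trade-off between a small sense node and full well can be sketched with a first-order capacitor model, FWC = C*V/q, and the usual definition of dynamic range, DR = 20*log10(FWC / noise floor). This is an assumption for illustration only: the comment gives no full well model for the MIG, whose real capacity depends on the potential well rather than a simple capacitor. The 1 fF node, 1 V swing and 2 e- noise floor are hypothetical.

```python
import math

Q_E = 1.602176634e-19  # elementary charge, C


def full_well_electrons(c_sense_farads: float, voltage_swing_v: float) -> float:
    """First-order full well: charge that fills the sense node over its usable swing."""
    return c_sense_farads * voltage_swing_v / Q_E


def dynamic_range_db(full_well_e: float, noise_floor_e: float) -> float:
    """Dynamic range as the ratio of full well to noise floor, in decibels."""
    return 20.0 * math.log10(full_well_e / noise_floor_e)


fwc = full_well_electrons(1e-15, 1.0)          # hypothetical 1 fF node, 1 V swing
print(round(fwc))                              # 6242 e-
print(round(dynamic_range_db(fwc, 0.3), 1))    # 86.4 dB
print(round(dynamic_range_db(fwc, 2.0), 1))    # 69.9 dB
```

In this model, lowering the noise floor from 2 e- to 0.3 e- adds about 16.5 dB at the low end, which can offset a smaller full well at the high end.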

BR, Artto


Wednesday, June 03, 2009

Samsung Sensors in Short Supply in Taiwan

Digitimes: Coasia Microelectronics, the largest of Samsung's image sensor sales agents in Taiwan, has received more orders than it can deliver due to short supply from the Korean vendor, with order visibility extending to mid-July, according to the company. Coasia supplies CIS to Taiwan-based Lite-On Technology, Lite-On Semiconductor Corporation and Premier Image Technology, according to Digitimes' sources.

Tuesday, June 02, 2009

"Project Natal" at Microsoft

Yahoo: Microsoft unveiled "Project Natal" (pronounced "nuh-tall"), the code name for a new game controller that combines an RGB camera, a depth sensor, a multi-array microphone and a custom processor running proprietary software in one device. Unlike 2-D cameras and controllers, "Project Natal" tracks your full body movement in 3-D, while responding to commands, directions and even a shift of emotion in your voice.

Worth Playing says that the depth sensor has an infrared projector combined with a monochrome CMOS sensor allowing "Project Natal" to see the room in 3-D under any lighting conditions.

Update: Here is Microsoft video on how it works:

Update #2: Microsoft's Corporate VP for Strategy and Business Development discusses Project Natal details in an interview with Venture Beat. The product is planned to hit the market in spring 2010.

Micron Sells Aptina, Finally

Yahoo, Wall Street Journal: Micron announced that it has signed an agreement to sell 65% of its interest in Aptina to Riverwood Capital and TPG Capital. The exact price could not be determined, but Micron said it would book a loss of about $100 million as a result of the transaction. Micron will retain a 35% minority stake in the now independent, privately held company. As part of the arrangement with Riverwood and TPG, Micron is expected to continue manufacturing Aptina's image sensors at a facility in Italy.

Riverwood and TPG aren't expected to borrow any money for the deal. Such an arrangement holds appeal for the seller because acquisitions that rely upon financing run a higher risk of not closing. The transaction is expected to be completed in the next 60 days.

Monday, June 01, 2009

Tessera Discusses WLC Solutions

I-Micronews publishes Yole's interview with Tessera executives. Giles Humpston, Director of Research and Development, and Bents Kidron, responsible for marketing and business development for all wafer-level technologies at Tessera, talk about WLC camera challenges and solutions.

Tessera explains why its first-generation WLC is not truly integrated at the wafer level. Rather, Tessera builds the optical train on a separate wafer, dices it into individual optical stacks and then performs die-to-wafer assembly to build the camera modules.

This is because the optical area of an imager die is much smaller than the die itself, which also contains other electronics. The result is a mismatch in population between the lens wafers and the semiconductor wafers: a 200mm diameter lens wafer can accommodate about 4 times as many lenses as an imager wafer contains VGA-resolution dies. So it is not economically feasible to make everything on the same wafer, at least not in Tessera's view.
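The population mismatch can be checked with the common gross-die-per-wafer approximation, N ≈ π(d/2)²/A − πd/√(2A), which ignores scribe lines and edge exclusion. The sketch below assumes hypothetical sizes: a 3 x 3 mm VGA imager die and a 1.5 x 1.5 mm lens stack, i.e. a lens footprint of a quarter of the die area. These are not figures from the Tessera interview.

```python
import math

# Minimal sketch; die and lens-stack sizes are HYPOTHETICAL, chosen only to
# illustrate the lens-wafer vs. imager-wafer population mismatch.


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Gross-die approximation: wafer area / die area minus an edge-loss term."""
    radius_mm = wafer_diameter_mm / 2.0
    n = (math.pi * radius_mm ** 2 / die_area_mm2
         - math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2))
    return int(n)


if __name__ == "__main__":
    WAFER_MM = 200.0
    IMAGER_DIE_MM2 = 3.0 * 3.0   # hypothetical VGA imager die
    LENS_STACK_MM2 = 1.5 * 1.5   # hypothetical lens stack footprint
    imagers = gross_dies_per_wafer(WAFER_MM, IMAGER_DIE_MM2)
    lenses = gross_dies_per_wafer(WAFER_MM, LENS_STACK_MM2)
    print(f"imager dies per wafer: {imagers}")
    print(f"lens stacks per wafer: {lenses}")
    print(f"lenses per imager die: {lenses / imagers:.2f}")
```

With these assumed sizes the ratio comes out at roughly 4. Bonding whole wafers face to face would therefore leave about three quarters of the lens wafer unused, which is the economic argument for dicing the lens wafer and using die-to-wafer assembly.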

The interview also has many other interesting claims. Thanks to J.B. for sending me the link.
