Laguna Hills, Calif. – June 14, 2022 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of neuromorphic AI IP, and Prophesee, the inventor of the world’s most advanced neuromorphic vision systems, today announced a technology partnership that delivers next-generation platforms for OEMs looking to integrate event-based vision systems with high levels of AI performance coupled with ultra-low power technologies.

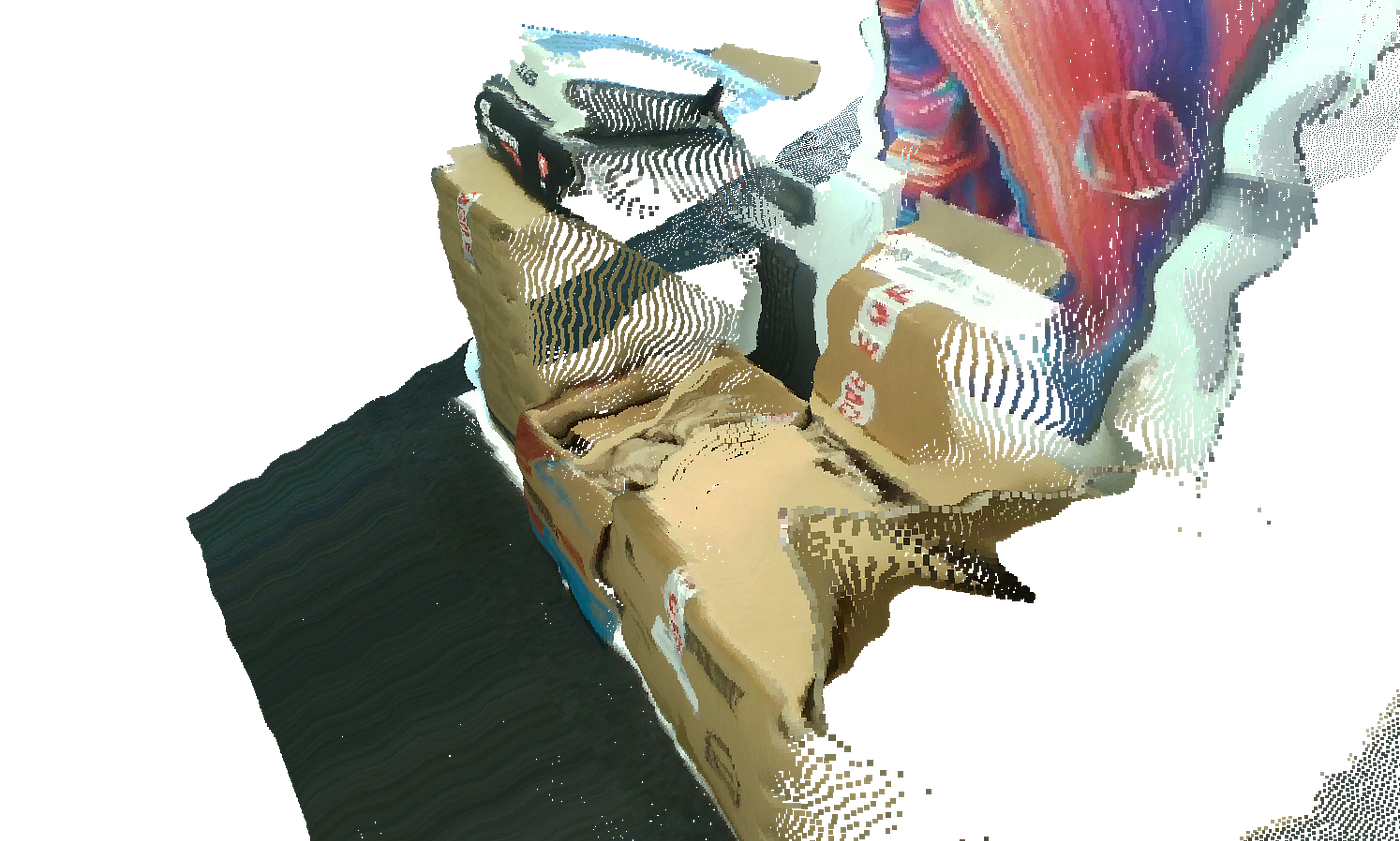

Inspired by human vision, Prophesee’s technology uses a patented sensor design and AI algorithms that mimic the eye and brain to reveal what was, until now, invisible to standard frame-based technology. Prophesee’s computer vision systems open new potential in areas such as autonomous vehicles, industrial automation, IoT, security and surveillance, and AR/VR.

BrainChip’s first-to-market neuromorphic processor, Akida, mimics the human brain to analyze only essential sensor inputs at the point of acquisition, processing data with unparalleled efficiency, precision, and economy of energy. Keeping AI/ML local to the chip, independent of the cloud, also dramatically reduces latency.

“We’ve successfully ported the data from Prophesee’s neuromorphic-based camera sensor to process inference on Akida with impressive performance,” said Anil Mankar, Co-Founder and CDO of BrainChip. “This combination of intelligent vision sensors with Akida’s ability to process data with unparalleled efficiency, precision and economy of energy at the point of acquisition truly advances state-of-the-art AI enablement and offers manufacturers a ready-to-implement solution.”

“By combining our Metavision solution with Akida-based IP, we are better able to deliver a complete high-performance and ultra-low power solution to OEMs looking to leverage edge-based visual technologies as part of their product offerings,” said Luca Verre, CEO and co-founder of Prophesee.

For additional information about the BrainChip/Prophesee partnership, contact sales@brainchip.com.

ABOUT BRAINCHIP HOLDINGS LTD (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY)

BrainChip is the worldwide leader in edge AI on-chip processing and learning. The company’s first-to-market neuromorphic processor, Akida™, mimics the human brain to analyze only essential sensor inputs at the point of acquisition, processing data with unparalleled efficiency, precision, and economy of energy. Keeping machine learning local to the chip, independent of the cloud, also dramatically reduces latency while improving privacy and data security. By making effective edge compute universally deployable across real-world applications such as connected cars, consumer electronics, and industrial IoT, BrainChip is proving that on-chip AI, close to the sensor, is the future for its customers’ products as well as for the planet.

Explore the benefits of Essential AI at www.brainchip.com.

ABOUT PROPHESEE

Prophesee is the inventor of the world’s most advanced neuromorphic vision systems.

The company developed a breakthrough Event-based Vision approach to machine vision. This new vision category significantly reduces power, latency, and data processing requirements, revealing what was, until now, invisible to traditional frame-based sensors. Prophesee’s patented Metavision® sensors and algorithms mimic how the human eye and brain work to dramatically improve efficiency in areas such as autonomous vehicles, industrial automation, IoT, security and surveillance, and AR/VR.

Prophesee is based in Paris, with local offices in Grenoble, Shanghai, Tokyo and Silicon Valley. The company is driven by a team of more than 100 visionary engineers, holds more than 50 international patents and is backed by leading international equity and corporate investors, including 360 Capital Partners, European Investment Bank, iBionext, Intel Capital, Robert Bosch Ventures, Sinovation, Supernova Invest, Will Semiconductor and Xiaomi.