
Wednesday, August 31, 2022

Gpixel announces new global shutter GSPRINT 4502 sensor

Monday, August 29, 2022

2023 International Image Sensors Workshop - Call for Papers

The 2023 International Image Sensors Workshop (IISW) will be held in Scotland from 22-25 May 2023. The first call for papers is now available at this link: 2023 IISW CFP.

Wednesday, August 24, 2022

Surprises of Single Photon Imaging

[This is an invited blog post by Prof. Andreas Velten from University of Wisconsin-Madison.]

When we started working on single photon imaging we were anticipating having to do away with many established concepts in computational imaging and photography. Concepts like exposure time, well depth, motion blur, and many others don’t make sense for single photon sensors. Despite this expectation we still encountered several unexpected surprises.

Our first surprise was that SPAD cameras, which are typically touted for low-light applications, have an exceptionally large dynamic range and therefore outperform conventional sensors not only in dark scenes but also in very bright ones. Due to their hold-off time, SPADs reject a growing number of photons at higher flux levels, resulting in a nonlinear response curve. The classical light flux is usually estimated by counting photons over a certain time interval. One can instead measure the time between photons or the time a sensor pixel waits for a photon in the active state. This further increases dynamic range so that the saturation flux level is above the safe operating range of the detector pixel and far above eye safety levels. The camera does not saturate. [1][2][3]
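To make the nonlinear response concrete, here is a minimal sketch of a dead-time-corrected flux estimate. It assumes a non-paralyzable pixel with a fixed hold-off time and is not taken from the cited papers. The function names and the numbers (1 ms exposure, 100 ns hold-off) are hypothetical and chosen only for illustration.

```python
def naive_flux(counts, exposure_s):
    """Photon rate estimated by plain counting (ignores the hold-off time)."""
    return counts / exposure_s


def dead_time_corrected_flux(counts, exposure_s, dead_time_s, efficiency=1.0):
    """Rate estimate that divides by the time the pixel was actually armed.

    Assumption: non-paralyzable detector, i.e. every detection blinds the
    pixel for a fixed dead_time_s, after which it is active again.
    """
    active_time = exposure_s - counts * dead_time_s
    if active_time <= 0:
        raise ValueError("counts exceed what the dead time allows")
    return counts / (efficiency * active_time)


# Hypothetical numbers: 1 ms exposure, 100 ns hold-off time.
exposure, dead = 1e-3, 100e-9
for n in (10, 5000, 9900):
    print(n, naive_flux(n, exposure), dead_time_corrected_flux(n, exposure, dead))
```

At low counts the two estimates nearly agree. As the count approaches the ceiling set by the hold-off time, the corrected estimate keeps growing while the naive one flattens out, which is the extended dynamic range described above.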

The second surprise was that single photon cameras, without further computational improvements, are of limited use in low light imaging situations. In most imaging applications, motion of the scene or camera demands short exposure times well below 1 second to avoid motion blur. At light levels low enough to challenge current CMOS sensors, such short exposures yield low photon counts even for a perfect camera. The image looks noisy not because of a problem introduced by the sensor, but because of Poisson noise due to light quantization. The low light capabilities of SPADs only come to bear when long exposure times are used or when motion can be compensated for. Luckily, motion compensation strategies inspired by burst photography and event cameras work exceptionally well for SPADs because they have no readout noise and no inherent motion blur. [4][5][6]
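A short sketch of why a perfect camera is still noisy at short exposures: for Poisson-distributed photon arrivals the signal-to-noise ratio of an ideal counter is the square root of the mean count. The flux of 1000 photons per second per pixel is a hypothetical value used only for illustration.

```python
import math


def expected_photons(flux_photons_per_s, exposure_s):
    """Mean number of photons collected by one pixel during the exposure."""
    return flux_photons_per_s * exposure_s


def shot_noise_snr(mean_photons):
    """SNR of an ideal photon counter: mean / std = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(mean_photons)


# Hypothetical low-light pixel receiving 1000 photons per second.
for exposure in (0.001, 0.01, 1.0):
    n = expected_photons(1000.0, exposure)
    print(f"{exposure:>6} s -> {n:7.1f} photons, SNR {shot_noise_snr(n):.1f}")
```

Under this assumption, only the long exposure collects enough photons for a clean image, which is why motion compensation matters so much.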

Finally, we assumed early on that single photon sensors have an inherent disadvantage due to larger energy consumption. They either need internal amplification like the SPAD or high frame rates like QIS and qCMOS, both of which result in higher power consumption. We learned that the internal amplification process in SPADs makes up a small and decreasing portion of the overall energy consumption of a SPAD. The lion's share is spent in transferring and storing the large data volumes resulting from individually processing every single photon. To address the power consumption of SPAD cameras we therefore need to find better ways to compress photon data close to the pixel and be more selective about which photons to process and which to ignore. Even the operation of a conventional CMOS camera can be thought of as a type of compression. Photons are accumulated over an exposure time and only the total is read out after each frame. The challenge for SPAD cameras is to use their access to every single photon and combine it with more sophisticated ways of data compression implemented close to the pixel. [7]
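The idea that frame accumulation is a form of compression can be sketched as follows. This is a toy model, not the compressive scheme of [7]: photon timestamps for one hypothetical pixel are simulated as a Poisson process and then reduced to one count per frame. The rate, duration and frame time are made-up values.

```python
import random


def simulate_timestamps(rate_hz, duration_s, seed=0):
    """Poisson arrivals: exponential gaps between consecutive photons."""
    rng = random.Random(seed)
    t, stamps = 0.0, []
    while True:
        t += rng.expovariate(rate_hz)
        if t >= duration_s:
            return stamps
        stamps.append(t)


def accumulate_frames(stamps, frame_time_s, n_frames):
    """Conventional-camera style compression: keep only one count per frame."""
    counts = [0] * n_frames
    for t in stamps:
        counts[min(int(t / frame_time_s), n_frames - 1)] += 1
    return counts


# Hypothetical pixel: 100k photons/s observed for 0.1 s, read out as 10 frames.
stamps = simulate_timestamps(rate_hz=1e5, duration_s=0.1)
frames = accumulate_frames(stamps, frame_time_s=0.01, n_frames=10)
print(len(stamps), "timestamps reduced to", len(frames), "frame counts")
```

The frame counts preserve the total number of photons but discard all timing within a frame. A smarter near-pixel scheme would aim to keep more of the useful timing information at a comparable data volume.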

As we transition imaging to widely available high resolution single photon cameras, we are likely in for more surprises. Light is made up of photons. Light detection is a Poisson process. Light and light intensity are derived quantities that are based on ensemble averages over a large number of photons. It is reasonable to assume that detection and processing methods that are based on the classical concept of flux are sub-optimal. The full potential of single photon capture and processing is therefore not yet known. I am hoping for more positive surprises.

References

[1] Ingle, A., Velten, A., & Gupta, M. (2019). High flux passive imaging with single-photon sensors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 6760-6769). [Project Page]

[2] Ingle, A., Seets, T., Buttafava, M., Gupta, S., Tosi, A., Gupta, M., & Velten, A. (2021). Passive inter-photon imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 8585-8595). [Project Page]

[3] Liu, Y., Gutierrez-Barragan, F., Ingle, A., Gupta, M., & Velten, A. (2022). Single-photon camera guided extreme dynamic range imaging. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 1575-1585). [Project Page]

[4] Seets, T., Ingle, A., Laurenzis, M., & Velten, A. (2021). Motion adaptive deblurring with single-photon cameras. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (pp. 1945-1954). [Interactive Visualization]

[5] Ma, S., Gupta, S., Ulku, A. C., Bruschini, C., Charbon, E., & Gupta, M. (2020). Quanta burst photography. ACM Transactions on Graphics (TOG), 39(4), 79-1. [Project Page]

[6] Laurenzis, M., Seets, T., Bacher, E., Ingle, A., & Velten, A. (2022). Comparison of super-resolution and noise reduction for passive single-photon imaging. Journal of Electronic Imaging, 31(3), 033042.

[7] Gutierrez-Barragan, F., Ingle, A., Seets, T., Gupta, M., & Velten, A. (2022). Compressive Single-Photon 3D Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 17854-17864). [Project Page]

About the author:

Andreas Velten is Assistant Professor at the Department of Biostatistics and Medical Informatics and the Department of Electrical and Computer Engineering at the University of Wisconsin-Madison and directs the Computational Optics Group. He obtained his PhD with Prof. Jean-Claude Diels in Physics at the University of New Mexico in Albuquerque and was a postdoctoral associate of the Camera Culture Group at the MIT Media Lab. He has been included in the MIT TR35 list of the world's top innovators under the age of 35 and is a senior member of NAI, OSA, and SPIE as well as a member of Sigma Xi. He is co-founder of OnLume, a company that develops surgical imaging systems, and Ubicept, a company developing single photon imaging solutions.

Monday, August 22, 2022

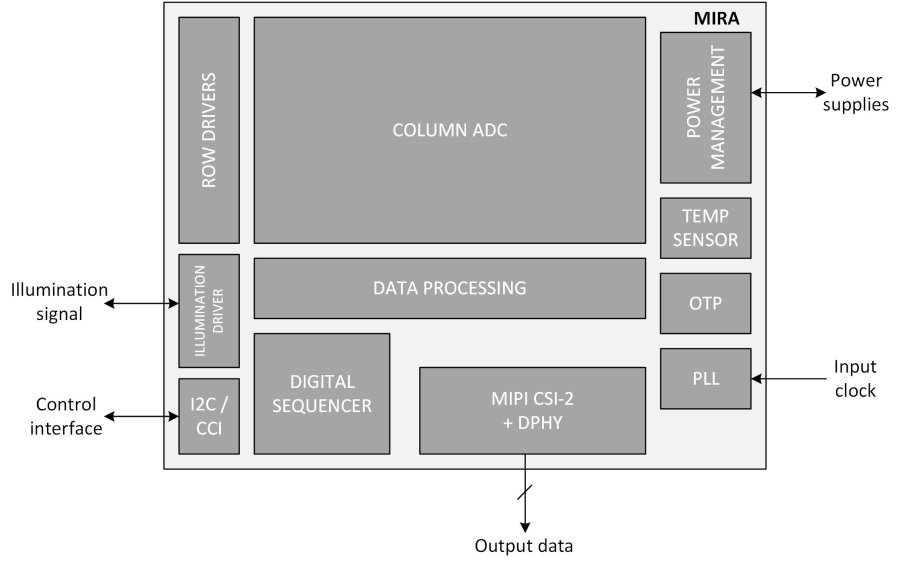

ams OSRAM announces new sensor Mira220

- New Mira220 image sensor’s high quantum efficiency enables operation with low-power emitter and in dim lighting conditions

- Stacked chip design uses ams OSRAM back side illumination technology to shrink package footprint to just 5.3mm x 5.3mm, giving greater design flexibility to manufacturers of smart glasses and other space-constrained products

- Low-power operation and ultra-small size make the Mira220 ideal for active stereo vision or structured lighting 3D systems in drones, robots and smart door locks, as well as mobile and wearable devices

Press Release: https://ams-osram.com/news/press-releases/mira220

Premstaetten, Austria (14th July 2022) -- ams OSRAM (SIX: AMS), a global leader in optical solutions, has launched a 2.2Mpixel global shutter visible and near infrared (NIR) image sensor which offers the low-power characteristics and small size required in the latest 2D and 3D sensing systems for virtual reality (VR) headsets, smart glasses, drones and other consumer and industrial applications.

The new Mira220 is the latest product in the Mira family of pipelined high-sensitivity global shutter image sensors. ams OSRAM uses back side illumination (BSI) technology in the Mira220 to implement a stacked chip design, with the sensor layer on top of the digital/readout layer. This allows it to produce the Mira220 in a chip-scale package with a footprint of just 5.3mm x 5.3mm, giving manufacturers greater freedom to optimize the design of space-constrained products such as smart glasses and VR headsets.

The sensor combines excellent optical performance with very low-power operation. The Mira220 offers a high signal-to-noise-ratio as well as high quantum efficiency of up to 38% as per internal tests at the 940nm NIR wavelength used in many 2D or 3D sensing systems. 3D sensing technologies such as structured light or active stereo vision, which require an NIR image sensor, enable functions such as eye and hand tracking, object detection and depth mapping. The Mira220 will support 2D or 3D sensing implementations in augmented reality and virtual reality products, in industrial applications such as drones, robots and automated vehicles, as well as in consumer devices such as smart door locks.

The Mira220’s high quantum efficiency allows device manufacturers to reduce the output power of the NIR illuminators used alongside the image sensor in 2D and 3D sensing systems, reducing total power consumption. The Mira220 features very low power consumption: only 4mW in sleep mode, 40mW in idle mode, and 350mW at full resolution and 90fps. By providing for low system power consumption, the Mira220 enables wearable and portable device manufacturers to save space by specifying a smaller battery, or to extend run-time between charges.
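As a rough illustration of what these figures mean for a battery budget, the sketch below computes a time-weighted average power for a duty-cycled sensor. The three power values come from the release. The duty cycle (10% streaming, 20% idle, the rest asleep) is a hypothetical assumption, and mode-transition costs are ignored.

```python
SLEEP_MW, IDLE_MW, ACTIVE_MW = 4.0, 40.0, 350.0  # figures quoted in the release


def average_power_mw(active_frac, idle_frac):
    """Time-weighted mean power; the remaining time is assumed spent asleep."""
    sleep_frac = 1.0 - active_frac - idle_frac
    if active_frac < 0 or idle_frac < 0 or sleep_frac < -1e-9:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    sleep_frac = max(sleep_frac, 0.0)  # absorb floating-point round-off
    return active_frac * ACTIVE_MW + idle_frac * IDLE_MW + sleep_frac * SLEEP_MW


# Hypothetical duty cycle: 10% streaming, 20% idle, 70% asleep.
print(average_power_mw(active_frac=0.10, idle_frac=0.20))
```

With this assumed duty cycle the average works out to roughly 45.8 mW, far below the 350 mW streaming figure.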

“Growing demand in emerging markets for VR and augmented reality equipment depends on manufacturers’ ability to make products such as smart glasses smaller, lighter, less obtrusive and more comfortable to wear. This is where the Mira220 brings new value to the market, providing not only a reduction in the size of the sensor itself, but also giving manufacturers the option to shrink the battery, thanks to the sensor’s very low power consumption and high sensitivity at 940nm,” said Brian Lenkowski, strategic marketing director for CMOS image sensors at ams OSRAM.

Superior pixel technology

The Mira220’s advanced back-side illumination (BSI) technology gives the sensor very high sensitivity and quantum efficiency with a pixel size of 2.79μm. Effective resolution is 1600px x 1400px and maximum bit depth is 12 bits. The sensor is supplied in a 1/2.7” optical format.
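The quoted pixel pitch, resolution and optical format can be cross-checked with a few lines of arithmetic. The sketch assumes the common rule of thumb that a "1 inch" optical format corresponds to a diagonal of about 16 mm. The raw data rate additionally assumes that the full 12-bit depth is used at the 90fps frame rate mentioned in the release, which the release does not state.

```python
import math

PIXEL_PITCH_UM = 2.79
WIDTH_PX, HEIGHT_PX = 1600, 1400
BIT_DEPTH = 12
FRAME_RATE_FPS = 90  # assumption: 12-bit readout is available at 90 fps


def active_area_mm():
    """Width, height and diagonal of the pixel array in millimetres."""
    width = WIDTH_PX * PIXEL_PITCH_UM / 1000.0
    height = HEIGHT_PX * PIXEL_PITCH_UM / 1000.0
    return width, height, math.hypot(width, height)


def optical_format_denominator(diagonal_mm, mm_per_format_inch=16.0):
    """Rule-of-thumb conversion: format is 1/x inch with x = 16 mm / diagonal."""
    return mm_per_format_inch / diagonal_mm


def raw_data_rate_gbit_s():
    """Uncompressed pixel data rate, ignoring blanking and protocol overhead."""
    return WIDTH_PX * HEIGHT_PX * BIT_DEPTH * FRAME_RATE_FPS / 1e9


w, h, d = active_area_mm()
print(f"{w:.2f} mm x {h:.2f} mm, diagonal {d:.2f} mm")
print(f"optical format about 1/{optical_format_denominator(d):.1f} inch")
print(f"raw data rate about {raw_data_rate_gbit_s():.2f} Gbit/s")
```

Under these assumptions the diagonal is about 5.93 mm, consistent with the stated 1/2.7” format, and the raw pixel stream is about 2.4 Gbit/s.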

The sensor supports on-chip operations including external triggering, windowing, and horizontal or vertical mirroring. The MIPI CSI-2 interface allows for easy interfacing with a processor or FPGA. On-chip registers can be accessed via an I2C interface for easy configuration of the sensor.

Digital correlated double sampling (CDS) and row noise correction result in excellent noise performance.

ams OSRAM will continue to innovate and extend the Mira family of solutions, offering customers a choice of resolution and size options to fit various application requirements.

The Mira220 NIR image sensor is available for sampling. More information about Mira220.

Image: ams

Friday, August 19, 2022

Gigajot article in Nature Scientific Reports

Jiaju Ma et al. of Gigajot Technology, Inc. have published a new article titled "Ultra‑high‑resolution quanta image sensor with reliable photon‑number‑resolving and high dynamic range capabilities" in Nature Scientific Reports.

Abstract:

Superior low‑light and high dynamic range (HDR) imaging performance with ultra‑high pixel resolution are widely sought after in the imaging world. The quanta image sensor (QIS) concept was proposed in 2005 as the next paradigm in solid‑state image sensors after charge coupled devices (CCD) and complementary metal oxide semiconductor (CMOS) active pixel sensors. This next‑generation image sensor would contain hundreds of millions to billions of small pixels with photon‑number‑resolving and HDR capabilities, providing superior imaging performance over CCD and conventional CMOS sensors. In this article, we present a 163 megapixel QIS that enables both reliable photon‑number‑resolving and high dynamic range imaging in a single device. This is the highest pixel resolution ever reported among low‑noise image sensors with photon‑number‑resolving capability. This QIS was fabricated with a standard, state‑of‑the‑art CMOS process with 2‑layer wafer stacking and backside illumination. Reliable photon‑number‑resolving is demonstrated with an average read noise of 0.35 e‑ rms at room temperature operation, enabling industry leading low‑light imaging performance. Additionally, a dynamic range of 95 dB is realized due to the extremely low noise floor and an extended full‑well capacity of 20k e‑. The design, operating principles, experimental results, and imaging performance of this QIS device are discussed.

Ma, J., Zhang, D., Robledo, D. et al. Ultra-high-resolution quanta image sensor with reliable photon-number-resolving and high dynamic range capabilities. Sci Rep 12, 13869 (2022).

This is an open access article: https://www.nature.com/articles/s41598-022-17952-z.epdf
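The dynamic range quoted in the abstract follows from the read noise and full-well capacity it reports, using the usual definition of dynamic range as the ratio of full-well capacity to noise floor expressed in decibels. A minimal check, with a hypothetical function name:

```python
import math


def dynamic_range_db(full_well_e, read_noise_e):
    """Dynamic range in dB: 20 * log10(full-well capacity / read noise)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


# Figures from the abstract: 20k e- full well, 0.35 e- rms read noise.
print(round(dynamic_range_db(20_000, 0.35), 1))
```

This gives about 95.1 dB, in line with the 95 dB stated in the abstract.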

Wednesday, August 17, 2022

RMCW LiDAR

Baraja is an automotive LiDAR company headquartered in Australia that specializes in pseudo-random modulation continuous wave LiDAR technology, which it calls "RMCW". A blog post by Cibby Pulikkaseril (Founder & CTO of Baraja) compares and contrasts RMCW with the more commonly known FMCW and ToF LiDAR technologies.

TL;DR: There's good reason to believe that pseudo-random modulation can provide robustness in multi-camera environments where multiple LiDARs are trying to transmit/receive over a common shared channel (free space).

Full blog article here: https://www.baraja.com/en/blog/rmcw-lidar

Some excerpts:

Definition of RMCW

Random modulated continuous wave (RMCW) LiDAR is a technique published in Applied Optics by Takeuchi et al. in 1983. The idea was to take a continuous wave laser, and modulate it with a pseudo-random binary sequence before shooting it out into the environment; the returned signal would be correlated against the known sequence and the delay would indicate the range to target.
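The correlate-and-find-the-delay idea can be sketched in a few lines. This is a toy baseband model, not Baraja's implementation. A software random ±1 sequence stands in for a hardware pseudo-random binary sequence, and the return is a circularly delayed, attenuated copy buried in Gaussian noise. The sequence length, the 1 GHz chip rate, the attenuation and the noise level are all hypothetical values.

```python
import random

C_M_PER_S = 299_792_458.0  # speed of light


def prbs(n_chips, seed=1):
    """Pseudo-random +/-1 chip sequence (stand-in for a hardware PRBS)."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n_chips)]


def simulate_return(code, delay_chips, attenuation=0.5, noise_std=1.0, seed=2):
    """Circularly delayed, attenuated copy of the code plus Gaussian noise."""
    rng = random.Random(seed)
    n = len(code)
    return [attenuation * code[(i - delay_chips) % n] + rng.gauss(0.0, noise_std)
            for i in range(n)]


def estimate_delay(code, received):
    """Circular cross-correlation; the lag with the largest value is the delay."""
    n = len(code)

    def corr(lag):
        return sum(received[i] * code[(i - lag) % n] for i in range(n))

    return max(range(n), key=corr)


def delay_to_range_m(delay_chips, chip_rate_hz):
    """Convert a round-trip delay in chips to a one-way distance in metres."""
    return C_M_PER_S * (delay_chips / chip_rate_hz) / 2.0


code = prbs(1023)
rx = simulate_return(code, delay_chips=137)
lag = estimate_delay(code, rx)
print(lag, delay_to_range_m(lag, chip_rate_hz=1e9))
```

Each received sample is dominated by noise, yet summing over the whole sequence concentrates the signal into a single correlation peak. A different transmitter using another sequence would correlate poorly with this code, which is the intuition behind the interference immunity claimed for the technique.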

Benefits

... correlation turns the pseudo-random signal, which to the human eye, looks just like noise, into a sharp pulse, providing excellent range resolution and precision. Thus, by using low-speed electronics, we can achieve the same pulse performance used by frequency-modulated continuous wave (FMCW) LiDARs... .

... incredible immunity to interference, and this can be dialed in by software.

RMCW vs. FMCW vs. ToF

... [FMCW and RMCW] are fundamentally different modulation techniques. FMCW LiDAR sensors will modulate the frequency of the laser light, a relatively complicated operation, and then attempt to recognize the modulation in the return signal from the environment.

Both RMCW and FMCW LiDAR offer extremely high immunity from interfering lasers – compared to conventional ToF LiDARs, which are extremely vulnerable to interference.

Spectrum-Scan™ + RMCW is also able to produce instantaneous velocity information per-pixel, also known as Doppler velocity ... something not [natively] possible with conventional LiDAR... .

Baraja's Spectrum OffRoad LiDARs are currently available.

Spectrum HD25 LiDAR samples (specs in image below) will be available in 2022.