Monday, February 27, 2023

Stanford University talk on Pixel Design

Friday, February 24, 2023

Ambient light resistant long-range time-of-flight sensor

Kunihiro Hatakeyama et al. of Toppan Inc. and Brookman Technology Inc. (Japan) published an article titled "A Hybrid ToF Image Sensor for Long-Range 3D Depth Measurement Under High Ambient Light Conditions" in the IEEE Journal of Solid-State Circuits.

Abstract:

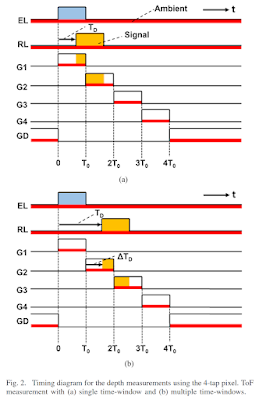

A new indirect time of flight (iToF) sensor realizing long-range measurement of 30 m has been demonstrated by a hybrid ToF (hToF) operation, which uses multiple time windows (TWs) prepared by multi-tap pixels and range-shifted subframes. The VGA-resolution hToF image sensor with 4-tap and 1-drain pixels, fabricated by the BSI process, can measure a depth of up to 30 m for indoor operation and 20 m for outdoor operation under high ambient light of 100 klux. The new hToF operation with overlapped TWs between subframes for mitigating an issue on the motion artifact is implemented. The sensor works at 120 frames/s for a single subframe operation. Interference between multiple ToF cameras in IoT systems is suppressed by a technique of emission cycle-time changing.
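To give a feel for the timing involved, the quoted ranges can be converted into round-trip light travel times. This is only a back-of-the-envelope sketch based on the relation depth = c × delay / 2; the function names are illustrative and not taken from the paper, and nothing here models the sensor's actual time windows or subframes.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
C = 299_792_458.0


def round_trip_time_ns(depth_m: float) -> float:
    """Time for light to reach a target at depth_m and return, in nanoseconds."""
    return 2.0 * depth_m / C * 1e9


def depth_from_delay_m(delay_ns: float) -> float:
    """Depth in metres corresponding to a measured round-trip delay in nanoseconds."""
    return C * delay_ns * 1e-9 / 2.0


if __name__ == "__main__":
    # 20 m (outdoor) and 30 m (indoor) are the ranges quoted in the abstract.
    for depth in (20.0, 30.0):
        print(f"{depth:.0f} m -> {round_trip_time_ns(depth):.1f} ns round trip")
```

The 20 m and 30 m ranges correspond to round-trip delays of roughly 133 ns and 200 ns, which is the span the time windows have to cover between them.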

Wednesday, February 22, 2023

PetaPixel article on limits of computational photography

“yes, dedicated cameras have some significant advantages”. Primarily, the relevant metric is what I call “photographic bandwidth” – the information-theoretic limit on the amount of optical data that can be absorbed by the camera under given photographic conditions (ambient light, exposure time, etc.). Cell phone cameras only get a fraction of the photographic bandwidth that dedicated cameras get, mostly due to size constraints.

- Objective Lens Diameter

- Optical Path Quality

- Pixel Size and Sensor Depth

- “Injective” errors. Errors where photons end up in the “wrong” place on the sensor, but they don’t necessarily clobber each other. E.g. if our lens causes the red light to end up slightly further out from the center than it should, we can correct for that by moving red light closer to the center in the processed photograph. Some fraction of chromatic aberration is like this, and we can remove a bit of chromatic error by re-shaping the sampled red, green, and blue images. Lenses also tend to have geometric distortions which warp the image towards the edges – we can un-warp them in software. Computational photography can actually help a fair bit here.

- “Informational” errors. Errors where we lose some information, but in a non-geometrically-complicated way. For example, lenses tend to exhibit vignetting effects, where the image is darker towards the edges of the lens. Computational photography can’t recover the information lost here, but it can help with basic touch-ups like brightening the darkened edges of the image.

- “Non-injective” errors. Errors where photons actually end up clobbering pixels they shouldn’t, such as coma. Computational photography can try to fight errors like this using processes like deconvolution, but it tends to not work very well.
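The "injective" case can be illustrated with a toy radial distortion model. The sketch below assumes a single-coefficient model, r_d = r_u (1 + k r_u²), where `k` is a hypothetical lens coefficient; this is a common textbook simplification, not the model used by any particular camera or by the article. Because the mapping is one-to-one for small `k`, software can invert it, which is the sense in which such errors are correctable. Applying a slightly different `k` per colour channel would be one way to picture the lateral chromatic aberration fix described above.

```python
def distort(r_u: float, k: float) -> float:
    """Map an ideal (undistorted) radius to the radius the lens actually produces."""
    return r_u * (1.0 + k * r_u * r_u)


def undistort(r_d: float, k: float, iterations: int = 20) -> float:
    """Invert distort() by fixed-point iteration.

    Assumes |k| is small enough that the iteration converges, which holds
    for mild distortion on radii normalised to about [0, 1].
    """
    r_u = r_d  # initial guess: no distortion
    for _ in range(iterations):
        r_u = r_d / (1.0 + k * r_u * r_u)
    return r_u


if __name__ == "__main__":
    k = 0.1  # hypothetical distortion strength
    for r in (0.0, 0.25, 0.5, 1.0):
        r_d = distort(r, k)
        print(f"ideal {r:.2f} -> distorted {r_d:.4f} -> recovered {undistort(r_d, k):.4f}")
```

No comparable inverse exists for the "non-injective" case, where light from several scene points has already been summed into the same pixel.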

Monday, February 20, 2023

TRUMPF industrializes SWIR VCSELs above 1.3 micron wavelength

From Yole industry news: https://www.yolegroup.com/industry-news/trumpf-reports-breakthrough-in-industrializing-swir-vcsels-above-1300-nm/

TRUMPF reports breakthrough in industrializing SWIR VCSELs above 1300 nm

TRUMPF Photonic Components, a global leader in VCSEL and photodiode solutions, is industrializing the production of SWIR VCSELs above 1300 nm to support high volume applications such as in smartphones in under-OLED applications. The company demonstrates outstanding results regarding the efficiency of infrared laser components with long wavelengths beyond 1300 nm on an industrial-grade manufacturing level. This takes TRUMPF one step further towards mass production of indium-phosphide-based (InP) VCSELs in the range from 1300 nm to 2000 nm. “At TRUMPF we are working hard to mature this revolutionary production process and to implement standardization, which would further develop this outstanding technology into a cost-attractive solution. We aim to bring the first products to the high-volume market in 2025,” said Berthold Schmidt, CEO at TRUMPF Photonic Components. By developing the new industrial production platform, TRUMPF is expanding its current portfolio of Gallium arsenide- (GaAs-) based VCSELs in the 760 nm to 1300 nm range for NIR applications. The new platform is more flexible in the longer wavelength spectrum than are GaAs, but it still provides the same benefits as compact, robust and economical light sources. “The groundwork for the successful implementation of long-wavelength VCSELs in high volumes has been laid. But we also know that it is still a way to go, and major production equipment investments have to be made before ramping up mass production”, said Schmidt.

VCSELs to conquer new application fields

A broad application field can be revolutionized by the industrialization of long-wavelength VCSELs, as the SWIR VCSELs can be used in applications with higher output power while remaining eye-safe compared to shorter-wavelength VCSELs. The long wavelength solution is not susceptible to disturbing light such as sunlight in a broader wavelength regime. One popular example from the mass markets of smartphone and consumer electronics devices is under-OLED applications. The InP-based VCSELs can be easily put below these OLED displays, without disturbing other functionalities and with the benefit of higher eye-safety standards. OLED displays are a huge application field for long wavelength sensor solutions. “In future we expect high volume projects not only in the fields of consumer sensing, but automotive LiDAR, data communication applications for longer reach, medical applications such as spectroscopy applications, as well as photonic integrated circuits (PICs), and quantum photonic integrated circuits (QPICs). The related demands enable the SWIR VCSEL technology to make a breakthrough in mass production”, said Schmidt.

Exceptional test results

TRUMPF presents results showing VCSEL laser performance up to 140°C at ~1390 nm wavelength. The technology used for fabrication is scalable for mass production and the emission wavelength can be tuned between 1300 nm and 2000 nm, resulting in a wide range of applications. Recent results show good reproducible behavior and excellent temperature performance. “I’m proud of my team, as it’s their achievement that we can present exceptional results in the performance and robustness of these devices”, said Schmidt. “We are confident that the highly efficient, long wavelength VCSELs can be produced at high yield to support cost-effective solutions”, Schmidt adds.

Friday, February 17, 2023

ON Semi announces that it will be manufacturing image sensors in New York

- Acquisition and investments planned for ramp-up at the East Fishkill (EFK) fab create onsemi’s largest U.S. manufacturing site

- EFK enables accelerated growth and differentiation for onsemi’s power, analog and sensing technologies

- onsemi retains more than 1,000 jobs at the site

Wednesday, February 15, 2023

ST introduces new sensors for computer vision, AR/VR

ST has released a new line of global shutter image sensors with an embedded optical flow feature that is fully autonomous, with no need for host computing or assistance. This can save power and bandwidth and free up host resources that would otherwise be needed for optical flow computations. From this optical flow data, a host processor can compute the visual odometry (SLAM or camera trajectory) without needing the full RGB image. The optical flow data can be interlaced with the standard image stream in any of the monochrome, RGB Bayer or RGB-IR sensor versions.

Monday, February 13, 2023

Canon Announces 148dB (24 f-stop) Dynamic Range Sensor

- Parking garage entrance, afternoon: With conventional cameras, vehicle's license plate is not legible due to whiteout, while driver's face is not visible due to crushed blacks. However, the new sensor enables recognition of both the license plate and driver's face.

- The new sensor realizes an industry-leading high dynamic range of 148 dB, enabling image capture in environments with brightness levels ranging from approx. 0.1 lux to approx. 2,700,000 lux. For reference, 0.1 lux is equivalent to the brightness of a full moon at night, while 500,000 lux is equivalent to filaments in lightbulbs and vehicle headlights.
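As a quick sanity check on the headline figures, the quoted illuminance range can be converted to decibels and to photographic stops. This is a minimal sketch assuming the usual image-sensor convention of 20·log10 of the ratio; the function names are illustrative.

```python
import math


def dynamic_range_db(max_lux: float, min_lux: float) -> float:
    """Dynamic range in dB, using the 20*log10 convention common for image sensors."""
    return 20.0 * math.log10(max_lux / min_lux)


def dynamic_range_stops(max_lux: float, min_lux: float) -> float:
    """Dynamic range in stops (each stop is a doubling of light)."""
    return math.log2(max_lux / min_lux)


if __name__ == "__main__":
    # Range quoted in the announcement: approx. 0.1 lux to approx. 2,700,000 lux.
    lo, hi = 0.1, 2_700_000.0
    print(f"{dynamic_range_db(hi, lo):.1f} dB")
    print(f"{dynamic_range_stops(hi, lo):.1f} stops")
```

The ratio of 2.7 × 10^7 works out to about 148.6 dB, or a little under 25 stops, consistent with the 148 dB and 24 f-stop figures in the headline.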

With conventional sensors, in order to produce a natural-looking image when capturing images in environments with both bright and dark areas, high-dynamic-range image capture requires taking multiple separate photos under different exposure conditions and then synthesizing them into a single image. (In the diagram below, four exposure types are utilized per single frame).

With Canon's new sensor, optimal exposure conditions are automatically specified for each of the 736 areas, thus eliminating the need for image synthesis.

Portion in which subject moves is detected based on discrepancies between first image (one frame prior) and second image (two frames prior). ((1) Generate movement map).

In first image (one frame prior) brightness of subject is recognized for each area and luminance map is generated (2). After ensuring that differences in brightness levels between adjacent areas are not excessive ((3) Reduce adjacent exposure discrepancy), exposure conditions are corrected based on information from movement map, and final exposure conditions are specified (4).

Final exposure conditions (4) are applied to images for corresponding frames.
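The four steps above can be pictured with a toy sketch. Everything concrete in it is an assumption made for illustration and not Canon's implementation: the tiny 2×3 grid (the real sensor uses 736 areas), the four exposure levels, the movement threshold, the rule that neighbouring areas may differ by at most one level, and the choice to shorten exposure by one level in moving areas.

```python
def movement_map(prev1, prev2, threshold=16):
    """(1) Flag areas whose mean brightness differs between the two previous frames."""
    return [[abs(a - b) > threshold for a, b in zip(r1, r2)]
            for r1, r2 in zip(prev1, prev2)]


def luminance_to_exposure(prev1, levels=4, full_scale=256):
    """(2) Map each area's brightness to an exposure index (0 = shortest exposure)."""
    return [[(levels - 1) - min(levels - 1, v * levels // full_scale) for v in row]
            for row in prev1]


def limit_adjacent_steps(exposure, max_step=1):
    """(3) Lower exposure indices until 4-connected neighbours differ by at most max_step."""
    rows, cols = len(exposure), len(exposure[0])
    out = [row[:] for row in exposure]
    changed = True
    while changed:  # terminates: values only ever decrease and are bounded below
        changed = False
        for y in range(rows):
            for x in range(cols):
                for dy, dx in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and out[y][x] > out[ny][nx] + max_step):
                        out[y][x] = out[ny][nx] + max_step
                        changed = True
    return out


def final_exposure(prev1, prev2):
    """(4) Combine the maps; moving areas get one level shorter exposure (assumed rule)."""
    moving = movement_map(prev1, prev2)
    exposure = limit_adjacent_steps(luminance_to_exposure(prev1))
    return [[max(0, e - 1) if m else e for e, m in zip(e_row, m_row)]
            for e_row, m_row in zip(exposure, moving)]


if __name__ == "__main__":
    # Per-area mean brightness (0-255) one and two frames prior; values are made up.
    one_frame_prior = [[250, 10, 10], [10, 10, 10]]
    two_frames_prior = [[250, 10, 10], [10, 10, 200]]
    for row in final_exposure(one_frame_prior, two_frames_prior):
        print(row)
```

In this toy input the bright top-left area gets the shortest exposure, its neighbours are stepped up gradually rather than jumping straight to the longest exposure, and the bottom-right area is shortened by one level because its brightness changed between the two earlier frames.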

Friday, February 10, 2023

New SWIR Sensor from NIT

NSC2001 is the NIT Triple H SWIR sensor:

- High Dynamic Range: operating in linear and logarithmic response modes, it exhibits more than 120 dB of dynamic range

- High Speed, capable of generating up to 1K frames per second in full frame mode, and much more with sub windowing

- High Sensitivity and low noise figure (< 50e-)

Wednesday, February 08, 2023

Workshop on Infrared Detection for Space Applications June 7-9, 2023 in Toulouse, France

CNES, ESA, ONERA, CEA-LETI, Labex Focus, Airbus Defence & Space and Thales Alenia Space are pleased to inform you that they are organising the second workshop dedicated to Infrared Detection for Space Applications, which will be held in Toulouse from 7 to 9 June 2023 within the framework of the Optics and Optoelectronics Technical Expertise Community (COMET).

The aim of this workshop is to focus on Infrared Detector technologies and components, Focal Plane Arrays and associated subsystems, control and readout ASICs, manufacturing, characterization and qualification results. The workshop will only address IR spectral bands between 1 μm and 100 μm. Due to the commonalities with space applications and the increasing interest of space agencies to qualify and to use COTS IR detectors, companies and laboratories involved in defence applications, scientific applications and non-space cutting-edge developments are very welcome to attend this workshop.

The workshop will comprise several sessions addressing the following topics:

- Detector needs for future space missions,

- Infrared detectors and technologies including (but not limited to):

  - Photon detectors: MCT, InGaAs, InSb, XBn, QWIP, SL, intensified, Si:As, ...

  - Uncooled thermal detectors: microbolometers (a-Si, VOx), pyroelectric detectors ...

  - ROIC (including design and associated Si foundry aspects).

  - Optical functions on detectors

- Focal Plane technologies and solutions for Space or Scientific applications including subassembly elements such as:

  - Assembly techniques for large FPAs,

  - Flex and cryogenic cables,

  - Passive elements and packaging,

  - Cold filters, anti-reflection coatings,

  - Proximity ASICs for IR detectors,

- Manufacturing techniques from epitaxy to package integration,

- Characterization techniques,

- Space qualification and validation of detectors and ASICs,

- Recent Infrared Detection Chain performances and Integration from a system point of view.

Three tutorials will be given during this workshop.

Please send a short abstract giving the title, the authors’ names and affiliations, and presenting the subject of your talk, to the following contacts: anne.rouvie@cnes.fr and nick.nelms@esa.int.

The official language of the workshop is English (oral presentations and posters).

After abstract acceptance notification, authors will be requested to prepare their presentation in pdf or PowerPoint format, to be presented at the workshop. Authors will also be required to provide a version of their presentation to the organization committee along with an authorization to make it available for Workshop attendees and on-line for COMET members. No proceedings will be compiled and so no detailed manuscript needs to be submitted.

Monday, February 06, 2023

Recent Industry News: Sony, SK Hynix

Sony separates production of cameras for China and non-China markets

TOKYO -- Sony Group has transferred production of cameras sold in the Japanese, U.S. and European markets to Thailand from China, part of growing efforts by manufacturers to protect supply chains by reducing their Chinese dependence.

Sony's plant in China will in principle produce cameras for the domestic market. Until now, Sony cameras were exported from China and Thailand. The site will retain some production facilities to be brought back online in emergencies.

After tensions heightened between Washington and Beijing, Sony first shifted manufacturing of cameras bound for the U.S. The transfer of the production facilities for Japan- and Europe-bound cameras was completed at the end of last year.

Sony offers the Alpha line of high-end mirrorless cameras. The company sold roughly 2.11 million units globally in 2022, according to Euromonitor. Of those, China accounted for 150,000 units, with the rest, or 90%, sold elsewhere, meaning the bulk of Sony's Chinese production has been shifted to Thailand.

On the production shift, Sony said it "continues to focus on the Chinese market and has no plans of exiting from China."

Sony will continue making other products, such as TVs, game consoles and camera lenses, in China for export to other countries.

The manufacturing sector has been working to address a heavy reliance on Chinese production following supply chain disruptions caused by Beijing's zero-COVID policy.

Canon in 2022 closed part of its camera production in China, shifting it back to Japan. Daikin Industries plans to establish a supply chain to make air conditioners without having to rely on Chinese-made parts within fiscal 2023.

Sony ranks second in global market share for cameras, following Canon. Its camera-related sales totaled 414.8 billion yen ($3.2 billion) in fiscal 2021, about 20% of its electronics business.

Call for Papers: IEEE International Conference on Computational Photography (ICCP) 2023

Friday, February 03, 2023

Global Image Sensor Market Forecast to Grow Nearly 11% through 2030

Factors Influencing

Various companies are coming up with advanced image sensors with Artificial Intelligence capabilities. Sony Corporation (Japan) recently launched IMX500, the world's first intelligent vision sensor that carries out machine learning and boosts computer vision operations automatically. Thus, such advancements are forecast to prompt the growth of the global image sensor market in the coming years.

Regional Analysis

International Image Sensors Workshop (IISW) 2023 Program and Pre-Registration Open

The 2023 International Image Sensors Workshop announces the technical programme and opens the pre-registration to attend the workshop.

Technical Programme is announced: The Workshop programme is from May 22nd to 25th with attendees arriving on May 21st. The programme features 54 regular presentations and 44 posters with presenters from industry and academia. There are 10 engaging sessions across 4 days in a single track format. On one afternoon, there are social trips to Stirling Castle or the Glenturret Whisky Distillery.

Pre-Registration is Open: The pre-registration is now open until Monday 6th Feb.

Wednesday, February 01, 2023

PhotonicsSpectra article on quantum dots-based SWIR Imagers

https://www.photonics.com/Articles/New_Sensor_Materials_and_Designs_Deepen_SWIR/a68543