ST publishes another presentation of its ToF sensors lineup and roadmap:

Wednesday, September 30, 2020

Tuesday, September 29, 2020

CMYG to RGB & IR

MDPI paper "An Acquisition Method for Visible and Near Infrared Images from Single CMYG Color Filter Array-Based Sensor" by Younghyeon Park and Byeungwoo Jeon from Sungkyunkwan University, Korea, proposes a color processing pipeline that converts a CMYG image into RGB and IR images:

"To acquire the NIR image together with visible range signals, an imaging device should be able to simultaneously capture NIR and visible range images. An implementation of such a system having separate sensors for NIR and visible light has practical shortcomings due to its size and hardware cost. To overcome this, a single sensor-based acquisition method is investigated in this paper. The proposed imaging system is equipped with a conventional color filter array of cyan, magenta, yellow, and green, and achieves signal separation by applying a proposed separation matrix which is derived by mathematical modeling of the signal acquisition structure.

The elements of the separation matrix are calculated through color space conversion and experimental data. Subsequently, an additional denoising process is implemented to enhance the quality of the separated images. Experimental results show that the proposed method successfully separates the acquired mixed image of visible and near-infrared signals into individual red, green, and blue (RGB) and NIR images. The separation performance of the proposed method is compared to that of related work in terms of the average peak-signal-to-noise-ratio (PSNR) and color distance. The proposed method attains average PSNR value of 37.04 and 33.29 dB, respectively for the separated RGB and NIR images, which is respectively 6.72 and 2.55 dB higher than the work used for comparison."
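The separation step described in the abstract can be illustrated with a toy example. This is only a sketch under an idealized, hypothetical mixing model (each complementary filter passes two primaries plus NIR). The paper derives its matrix from color space conversion and experimental data, so the numbers below are not the authors' matrix. The sketch also assumes that all four filter samples are available at the same pixel, which on a real mosaic sensor requires demosaicing first.

```python
import math

# Idealized, HYPOTHETICAL mixing model (not the paper's measured one):
# every filter also passes NIR because no IR-cut filter is assumed.
#   C  = G + B + NIR
#   M  = R + B + NIR
#   Y  = R + G + NIR
#   Gr = G + NIR
# Inverting this 4x4 system by hand gives the separation matrix below.
SEPARATION = [
    # C   M   Y   Gr
    [0, 0, 1, -1],    # R
    [1, -1, 1, -1],   # G
    [1, 0, 0, -1],    # B
    [-1, 1, -1, 2],   # NIR
]


def mix(r, g, b, nir):
    """Forward model: scene (R, G, B, NIR) -> sensor (C, M, Y, Gr)."""
    return (g + b + nir, r + b + nir, r + g + nir, g + nir)


def separate(cmyg):
    """Apply the separation matrix to one (C, M, Y, Gr) sample."""
    return tuple(sum(w * v for w, v in zip(row, cmyg)) for row in SEPARATION)


def psnr(reference, estimate, peak=1.0):
    """PSNR in dB between two equal-length sequences of samples."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


scene = (0.2, 0.5, 0.3, 0.1)          # made-up R, G, B, NIR values
recovered = separate(mix(*scene))     # should match the scene up to rounding
print("recovered R, G, B, NIR:", recovered)
```

In this noise-free toy case the separation is exact. With real sensor noise the subtraction terms amplify noise, which is presumably why the abstract mentions an additional denoising stage.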

Omnivision Unveils World's First RGB-IR Endoscopic Sensor

Dynamic Low-Light Imaging with QIS

Stanley Chan from Purdue University publishes a presentation of his ECCV 2020 paper "Dynamic Low-light Imaging with Quanta Image Sensors" by Yiheng Chi, Abhiram Gnanasambandam, Vladlen Koltun, and Stanley H. Chan:

Event-Based Vision Algorithms

Samsung Strategy & Innovation Center publishes "Event-based Vision Algorithms" lecture by Kostas Daniilidis from the University of Pennsylvania:

Monday, September 28, 2020

Single-Pixel Imaging Review

OSA Optics Express publishes "Single-pixel imaging 12 years on: a review" by Graham M. Gibson, Steven D. Johnson, and Miles J. Padgett from Glasgow University, UK.

"Modern cameras typically use an array of millions of detector pixels to capture images. By contrast, single-pixel cameras use a sequence of mask patterns to filter the scene along with the corresponding measurements of the transmitted intensity which is recorded using a single-pixel detector. This review considers the development of single-pixel cameras from the seminal work of Duarte et al. up to the present state of the art. We cover the variety of hardware configurations, design of mask patterns and the associated reconstruction algorithms, many of which relate to the field of compressed sensing and, more recently, machine learning. Overall, single-pixel cameras lend themselves to imaging at non-visible wavelengths and with precise timing or depth resolution. We discuss the suitability of single-pixel cameras for different application areas, including infrared imaging and 3D situation awareness for autonomous vehicles."

Autosens Interview with Espros CEO

Sunday, September 27, 2020

Maxim Unveils Low-Cost Gesture Sensor for Automotive Applications

PRNewswire: Maxim introduces the MAX25205 hand-gesture control sensor, claimed to be the industry's lowest-cost and smallest. Featuring integrated optics and a 6x10 IR sensor array, the MAX25205 detects swipe and hand-rotation gestures without the complexity of ToF cameras, at 10x lower cost and up to 75% smaller size.

"Maxim Integrated's MAX25205 is a game changer for the automotive industry," said Szu-Kang Hsien, executive business manager for Automotive Business Unit at Maxim. "By offering the most dynamic gesture control for automotive applications at the lowest cost, automakers can avoid the prohibitive costs of time-of-flight camera solutions and offer gesture sensing in more car models. It offers a stylish, cool factor to cars, especially for laid back drivers who prefer to use gesture for control with the added benefit of keeping their touch screens dirt-free."

Saturday, September 26, 2020

DVS Pixel Bias Self-Calibration

Tobi Delbruck and Zhenming Yu from Zurich University publish a video presentation explaining their ISCAS 2020 paper "Self Calibration of Wide Dynamic Range Bias Current Generators."

"Many neuromorphic chips now include on-chip, digitally programmable bias generator circuits. So far, precision of these generated biases has been designed by transistor sizing and circuit design to ensure tolerable statistical variance due to threshold mismatch. This paper reports the use of an integrated measurement circuit based on spiking neuron and a scheme for calibrating each chip set of biases against the smallest of all the biases from that chip. That way, the averaging across individual biases improves overall matching both within a chip and across chips. This paper includes measurements of generated biases, the method for remapping bias values towards more uniform values, and measurements across 5 sample chips. With the method presented in this paper, 1σ mismatch of subthreshold currents is decreased by at least a factor of 3. The firmware implementation completes calibration in about a minute and uses about 1kB of flash storage of calibration data."

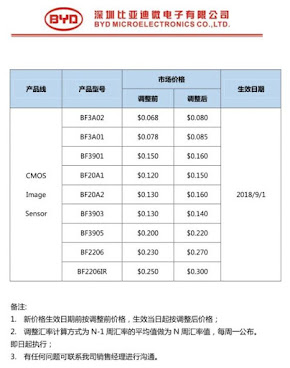

CIS Prices in China

- Galaxycore GC5025, 5MP, 1.12um pixel, integrated ISP - $0.53

- Galaxycore GC032A, VGA, integrated ISP - $0.15

- BYD BF2206, 2MP - $0.23

- BYD BF3903, VGA, integrated ISP - $0.15

- BYD BF3A02, QVGA - $0.068

Friday, September 25, 2020

Automotive News: Cepton, Ouster, Voyant Photonics, Mobileye

BusinessWire: Cepton announces its automotive-grade lidar – the Vista-X90, priced at less than $1000 for high volume applications. It is said to set a new benchmark for high performance at low power in a compact form factor. Weighing less than 900 g, the Vista-X90 achieves up to 200 m range at 10% reflectivity with an angular resolution of 0.13° and power consumption of <12W. The sensor supports frame rates of up to 40 Hz.

With a width of 120 mm, depth of 110 mm and a front-facing height of <45 mm, Vista-X90 is compact and embeddable. Its 90° x 25° field of view, combined with its directional, non-rotational design allows seamless vehicle integration - such as in the fascia, behind the windshield or on the roof.
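To put the quoted figures in perspective, here is a small worked calculation of what a 0.13° angular resolution and a 90° x 25° field of view mean at the 200 m range. It is plain trigonometry under the assumption of a flat target facing the sensor, and it says nothing about Cepton's actual scan pattern.

```python
import math


def sample_spacing_m(range_m, angular_res_deg):
    """Lateral distance between adjacent samples at a given range."""
    return range_m * math.tan(math.radians(angular_res_deg))


def fov_extent_m(range_m, fov_deg):
    """Width (or height) covered by a field of view at a given range."""
    return 2 * range_m * math.tan(math.radians(fov_deg / 2))


spacing = sample_spacing_m(200, 0.13)   # figures quoted in the press release
width = fov_extent_m(200, 90)
height = fov_extent_m(200, 25)
print(f"spacing at 200 m: {spacing:.2f} m")
print(f"coverage at 200 m: {width:.0f} m x {height:.0f} m")
```

Under these assumptions, adjacent samples at 200 m land a little under half a meter apart.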

Vista-X90 has a licensable design architecture powered by Cepton’s patented Micro Motion Technology (MMT) – a frictionless, mirrorless, rotation-free lidar architecture. Cepton has licensed its technology to the world’s largest automotive headlamp Tier 1, Koito, who has non-exclusive rights to manufacture and sell Cepton’s lidar technology for automotive applications, using key modules supplied by Cepton.

“We are excited to disrupt the industry with the Vista-X90, which is the most cost-effective, high-performance lidar in the world for automotive applications,” said Jun Pei, Cepton’s CEO. “Automotive lidars have historically had either low performance at acceptable cost or claimed high performance while being too expensive for many OEM programs. The Vista-X90 fundamentally changes the game by bridging that divide and delivering the optimal mix of performance, power, reliability and cost. This is an integral part of our plan to make lidar available as an essential safety device in every consumer vehicle in the world.”

The Vista-X90 is targeted for production in 2022 and beyond, and samples can be made available upon request.

Yole publishes an interview with Ouster CEO Angus Pacala "A rising star in the LiDAR landscape – An interview with Ouster." A few quotes:

"Since our founding in 2015, Ouster has secured over 800 customers and $140 million in funding. Our headquarters is in San Francisco, and we have offices in Paris, Hamburg, Frankfurt, Hong Kong, and Suzhou.

At the time, nobody thought that you could use VCSELs and SPADs to make a high-performance sensor, but we figured out how to do it and patented our approach. The design relies on these two chips with the lasers and detectors, and a lens in front of each chip. In that way, digital lidar really resembles a digital camera."

Voyant Photonics publishes a Medium article "LiDAR-on-a-Chip is Not a Fool’s Errand" with bold claims on its FMCW LiDAR capabilities:

"With every pulse our FMCW LiDAR receives reflectance and polarization measurements that let it differentiate pavement from metal, hands from coffee mugs, street signs from rubber tires, and of course a pot hole from a plastic bag. We expect to read painted markings on asphalt, in total darkness, far past where cameras could. FMCW Lidar is like a sensor from Star Trek. It can tell you where something is, how fast it’s moving, and also what it is made of.

Voyant’s devices are not science fiction. They fit in your hand. They are real. We plan on producing more of them by the end of 2022 than Velodyne has sold across all its products in the last 13 years."

Thursday, September 24, 2020

Microsoft ToF Group Pursues Industrial Applications with SICK

SICK AG is working with Microsoft to enable the development of commercial industrial 3D cameras and related solutions, which will be compatible with a Microsoft ecosystem built on top of Microsoft depth, Intelligent Cloud, and Intelligent Edge platforms. Selected customers are already testing SICK cameras that incorporate Microsoft ToF depth technology.

SICK and Microsoft are expanding 3D ToF technologies in the context of Industry 4.0, to bring state of the art technologies to SICK’s 3DToF Visionary-T camera product line, and make it smarter, using Azure Intelligent Cloud and Intelligent Edge capabilities.

SICK’s latest industrial 3DToF camera Visionary-T Mini is expected to be available for sale in early 2021, while prototypes are available now. Visionary-T Mini incorporates another variant of Microsoft’s 3D ToF technology with an impressive dynamic range and a resolution of ~510 x 420 pixels. It will offer extended performance and advanced on-device processing infrastructure and tools not currently available with Azure Kinect DK, including, but not limited to: 24/7 robustness, industrial interfaces, enhanced resolution with sharper depth images and enhanced depth quality.

Lessons Learned in a Hard Way

Wednesday, September 23, 2020

ADI Partners with Microsoft on ToF Imaging

BusinessWire: Analog Devices announces a strategic collaboration with Microsoft to leverage Microsoft’s 3D ToF sensor technology.

“Our customers want depth image capture that ‘just works’ and is as easy as taking a photo,” said Duncan Bosworth, GM, Consumer Business Unit, Analog Devices. “Microsoft’s ToF 3D sensor technology used in the HoloLens mixed-reality headset and Azure Kinect Development Kit is seen as the industry standard for time-of-flight technologies. Combining this technology with custom-built solutions from ADI, our customers can easily deploy and scale the next generation of high-performance applications they demand, out of the box.”

“Analog Devices is an established leader in translating physical phenomena into digital information,” said Cyrus Bamji, Microsoft Partner Hardware Architect, Microsoft. “This collaboration will expand market access of our ToF sensor technology and enable the development of commercial 3D sensors, cameras, and related solutions, which will be compatible with a Microsoft ecosystem built on top of Microsoft depth, Intelligent Cloud, and Intelligent Edge platforms.”

Tuesday, September 22, 2020

High-Speed SPAD Sensor

Ams Presents its 3D Sensing Solutions

Ams publishes a couple of videos about its 3D sensing solutions:

Assorted News: Egis Sues Goodix, LeddarTech Acquires Phantom Intelligence Assets

Lynred and PI Tutorials

Lynred starts publishing tutorials on IR imaging. The first part is Infrared detection basics.

Teledyne Princeton Instruments publishes a tutorial "The Fundamentals Behind Modern Scientific Cameras."

Monday, September 21, 2020

Assorted News: Sense Photonics, MIPI, Pamtek, CSET, CoreDAR, Samsung

LaserFocusWorld publishes an interview with Shauna McIntyre, the new CEO of Sense Photonics. A few quotes:

"We have core flash lidar technology in the laser emitter, the detector array, and the algorithms and software stack. The proprietary laser emitter is based on a large VCSEL array, which provides high, eye-safe optical output power for long-range detection and wide field-of-view at a low cost point that is game-changing. Because the emitter’s wavelength is centered around 940 nm, our detector array can be based on inexpensive CMOS technology for low cost, and we get the added benefit of lower background light from the sun for a higher signal-to-noise ratio. From an architecture perspective, we intentionally chose a flash architecture because of its simple camera-like global shutter design, scalability to high-volume manufacture, the benefit of having no moving parts, and most importantly, it enables low cost.

Our laser array is a network consisting of thousands of VCSELs interconnected in a way that provides short pulses of high-power light. In keeping with our philosophy of design simplicity and high performance for our customers, we actuate the array to generate a single laser flash rather than adding complexity and cost associated with a multi-flash approach."

- High reliability: Ultra-low packet error rate (PER) of 10^-19 for unprecedented performance over the vehicle lifetime

- High resiliency: Ultra-high immunity to EMC effects in demanding automotive conditions

- Long reach: Up to 15 meters

- High performance: Data rate as high as 16 Gbps with a roadmap to 48 Gbps and beyond; v1.1, already in development, will provide a doubling of the high-speed data rate to 32 Gbps and increase the uplink data rate to 200 Mbps

Sunday, September 20, 2020

DVS Company Celepixel Acquired by Will Semiconductor

Upconversion Device for THz to LWIR to MWIR Imaging

Phys.org: Physical Review paper "Molecular Platform for Frequency Upconversion at the Single-Photon Level" by Philippe Roelli, Diego Martin-Cano, Tobias J. Kippenberg, and Christophe Galland from EPFL and Max Planck Institute proposes a way to convert photons with wavelengths between 60um and 3um into photons in the 0.5-1um range.

"Direct detection of single photons at wavelengths beyond 2um under ambient conditions remains an outstanding technological challenge. One promising approach is frequency upconversion into the visible (VIS) or near-infrared (NIR) domain, where single-photon detectors are readily available. Here, we propose a nanoscale solution based on a molecular optomechanical platform to up-convert photons from the far- and mid-infrared (covering part of the terahertz gap) into the VIS-NIR domain. We perform a detailed analysis of its outgoing noise spectral density and conversion efficiency with a full quantum model. Our platform consists in doubly resonant nanoantennas focusing both the incoming long-wavelength radiation and the short-wavelength pump laser field into the same active region. There, infrared active vibrational modes are resonantly excited and couple through their Raman polarizability to the pump field. This optomechanical interaction is enhanced by the antenna and leads to the coherent transfer of the incoming low-frequency signal onto the anti-Stokes sideband of the pump laser. Our calculations demonstrate that our scheme is realizable with current technology and that optimized platforms can reach single-photon sensitivity in a spectral region where this capability remains unavailable to date."

Saturday, September 19, 2020

Samsung Registers ISOCELL Vizion Trademark for ToF and DVS Products

LetsGoDigital noticed that Samsung has registered the ISOCELL Vizion trademark:

"Samsung ISOCELL Vizion trademark description: Time-of-flight (TOF) optical sensors for smartphones, facial recognition system comprising TOF optical sensors, 3D modeling and 3D measurement of objects with TOF sensors; Dynamic Vision Sensor (DVS) in the nature of motion sensors for smartphones; proximity detection sensors and Dynamic Vision Sensor (DVS) for detecting the shape, proximity, movement, color and behavior of humans."

Friday, September 18, 2020

PRNU Pattern is Not Unique Anymore

University of Florence, FORLAB, and AMPED Software, Italy, publish an interesting arxiv.org paper "A leak in PRNU based source identification? Questioning fingerprint uniqueness" by Massimo Iuliani, Marco Fontani, and Alessandro Piva.

"Photo Response Non Uniformity (PRNU) is considered the most effective trace for the image source attribution task. Its uniqueness ensures that the sensor pattern noises extracted from different cameras are strongly uncorrelated, even when they belong to the same camera model. However, with the advent of computational photography, most recent devices of the same model start exposing correlated patterns thus introducing the real chance of erroneous image source attribution. In this paper, after highlighting the issue under a controlled environment, we perform a large testing campaign on Flickr images to determine how widespread the issue is and which is the plausible cause. To this aim, we tested over 240000 image pairs from 54 recent smartphone models comprising the most relevant brands. Experiments show that many Samsung, Xiaomi and Huawei devices are strongly affected by this issue. Although the primary cause of high false alarm rates cannot be directly related to specific camera models, firmware nor image contents, it is evident that the effectiveness of PRNU-based source identification on the most recent devices must be reconsidered in light of these results. Therefore, this paper is to be intended as a call to action for the scientific community rather than a complete treatment of the subject."

Melexis Reports 1M Automotive ToF Sensors Shipped

Melexis publishes an article on its history in automotive ToF imaging, starting from cooperation with Free University of Brussels (VUB), continuing with VUB spin-off Softkinetic that was acquired by Sony and renamed to Sony Depthsensing Solutions.

So far, Melexis has shipped 1M automotive ToF sensors:

"At Melexis, we are proud of having designed the first automotive qualified ToF sensor IC with our first generation MLX75023. This proves our capability to not only design but also produce the new technology in line with stringent automotive quality standards. It is therefore with great pleasure that we are the first to have reached in 2019 the impressive milestone of having more than 1 million ToF image sensor ICs on the road."Melexis also announces a QVGA ToF sensor MLX75027 and publishes "ToF Basics" tutorial.