Press release:

https://voxelsensors.com/wp-content/uploads/2023/05/VoxelSensors_Announces_Seed_Round_Closing_May-17-2023-_-RC_FINAL.pdf

Brussels (Belgium), May 17, 2023

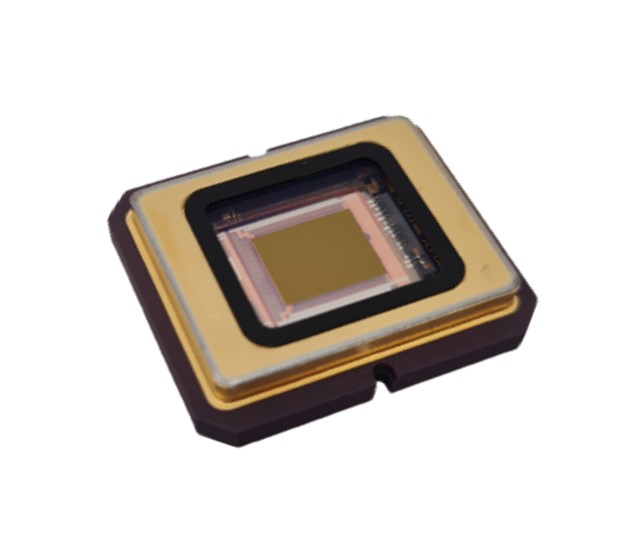

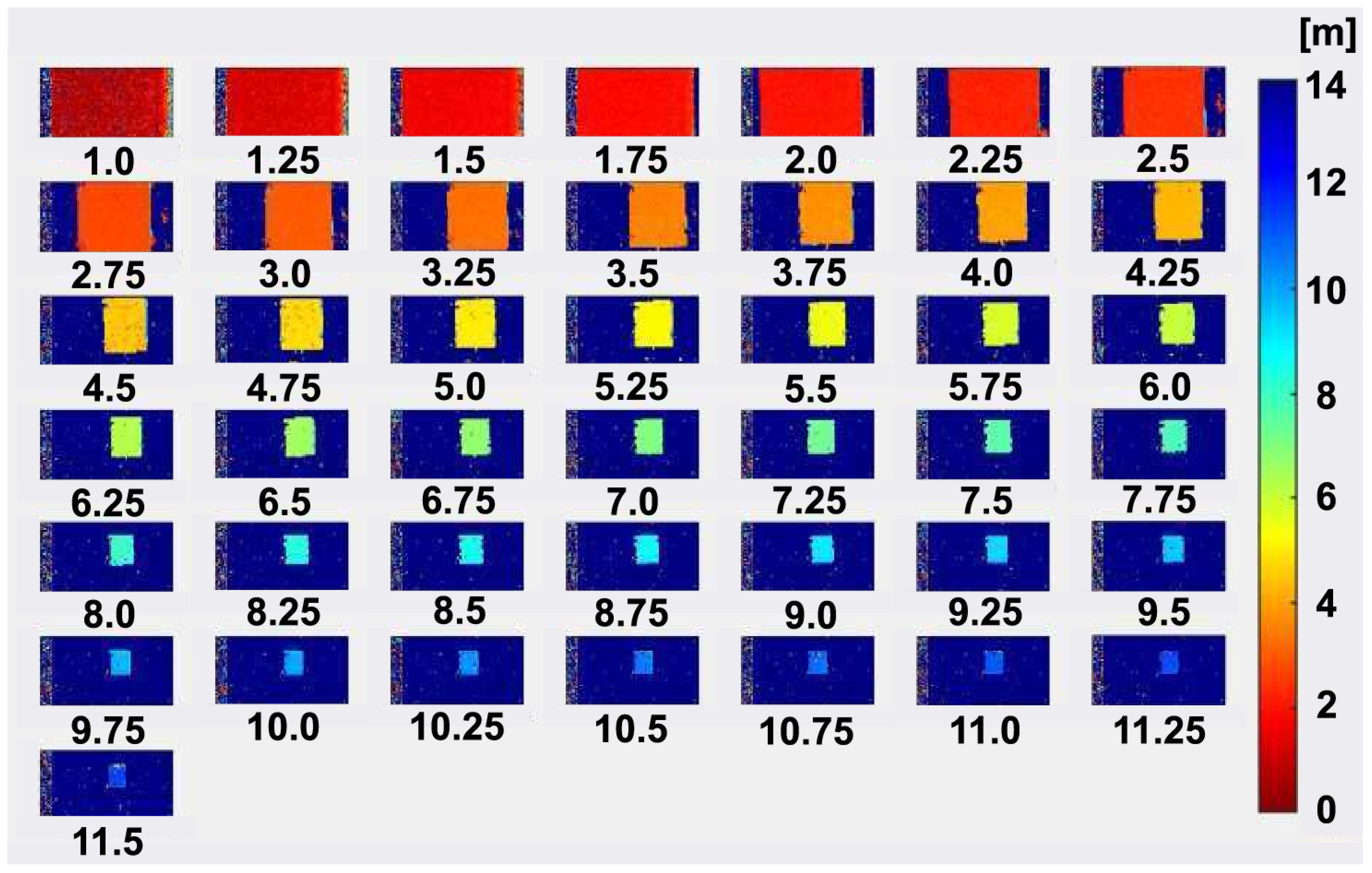

VoxelSensors today announces an investment of €5M led by Belgian venture capital firms Capricorn Partners and Qbic, with participation from the investment firm finance&invest.brussels, existing investors and the team. VoxelSensors’ Switching Pixels® Active Event Sensor (SPAES) is a novel category of ultra-low power and ultra-low latency 3D perception sensors for Extended Reality (XR) to blend the physical and digital worlds. The funding will be used to further develop VoxelSensors’ roadmap, hire key employees, and strengthen business engagements with customers in the U.S. and Asia. Furthermore, VoxelSensors remains committed to raising funds in order to back its ambitious growth plans.

Extended Reality device manufacturers require low power consumption and low latency 3D perception technology to seamlessly blend the physical and digital worlds and unlock the true potential of immersive experiences. VoxelSensors’ patented Switching Pixels® Active Event Sensor technology has uniquely resolved these significant 3D perception challenges and is the world’s first solution reaching less than 10 milliwatts power consumption combined with less than 5 milliseconds latency while being resistant to outdoor lighting at distances over 5 meters and being immune to crosstalk interference.

The founders of VoxelSensors boast a combined experience of more than 50 years in the development of cutting-edge 3D sensor technologies, systems and software. Their track record of success includes co-inventing an efficient 3D Time of Flight sensor and camera technology, which was acquired by a leading tech company.

“Our goal at VoxelSensors is to seamlessly integrate the physical and digital worlds to a point level where they become indistinguishable,” said Johannes Peeters, co-founder and CEO of VoxelSensors. “Extended Reality has rapidly gained traction in recent years, with diverse applications across sectors such as gaming, entertainment, education, healthcare, manufacturing, and more. With our Switching Pixels® Active Event Sensor technology we are poised to deliver unparalleled opportunities for groundbreaking user experiences. We are excited by the opportunity to contribute to the growth of our growing industry and honored by the trust of these investors to help us expand the company and accelerate market penetration.”

“We are excited to invest with the Capricorn Digital Growth Fund in VoxelSensors. We appreciate the broad experience in the team, the flexibility of the 3D perception solution towards different applications and the solid intellectual property base, essential for the success of a deep tech start-up. The team has a proven track record to build a scalable business model within a Europe-based semiconductor value chain. We also highly value the support of the Brussels region via Innoviris,” explained Marc Lambrechts, Investment Director at Capricorn Partners.

“As an inter-university fund, Qbic is delighted to support VoxelSensors in this phase of its journey. It’s a pleasure to see the team that led one of Vrije Universiteit Brussel’s (VUB) most prominent spinoffs to successful exit, start another initiative in this space. They will leverage again the expertise VUB has in this domain, through an extensive research collaboration,” said Steven Leuridan, Partner at Qbic III Fund. “We truly believe VoxelSensors is a shining example of a European fabless semiconductor company that holds potential to lead its market.”

Marc Lambrechts from Capricorn Partners and Steven Leuridan from Qbic are appointed to VoxelSensors’ Board of Directors, effective immediately.

“With Switching Pixels® Active Event Sensing (SPAES) we challenge the status quo in 3D perception,” concludes Ward van der Tempel, PhD, co-founder and CTO of VoxelSensors. “This groundbreaking technology unlocks new possibilities in Extended Reality by addressing previously unmet needs such as precise segmentation, spatial mapping, anchoring and natural interaction. Moreover, this breakthrough innovation extends beyond Extended Reality, and has exciting potential in various industries, including robotics, automotive, drones, and medical applications.”

VoxelSensors will showcase its breakthrough technology at the Augmented World Expo (AWE) USA 2023 from May 31 to June 2, 2023, in Santa Clara (California, USA). Evaluation Kits of the SPAES technology are available for purchase through sales@voxelsensors.com.