The April 2023 edition of ADAS and Autonomous Vehicles International features a cover story by Dr. Sabbir Rangwala on the miniaturization of lidar sensors.

https://www.ukimediaevents.com/publication/9cb6eeeb/36

The single biggest obstacle to lidar miniaturization is the laser. Producing photons from electrons is difficult and inefficient. The telecommunications revolution of the 1990s went a long way toward moving semiconductor lasers from research labs to the factory and into terrestrial and undersea fiber-optic networks. Lidar poses challenges of a different nature, because the laser energy is transmitted through free space. The transmit-receive process is inefficient due to atmospheric attenuation and range-related optical coupling losses. Addressing thousands of image elements over a reasonable field of view (FoV) with acceptable resolution and high frame rates requires power levels that are difficult to achieve with semiconductor lasers. This leads to the use of optical amplification (fiber lasers), large laser arrays (such as VCSELs) or time and space sharing of the laser energy (scanning).

Eye safety is another consideration. The 800-900nm wavelengths used by some lidars have low eye-safety margins. Margins improve with 1,300-1,500nm lasers, but there are still limits on the safe power density that can be used to achieve a given level of performance. Engineering eye-safe solutions requires bulky system packaging and optics.

Lasers are also inefficient and sensitive to temperature. Roughly 70-80% of the electrical energy used is converted to heat, which must be managed. Automotive temperature ranges cause further problems because of wavelength shifts and additional efficiency degradation. The complex III-V semiconductors used for lasers (GaAs or InGaAs) degrade faster at higher temperatures and in the presence of moisture, so active cooling and more complex packaging are required.

At a system level, lidar currently requires hybrid integration of different materials and components: complex III-V semiconductors, silicon processing electronics, glass fibers, bulk optics (focusing lenses, isolators), scanning mechanisms, thermal management and complex packaging.
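To put rough numbers on the transmit-receive losses and the heat load described above, here is a minimal Python sketch. It uses a simplified lidar link budget for an extended, diffusely reflecting (Lambertian) target, where the returned fraction of transmitted power falls as 1/R² and is further reduced by two-way atmospheric attenuation, plus the heat arithmetic implied by a 20-30% wall-plug efficiency. The function names `received_power_w` and `laser_heat_w` and every default value (10% target reflectivity, 25 mm receive aperture, 50% optics efficiency, 1e-4 per meter extinction coefficient) are illustrative assumptions, not figures from the article.

```python
import math


def received_power_w(p_tx_w: float, range_m: float,
                     reflectivity: float = 0.1,          # assumed target reflectivity
                     aperture_diameter_m: float = 0.025, # assumed receive aperture
                     optics_efficiency: float = 0.5,     # assumed optical throughput
                     extinction_per_m: float = 1e-4) -> float:  # assumed clear air
    """Simplified link budget for an extended Lambertian target (assumption).

    P_rx = P_tx * rho * A_rx / (pi * R^2) * eta * exp(-2 * alpha * R)
    The 1/R^2 term is the range-related coupling loss; the exponential is
    two-way atmospheric attenuation.
    """
    aperture_area_m2 = math.pi * (aperture_diameter_m / 2) ** 2
    geometric = reflectivity * aperture_area_m2 / (math.pi * range_m ** 2)
    atmospheric = math.exp(-2 * extinction_per_m * range_m)
    return p_tx_w * geometric * optics_efficiency * atmospheric


def laser_heat_w(electrical_power_w: float, wall_plug_efficiency: float) -> float:
    """Electrical input that is not emitted as light ends up as heat."""
    return electrical_power_w * (1.0 - wall_plug_efficiency)


if __name__ == "__main__":
    for r in (10, 50, 100, 200):
        # With 1 W transmitted, the result is the returned fraction.
        print(f"{r:>4} m: {received_power_w(1.0, r):.2e} of transmitted power returns")
    # A 25% wall-plug efficiency means 75% of the input becomes heat.
    print(laser_heat_w(20.0, 0.25), "W of heat from 20 W of electrical input")
```

With these assumed values, less than one part in a billion of the transmitted power returns from a target at 100 m, and doubling the range cuts the return by slightly more than 4x once atmospheric loss is included.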

Current approaches

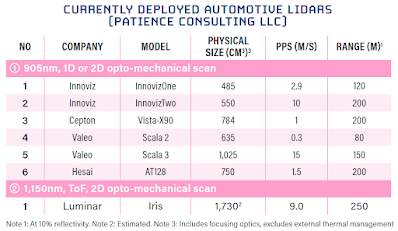

The FoV is addressed with purely solid-state approaches (no moving parts) in three ways. The first uses a single laser pulse, or flash, in which all image pixels are addressed simultaneously (PreAct, TriEye, Ouster). The second uses electronic scanning arrays of monolithic silicon SPADs and GaAs VCSELs that address regions in the FoV sequentially (Opsys, Hesai). The VCSEL-SPAD approach leverages the development, productization and integration of ToF (time-of-flight) lidar in smartphones at 905/940nm wavelengths (exact values vary and are proprietary). The third uses optical scanning with a combination of phase-tuning antennas (optical phased arrays, or OPAs) and wavelength dispersion, implemented on chip-scale silicon photonics platforms (Analog Photonics). This platform is compatible with FMCW coherent lidar, which measures range and radial velocity simultaneously and operates in the 1,500nm wavelength band.

PreAct focuses on short-range lidar for in-cabin sensing and road-facing applications. The approach is disruptive: it uses low-cost, off-the-shelf CCD arrays and LED (rather than laser) light sources to create 3D images based on indirect time of flight (iToF, as in gaming applications). The TrueSense T30 operates at an impressively high 150Hz frame rate, which is important for the fast reaction times required by short-range applications such as blind-spot obstacle avoidance and pedestrian safety. Its size envelope includes an 8MP RGB camera and electronics that fuse the visible and 3D images; eliminating the RGB sensor could reduce the size further.

TriEye’s SEDAR (Spectrum Enhanced Detection and Ranging) is a flash lidar that uses a 1.3MP CMOS-based germanium-silicon SWIR detector array and an internally developed, Q-switched, high-peak-power, diode-pumped solid-state laser that flashes the entire FoV. The longer wavelength provides greater eye-safety margins, which enables the use of higher laser power.
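The ranging principles mentioned above can be summarized with textbook relations: direct ToF (flash and SPAD-based lidars) halves the round-trip time of flight, iToF (PreAct) converts the measured phase shift of a modulated source into distance, and FMCW coherent lidar (the mode the Analog Photonics platform supports) separates range and radial velocity using the up- and down-chirp beat frequencies of a triangular chirp. This Python sketch uses idealized equations only. The function names, the 20 MHz modulation frequency, the 1 GHz / 10 µs chirp and the 1,550nm wavelength used in the example are assumptions for illustration, not vendor parameters, and the FMCW sign convention varies between texts.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def dtof_range_m(round_trip_s: float) -> float:
    """Direct ToF: light travels out and back, so halve the path length."""
    return C * round_trip_s / 2


def itof_range_m(phase_rad: float, f_mod_hz: float) -> float:
    """Indirect ToF: d = c * phi / (4 * pi * f_mod), wrapped to one period."""
    return C * (phase_rad % (2 * math.pi)) / (4 * math.pi * f_mod_hz)


def itof_unambiguous_range_m(f_mod_hz: float) -> float:
    """Targets beyond c / (2 * f_mod) alias back into the first period."""
    return C / (2 * f_mod_hz)


def fmcw_range_velocity(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Triangular FMCW: recover range and radial velocity from beat frequencies.

    Assumed convention: f_up = f_R - f_D and f_down = f_R + f_D,
    with positive velocity meaning an approaching target.
    """
    slope = bandwidth_hz / chirp_s             # chirp rate, Hz/s
    f_range = (f_up_hz + f_down_hz) / 2        # range-induced beat
    f_doppler = (f_down_hz - f_up_hz) / 2      # Doppler shift
    range_m = C * f_range / (2 * slope)
    velocity_mps = wavelength_m * f_doppler / 2
    return range_m, velocity_mps


def distance_per_frame_m(speed_mps: float, frame_rate_hz: float) -> float:
    """How far the scene moves between consecutive frames."""
    return speed_mps / frame_rate_hz


if __name__ == "__main__":
    print(dtof_range_m(1e-6))                 # about 150 m
    print(itof_unambiguous_range_m(20e6))     # about 7.5 m
    print(distance_per_frame_m(15.0, 150.0))  # 0.1 m between frames at 150 Hz
```

For example, a 1 µs round trip corresponds to about 150 m, a 20 MHz iToF source aliases beyond about 7.5 m, and at 150Hz a vehicle moving at 15 m/s travels 10 cm between frames, which illustrates why a high frame rate helps short-range reaction times.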
Opsys uses a unique implementation of electronically addressable, high-power VCSEL and SPAD arrays to achieve a solid-state lidar with no moving parts. It operates over automotive temperature ranges without any active cooling or temperature stabilization. Hesai is in production for multiple automotive customers with the AT128 long-range lidar, which uses mechanical scanning for the horizontal FoV (HFoV). The FT120 is a fully solid-state lidar that uses electronic scanning of its VCSEL and SPAD arrays and targets short-range applications (blind-spot detection, in-cabin sensing, etc.). The company went public in January 2023 and is currently in a quiet period.
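Electronically scanned VCSEL-SPAD lidars such as those described for Opsys and Hesai address the FoV one region at a time. A common way for SPAD receivers to measure direct ToF is to fire many laser pulses per region and histogram the photon arrival times, so the return stands out against uniformly distributed background counts. The Python sketch below simulates that process under stated assumptions: the 1 ns bin width, histogram depth, detection probabilities and all function names are hypothetical, and it ignores SPAD dead time, pile-up and crosstalk. It is not either company's implementation.

```python
import random

C = 299_792_458.0  # speed of light, m/s
BIN_S = 1e-9       # hypothetical 1 ns timing bin (about 15 cm of range)
N_BINS = 1000      # hypothetical histogram depth (1 us, about 150 m)


def fire_region(true_range_m, n_pulses, rng, signal_prob=0.05, background_prob=0.2):
    """Simulate one VCSEL bank and its matching SPAD group over many pulses.

    Per pulse (hypothetical model): a signal photon with probability
    signal_prob, jittered by up to one bin around the true ToF, and at most
    one background count with probability background_prob, uniform in time.
    """
    hist = [0] * N_BINS
    tof_bin = int(2 * true_range_m / C / BIN_S)
    for _ in range(n_pulses):
        if rng.random() < signal_prob:
            b = tof_bin + rng.choice((-1, 0, 0, 1))  # timing jitter
            if 0 <= b < N_BINS:
                hist[b] += 1
        if rng.random() < background_prob:
            hist[rng.randrange(N_BINS)] += 1
    return hist


def range_from_histogram(hist):
    """Take the peak bin as the return and convert its center to range."""
    peak = max(range(len(hist)), key=hist.__getitem__)
    return C * (peak + 0.5) * BIN_S / 2


def scan_frame(region_ranges_m, n_pulses=2000, seed=0):
    """Address regions sequentially, as an electronically scanned array would."""
    rng = random.Random(seed)
    return [range_from_histogram(fire_region(r, n_pulses, rng))
            for r in region_ranges_m]


if __name__ == "__main__":
    print(scan_frame([12.0, 50.0, 120.0]))
```

Sequential addressing lets each region use the full per-pulse energy of its VCSEL bank, at the cost of splitting the frame time across regions, which is one form of the time sharing of laser energy discussed earlier.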