AllTechAsia, ChinaMoneyNetwork: Alibaba investment arm Ant Financial leads Orbbec's $200m Series D financing round. Other investors include SAIF Partners, R-Z Capital, Green Pine Capital Partners, and Tianlangxing Capital. Structured light 3D camera maker Orbbec is said to be the fourth largest company in the world mass-producing 3D sensors for consumer use. Orbbec says that its solution is now used by over 2,000 companies globally and can be applied in various fields, including unmanned retail, autonomous driving, home systems, smart security, robotics, Industry 4.0, and VR/AR.

Saturday, June 30, 2018

Friday, June 29, 2018

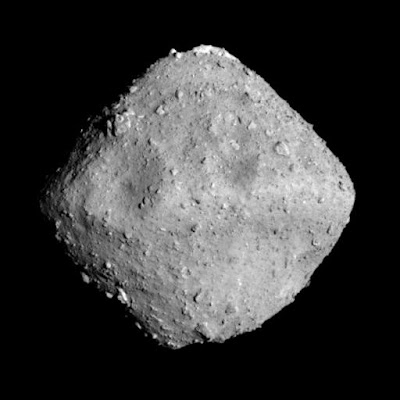

Hayabusa2 Takes Pictures of Ryugu

JAXA: Japanese asteroid explorer Hayabusa2 has arrived within 20 km of Ryugu, its target asteroid, after three and a half years en route. The mission is expected to bring a sample of the asteroid back to Earth in December 2020.

Here is one of the first Ryugu images from a short distance:

Hayabusa2 carries four cameras:

Thursday, June 28, 2018

Omnivision Loses Lawsuit Against SmartSens

SmartSens kindly sent me an official update on the Omnivision lawsuit against SmartSens:

"SHANGHAI, June 25, 2018 – SmartSens, a leading provider of high-performance CMOS image sensors, responded to its patent infringement lawsuit today.

With regard to the recent lawsuit that accused SmartSens of infringing patents No. 200510052302.4 (titled “CMOS Image Sensor Using Shared Transistors between Pixels”) and No. 200680019122.9 (titled “Pixel with Symmetrical Field Effect Transistor Placement”), the Patent Reexamination Board of State Intellectual Property Office of the People’s Republic of China has ruled the two patents in question invalid.

According to the relevant judicial interpretation of the Supreme People’s Court, the infringement case regarding the above-mentioned patents will be dismissed.

“As a company researching, developing and utilizing technology, we pay due respect to intellectual property. However, we will not yield to any false accusations and misuse of intellectual property law,” said SmartSens CTO Yaowu Mo, Ph.D. “SmartSens will take legal measures to not only defend the company and its property, but also protect our partners’ and clients’ interest. SmartSens attributes its success to our talent and intellectual property. In this case, we are pleased to see that justice is served and a law-abiding company like SmartSens is protected.”

Thus far, SmartSens has applied for more than 100 patents, of which 75 were submitted in China and more than 20 have been granted. More than 30 patent applications were submitted in the United States, and more than 20 have been granted.

About SmartSens

SmartSens Technology Co., Ltd, a leading supplier of high-performance CMOS imaging systems, was founded by Richard Xu, Ph.D. in 2011. SmartSens’ R&D teams in Silicon Valley and Shanghai develop industry-leading image sensing technology and products. The company receives strong support from strategic partners and an ISO-Certified supply chain infrastructure, and delivers award-winning imaging solutions for security and surveillance, consumer products, automotive and other mass market applications."

LiDARs in China

ResearchInChina publishes "Global and China In-vehicle LiDAR Industry Report, 2017-2022." A few quotes:

"Global automotive LiDAR sensor market was USD300 million in 2017, and is expected to reach USD1.4 billion in 2022 and soar to USD4.4 billion in 2027 in the wake of large-scale deployment of L4/5 private autonomous cars. Mature LiDAR firms are mostly foreign ones, such as Valeo and Quanergy. Major companies that have placed LiDARs on prototype autonomous driving test cars are Velodyne, Ibeo, Luminar, Valeo and SICK.

Chinese LiDAR companies lag behind key foreign peers in terms of time of establishment and technology. LiDARs are primarily applied to autonomous logistic vehicles (JD and Cainiao) and self-driving test cars (driverless vehicles of Beijing Union University and Moovita). Baidu launched Pandora (co-developed with Hesai Technologies), the sensor integrating LiDAR and camera, in its Apollo 2.5 hardware solution.

According to ADAS and autonomous driving plans of major OEMs, most of them will roll out SAE L3 models around 2020. Overseas OEMs: PSA SAE L3 (2020), Honda SAE L3 (2020), GM SAE L4 (2021+), Mercedes Benz SAE L3 (Mercedes Benz new-generation S in 2021), BMW SAE L3 (2021). Domestic OEMs: SAIC SAE L3 (2018-2020), FAW SAE L3 (2020), Changan SAE L3 (2020), Great Wall SAE L3 (2020), Geely SAE L3 (2020), and GAC SAE L3 (2020). The L3-and-above models with LiDAR are expected to share 10% of ADAS models in China in 2022. The figure will hit 50% in 2030."
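The report does not spell out growth rates, but they follow directly from the quoted market sizes. A minimal Python sketch (our own arithmetic, not from ResearchInChina) computing the compound annual growth rate (CAGR) implied by USD 300M in 2017, USD 1.4B in 2022 and USD 4.4B in 2027:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1.0 / years) - 1.0


# Market sizes quoted in the report, in USD millions.
market = {2017: 300.0, 2022: 1400.0, 2027: 4400.0}

years = sorted(market)
for y0, y1 in zip(years, years[1:]):
    rate = cagr(market[y0], market[y1], y1 - y0)
    print(f"{y0}-{y1}: {rate:.1%} per year")
```

This works out to roughly 36% per year for 2017-2022 and about 26% per year for 2022-2027.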

13NewsNow talks about Tesla denying the need for LiDAR altogether: "Tesla has looked to cameras and radar — without lidar — to do much of the work needed for its Autopilot driver assistance system.

But other automakers and tech companies rushing to develop autonomous cars — Waymo, Ford and General Motors, for instance — are betting on lidar.

"Tesla's trying to do it on the cheap," [Sam Abuelsamid, an analyst with Navigant Research] said. "They're trying to take the cheap approach and focus on software. The problem with software is it's only as good as the data you can feed it."

Wednesday, June 27, 2018

Velodyne CTO Promotes High Resolution LiDAR

Velodyne publishes a video with its CTO Anand Gopalan talking about VLS-128 improvements and features:

Vivo Smartphone ToF Camera is Official Now

PRNewswire: Vivo reveals its TOF 3D Sensing Technology "with the promise of a paradigm shift in imaging, AR and human-machine interaction, which will elevate consumer lifestyles with new levels of immersion and smart capability."

Vivo's TOF 3D camera has a depth resolution of 300,000 pixels, which is said to be 10x that of existing structured light solutions. It enables 3D mapping at up to 3m from the phone while requiring a smaller baseline than structured light. TOF 3D Sensing Technology is also simpler and smaller in structure, allowing more flexibility when embedded in a smartphone. This will enable much broader application of the technology than was previously possible.
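For readers new to ToF, depth follows from the light's round-trip time. The Python sketch below uses a generic two-window pulsed ToF model as an assumption for illustration; it is not a description of Vivo's or ADI's actual pixel or processing, and the charge values are hypothetical. It shows that the quoted 3m range corresponds to roughly 20 ns of round-trip time.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def round_trip_time(distance_m: float) -> float:
    """Time for light to travel from the camera to a target and back, in seconds."""
    return 2.0 * distance_m / C


def pulsed_tof_depth(q1: float, q2: float, pulse_width_s: float) -> float:
    """Depth from a generic two-window pulsed ToF pixel (illustrative model).

    Window 1 integrates while the light pulse is emitted, window 2 right after it,
    so the echo's charge splits between them in proportion to its delay.
    Valid only for delays shorter than the pulse width; ambient light is ignored.
    """
    delay_s = pulse_width_s * q2 / (q1 + q2)
    return C * delay_s / 2.0


# A target 3 m away returns the light after roughly 20 ns.
print(f"round trip for 3 m: {round_trip_time(3.0) * 1e9:.1f} ns")

# Hypothetical charges with a 20 ns pulse: a quarter of the echo lands in window 2.
print(f"depth: {pulsed_tof_depth(75.0, 25.0, 20e-9):.2f} m")
```

In this model a 20 ns pulse also sets the maximum measurable range to about c·T/2, i.e. roughly 3 m.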

Vivo's TOF 3D Sensing Technology is no mere proof of concept. The technology has been tested and meets the industry standards required for integration with current apps, which is expected soon. Beyond facial recognition, TOF 3D Sensing Technology will open up new possibilities for entertainment as well as work.

Update: Analog Devices (ADI) claims that the Vivo ToF camera uses its technology (based on a Panasonic CCD):

Samsung Cooperates with Fujifilm to Improve Color Separation

BusinessWire: Samsung introduces its new 'ISOCELL Plus' technology. The original ISOCELL, introduced in 2013, forms a physical barrier between neighboring pixels, reducing color crosstalk and expanding the full-well capacity.

With the introduction of ISOCELL Plus, Samsung improves isolation through an optimized pixel architecture. In the existing pixel structure, metal grids are formed over the photodiodes to reduce interference between the pixels, which can also lead to some optical loss as metals tend to reflect and/or absorb the incoming light. For ISOCELL Plus, Samsung replaced the metal barrier with an innovative new material developed by Fujifilm, minimizing optical loss and light reflection.

“We value our strategic relationship with Samsung and would like to congratulate on the completion of the ISOCELL Plus development,” said Naoto Yanagihara, CVP of Fujifilm. “This development is a remarkable milestone for us as it marks the first commercialization of our new material. Through continuous cooperation with Samsung, we anticipate to bring more meaningful innovation to mobile cameras.”

The new ISOCELL Plus delivers higher color fidelity along with up to a 15% enhancement in light sensitivity. The technology is said to enable pixel scaling down to 0.8µm and smaller without a loss in performance.

“Through close collaboration with Fujifilm, an industry leader in imaging and information technology, we have pushed the boundaries of CMOS image sensor technology even further,” said Ben K. Hur, VP of System LSI marketing at Samsung Electronics. “The ISOCELL Plus will not only enable the development of ultra-high-resolution sensors with incredibly small pixel dimensions, but also bring performance advancements for sensors with larger pixel designs.”

Tuesday, June 26, 2018

Vivo to Integrate ToF Front Camera into its Smartphone

Gizchina reports that Vivo is about to announce the integration of a ToF 3D camera into its smartphones at the end of this month:

"In addition to being able to recognize human faces better and more quickly, the recognition distance can be enhanced. Moreover, it does not require the face to be in front of the screen to process. this technology is very likely to be used in a flagship model launched in the second half of the year."

Monday, June 25, 2018

Samsung to Use EUV in Image Sensors Manufacturing

Samsung's investor presentation on June 4, 2018 in Singapore mentions an interesting development at the company's S4 fab:

"S4 line provides CMOS image sensor using 45-nanometer and below process node, and we are building EUV line. We started constructing in February this year."

Samsung has been using 28nm design rules in its image sensors for quite some time. EUV seems to be a natural next step to avoid double patterning limitations on the pixel layout (double patterning is commonly used starting from the 22nm node).
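Why EUV sidesteps double patterning can be seen from the Rayleigh resolution criterion, half-pitch = k1·λ/NA. A minimal Python sketch, assuming the standard tool values (193 nm with NA 1.35 for ArF immersion, 13.5 nm with NA 0.33 for current EUV scanners) and k1 factors that are our own illustrative assumptions:

```python
def min_half_pitch_nm(k1: float, wavelength_nm: float, na: float) -> float:
    """Rayleigh criterion for one exposure: half-pitch = k1 * wavelength / NA."""
    return k1 * wavelength_nm / na


# Wavelengths and NAs are standard tool values; the k1 factors are assumptions.
arf_immersion = min_half_pitch_nm(k1=0.28, wavelength_nm=193.0, na=1.35)
euv = min_half_pitch_nm(k1=0.40, wavelength_nm=13.5, na=0.33)

print(f"193 nm immersion, single exposure: ~{arf_immersion:.0f} nm half-pitch")
print(f"13.5 nm EUV, single exposure:      ~{euv:.0f} nm half-pitch")
```

Under these assumptions a single 193 nm immersion exposure bottoms out around 40 nm half-pitch, so tighter pitches have to be split across two or more exposures, while EUV reaches roughly 16 nm in one exposure.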

Sunday, June 24, 2018

EUV Lithography Drives EUV Imaging

CNET: As Samsung endorses EUV at its 7nm process node, the new generation of photolithography is finally here, after 30 years in development:

ASML cooperates with Imec to develop 13.5nm-wavelength image sensors for its EUV machines:
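For a sense of scale, a back-of-the-envelope Python sketch (our own, not from ASML or Imec) comparing a 13.5 nm photon with a visible 550 nm one, using E = hc/λ and the commonly cited ~3.65 eV average energy per electron-hole pair in silicon:

```python
PLANCK_EV_NM = 1239.84     # h*c in eV*nm
SI_PAIR_ENERGY_EV = 3.65   # approx. mean energy to create one e-h pair in silicon


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h*c / lambda, in electronvolts."""
    return PLANCK_EV_NM / wavelength_nm


def pairs_per_photon(wavelength_nm: float) -> float:
    """Rough mean e-h pairs per absorbed photon (assumes energy above the Si bandgap).

    Visible photons give one pair; high-energy photons give about E / 3.65 eV.
    """
    return max(1.0, photon_energy_ev(wavelength_nm) / SI_PAIR_ENERGY_EV)


for wl in (550.0, 13.5):
    print(f"{wl:6.1f} nm: {photon_energy_ev(wl):6.2f} eV, "
          f"~{pairs_per_photon(wl):.0f} e-h pairs per absorbed photon")
```

A 13.5 nm photon carries about 92 eV, roughly 40 times the energy of a green photon, and on average frees around 25 electron-hole pairs in silicon.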

Saturday, June 23, 2018

Photon Counting History

Edward Fisher from the University of Edinburgh, UK publishes a chapter, "Principles and Early Historical Development of Silicon Avalanche and Geiger-Mode Photodiodes," in the open-access book "Photon Counting."

The chapter is a historical literature review for the development of solid-state photodetection and avalanche multiplication between 1900 and 1969 based on 110+ primary sources that are key in the field over that time.

The author is looking to fill some of the gaps for a later Springer book covering 1900 to 1999, i.e. 100 years of solid-state photodetection with a distinct avalanche focus (with significantly more detail). Edward Fisher is looking for any key papers or patents the readers of this blog think should be included for the 1900-1969 period.

When the full 1900-1999 analysis is finished, Edward aims for it to be the de facto literature reference for the field, as it is often difficult for busy technical researchers to find or read some of the older literature.

"The historical development of technology can inform future innovation, and while theses and review articles attempt to set technologies and methods in context, few can discuss the historical background of a scientific paradigm. In this chapter, the nature of the photon is discussed along with what physical mechanisms allow detection of single-photons using solid-state semiconductor-based technologies. By restricting the scope of this chapter to near-infrared, visible and near-ultraviolet detection we can focus upon the internal photoelectric effect. Likewise, by concentrating on single-photon semiconductor detectors, we can focus upon the carrier-multiplication gain that has allowed sensitivity to approach the single-photon level. This chapter and the references herein aim to provide a historical account and full literature review of key, early developments in the history of photodiodes (PDs), avalanche photodiodes (APDs), single-photon avalanche diodes (SPADs), other Geiger-mode avalanche photodiodes (GM-APDs) and silicon photo-multipliers (Si-PMs).

As there are overlaps with the historical development of the transistor (1940s), we find that development of the p-n junction and the observation of noise from distinct crystal lattice or doping imperfections – called “microplasmas” – were catalysts for innovation. The study of microplasmas, and later dedicated structures acting as known-area, uniform-breakdown artificial microplasmas, allowed the avalanche gain mechanism to be observed, studied and utilised."

Holst Centre and Imec Present Organic Fingerprint Sensor

Friday, June 22, 2018

Pico Presents Zense ToF Camera

NotebookItalia: China-based Pico presents the Zense camera, its first foray into ToF sensing. The depth processing is based on the Rockchip RV1108 with a CEVA XM4 vision IP core:

Yole Forecast on 3D Sensing Market

Yole Developpement publishes "3D Imaging & Sensing 2018" report:

"Apple set the standard for technology and use-case for 3D sensing in consumer. From our initial depiction of the market in March 2017, the main gap is in illumination ASP, which is greater than expected. High expenses in dot and flood illumination VCSELs from Lumentum/II-VI/Finisar, along with the dot illuminator optical assembly from ams, are the biggest technology surprises powering Apple’s $1,000 [iPhone X] smartphone.

Yole Développement (Yole) expects the global 3D imaging & sensing market to expand from $2.1B in 2017 to $18.5B in 2023, at a 44% CAGR. Along with consumer, automotive, industrial, and other high-end markets will also experience a double-digit growth pattern.

The transition from imaging to sensing is happening before our eyes. Despite half-successful attempts like Xbox’s Kinect technology and Leap-Motion hand controllers, 3D sensing is now tracking towards ubiquitousness.

Oppo made the first announcement beginning of the year with Orbbec, while Xiaomi released the Mi 8 explorer edition with Mantis as a technology partner. We expect Huawei to release its own solution soon, probably partnering with ams and Sunny Optical.

...players like Himax are currently paying the price for a lower-performance offering and are struggling to get design-ins beyond AR/VR headsets for Microsoft.

Unlike previous sensing components, the responsibility of system design does not fall on the OEM - instead, a specialist is required, such as the Primesense team that Apple acquired in 2013, or other firms like Mantis, Orbbec, and ams, which want to play the “specialist” role in the new 3D imaging & sensing ecosystem. Such players orchestrate the final solution while allowing room for the best in each sub-component category.

Is 3D imaging & sensing now ripe for disruption? Yole expects it will take at least 2-3 years before any new solution starts dramatically lowering total system cost."
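The quoted 44% CAGR can be checked against the two endpoints. A quick Python sketch of the compound annual growth rate from $2.1B in 2017 to $18.5B in 2023 (our own arithmetic on Yole's figures):

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1.0 / years) - 1.0


# Yole's market sizes in USD billions.
rate = cagr(2.1, 18.5, 2023 - 2017)
print(f"implied CAGR 2017-2023: {rate:.1%}")
```

It gives about 43.7%, which rounds to Yole's 44%.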

Thursday, June 21, 2018

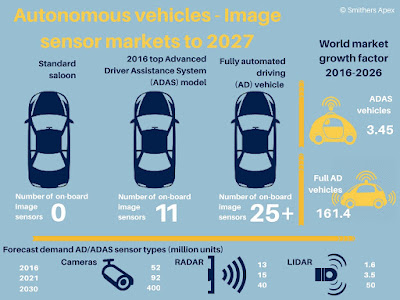

Smithers Report on Technology Challenges for Image Sensors in ADAS & Autonomous Vehicle

Smithers Apex publishes a report "Technology Challenges for Image Sensors in ADAS & Autonomous Vehicle Markets to 2023." A few quotes:

"...as vehicles approach full autonomy, the need for human-friendly colour reproduction becomes redundant, and the quality requirements on the image sensing for computer vision will increase further. A high dynamic range of 140 dB, resolutions of 8 MP for mono vision and at least 2MP for stereo vision cameras, frame rates beyond 60 fps, a sensitivity of several thousand electrons per lux and second are requested.

At the same time the noise performance should ensure a signal to noise ratio of 1 at 1 millilux, and an exposure time of less than 1/30 s. High dynamic range shall be achieved by as few as possible exposures to minimise motion blur. The compensation of the flickering of LED traffic signs and lights as described in the previous section is even more important for level 4 and 5 vehicles."
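The 140 dB figure follows the usual image-sensor convention DR = 20·log10(max/min). A small Python sketch converting it to a linear ratio and to the bit depth a purely linear readout would need; pairing the range floor with the 1 millilux SNR=1 point is our assumption, as the report does not tie the two numbers together:

```python
import math


def ratio_from_db(db: float) -> float:
    """Intensity ratio for a dynamic range quoted as 20*log10(max/min)."""
    return 10 ** (db / 20.0)


def bits_for_ratio(ratio: float) -> int:
    """Minimum linear ADC bits needed to span the ratio at 1 LSB resolution."""
    return math.ceil(math.log2(ratio))


dr = ratio_from_db(140.0)
print(f"140 dB -> {dr:.0e}:1, ~{bits_for_ratio(dr)} bits if digitized linearly")

# Assumption: the bottom of the range sits at 1 millilux.
print(f"with a 1 mlux floor, top of range ~{1e-3 * dr:.0f} lux")
```

A 10,000,000:1 range needs about 24 bits in a single linear readout, which is one reason HDR sensors combine several exposures or use non-linear responses, and why the report stresses keeping the number of exposures low to limit motion blur.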

SWIR Startup TriEye Raises $3m of Seed Money

Globes: Israeli startup TriEye announces the completion of a $3m seed round led by Grove Ventures. TriEye develops SWIR sensors providing autonomous cars heightened visual capabilities in restricted visual conditions at significantly reduced cost. The technology is based on many years of research at the Hebrew University of Jerusalem by Prof. Uriel Levy.

TriEye cofounder and CEO Avi Bakal says, "The company is offering capabilities that in the past were only accessible to defense and aerospace industries and at a minimal cost compared with the past. This capability improves the safety of advanced driver-assistance systems and is a major step forward to the extensive adoption of the technology by the carmakers."

AutoSens Brussels Agenda

AutoSens Brussels, to be held in September 2018, announces its preliminary speaker list. There are quite a few image sensor presentations:

- Introduction to the world of Time-of-Flight for 3D imaging, Albert Theuwissen, Harvest Imaging (Tutorial)

- Do we have a lidar bubble? Panel discussion

- Vehicle perception of humans – what level of image quality is needed to recognise behavioural intentions, Panel discussion

- A review of the latest research in photonics-sensor technologies in automotive, Michael Watts, Analog Photonics

- Automotive HDR imaging – the history and future, Mario Heid, Omnivision

- Self-driving cars and lidar, Simon Verghese, Waymo

- Tier 1 achievements with solid state lidar, Filip Geuens, Xenomatix

- An Approach to realize “Safety Cocoon”, Yuichi Motohashi, Sony

- How AI/Computer Vision affects camera design and SOC design, Marco Jacobs, videantis

- Objective and Application Oriented Characterisation of Image Sensors with EMVA’s 1288 Standard, Bernd Jaehne, EMVA 1288 Chair

- Review of IEEE P2020 developments, Patrick Denny, Valeo

Xintec Unable to Fill 12-inch WLP Line

Digitimes reports that Xintec is having a hard time recovering from the loss of its major investor and customer Omnivision. The company has decided to suspend its 12-inch WLP production line for a year due to disappointing demand from mass-market applications. The 12-inch line workforce will be transferred to 8-inch lines to improve revenue and profit.

Wednesday, June 20, 2018

Tunable Plasmonic Filters Enable Time-Sequential Color Imaging

ACS Photonics paper "Tunable Multispectral Color Sensor with Plasmonic Reflector" by Vladislav Jovanov, Helmut Stiebig, and Dietmar Knipp from Jacobs University Bremen and Institute of Photovoltaics Jülich, Germany proposes plasmonic reflectors for color imaging:

"Vertically integrated color sensors with plasmonic reflectors are realized. The complete color information is detected at each color pixel of the sensor array without using optical filters. The spectral responsivity of the sensor is tuned by the applied electric bias and the design of the plasmonic reflector. By introducing an interlayer between the lossy metal back reflector and the sensor, the reflectivity can be modified over a wide spectral range. The detection principle is demonstrated for a silicon thin film detector prepared on a textured silver back reflector. The sensor can be used for RGB color detection replacing conventional color sensors with optical filters. Combining detectors with different spectral reflectivity of the back reflector allows for the realization of multispectral color sensors covering the visible and the near-infrared spectral range.

To our knowledge for the first time a sensor is presented that combines a spatial color multiplexing scheme (side-by-side arrangement of the individual color channels) used by conventional color sensors with the time multiplexing scheme (sequential read-out of colors) of a vertically integrated sensor."
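
Recovering color from a bias-tuned, time-multiplexed detector can be pictured as a small linear inverse problem. Below is a minimal Python/NumPy sketch, assuming (our assumption, not the paper's device model) that each bias setting weights the red, green and blue content of the light linearly, so readings taken sequentially at several biases can be unmixed by least squares. The `RESPONSE` matrix values and the `reconstruct_rgb` name are hypothetical and purely illustrative.

```python
import numpy as np

# Hypothetical responsivity matrix: one row per bias setting, one column per
# band (R, G, B). The numbers are illustrative, not measured device data.
RESPONSE = np.array([
    [0.10, 0.30, 0.90],
    [0.20, 0.80, 0.50],
    [0.70, 0.60, 0.20],
    [0.90, 0.40, 0.10],
])

def reconstruct_rgb(readings, response=RESPONSE):
    """Estimate (R, G, B) from photocurrents read sequentially at each bias.

    Solves response @ rgb ~= readings in the least-squares sense, which also
    works when there are more bias settings than color channels.
    """
    readings = np.asarray(readings, dtype=np.float64)
    rgb, *_ = np.linalg.lstsq(response, readings, rcond=None)
    return rgb

# Simulate one pixel: true color -> four sequential readings -> recovered color.
true_rgb = np.array([0.2, 0.5, 0.7])
readings = RESPONSE @ true_rgb
print(reconstruct_rgb(readings))
```

With four readings for three unknowns the system is overdetermined, so least squares also averages down some of the read noise.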

Daqri on Importance of Latency in AR/VR Imaging

Daqri Chief Scientist Daniel Wagner publishes an article, "Motion to Photon Latency in AR and VR applications":

Daqri describes its latency optimization achievements: "Overall, what is the final latency we can achieve with such a system? The answer is: It depends. If we consider rendering using the latest 6DOF pose estimates as the last step in our pipeline that produces fully correct augmentations then achieving a latency of ~17ms is our best-case scenario.

However, late warping can correct most noticeable artefacts, so it makes sense to treat it as valid part of the pipeline. Without using forward prediction for late warping, we can achieve a physical latency of 4 milliseconds per color channel in our example system. However, 4 milliseconds is such a short length of time, making forward prediction almost perfect and pushing perceived latency towards zero. Further, we could actually predict beyond 4ms into the future to achieve a negative perceived latency. However, negative latency is just as unpleasant as positive latency, so this would not make sense for our scenario."
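
To make forward prediction concrete, here is a minimal Python sketch, assuming a simple constant-angular-velocity head-motion model (our assumption; the article does not say which predictor Daqri uses). `predict_orientation` extrapolates an orientation quaternion by the expected latency, and `misregistration_deg` estimates the angular error left uncorrected when no prediction is applied. Both function names are hypothetical.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def predict_orientation(q, omega, dt):
    """Extrapolate orientation q by dt seconds, assuming a constant
    body-frame angular velocity omega = (wx, wy, wz) in rad/s."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    if rate * dt < 1e-12:
        return q  # no measurable rotation during dt
    half = rate * dt / 2.0
    s = math.sin(half) / rate  # scales omega into the quaternion's vector part
    dq = (math.cos(half), wx * s, wy * s, wz * s)
    return quat_mul(q, dq)

def misregistration_deg(head_rate_deg_s, latency_s):
    """Angular error left uncorrected if the frame is shown latency_s late."""
    return head_rate_deg_s * latency_s

# Example: head yawing at 100 deg/s, predict 4 ms ahead.
q_now = (1.0, 0.0, 0.0, 0.0)
omega = (0.0, 0.0, math.radians(100.0))
print(predict_orientation(q_now, omega, 0.004))
print(misregistration_deg(100.0, 0.017), misregistration_deg(100.0, 0.004))
```

For a head turning at 100°/s, ~17 ms of unpredicted latency corresponds to about 1.7° of misregistration, versus about 0.4° at 4 ms, which is why a short late-warp interval makes prediction so effective.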

Tuesday, June 19, 2018

Lucid Demos Sony Polarization Sensor

LUCID Vision Labs demos the Sony IMX250MYR polarized color sensor in its Phoenix camera family. The 5 MP GS sensor with 3.45µm pixels and frame rates of up to 24 fps is based on the popular Sony Pregius IMX250 CMOS color sensor, with polarizing filters added on top of the pixels. Four directional polarizing filters (0°, 90°, 45°, and 135°) are arranged over each group of four pixels:
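
With 0°, 45°, 90° and 135° polarizers in each group of four pixels, the linear Stokes parameters, the degree of linear polarization (DoLP) and the angle of linear polarization (AoLP) follow directly. Here is a minimal NumPy sketch; the 2x2 mosaic order in `split_mosaic` is an assumption (check Sony's datasheet), and the color version's additional color filter array is ignored here.

```python
import numpy as np

def split_mosaic(raw):
    """Split a raw polarization mosaic into four angle images.

    Hypothetical 2x2 layout (verify against the sensor datasheet):
        row 0: 90 deg, 45 deg
        row 1: 135 deg, 0 deg
    """
    raw = np.asarray(raw, dtype=np.float64)
    i90, i45 = raw[0::2, 0::2], raw[0::2, 1::2]
    i135, i0 = raw[1::2, 0::2], raw[1::2, 1::2]
    return i0, i45, i90, i135

def dolp_aolp(i0, i45, i90, i135, eps=1e-12):
    """Degree and angle (radians) of linear polarization from four polarizer images."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # 0 vs 90 deg preference
    s2 = i45 - i135                     # 45 vs 135 deg preference
    dolp = np.sqrt(s1 ** 2 + s2 ** 2) / np.maximum(s0, eps)
    aolp = 0.5 * np.arctan2(s2, s1)
    return dolp, aolp

# Fully polarized light at 30 deg, simulated with Malus's law for each polarizer.
theta = np.radians(30.0)
angles = {a: np.cos(np.radians(a) - theta) ** 2 for a in (0, 45, 90, 135)}
raw = np.array([[angles[90], angles[45]], [angles[135], angles[0]]])
dolp, aolp = dolp_aolp(*split_mosaic(raw))
print(dolp, np.degrees(aolp))
```

Each output pixel covers one 2x2 group, so the DoLP/AoLP maps have a quarter of the sensor's pixel count unless the angle images are interpolated first.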

Panasonic Develops 250m-Range APD-based ToF Sensor

Panasonic has developed a ToF image sensor that uses avalanche photodiode (APD) pixels and can capture range images of objects up to 250 m away, even at night with poor visibility (there is no mention of the range in midday sunlight). Target applications include automotive range imaging and wide-area surveillance in the dark.

The ToF pixel includes an APD and an in-pixel circuit that integrates weak input signals to enable 3D range imaging 250 m ahead. The sensor's 250,000-pixel resolution is said to be the world's highest for a sensor based on electron-multiplying pixels. This high integration is achieved by laminating the electron multiplier and the electron storage, and by reducing the APD pixel area.

The key innovative technologies are:

- The area of APD pixels is significantly reduced while the multiplication performance is maintained through the lamination of the multiplier that amplifies photoelectrons and the electron storage that retains electrons. The APD multiplication factor is 10,000.

- Long-range measurement imaging technology
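
Some back-of-the-envelope numbers show why 250 m is demanding. This minimal Python sketch uses textbook physics only; the 1/d² return-signal model for an extended diffuse target is our simplification, not a Panasonic figure. Light needs about 1.67 µs for the 250 m round trip, and the return from 250 m is roughly 100 times weaker than from 25 m, the kind of gap that in-pixel integration and high APD gain are meant to close.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def round_trip_time_s(distance_m):
    """Time for a light pulse to reach a target and return."""
    return 2.0 * distance_m / C

def distance_m(round_trip_s):
    """Target distance for a measured round-trip time."""
    return C * round_trip_s / 2.0

def relative_return(distance, reference_distance):
    """Return signal relative to a reference distance, assuming the
    1/d^2 falloff of an extended diffuse target (a simplification)."""
    return (reference_distance / distance) ** 2

print(f"{round_trip_time_s(250.0) * 1e6:.3f} us")  # round trip for 250 m
print(relative_return(250.0, 25.0))                # 250 m vs 25 m
```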

Trioptics Active Alignment, Assembly and Testing of Camera Modules

Germany-based Trioptics demos Procam, its modular manufacturing line for active alignment, assembly and testing of camera modules in mass production:

Fiat-Chrysler Autonomous Car Relies on 5 LiDARs and 8 Cameras

Fiat-Chrysler's 5-year plan presentation shows its Level 4 autonomous car with 5 LiDARs and 8 cameras. Most of the LiDARs are labeled "mid-range", possibly meaning a range shorter than 200 m in exchange for a lower price:

Monday, June 18, 2018

Gil Amelio on Patent Infringements

Investor's Business Daily publishes a Gil Amelio article telling the story of the Pictos vs Samsung lawsuit:

"A typical small inventive company, Pictos Technologies, was put out of business after Samsung aggressively infringed its intellectual property.

Pictos invented an inexpensive image sensor that could be used in countless applications such as mobile phones and automobile cameras, to name only two. This next-generation Image Sensor was a follow-on to my dozen or so image-sensing patents that helped launch the solid-state image-sensor business years earlier. The Pictos technology, developed after years of investment and design, was protected by a portfolio of patents obtained at substantial cost.

In 2014, Pictos sued Samsung in federal court, alleging that it had "willfully infringed" its intellectual property. After years of costly litigation, the case went to trial, where Pictos lawyers introduced evidence that proved Samsung began as a Pictos customer, secretly copied its engineering designs and production process, and replicated them in Korea. Using our technology and its sizable scale, it went on to dominate this sector of the world electronics market.

Following lengthy litigation, the jury ruled in our favor and awarded substantial damages. The judge then trebled the damages based on "evidence of (Samsung's) conduct at the time of the accused infringement." Please note: Samsung's behavior was so egregious that the judge tripled the jury determination of the infringement costs to us.

That was just the first round, though. The verdict can be overturned on appeal, which, of course, Samsung has filed."

Update: While we are on historical topics, SemiWiki publishes Mentor Graphics CEO Wally Rhines' memories of the early days of CCD and DRAM imagers at Stanford University in the 1960s.

Microsoft Opens Access to HoloLens Cameras

Microsoft opens access to raw video streams from the cameras in its HoloLens AR headset, including the 3D ToF camera:

"The depth camera uses active infrared (IR) illumination to determine depth through time-of-flight. The camera can operate in two modes. The first mode enables high-frequency (30 FPS) near-depth sensing, commonly used for hand tracking, while the other is used for lower-frequency (1-5 FPS) far-depth sensing, currently used by spatial mapping. In addition to depth, this camera also delivers actively illuminated IR images that can be valuable in their own right because they are illuminated from the HoloLens and reasonably unaffected by ambient light."

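Microsoft's description does not say exactly how the HoloLens camera measures time-of-flight. Many ToF depth cameras use continuous-wave modulation with four phase-shifted samples per pixel, so here is a minimal Python sketch of that generic technique. The 20 MHz modulation frequency and the function names are hypothetical, not HoloLens specifications.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def unambiguous_range_m(f_mod_hz):
    """Maximum distance before the measured phase wraps around."""
    return C / (2.0 * f_mod_hz)

def depth_from_four_phase(a0, a1, a2, a3, f_mod_hz):
    """Depth from four correlation samples taken at 0, 90, 180, 270 degrees.

    Assumes a_k = B + M*cos(phi - k*pi/2), where phi is the round-trip phase shift.
    """
    phi = math.atan2(a1 - a3, a0 - a2) % (2.0 * math.pi)
    return C * phi / (4.0 * math.pi * f_mod_hz)

# Simulate a target at 2 m with a hypothetical 20 MHz modulation.
f_mod = 20e6
true_depth = 2.0
phi = 4.0 * math.pi * f_mod * true_depth / C
samples = [1.0 + 0.5 * math.cos(phi - k * math.pi / 2) for k in range(4)]
print(depth_from_four_phase(*samples, f_mod), unambiguous_range_m(f_mod))
```

The unambiguous range c/(2f) reflects a general trade-off: lower modulation frequencies reach farther before the phase wraps, while higher ones give finer depth resolution, which is one reason a ToF camera might use different settings for near and far sensing.
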
Sunday, June 17, 2018

Canon Explores Large Image Sensor Future

Canon publishes an article on its image sensor projects for academic and industrial customers.

The world's largest high-sensitivity CMOS sensor measures ~20 cm square. A 20-cm-square sensor is the largest size that can be manufactured on a 300 mm wafer, and is nearly 40 times the size of a 35 mm full-frame CMOS sensor:

Canon has spent many years working to reduce the pixel size for CMOS sensors, making possible a pixel size of 2.2 µm for a total of approximately 120MP on a single sensor. The APS-H size (approx. 29 x 20 mm) CMOS sensor boasts approximately 7.5 times the number of pixels and 2.6 times the resolution of sensors of the same size featured in existing products. This sensor offers potential for a range of industrial applications, including cameras for shooting images for large-format poster prints, cameras for the image inspection of precision parts, aerospace cameras, and omnidirectional vision cameras.
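
A quick Python check shows the quoted figures are consistent: an approx. 29 x 20 mm APS-H area tiled with 2.2 µm pixels holds roughly 120 million pixels. The sketch ignores border and optical-black pixels and uses the approximate dimensions above, so it is only an estimate.

```python
def pixel_count(width_mm, height_mm, pitch_um):
    """Approximate pixel count for a sensor of the given size and square pixel pitch."""
    pitch_mm = pitch_um / 1000.0
    return (width_mm / pitch_mm) * (height_mm / pitch_mm)

# APS-H (approx. 29 x 20 mm) at a 2.2 um pitch
print(f"{pixel_count(29.0, 20.0, 2.2) / 1e6:.1f} MP")
```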

Saturday, June 16, 2018

Infineon Predicts Autonomous Cars with More Radars than Cameras

Friday, June 15, 2018

TrinamiX Paper in Nature

Nature publishes BASF spin-off TrinamiX paper "Focus-Induced Photoresponse: a novel way to measure distances with photodetectors" by Oili Pekkola, Christoph Lungenschmied, Peter Fejes, Anke Handreck, Wilfried Hermes, Stephan Irle, Christian Lennartz, Christian Schildknecht, Peter Schillen, Patrick Schindler, Robert Send, Sebastian Valouch, Erwin Thiel, and Ingmar Bruder.

"We present the Focus-Induced Photoresponse (FIP) technique, a novel approach to optical distance measurement. It takes advantage of a universally-observed phenomenon in photodetector devices, an irradiance-dependent responsivity. This means that the output from a sensor is not only dependent on the total flux of incident photons, but also on the size of the area in which they fall. If probe light from an object is cast on the detector through a lens, the sensor response depends on how far in or out of focus the object is. We call this the FIP effect. Here we demonstrate how to use the FIP effect to measure the distance to that object. We show that the FIP technique works with different sensor types and materials, as well as visible and near infrared light. The FIP technique operates on a working principle, which is fundamentally different from all established distance measurement methods and hence offers a way to overcome some of their limitations. FIP enables fast optical distance measurements with a simple single-pixel detector layout and minimal computational power. It allows for measurements that are robust to ambient light even outside the wavelength range accessible with silicon.

In this paper, we demonstrated the measurement principle at distances up to 2 m and showed a resolution of below 500 µm at a distance of 50 cm. In the Supplementary Information S7, distance measurements up to 70 m can be found."
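
The abstract says the detector output depends on how large the illuminated spot is. A toy Python model can show why that encodes distance. Everything here is our own assumption rather than TrinamiX's implementation: a thin lens, a geometric blur spot plus a fixed minimum spot size, a hypothetical power-law responsivity with exponent `gamma` < 1, and two detectors at different positions behind the lens whose signal ratio cancels the unknown source power. Parameter values are illustrative only.

```python
import math

def image_distance(f, z):
    """Thin-lens image distance for an object at distance z (same units as f)."""
    return 1.0 / (1.0 / f - 1.0 / z)

def spot_diameter(z, f, aperture, detector_pos, min_spot):
    """Geometric blur-spot diameter on a detector placed detector_pos behind the lens,
    plus a fixed minimum spot standing in for diffraction and aberrations."""
    v = image_distance(f, z)
    return aperture * abs(detector_pos - v) / v + min_spot

def detector_signal(power, spot, gamma):
    """Signal of a detector whose response grows as irradiance**gamma.
    With gamma < 1 the same power gives less signal when tightly focused."""
    area = math.pi * spot ** 2 / 4.0
    return area * (power / area) ** gamma

def fip_quotient(z, power, f, aperture, s1, s2, min_spot, gamma):
    """Ratio of two detector signals; the unknown source power cancels out."""
    a = detector_signal(power, spot_diameter(z, f, aperture, s1, min_spot), gamma)
    b = detector_signal(power, spot_diameter(z, f, aperture, s2, min_spot), gamma)
    return a / b

def estimate_distance(q_measured, z_lo, z_hi, **params):
    """Invert the quotient by bisection, assuming it is monotonic on [z_lo, z_hi]."""
    q_lo = fip_quotient(z_lo, 1.0, **params)
    for _ in range(100):
        z_mid = 0.5 * (z_lo + z_hi)
        q_mid = fip_quotient(z_mid, 1.0, **params)
        if (q_mid - q_measured) * (q_lo - q_measured) > 0:
            z_lo, q_lo = z_mid, q_mid
        else:
            z_hi = z_mid
    return 0.5 * (z_lo + z_hi)

# Illustrative optics (meters): 20 mm lens, 10 mm aperture, detectors at 20.0 and 20.4 mm.
PARAMS = dict(f=0.020, aperture=0.010, s1=0.0200, s2=0.0204, min_spot=1e-5, gamma=0.8)
q = fip_quotient(2.5, 3.7, **PARAMS)  # target at 2.5 m, arbitrary source power
print(estimate_distance(q, 1.1, 5.0, **PARAMS))
```

In this model one detector sits at the infinity focus and the other is focused at about 1 m, so between 1.1 m and 5 m one spot shrinks while the other grows and the quotient changes monotonically, which is what makes the bisection inversion valid.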

F-35 Gets 6 Cameras for Surround View

PRNewswire: Surround view cameras reach the defense industry. Lockheed Martin selects Raytheon to develop and deliver the next-generation Distributed Aperture System (DAS) for the F-35 fighter jet.

The F-35's DAS collects and sends high resolution, real-time imagery to the pilot's helmet from six IR cameras mounted around the aircraft, allowing pilots to see the environment around them – day or night. With the ability to detect and track threats from any angle, the F-35 DAS gives pilots situational awareness of the battlespace.

imec is Back to Film, Organic Film

imec promotes its organic film image sensors:

"We demonstrated a first film measuring 6 by 8 cm – which can check 4 fingers simultaneously – and which has a resolution of 200ppi. The second film – designed for a single fingerprint – has a resolution of 500ppi. This level of accuracy is what would be typical for the FBI to identify someone correctly.

The image sensors detect visible light between 400 and 700 nm that is reflected by the skin. They can also detect light that penetrates the skin before being reflected. This latter feature is of value for detecting a heartbeat, which provides an extra security check.

The fingerprint and palm print sensor is made up of a layer of oxide thin-film transistors with organic photodiodes on top. These photodiodes can then be ‘tuned’ by using a different organic material so that they detect a different wavelength, such as near infrared. This enables the vein pattern in a hand to be visualized, which is even more precise for accurate identification than a palm print.

In addition to this fingerprint scanner based on photodiodes and light, imec and Holst Centre are also working on a scanner that uses thermal sensors (PYCSEL project). Once again a lower layer of oxide thin-film transistors is used. The upper layer is a material that measures electric temperature changes. The fingerprint is then detected indirectly by local variations in temperature changes that correspond with the pattern of the fingerprint. Here again a resolution of 500ppi is achievable."
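
Converting the quoted ppi figures into pixel pitch and array size makes them easier to compare with conventional image sensors. A short Python sketch, assuming square pixels uniformly covering the film (our simplification): 500 ppi corresponds to a 50.8 µm pitch, and a 6 x 8 cm film at 200 ppi needs an array of roughly 472 x 630 pixels, about 0.3 MP.

```python
MM_PER_INCH = 25.4

def pitch_um(ppi):
    """Pixel pitch in micrometers for a given resolution in pixels per inch."""
    return MM_PER_INCH * 1000.0 / ppi

def array_size(width_mm, height_mm, ppi):
    """Approximate pixel array (columns, rows) for a film of the given size."""
    return round(width_mm / MM_PER_INCH * ppi), round(height_mm / MM_PER_INCH * ppi)

print(pitch_um(200), pitch_um(500))  # pitch of the two films
print(array_size(60.0, 80.0, 200))   # 6 x 8 cm film at 200 ppi
```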

Thursday, June 14, 2018

ON Semi Talks about Automotive Pixel Technologies

AutoSens publishes an interview with ON Semi about its "Super Exposing" pixel, which reduces LED flicker, and other ON innovations for the automotive market:
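
Why does exposure length matter for LED flicker? LED traffic lights and headlamps are often driven by pulse-width modulation, so a short exposure can fall entirely between pulses. A minimal Python sketch of the timing argument follows; the 90 Hz rate and 10% duty cycle are hypothetical example values, and the code models only the generic timing, not ON Semi's pixel circuit.

```python
def miss_probability(period_s, on_time_s, exposure_s):
    """Probability that an exposure starting at a uniformly random time
    overlaps no 'on' pulse of a periodic PWM-driven LED.

    The exposure misses only if it fits entirely inside the off interval
    (length period - on_time), so the valid start times span
    period - on_time - exposure out of each period.
    """
    if not 0.0 <= on_time_s <= period_s:
        raise ValueError("on_time_s must lie between 0 and period_s")
    gap = period_s - on_time_s - exposure_s
    return max(0.0, gap / period_s)

period = 1 / 90          # hypothetical 90 Hz PWM
on_time = 0.1 * period   # hypothetical 10% duty cycle
for exposure in (1e-3, 5e-3, 12e-3):
    print(exposure, miss_probability(period, on_time, exposure))
```

With these numbers a 1 ms exposure misses the LED 81% of the time, while any exposure at least as long as the 10 ms off-time always catches part of a pulse. That is the motivation for pixels that can integrate for a long time in bright daylight without saturating.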

Mazda CX-3 SUV Features Nighttime Pedestrian Detection

Nikkei: The Mazda CX-3 compact SUV comes with, as a standard feature, an automatic emergency braking system that supports nighttime pedestrian detection:

"Nighttime pedestrians are detected by the monocular camera. To support nighttime pedestrians, in terms of software, the logic of detecting pedestrians was improved, enhancing the accuracy of recognizing pedestrians at night. Its hardware was also improved to increase the speed of exchanging data between the [Mobileye] EyeQ3 image processing chip and memory."

Wednesday, June 13, 2018

More AutoSens Detroit Interviews

AutoSens publishes more interviews from Detroit:

Xenomatix talks about many design wins for its LiDAR:

Tetravue talks about its technology:

FLIR talks about thermal cameras for automotive applications:

Algolux talks about its ML algorithms:

3D Imaging with PDAF Pixels

OSA Optics Express publishes a paper "Depth extraction with offset pixels" by W. J. Yun, Y. G. Kim, Y. M. Lee, J. Y. Lim, H. J. Kim, M. U. K. Khan, S. Chang, H. S. Park, and C. M. Kyung, KAIST, QiSens, and Kongju National University, Korea.

"Numerous depth extraction techniques have been proposed in the past. However, the utility of these techniques is limited as they typically require multiple imaging units, bulky platforms for computation, cannot achieve high speed and are computationally expensive. To counter the above challenges, a sensor with Offset Pixel Apertures (OPA) has been recently proposed. However, a working system for depth extraction with the OPA sensor has not been discussed. In this paper, we propose the first such system for depth extraction using the OPA sensor. We also propose a dedicated hardware implementation for the proposed system, named as the Depth Map Processor (DMP). The DMP can provide depth at 30 frames per second at 1920 × 1080 resolution with 31 disparity levels. Furthermore, the proposed DMP has low power consumption as for the aforementioned speed and resolution it only requires 290.76 mW. The proposed system makes it an ideal choice for depth extraction systems in constrained environments."

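The abstract does not describe the DMP's matching algorithm, so here is a generic sketch instead, assuming the two offset-aperture sub-images can be treated like a narrow-baseline stereo pair: winner-takes-all block matching with a sum-of-absolute-differences (SAD) cost over 31 disparity levels, the count quoted above. The `sad_disparity` name and the 5x5 window are hypothetical choices, and this is not claimed to be the paper's method.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sad_disparity(left, right, num_disp=31, win=5):
    """Winner-takes-all disparity map using a windowed SAD cost.

    For each pixel, compares left[y, x] with right[y, x - d] for d = 0..num_disp-1
    and returns the d with the lowest sum of absolute differences over a
    win x win window (win should be odd).
    """
    left = np.asarray(left, dtype=np.float64)
    right = np.asarray(right, dtype=np.float64)
    h, w = left.shape
    r = win // 2
    cost = np.full((num_disp, h, w), np.inf)  # invalid shifts stay at +inf
    for d in range(min(num_disp, w)):
        diff = np.abs(left[:, d:] - right[:, : w - d])
        padded = np.pad(diff, r, mode="edge")
        windows = sliding_window_view(padded, (win, win))
        cost[d, :, d:] = windows.sum(axis=(-2, -1))
    return np.argmin(cost, axis=0)

# Synthetic test: a random texture shifted by 7 pixels between the two views.
rng = np.random.default_rng(0)
scene = rng.random((40, 87))
left, right = scene[:, :80], scene[:, 7:]
disp = sad_disparity(left, right)
print(np.unique(disp[:, 10:70]))
```

At 1920 × 1080, 30 fps and 31 disparity levels, a brute-force search like this evaluates about 1.93 billion matching costs per second, which gives a sense of why the authors built dedicated hardware for it.
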
Tuesday, June 12, 2018

NIT Demos Log Sensor with LED Flicker Suppression

New Imaging Technologies publishes a demo of its NSC1701 sensor featuring LED flicker suppression mode:

Depth Sensing: From Exotic to Ubiquitous

Embedded Vision Alliance publishes a video lecture "The Evolution of Depth Sensing: From Exotic to Ubiquitous" delivered by Erik Klaas, CTO of 8tree in September 2017.

ams 48MP 30fps Full-Frame GS Imager Enters Mass Production

BusinessWire: ams announces its CMV50000, a high-speed 48MP global shutter CMOS sensor for machine vision applications, has entered into mass production and is available for purchase in high volumes now.

The CMV50000, which features a 35mm-format 7920 x 6004 array of 4.6µm pixels based on an 8T pixel architecture, operates at 30 fps with 12-bit pixel depth at full resolution or in binned 4K and 8K modes, and even faster, up to 60 fps, with pixel sub-sampling at 4K resolution.

The sensor offers 64dB optical DR at full resolution and up to 68dB in binned 4K mode. The image sensor benefits from the implementation of sophisticated new on-chip noise-reduction circuitry such as black-level clamping, enabling it to capture high-quality images in low-light conditions.
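
The headline numbers can be sanity-checked with a short Python sketch. It assumes square 4.6 µm pixels, uses the 20·log10 convention for dynamic range, and counts only raw pixel payload (no blanking or interface overhead), so real interface bandwidth will be higher. The results: 7920 x 6004 is about 47.55 million pixels (the "48MP" headline), a roughly 36.4 x 27.6 mm active area, about 17.1 Gbit/s of raw 12-bit data at 30 fps, and linear dynamic ranges of about 1585:1 (64 dB) and 2512:1 (68 dB).

```python
def raw_data_rate_bps(width, height, bits_per_pixel, fps):
    """Raw pixel payload in bits per second (no blanking or protocol overhead)."""
    return width * height * bits_per_pixel * fps

def db_to_ratio(db):
    """Convert a dynamic range in dB (20*log10 convention) to a linear ratio."""
    return 10 ** (db / 20.0)

def sensor_size_mm(width, height, pitch_um):
    """Active-area dimensions in mm for a given pixel pitch."""
    return width * pitch_um / 1000.0, height * pitch_um / 1000.0

print(sensor_size_mm(7920, 6004, 4.6))                 # active area, mm
print(raw_data_rate_bps(7920, 6004, 12, 30) / 1e9)     # Gbit/s at full resolution
print(round(db_to_ratio(64)), round(db_to_ratio(68)))  # linear dynamic range
```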

The superior imaging performance of the CMV50000 was recognized earlier in 2018 when it was named the Biggest Breakthrough Development at the Image Sensors Europe Awards 2018.

“During recent months, ams has seen great demand for the CMV50000 from design teams developing new automated optical inspection systems and vision systems for testing flat panel displays,” said Wim Wuyts, Marketing Director for Image Sensors at ams. “The CMV50000 is now fully qualified and available to these manufacturers in production volumes. It is also about to be supported by a full demonstration system for evaluating the sensor’s performance.”

Both the monochrome and color versions of the CMV50000 are available in production volumes now. The per unit pricing is €3,450.

An ams investor presentation dated December 2017 details the company's strategy in imaging:
