Fraunhofer IOSB publishes MDPI paper "Laser Safety Calculations for Imaging Sensors" by Gunnar Ritt.

"This publication presents an approach to adapt the well-known classical eye-related concept of laser safety calculations on camera sensors as general as possible. The difficulty in this approach is that sensors, in contrast to the human eye, consist of a variety of combinations of optics and detectors. Laser safety calculations related to the human eye target terms like Maximum Permissible Exposure (MPE) and Nominal Ocular Hazard Distance (NOHD). The MPE describes the maximum allowed level of irradiation at the cornea of the eye to keep the eye safe from damage. The hazard distance corresponding to the MPE is called NOHD. Recently, a laser safety framework regarding the case of human eye dazzling was suggested. For laser eye dazzle, the quantities Maximum Dazzle Exposure (MDE) and the corresponding hazard distance Nominal Ocular Dazzle Distance (NODD) were introduced. Here, an approach is presented to extend laser safety calculations to camera sensors in an analogous way. The main objective thereby was to establish closed-form equations that are as simple as possible to allow also non-expert users to perform such calculations. This is the first time that such investigations have been carried out for this purpose."

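For readers unfamiliar with the classical eye-related quantities the abstract refers to, here is a minimal sketch of the textbook NOHD relation for a continuous-wave beam with a circular cross-section. It is not the paper's sensor-related equations. The function name `nohd_m` and all numeric inputs (laser power, MPE value, divergence, exit diameter) are illustrative assumptions, not values from the publication.

```python
import math


def nohd_m(power_w, mpe_w_per_m2, divergence_rad, exit_diameter_m=0.0):
    """Classical NOHD in metres for a CW beam (textbook relation).

    The beam diameter grows roughly as exit_diameter + distance * divergence.
    The NOHD is the distance at which the irradiance power / area drops to the MPE.
    """
    if power_w <= 0 or mpe_w_per_m2 <= 0 or divergence_rad <= 0:
        raise ValueError("power, MPE and divergence must be positive")
    # Beam diameter at which the irradiance equals the MPE.
    safe_diameter_m = math.sqrt(4.0 * power_w / (math.pi * mpe_w_per_m2))
    # A beam that is already below the MPE at the exit has no hazard distance.
    return max(0.0, (safe_diameter_m - exit_diameter_m) / divergence_rad)


# Assumed example: 5 mW laser, MPE of 25.4 W/m^2, 1 mrad full-angle
# divergence, 1 mm exit beam diameter (all values hypothetical).
example_nohd = nohd_m(5e-3, 25.4, 1e-3, 1e-3)
print(f"NOHD = {example_nohd:.1f} m")
```

With these assumed inputs the hazard distance comes out at roughly 15 m. The paper's contribution is the analogous set of closed-form equations for camera sensors, which this sketch does not attempt to reproduce.
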
Saturday, August 31, 2019

Friday, August 30, 2019

Why CIS Resolution Change Incurs So Much Latency

An Arizona State University team led by Robert LiKamWa explains why an image sensor resolution change takes so long on Android and iOS systems:

DNN + Compressive Sensing Promises to Increase Video Resolution to 100Gpixel

arXiv.org paper "Compressive Sampling for Array Cameras" by Xuefei Yan, David J. Brady, Jianqiang Wang, Chao Huang, Zian Li, Songsong Yan, Di Liu, and Zhan Ma from Duke University, Nanjing University, and Kunshan Camputer (?) Laboratory says:

"In the 75 year transition from standard definition to 8K, the pixel capacity of video has increased by a factor of 100. Over the same time period, the computer was invented and the processing, communications and storage capacities of digital systems improved by 6-8 orders of magnitude. The failure of video resolution to develop a rate comparable to other information technologies may be attributed to the physical challenge of creating lenses and sensors capable of capturing more than 10 megapixels. Recently, however, parallel and multiscale optical and electronic designs have enabled video capture with resolution in the range of 0.1-10 gigapixels per frame. At 10 to 100 gigapixels, video capacity will have increased by a factor comparable to improvements in other information technologies."

Thursday, August 29, 2019

Interview with Lynred CEO

Security and Defense Business Review publishes an interview with Jean-François Delepau, CEO of Lynred, the company formed by the merger of the Sofradir and ULIS IR businesses. A few quotes:

"Lynred is now the world’s number-two developer of infrared technology in the two fields we serve, and we have an 18% share of the global market. The company boasts 1,000 employees, mostly based in Grenoble, and generates annual revenue of €225 million (43% on the defense market, 34% on the industrial market, 12% on the civil aeronautics and space markets, and 11% on the consumer goods market).

Lynred will pursue a pro-active growth strategy. The military infrared imaging systems market, estimated at $8.5 billion (around €7.6 billion) in 2018, is expected to grow to $14 billion (around €12.5 billion) by 2023. If you extrapolate the data from the industrial and consumer camera market, this market could grow from $2.9 billion (around €2.6 billion) to $4.1 billion (around €3.7 billion) over the same period. This represents potential growth of up to 10% for cameras and systems.

With the government’s support, Lynred is investing €150 million over five years to develop its future generations of infrared detectors. These new IR systems will be designed to meet the needs of autonomous systems for smart buildings (workspace management, energy efficiency), traffic safety, and vehicle cabin comfort. The development work will also encompass very-large-format infrared detectors used in astronomy and space observation and lightweight, compact detectors that can be integrated into portable systems and drones."

Wednesday, August 28, 2019

Waymo LiDAR Stealing Saga Goes On

NYTimes, EETimes, Reuters: While Waymo has settled its lawsuit against Uber over the theft of its LiDAR secrets, the U.S. Department of Justice has filed criminal charges against Anthony Levandowski personally, accusing the former high-ranking Alphabet Inc. engineer of stealing the company’s LiDAR technology before joining rival Uber Technologies Inc. Levandowski has started another self-driving car technology company since leaving Uber in 2017.

In February 2018, Uber agreed to pay $245M worth of its own shares to Waymo to settle the legal dispute.

IC Insights: CIS Market Sets Records

IC Insights: Despite an expected slowdown in growth this year and in 2020, the CMOS sensor market is forecast to continue reaching record-high sales and unit volumes through 2023 with the spread of digital imaging applications offsetting weakness in the global economy and the fallout from the U.S.-China trade war.

IC Insights is forecasting an 11% rise in CMOS sensor shipments in 2019 to a record-high 6.1b units worldwide, followed by a 9% increase in 2020 to 6.6b when the global economy is expected to teeter into recessionary territory, partly because of the tariff-driven trade war between the U.S. and China. In 2018, CMOS image sensor revenue grew 14% to $14.2b after climbing 19% in 2017. CMOS sensor sales have hit new-high levels each year since 2011 and the string of consecutive records is expected to continue through 2023, when sales reach $21.5 billion.

Worldwide shipments of CMOS image sensors also have reached eight consecutive years of all-time high levels since 2011 and those annual records are expected to continue to 2023, when the unit volume hits 9.5b. China accounted for about 39% of all image sensors purchased in 2018 (not counting units bought by companies in other countries for system production in Chinese assembly plants). About 19% of image sensors in 2018 were purchased by companies in the Americas (90% of them in the US.)

Camera cellphones continue to be the largest end-use market for CMOS image sensors, generating 61% of sales and representing 64% of unit shipments in 2018, but other applications will provide greater lift in setting annual market records in the next five years. Automotive systems are forecast to be the fastest growing CMOS sensor application, with dollar sales volume rising by a CAGR of 29.7% to $3.2b in 2023, or 15% of the market’s total sales that year (versus 6% in 2018). After that, the highest sales growth rates in the next five years are expected to be: medical/scientific systems (a CAGR of 22.7% to $1.2b); security cameras (a CAGR of 19.5% to $2.0b); industrial, including robots and the Internet of Things (a CAGR of 16.1% to $1.8b); and toys and games, including consumer-class virtual/augmented reality (a CAGR of 15.1% to $172M).

CMOS sensor sales for cellphones are forecast to grow by a CAGR of just 2.6% to $9.8b in 2023, or about 45% of the market total versus 61% in 2018 ($8.6b). Revenues for CMOS sensors in PCs and tablets are expected to rise by a CAGR of 5.6% to $990M in 2023, while sensor sales for stand-alone digital cameras (still-picture photography and video) are projected to grow by a CAGR of only 1.0% to $1.1b in the next five years.
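
The CAGR figures above can be cross-checked with a few lines of arithmetic. The sketch below assumes the forecast window is the five years from 2018 to 2023; the helper names `cagr` and `project` are made up for illustration, and the overall market CAGR it derives is computed here from the quoted totals, not a number stated by IC Insights.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Value after compounding `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


YEARS = 5  # assumed window: 2018 -> 2023

# Cellphone sensors: $8.6b in 2018 growing at a 2.6% CAGR.
cellphone_2023 = project(8.6, 0.026, YEARS)

# Total CMOS sensor market: $14.2b in 2018 to $21.5b in 2023.
total_cagr = cagr(14.2, 21.5, YEARS)

print(f"Cellphone sales in 2023: ${cellphone_2023:.1f}b")
print(f"Implied total-market CAGR: {total_cagr * 100:.1f}%")
```

The projected cellphone figure lands close to the quoted $9.8b, and the totals imply an overall market CAGR a little under 9%, which sits between the slow cellphone segment and the fast-growing automotive one.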

Sony and Yamaha Autonomous Vehicle

TechXplore: Sony and Yamaha Motor unveil SC-1 Sociable Cart, promoted as "a new mobility experience" and based on Sony sensors. There are five 35 mm full-frame CMOS sensors (four directions around the vehicle and one in the vehicle) and two ISX019 1/3.8-inch CMOS sensor embedded cameras.

The image sensors are used to capture surroundings on HD displays in the area where windows would otherwise be. A ToF-based mixed reality technology developed by Sony superimposes computer graphics onto the surroundings on the monitor. "This turns the area that used to be taken up by windows, where passengers could only see the scenery, into an entertainment area."

According to Sony, "analyzing the images obtained via the image sensor with artificial intelligence (AI) enables the information being streamed to be interactive. The AI can determine the attributes (age, gender, etc.) of people outside the vehicle and optimize ads and other streaming info accordingly."

Tuesday, August 27, 2019

CMOS Sensors for Kids

BBC Teach publishes a video in which Professor Danielle George explains how a digital camera’s CMOS sensor captures an image, using balls and buckets to represent photons, electrons and capacitors in an interactive demonstration with four children. Unfortunately, the video is only available in the UK and Crown Dependencies.

Monday, August 26, 2019

Convergence of Electronic Memory and Optical Sensor

Research Journal publishes a review paper "2D Materials Based Optoelectronic Memory: Convergence of Electronic Memory and Optical Sensor" by Feichi Zhou, Jiewei Chen, Xiaoming Tao, Xinran Wang, and Yang Chai from Hong Kong Polytechnic University and Nanjing University.

"a device integration with optical sensing and data storage and processing is highly demanded for future energy-efficient and miniaturized electronic system. In this paper, we overview the state-of-the-art optoelectronic random-access memories (ORAMs) based on 2D materials, as well as ORAM synaptic devices and their applications in neural network and image processing. The ORAM devices potentially enable direct storage/processing of sensory data from external environment. We also provide perspectives on possible directions of other neuromorphic sensor design (e.g., auditory and olfactory) based on 2D materials towards the future smart electronic systems for artificial intelligence."

Sunday, August 25, 2019

LiDAR Startup Oryx Vision Shuts Down

CTech: Israeli LiDAR startup Oryx Vision shuts down. Over the past 3 years, the company has raised $67M and is now in the process of returning the remaining $40M to its investors.

The field has changed immensely since the company started its operations, and all the players in the field of LiDAR now understand that autonomous vehicles will take more time to become mainstream than was originally thought, says Oryx CEO Rani Wellingstein: "Currently, the architecture of the autonomous vehicle is simply not converging, so a venture-backed company will not be able to justify the investment that will still be needed."

The company founders VP R&D David Ben-Bassat and CEO Rani Wellingstein tried to sell Oryx, but were not successful. "There was a lot of deliberation and investors were prepared to keep going, but we saw that LIDAR was becoming a game of giants and as a small company, it would be difficult to continue operating and return investments."

Update: A 2016 LinkedIn presentation talks about Oryx technology:

AutoSens published a short company video about a year ago:

Update #2: Globes reports about a disagreement between the Oryx founders:

Over the past year, Wellingstein advocated shutting down Oryx in opposition to Ben-Bassat, because he believed that there was no point in continuing to operate in a market experiencing a slowdown. Ben-Bassat believed that the company could continue operating on a leaner format and keep the investors from losing money on their investment, but now realizes that it will take longer than he originally thought. "The diagnosis is correct. The market is behind schedule and shrinking. The situation now is not the same as it was in 2016, and I really think that there was hype here. There was a bubble, and it hasn't finished deflating. We're barely a third of the way there," Ben-Bassat added.

"I left the company three months ago because of material disagreements with Ran. I was not a party to the decision to close the company down," Ben-Bassat told "Globes." "I believed, and still believe, that there is an opportunity for other options for the company." No comment was available from Wellingstein.

Face Recognition Q&A

New York University School of Law Professor Barry Friedman and Moji Solgi, VP of AI and Machine Learning at Axon Enterprise, present a Q&A conversation between a face recognition policy analyst and a technologist. A few quotes:

Q: How accurate is FR? We’ve heard differing accounts of the state of the technology — some experts say it’s nowhere near ready for field deployment, and yet we hear stories both internationally and locally about FR being used in a variety of ways.

Short answer: We are getting closer every year, but we need at least two orders of magnitude reduction in error rates to make the technology feasible for real-time applications with uncooperative subjects such as in body-worn cameras.

Q: When companies say that their product should be set to only provide a match at a “99%” accuracy level, what does that mean?

Short answer: Such metrics are meant as marketing tools and are meaningless for all practical purposes.

Q: How do FR systems compare with human face recognition? In other words, can computers do a better job than human in recognizing faces?

Short answer: They are far behind for most cases, but many misguiding headlines (the oldest that we could find was from 2006) have claimed FR has surpassed human-level performance.

Saturday, August 24, 2019

LiDAR Approaches Overview

Universitat Politècnica de Catalunya, Spain, publishes a paper "An overview of imaging lidar sensors for autonomous vehicles" by Santiago Royo and Maria Ballesta.

"Imaging lidars are one of the hottest topics in the optronics industry. The need to sense the surroundings of every autonomous vehicle has pushed forward a career to decide the final solution to be implemented. The diversity of state-of-the art approaches to the solution brings, however, a large uncertainty towards such decision. This results often in contradictory claims from different manufacturers and developers. Within this paper we intend to provide an introductory overview of the technology linked to imaging lidars for autonomous vehicles. We start with the main single-point measurement principles, and then present the different imaging strategies implemented in the different solutions. An overview of the main components most frequently used in practice is also presented. Finally, a brief section on pending issues for lidar development has been included, in order to discuss some of the problems which still need to be solved before an efficient final implementation. Beyond this introduction, the reader is provided with a detailed bibliography containing both relevant books and state of the art papers."

Friday, August 23, 2019

AUO Presents Large Area Optical Fingerprint Sensor Embedded in LCD Display

Devicespecifications: Taiwan-based AU Optronics unveils a 6-inch full-screen optical in-cell fingerprint LTPS LCD, the world's first of its kind to have an optical sensor installed within the LCD structure. Equipped with AHVA (Advanced Hyper-Viewing Angle) technology, full HD+ (1080 x 2160) resolution, and a high pixel density of 403 PPI, the panel has a 30 ms sensor response time for accurate fingerprint sensing. For identification purposes such as law enforcement and customs inspection, AUO’s 4.4-inch fingerprint sensor module features high resolution (1600 x 1500) and a high pixel density of 503 PPI. Its large sensor area allows detection of multiple fingers, enhancing both accuracy and security. The module can produce accurate images outdoors even under strong sunlight.
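
The quoted pixel densities can be sanity-checked from the resolutions and diagonals. The sketch below assumes square pixels and takes the nominal diagonals (6 inch and 4.4 inch) at face value; the helper name `ppi` is an illustrative choice.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch along the diagonal, assuming square pixels."""
    return math.hypot(width_px, height_px) / diagonal_in


# 6-inch full HD+ panel (1080 x 2160).
panel_ppi = ppi(1080, 2160, 6.0)

# 4.4-inch fingerprint sensor module (1600 x 1500).
module_ppi = ppi(1600, 1500, 4.4)

print(f"Panel:  {panel_ppi:.1f} PPI")
print(f"Module: {module_ppi:.1f} PPI")
```

Both results come out within a few PPI of the announced 403 and 503 figures. The small gaps may simply reflect rounding of the nominal diagonal sizes.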

Samsung Presents Green-Selective Organic PD

OSA Optics Express publishes Samsung paper "Green-light-selective organic photodiodes for full-color imaging" by Gae Hwang Lee, Xavier Bulliard, Sungyoung Yun, Dong-Seok Leem, Kyung-Bae Park, Kwang-Hee Lee, Chul-Joon Heo, In-Sun Jung, Jung-Hwa Kim, Yeong Suk Choi, Seon-Jeong Lim, and Yong Wan Jin.

"OPDs using narrowband organic semiconductors in the active layers are highly promising candidates for novel stacked-type full-color photodetectors or image sensors [11–17]. In a stacked-type architecture, the light detection area is increased and thanks to the organic layer, the spectral response can be controlled without the use of a top filter. Recently, highly attractive filterless narrowband organic photodetectors based on charge collection narrowing for tuning the internal quantum efficiency (IQE) have been reported [18,19], but this concept might be hardly applied to real full-color imaging due to the significant mismatch between the absorption spectra and the external quantum efficiency (EQE) spectra of the devices as well as the low EQEs. To improve the EQE of OPDs, in addition to the development of narrowband and high absorption organic semi-conductors, other optical manipulations can be brought to the device.

...we presented a high-performing green-selective OPD that reached an EQE over 70% at an operating voltage of 3 V, while the dark current was only 6 e/s/μm². Those performances result from the use of newly synthesized dipolar p-type compounds having high absorption coefficient and from light manipulation within the device."
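
To compare the quoted dark current with figures given in other units, a short conversion sketch may help. It converts e/s/μm² into A/cm² and into dark electrons per pixel per frame. The 1.0 μm pixel pitch and the 1/30 s exposure are hypothetical values chosen only for illustration, as are the function names.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # coulombs per electron
UM2_PER_CM2 = 1e8                      # square micrometres in one square centimetre


def dark_current_a_per_cm2(e_per_s_per_um2):
    """Convert a dark current density from e/s/um^2 to A/cm^2."""
    return e_per_s_per_um2 * ELEMENTARY_CHARGE_C * UM2_PER_CM2


def dark_electrons_per_pixel(e_per_s_per_um2, pixel_pitch_um, exposure_s):
    """Dark electrons collected by one square pixel during one exposure."""
    return e_per_s_per_um2 * pixel_pitch_um ** 2 * exposure_s


density = dark_current_a_per_cm2(6.0)
# Hypothetical pixel: 1.0 um pitch, 1/30 s exposure.
per_pixel = dark_electrons_per_pixel(6.0, 1.0, 1.0 / 30.0)

print(f"{density:.2e} A/cm^2")
print(f"{per_pixel:.2f} e- per pixel per frame")
```

Under these assumptions, 6 e/s/μm² corresponds to roughly 0.1 nA/cm², or about 0.2 dark electrons per frame for the assumed pixel.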

Thursday, August 22, 2019

ResearchInChina on Automotive Vision Difficulties

ResearchInChina publishes quite a long list of Toyota camera-based Pre-collision System (PCS) difficulties in various situations:

Toyota’s Pre-Collision System (PCS) uses an in-vehicle camera and laser to detect pedestrians and other vehicles in front of the vehicle. If it determines that a frontal collision is possible, the system prompts the driver with audio and visual alerts to take action and avoid it. If the driver notices the potential collision and applies the brakes, the Pre-Collision System with Pedestrian Detection (PCS w/PD) may apply additional force using Brake Assist (BA). If the driver fails to brake in time, it may automatically apply the brakes to reduce the vehicle’s speed, helping to minimize the likelihood of a frontal collision or reduce its severity.

ADAS suppliers and OEMs are working together on product and technology development to make breakthroughs in the many scenarios, listed below, where the system does not apply, so that ADAS can improve and become safer. All players still have a long way to go before autonomous driving comes true.

In some situations (such as the following), a vehicle/pedestrian may not be detected by the radar and camera sensors, thus preventing the system from operating properly:

- When an oncoming vehicle approaches

- When the preceding vehicle is a motorcycle or a bicycle

- When approaching the side or front of a vehicle

- If a preceding vehicle has a small rear end, such as an unloaded truck

- If a preceding vehicle has a low rear end, such as a low bed trailer

- When the preceding vehicle has high ground clearance

- When a preceding vehicle is carrying a load which protrudes past its rear bumper

- If a vehicle ahead is irregularly shaped, such as a tractor or sidecar

- If the sun or other light is shining directly on the vehicle ahead

- If a vehicle cuts in front of your vehicle or emerges from beside a vehicle

- If a preceding vehicle ahead makes an abrupt maneuver (such as sudden swerving, acceleration or deceleration)

- When a sudden cut-in occurs behind a preceding vehicle

- When a preceding vehicle is not right in front of your vehicle

- When driving in bad weather such as heavy rain, fog, snow or a sandstorm

- When the vehicle is hit by water, snow, dust, etc. from a vehicle ahead

- When driving through steam or smoke

- When the amount of light changes dramatically, such as at a tunnel exit/entrance

- When a very bright light, such as the sun or the headlights of an oncoming vehicle, shines directly into the camera sensor

- When driving in low light (dusk, dawn, etc.) or when driving without headlights at night or in a tunnel

- After the hybrid system has started and the vehicle has not been driven for a certain period of time

- While making a left/right turn and within a few seconds after making a left/right turn

- While driving on a curve, and within a few seconds after driving on a curve

- If your vehicle is skidding

- If the front of the vehicle is raised or lowered

- If the wheels are misaligned

- If the camera sensor is blocked (by a wiper blade, etc.)

- If your vehicle is wobbling

- If your vehicle is being driven at extremely high speeds

- While driving up or down a slope

- When the camera sensor or radar sensor is misaligned

PCS should be disabled in the following circumstances, in which the radar and camera sensors may not recognize a pedestrian:

- When a pedestrian is 1m or shorter or 2m or taller

- When a pedestrian wears oversized clothing (a rain coat, long skirt, etc.), obscuring the pedestrian’s silhouette

- When a pedestrian carries large baggage, holds an umbrella, etc., hiding part of the body

- When a pedestrian leans forward or squats

- When a pedestrian pushes a pram, wheelchair, bicycle or other vehicle

- When pedestrians are walking in a group or are close together

- When a pedestrian is in white that reflects sunlight and looks extremely bright

- When a pedestrian is in the darkness such as at night or while in a tunnel

- When a pedestrian wears clothing whose brightness/color is similar to the scenery and blends into the background

- When a pedestrian is staying close to or walking alongside a wall, fence, guardrail, vehicle or other obstacle

- When a pedestrian is walking on top of metal on the road surface

- When a pedestrian walks fast

- When a pedestrian abruptly changes walking speed

- When a pedestrian runs out from behind a vehicle or a large object

- When a pedestrian is very close to a side (external rearview mirror) of the vehicle

Sony vs Top 14 Semiconductor Companies

IC Insights: Sony is the only top-15 semiconductor supplier to register YoY growth in 1H19. In total, the top-15 semiconductor companies’ sales dropped by 18% in 1H19 compared to 1H18, 4 percentage points worse than the total worldwide semiconductor industry’s 1H19/1H18 decline of 14%. Most of Sony’s semiconductor sales come from CMOS image sensors.

Wednesday, August 21, 2019

Automotive News: Porsche-Trieye, Koito-Daihatsu

Globes: Porsche has invested in Israeli SWIR sensor startup Trieye. “We see great potential in this sensor technology that paves the way for the next generation of driver assistance systems and autonomous driving functions. SWIR can be a key element: it offers enhanced safety at a competitive price,” says Michael Steiner, Member of the Executive Board for R&D at Porsche AG.

Porsche’s $1.5M investment is part of a Series A round extension from $17M to $19M. TriEye's SWIR technology is CMOS-based and is said to enable scalable mass production of SWIR sensors and to reduce the cost by a factor of 1,000 compared to current InGaAs-based technology. As a result, the company can produce an affordable HD SWIR camera in a compact format, facilitating easy in-vehicle mounting behind the car’s windshield.

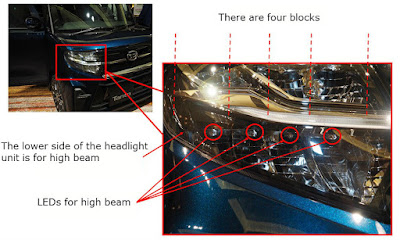

Nikkei: Daihatsu’s low-cost car Tanto, released on July 9, 2019, features adaptive headlight technology from Koito. This is the first appearance of such a technology in a low-cost car, probably signalling the beginning of broad market adoption:

"When a light-distribution-changeable headlight detects an oncoming or preceding vehicle at the time of using high beam, it blocks part of light so that the light is not directed at the area in which the vehicle exists.

In general, it uses multiple horizontally-arranged LED chips for high beam and controls light distribution by turning off LED light that is directed at an area to which light should not be applied.

With a stereo camera set up in the location of the rear-view mirror, it recognizes oncoming and preceding vehicles. By recognizing the color and intensity of light captured by the camera, it judges whether the source of the light is a headlight or taillight.

When it recognizes a vehicle, LEDs irradiating the area of the vehicle are turned off. It can recognize a headlight about 500m (approx 0.31 miles) away."
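The segment-switching behaviour in the Nikkei description can be sketched as follows. This is a minimal illustration, not Koito's implementation: the function name, the number of LED segments, the horizontal field of view, and the idea that the camera reports each vehicle as a (left, right) angular span are all assumptions made for the example.

```python
def high_beam_mask(num_segments, fov_deg, vehicle_spans_deg):
    """Return one on/off flag per horizontally arranged LED segment.

    Segments evenly tile the range [-fov_deg/2, +fov_deg/2].
    vehicle_spans_deg is a list of (left, right) angles in degrees
    for each recognized oncoming or preceding vehicle (hypothetical input).
    """
    seg_width = fov_deg / num_segments
    left_edge = -fov_deg / 2
    states = []
    for i in range(num_segments):
        seg_left = left_edge + i * seg_width
        seg_right = seg_left + seg_width
        # Turn the segment off if it overlaps any detected vehicle.
        overlaps = any(seg_left < right and left < seg_right
                       for left, right in vehicle_spans_deg)
        states.append(not overlaps)
    return states


# One vehicle spanning -3 to +2 degrees, 8 segments over a 40 degree field.
mask = high_beam_mask(8, 40.0, [(-3.0, 2.0)])
```

With these example numbers only the two central segments are switched off, while the rest of the high beam stays lit.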

Cambridge Mechatronics Extends ToF Range by 5-10x

AF and OIS actuators company Cambridge Mechatronics Ltd. (CML) comes up with an interesting statement:

"CML has developed technology capable of extending the range of ToF depth sensing for next-generation AR experiences.

Because current technology can only detect objects at a maximum distance of around two metres, its usage in smartphones is limited to photographic enhancements and a small number of Augmented Reality (AR) applications. However, for widespread adoption, these experiences should be enriched by significantly increasing the working range.

CML has developed technology that is now ready to be licensed capable of increasing this range of interaction to ten metres to unlock the full potential of the smartphone AR market."

And another statement:

"Mobile AR uses sensors to create a depth map of the surrounding landscape in order to overlay images on top of the real world. Current 3D sensors have a limited range of interaction of around 4 metres. CML is developing SMA actuator technology to improve the range and accuracy of augmented experiences tenfold, providing a more immersive experience."

Tuesday, August 20, 2019

Smartsens Partners with MEMS Drive to Deliver Chip-Level OIS

PRNewswire: SmartSens has signed a cooperation agreement with MEMS image stabilization company MEMS Drive. The two will collaborate on a series of research projects in the fields of CMOS sensing chip and chip-level OIS technologies to open up new areas of applications.

Through this collaboration, SmartSens now introduces chip-level anti-vibration technology directly into CMOS sensors, made available in non-mobile applications, such as security and machine vision. In comparison with conventional OIS technology, sensors with chip-level stabilization offer higher simplicity in engineering, without losing any of the robust capabilities unique to OIS in traditional VCMs. Additionally, these sensors will feature added stabilization control to the rotation of the sensor, achieving optimal stabilization with 5 axes. For applications in AI-enabled systems, which often require HDR imaging, high frame rate video capture, or sensing in ultra-low light conditions, SmartSens will be able to provide a solution in the form of efficient stabilization.

Besides its conventional application in mobile cameras, image stabilization is equally important for application in non-mobile areas such as security monitoring, AI machine vision and autonomous vehicles. As an example of the importance of video capturing chips in the field of AI machine vision, CMOS image sensors need to be able to respond to factors such as uneven roads and air turbulence that could result in blurred images. This requires that the image sensor itself possesses exceptional stabilization capabilities, in order to increase efficiency in both the identification as well as the overall AI system.

SmartSens CMO Chris Yiu expresses her optimism, saying, "Optical image stabilizing technology is one of the hottest areas of research and development in the fields of DSLR and mobile cameras, and is unprecedented in non-mobile applications such as AI video capturing. SmartSens's collaboration with MEMS Drive in the area of non-mobile image stabilization opens up new possibilities in this field. SmartSens and MEMS Drive are both companies that rely on innovation to drive growth, and we are very pleased to use this partnership to bring forth further innovation in the field of image sensing technology."

MEMS Drive CEO Colin Kwan notes, "Since its founding, MEMS Drive has dedicated itself to replacing the limited voice coil motor (VCM) technology with its own innovative MEMS OIS technology. Our close collaboration with SmartSens will further improve efficiency of MEMS OIS develop image stabilization for non-mobile fields, and together achieve many more technological breakthroughs."

Artilux Ge-on-Si Imager Info

Artilux kindly agreed to answer some of my questions on their technology and progress:

Q: What is your QE vs wavelength?

A: "For QE, it is a rather smooth transition from 70%@940nm to 50%@1550nm, and we are working on further optimization at wavelengths that silicon cannot detect."
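As a rough cross-check, the quoted QE figures can be converted to responsivity using R = QE·q·λ/(h·c). The sketch below assumes the quoted values are external QE with no internal gain, which the answer does not state, and the function name is illustrative.

```python
Q_E = 1.602176634e-19      # elementary charge, C
H = 6.62607015e-34         # Planck constant, J*s
C = 299_792_458.0          # speed of light, m/s


def responsivity_a_per_w(qe, wavelength_nm):
    """Photodiode responsivity in A/W for a given QE (0..1) and wavelength."""
    wavelength_m = wavelength_nm * 1e-9
    return qe * Q_E * wavelength_m / (H * C)


r_940 = responsivity_a_per_w(0.70, 940)    # about 0.53 A/W
r_1550 = responsivity_a_per_w(0.50, 1550)  # about 0.63 A/W
```

Under these assumptions the responsivity at 1550nm comes out slightly higher than at 940nm despite the lower QE, because each watt of longer-wavelength light carries more photons.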

Q: What is your imager spatial resolution, pixel size, and dark current?

A: "For pitch, resolution, and Idark vs temperature, what we can disclose now is that we have been working with a few LiDAR companies to accommodate different pitch and resolution requirements, as well as the dark current vs temperature trend up to 125C. As for the consumer products, we will have a more complete product portfolio line-up over the following quarters."

Monday, August 19, 2019

ON Semi Presentation on SPAD Sensors

AutoSens publishes ON Semi - Sensl presentation "The Next Generation of SPAD Arrays for Automotive LiDAR" by Wade Appelman, VP of Lidar Technology:

Sunday, August 18, 2019

Thesis on Small Size Stacked SPAD Imagers

Edinburgh University publishes a thesis "Miniature high dynamic range time-resolved CMOS SPAD image sensors" by Tarek Al Abbas that explores small-pixel-pitch SPAD sensors:

"The goal of this research is to explore the hypothesis that given the state of the art CMOS nodes and fabrication technologies, it is possible to design miniature SPAD image sensors for time-resolved applications with a small pixel pitch while maintaining both sensitivity and built-in functionality.

Three key approaches are pursued to that purpose: leveraging the innate area reduction of logic gates and finer design rules of advanced CMOS nodes to balance the pixel’s fill factor and processing capability, smarter pixel designs with configurable functionality and novel system architectures that lift the processing burden off the pixel array and mediate data flow.

Two pathfinder SPAD image sensors were designed and fabricated: a 96 × 40 planar front side illuminated (FSI) sensor with 66% fill factor at 8.25μm pixel pitch in an industrialised 40nm process and a 128 × 120 3D-stacked backside illuminated (BSI) sensor with 45% fill factor at 7.83μm pixel pitch. Both designs rely on a digital, configurable, 12-bit ripple counter pixel allowing for time-gated shot noise limited photon counting. The FSI sensor was operated as a quanta image sensor (QIS) achieving an extended dynamic range in excess of 100dB, utilising triple exposure windows and in-pixel data compression which reduces data rates by a factor of 3.75×. The stacked sensor is the first demonstration of a wafer scale SPAD imaging array with a 1-to-1 hybrid bond connection.

Characterisation results of the detector and sensor performance are presented. Two other time-resolved 3D-stacked BSI SPAD image sensor architectures are proposed. The first is a fully integrated 5-wire interface system on chip (SoC), with built-in power management and off-focal plane data processing and storage for high dynamic range as well as autonomous video rate operation. Preliminary images and bring-up results of the fabricated 2mm² sensor are shown. The second is a highly configurable design capable of simultaneous multi-bit oversampled imaging and programmable region of interest (ROI) time correlated single photon counting (TCSPC) with on-chip histogram generation. The 6.48μm pitch array has been submitted for fabrication. In-depth design details of both architectures are discussed."

Thanks to RH for the link!
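A few of the figures in the abstract can be put in perspective with simple arithmetic. The sketch below is not taken from the thesis: the helper names are made up, and the 20·log10 convention for expressing a count ratio in dB is an assumption.

```python
import math


def pixel_count(cols, rows):
    return cols * rows


def active_area_um2(pitch_um, fill_factor):
    """Photosensitive area per pixel, assuming a square pixel."""
    return fill_factor * pitch_um ** 2


def counter_range_db(bits):
    """Ratio of the largest count to a single count, in dB (20*log10 assumed)."""
    return 20 * math.log10(2 ** bits - 1)


fsi_pixels = pixel_count(96, 40)          # 3840
bsi_pixels = pixel_count(128, 120)        # 15360
fsi_area = active_area_um2(8.25, 0.66)    # about 44.9 um^2
bsi_area = active_area_um2(7.83, 0.45)    # about 27.6 um^2
single_exposure_db = counter_range_db(12) # about 72 dB
```

Under these assumptions a single 12-bit exposure spans roughly 72dB, which is consistent with the abstract's use of triple exposure windows to exceed 100dB.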

Saturday, August 17, 2019

Adasky Explains Why Visual World is Confusing for AI

Adasky's presentation at Autosens Detroit 2019 shows that visible-light images can sometimes confuse AI. A thermal camera is claimed to resolve these ambiguities:

Friday, August 16, 2019

LiDAR News: Velodyne, Aeye, Livox, Robosense, Hesai

BusinessWire: Velodyne introduced the Puck 32MR, a cost-effective perception solution for low speed autonomous markets including industrial vehicles, robotics, shuttles and unmanned aerial vehicles (UAVs). The Puck 32MR has a range of 120m and a 40-deg vertical FoV.

“We are proud to announce the Puck 32MR as the latest addition to our broad array of lidar products,” said Anand Gopalan, CTO of Velodyne Lidar. “Velodyne continues to innovate lidar technologies that empower autonomous solutions on a global scale. This product fills a need for an affordable mid-range sensor, which our customers expressed, and we took to heart. We strive to meet our customers’ needs, and the Puck 32MR is another example of our versatility within an evolving industry.”

The Puck 32MR is designed for power-efficiency to extend vehicle operating time within broad temperature and environmental ranges without the need for active cooling. The sensor uses proven 905nm Class 1 eye-safe lasers and is assembled in Velodyne’s state-of-the-art manufacturing facility. The Puck 32MR is designed for scalability and priced attractively for volume customers, although the exact price is not announced.

Aeye publishes its corporate promotional video:

Autosens publishes Aeye presentation "RGBD based deep neural networks for road obstacle detection and classification:"

Livox explains how it has achieved the low cost of its LiDARs:

"Traditionally, high-performance mechanical LiDAR products usually demand highly-skilled personnel and are therefore prohibitively expensive and in short supply. To encourage the adoption of LiDAR technology in a number of different industries ranging from 3D mapping and surveying to robotics and engineering, Livox Mid-40/Mid-100 is developed with cost-efficiency in mind while still maintaining superior performance. The mass production of Livox-40 has reached hundreds daily.

Instead of using expensive laser emitters or immature MEMS scanners, Mid-40/Mid-100 adopts lower cost semiconductor components for light generation and detection. The entire optical system, including the scanning units, uses proven and readily available optical components such as those employed in the optical lens industry. This sensor also introduces a uniquely-designed low cost signal acquisition method to achieve superior performance. All these factors contribute to an accessible price point - $599 for a single unit of Mid-40.

Livox Mid-40/Mid-100 adopts a large aperture refractive scanning method that utilizes a coaxial design. This approach uses far fewer laser detector pairs, yet maintains the high point density and detection distances. This design dramatically reduces the difficulty of optical alignment during production and enable significant production yield increase."

BusinessWire, Law.com, Quartz: Velodyne files patent infringement complaints against Robosense (AKA Suteng) and Hesai with the US District Court for the Northern District of California. Velodyne said the two Chinese companies have “threatened Velodyne and its business” by copying its LiDAR technology.

"Although styled in some instances as “solid state” devices, the Robosense Accused Products include, literally or under the doctrine of equivalents, a rotary component configured to rotate the plurality of laser emitters and the plurality of avalanche photodiode detectors at a speed of at least 200 RPM. For example, the RS-LiDAR-16 device rotates at a rate of 300 to 1200 RPM. The laser channels and APDs are attached to a rotating motor inside the LiDAR housing."

Thursday, August 15, 2019

Artilux Presents its GeSi 3D Sensing Tech Featuring 50% QE @ 1550nm

PRNewswire, CEChina, EEWorld: After first unveiling its GeSi sensors in October 2018 and presenting them at IEDM 2018, Artilux formally announces its new Explore Series for wide spectrum 3D sensing. By operating at longer wavelength light than conventional solutions, the new Explore Series delivers exceptional accuracy, reduces the risk of eye damage and minimizes sunlight interference, enabling a consistent outdoor-indoor user experience. The breakthrough is based on a new GeSi technology platform developed by Artilux in cooperation with TSMC.

Through a series of technological breakthroughs, ranging from advanced material, sensor design to system and algorithm, Artilux says it has redefined the performance of 3D sensing. Current 3D sensing technology from other companies usually works at wavelengths less than 1µm, typically 850nm or 940nm. These wavelengths have two major drawbacks: firstly, poor outdoor performance due to interference from sunlight, and secondly a potential risk of irreparably damaging eyesight with misused or malfunctioning lasers, since the human retina can easily absorb laser energy at such wavelengths. Existing attempts to extend the spectrum to wavelengths above 1µm have suffered from poor QE, falling drastically from around 30% at 940nm to 0% for wavelength 1µm and above.

Artilux and TSMC integrate GeSi as the light absorption material with CMOS technology on a silicon wafer. It eliminates existing physics and engineering limitations by significantly increasing QE to 70% at 940nm, as well as further extending the available spectrum up to 1550nm by achieving 50% QE at this wavelength. Combined with modulation frequency at 300 MHz and above, it delivers higher accuracy and better performance in sunlight and greatly reduces the risk of eye damage – all at a competitive price. It is now ready for mass production.
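To give a feel for what a 300 MHz modulation frequency implies, the sketch below uses the standard continuous-wave indirect ToF relations: unambiguous range d_max = c/(2·f) and depth d = c·φ/(4π·f). That the Artilux sensor operates as a single-frequency phase-based ToF device is an assumption here; the announcement only mentions the modulation frequency.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def unambiguous_range_m(f_mod_hz):
    """Maximum distance before the measured phase wraps around."""
    return C / (2 * f_mod_hz)


def depth_from_phase_m(phase_rad, f_mod_hz):
    """Distance corresponding to a measured phase shift (0..2*pi)."""
    return C * phase_rad / (4 * math.pi * f_mod_hz)


d_max = unambiguous_range_m(300e6)           # about 0.50 m
d_half = depth_from_phase_m(math.pi, 300e6)  # about 0.25 m
```

A higher modulation frequency gives finer depth per unit of phase, which fits the accuracy claim, but it also shortens the unambiguous range. Longer-range systems commonly combine several frequencies to resolve the wrap-around; whether Artilux does so is not stated.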

Erik Chen, CEO at Artilux, says "Intelligence is shaped by connecting the dots among experiences originating from how we perceive our surroundings. At Artilux, we believe intelligence and technology evolution start with observation and connections, and by exploring and expanding the frontiers of advanced photonic technology for sensing and connectivity, we aim to be an integral part of the infrastructure for future AI and technology evolution to be built upon. The Explore Series is named after this pioneering spirit and sets out to unleash the full potential of wide spectrum 3D sensing."

The new wide spectrum 3D sensing technology will enable numerous new opportunities from short range applications such as 3D facial recognition, with sub-mm depth accuracy in both indoor and outdoor environments, to mid-long-range applications, including augmented reality, security cameras, as well as robotics and autonomous vehicles.

The first wide spectrum 3D ToF image sensors of the new Explore Series, with multiple resolutions and ecosystem partners, will be announced in Q1 2020.

Pictured below is said to be the real 3D image captured by Artilux’s sensor at 1310nm:

Through a series of technological breakthroughs, ranging from advanced material, sensor design to system and algorithm, Artilux says it has redefined the performance of 3D sensing. Current 3D sensing technology from other companies usually works at wavelengths less than 1µm, typically 850nm or 940nm. These wavelengths have two major drawbacks: firstly, poor outdoor performance due to interference from sunlight, and secondly a potential risk of irreparably damaging eyesight with misused or malfunctioning lasers, since the human retina can easily absorb laser energy at such wavelengths. Existing attempts to extend the spectrum to wavelengths above 1µm have suffered from poor QE, falling drastically from around 30% at 940nm to 0% for wavelength 1µm and above.

Artilux and TSMC integrate GeSi as the light absorption material with CMOS technology on a silicon wafer. It eliminates existing physics and engineering limitations by significantly increasing QE to 70% at 940nm, as well as further extending the available spectrum up to 1550nm by achieving 50% QE at this wavelength. Combined with modulation frequency at 300 MHz and above, it delivers higher accuracy and better performance in sunlight and greatly reduces the risk of eye damage – all at a competitive price. It is now ready for mass production.

Erik Chen, CEO at Artilux, says "Intelligence is shaped by connecting the dots among experiences originating from how we perceive our surroundings. At Artilux, we believe intelligence and technology evolution start with observation and connections, and by exploring and expanding the frontiers of advanced photonic technology for sensing and connectivity, we aim to be an integral part of the infrastructure for future AI and technology evolution to be built upon. The Explore Series is named after this pioneering spirit and sets out to unleash the full potential of wide spectrum 3D sensing."

The new wide spectrum 3D sensing technology will enable numerous new opportunities from short range applications such as 3D facial recognition, with sub-mm depth accuracy in both indoor and outdoor environments, to mid-long-range applications, including augmented reality, security cameras, as well as robotics and autonomous vehicles.

The first wide spectrum 3D ToF image sensors of the new Explore Series, with multiple resolutions and ecosystem partners, will be announced in Q1 2020.

Corephotonics Files Another Lawsuit against Apple over Infringement of 10 Patents

AppleInsider: Israel-based Corephotonics, recently acquired by Samsung, files its third lawsuit against Apple, accusing it of infringing 10 of its patents:

9,661,233 "Dual aperture zoom digital camera"

10,230,898 "Dual aperture zoom camera with video support and switching / non-switching dynamic control"

10,288,840 "Miniature telephoto lens module and a camera utilizing such a lens module"

10,317,647 "Miniature telephoto lens assembly"

10,324,277 "Miniature telephoto lens assembly"

10,330,897 "Miniature telephoto lens assembly"

10,225,479 "Dual aperture zoom digital camera"

10,015,408 "Dual aperture zoom digital camera"

10,356,332 "Dual aperture zoom camera with video support and switching / non-switching dynamic control"

10,326,942 "Dual aperture zoom digital camera"

The full text of the lawsuit has been published on Scribd:

Wednesday, August 14, 2019

More Autosens Videos: Sony, Valeo, Geo, ARM

Autosens publishes more video presentations from its latest Detroit conference in May 2019.

Sony's Solution Architect Tsuyoshi Hara presents "The influence of colour filter pattern and its arrangement on resolution and colour reproducibility:"

Valeo's Patrick Denny presents "Addressing LED flicker:"

GEO Semiconductor's Director of Imaging R&D Manjunath Somayaji presents "Tuning ISP for automotive use:"

ARM Senior Manager of Image Quality Alexis Lluis Gomez presents "ISP optimization for ML/CV automotive applications:"

Tuesday, August 13, 2019

Omnivision's CTO Presentation on Automotive RGB-IR Sensors

Autosens publishes Omnivision CTO Boyd Fowler's presentation "RGB-IR Sensors for In-Cabin Automotive Applications."

In another Autosens video, Robin Jenkin, Principal Image Quality Engineer at NVIDIA, gives an update on the progress of the P2020 standard.

Huawei Demos Fingerprint Sensor Under LCD Display

Gizmochina: Huawei presents a prototype of an IR camera-based fingerprint sensor under an LCD display. Most other under-display sensors work with OLED displays. The demo posted on Twitter says:

"This is an LCD screen fingerprint technology based on the infrared camera solution, which can basically ignore the LCD panel type and unlock the speed for 300ms."

Xiaomishka (@xiaomishka) on Twitter, August 11, 2019, #Huawei: pic.twitter.com/gDb61I7GIt

Samsung Officially Unveils 108MP Sensor for Smartphones

BusinessWire: Samsung introduces the 108MP ISOCELL Bright HMX, the first mobile image sensor to go beyond 100MP. The new 1/1.33-inch sensor uses the same 0.8μm pixel as the recently announced 64MP sensor. The ISOCELL Bright HMX is the result of close collaboration between Xiaomi and Samsung.

The pixel-merging Tetracell technology allows the sensor to imitate big-pixel sensors, producing brighter 27MP images. In bright environments, the Smart-ISO, a mechanism that intelligently selects the level of amplifier gains according to the illumination of the environment for optimal light-to-electric signal conversion, switches to a low ISO to improve pixel saturation and produce vivid photographs. The mechanism uses a high ISO in darker settings that helps reduce noise, resulting in clearer pictures. The HMX supports video recording without losses in field-of-view at resolutions up to 6K (6016 x 3384) 30fps.
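The 108MP-to-27MP relationship follows from merging each 2 x 2 group of pixels into one. Samsung's actual Tetracell processing is not described here, so the following is only a generic binning sketch that ignores the color filter array; `bin_pixels` and `binned_resolution` are hypothetical helper names, and the 12,032 x 9,024 figure is the resolution reported in the Xiaomi briefing item on this page.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of a 2D list into one output pixel."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of the binning factor")
    return [
        [
            sum(image[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor))
            for c in range(0, cols, factor)
        ]
        for r in range(0, rows, factor)
    ]


def binned_resolution(width, height, factor=2):
    """Output resolution after factor x factor binning."""
    return width // factor, height // factor


if __name__ == "__main__":
    full_w, full_h = 12032, 9024
    bin_w, bin_h = binned_resolution(full_w, full_h)
    print(f"full:   {full_w} x {full_h} = {full_w * full_h / 1e6:.1f} MP")
    print(f"binned: {bin_w} x {bin_h} = {bin_w * bin_h / 1e6:.1f} MP")
```

Each binned pixel collects the signal of four 0.8μm pixels, which is why the merged image is brighter at a quarter of the pixel count. The binned width of 6016 also matches the width of the 6K video mode quoted above.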

“For ISOCELL Bright HMX, Xiaomi and Samsung have worked closely together from the early conceptual stage to production that has resulted in a groundbreaking 108Mp image sensor. We are very pleased that picture resolutions previously available only in a few top-tier DSLR cameras can now be designed into smartphones,” said Lin Bin, co-founder and president of Xiaomi. “As we continue our partnership, we anticipate bringing not only new mobile camera experiences but also a platform through which our users can create unique content.”

“Samsung is continuously pushing for innovations in pixel and logic technologies to engineer our ISOCELL image sensors to capture the world as close to how our eyes perceive them,” said Yongin Park, EVP of sensor business at Samsung. “Through close collaboration with Xiaomi, ISOCELL Bright HMX is the first mobile image sensor to pack over 100 million pixels and delivers unparalleled color reproduction and stunning detail with advanced Tetracell and ISOCELL Plus technology.”

Mass production of Samsung ISOCELL Bright HMX will begin later this month.

Friday, August 09, 2019

Himax Updates on its 3D Sensing Efforts

GlobeNewsWire: Himax's Q2 2019 earnings report gives an update on the company's 3D sensing projects:

"Himax continues to participate in most of the smartphone OEMs’ ongoing 3D sensing projects covering structured light and time-of-flight (ToF). As the Company reported earlier, in the past, its structured light-based 3D sensing total solution targeting Android smartphone’s front-facing application was unsuccessful due to high hardware cost, long development lead time, and the lack of killer applications. Since then, the Company has adjusted its structured light-based 3D sensing technology development to focus on applications for non-smartphone segments which are typically less sensitive to cost and always require a total solution. The Company teamed up with industry-leading facial recognition algorithm and application processor partners to develop new 3D sensing applications for smart door lock and have started design-in projects with certain end customers. Separately the Company is collaborating with partners who wish to take advantage of its 3D sensing know-how to automate traditional manufacturing and thereby improve its efficiency and cost. A prototype of the cutting-edge manufacturing line is being built on Himax’s premises and it believes this project can represent a major step forward in its alternative 3D sensing applications. Himax is still in the early stage of exploring the full business potential for structured light 3D sensing technology but believe it will be applicable in a wide range of industries, particularly those demanding high level of depth accuracy.

On ToF 3D sensing, the Company has seen increasing adoption of world-facing solutions to enable advanced photography, distance/dimension measurement and 3D depth information generation for AR applications. Very recently, thanks to ToF sensor technology advancement, some OEMs are also exploring ToF 3D for front-facing facial recognition and payment certification. As a technology leader in the 3D sensing space, Himax is an active participant in smartphone OEMs’ design projects for new devices involving ToF technology by offering WLO optics and/or transmitter modules with its unique eye-safety protection design."

Thursday, August 08, 2019

Samsung 108MP Sensor to Feature in Xiaomi Smartphone

NextWeb, DPReview: At a briefing in China, Xiaomi announces it is to become the first manufacturer to use Samsung’s new 108MP ISOCELL sensor. The maximum resolution of that sensor will be 12,032 x 9,024 pixels:

Thanks to TS for the pointer!
