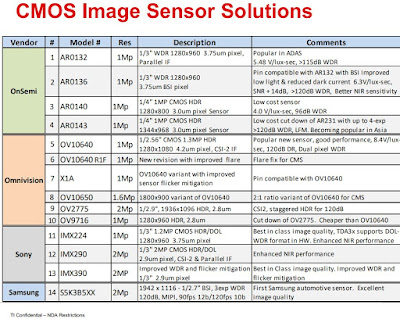

TI publishes a comparison of automotive image sensors from different companies:

Update: Since the original link is down, here is the full 1-page document content, as saved on my computer:

Monday, December 31, 2018

Sunday, December 30, 2018

First ToF Imager from China

Wuhan, China-based Silicon Integrated Inc. unveils SIF2310 that it calls "The first back-illuminated area array ToF sensor in China."

The SIF2310 integrates:

- HVGA (480x360) ToF pixel array

- signal generator modulating the IR source

- 12bit ADC

- on-chip temperature sensor

- logic control unit, high-speed clock

- MIPI interface

The SIF2310 supports modulation frequencies up to 100MHz and output frame rates up to 240fps. With IR light source, SIF2310 can be used in face recognition, AR/VR, motion capture, 3D modeling, machine vision, and ADAS applications. The chip is available in a die or Glass-BGA package.
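As a rough illustration of what the 100MHz figure implies, the sketch below computes the unambiguous range of a continuous-wave ToF measurement, d_max = c / (2 f_mod). It assumes the SIF2310 derives distance from the phase shift of the modulated IR signal, which the announcement does not state explicitly. The function names are illustrative and are not part of any Silicon Integrated API.

```python
import math

# Speed of light in vacuum, m/s (exact by SI definition).
C_M_PER_S = 299_792_458.0


def unambiguous_range_m(f_mod_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    if f_mod_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    # Light travels to the target and back, hence the factor of 2.
    return C_M_PER_S / (2.0 * f_mod_hz)


def phase_to_distance_m(phase_rad: float, f_mod_hz: float) -> float:
    """Distance implied by a measured phase shift at one modulation frequency."""
    wrapped = phase_rad % (2.0 * math.pi)  # phase is only known modulo 2*pi
    return wrapped / (2.0 * math.pi) * unambiguous_range_m(f_mod_hz)


if __name__ == "__main__":
    # Assumed example frequencies; only the 100 MHz maximum comes from the text.
    for f_mhz in (20, 60, 100):
        print(f"{f_mhz} MHz -> {unambiguous_range_m(f_mhz * 1e6):.3f} m")
```

Under this assumption, the range at 100MHz wraps at about 1.5 m, which is one reason phase-based ToF systems often combine several modulation frequencies.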

Saturday, December 29, 2018

Digitimes on Samsung Plans

Digitimes reviews the Far East semiconductor industry in 2018 and emphasizes Samsung's impact on the CIS market:

"With use of CMOS image sensors (CIS) extending from smartphone cameras to automotive cameras, Samsung has planned to expand its CIS production capacity to surpass Sony to become the globally largest supplier. Samsung began to modify Line 11 DRAM factory in its Hwaseong production base for production of CIS at the end of 2017, with the modification to be completed by the end of 2018.

Samsung also will modify Line 13 DRAM factory in the same production base for CIS production. Samsung had monthly production capacity of 45,000 12-inch wafers for making CIS at the end of 2017, and the capacity will increase to nearly 120,000 12-inch wafers when the two additional factories begin production.

Samsung's moves are in line with the optimism of carmakers and other semiconductor vendors about the future of autonomous driving."

Friday, December 28, 2018

Sony to Increase ToF Sensors Production

Bloomberg interviews Satoshi Yoshihara, the head of Sony’s image sensor division. He says that:

- Sony is boosting production of its ToF sensors after getting interest from customers including Apple

- Sony 3D business is already profitable and will make an impact on earnings from the next fiscal year starting in April 2019.

- Sony started providing 3D SDK to outside developers to experiment with its ToF chips and create apps.

- “Cameras revolutionized phones, and based on what I’ve seen, I have the same expectation for 3D,” said Yoshihara

- Huawei is using Sony’s ToF cameras in the next generation models, according to Bloomberg sources

- There will be a need for two 3D cameras on smartphones, for the front and back

- Sony ToF sensors will appear in models from several smartphone manufacturers in 2019

- Sony starts ToF sensors mass production in late summer 2019 to meet the demand

Thanks to RP and TG for the pointer!

Thursday, December 27, 2018

Foxconn to Manufacture CIS in China

Nikkei, EETimes: Foxconn Technology (AKA Hon Hai Precision Industry) is preparing to build a $9b chip fab in the southern Chinese city of Zhuhai. The new fab will manufacture image sensors, chips for 8K TVs, and various sensors for industrial uses and connected devices, according to Nikkei. The construction is expected to start in 2021 (2020, according to Semimedia).

A majority of the investment is to be subsidized by Zhuhai city government. The new fab will rank as one of the country's top high-tech projects, according to Nikkei sources. The fab will make chips not just for its own use but for other customers, competing with TSMC, Globalfoundries, Samsung foundry unit, and SMIC.

According to Nikkei, Foxconn is expected to form a JV for the project with Sharp, which it acquired in 2016, and the Zhuhai government. However, Semimedia reports that Sharp denies its involvement in the project.

Meanwhile, Japan Times says that Sharp is going to spin off its semiconductor business into two entities. One of them will be responsible for lasers, the other for sensors and other semiconductors. Sharp Chairman and President Tai Jeng-wu told reporters that he wants to tap overseas and domestic resources, showing eagerness to forge alliances with other firms including Sharp’s parent, Hon Hai Precision Industry (Foxconn).

Currently, Sharp manufactures semiconductor-related products at its plants in the prefectures of Hiroshima and Nara.

Picture source: EENews

QIS Article

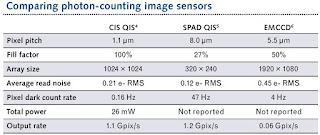

LaserFocusWorld publishes an article "The Quanta Image Sensor (QIS): Making Every Photon Count" by Eric Fossum and Kaitlin Anagnost. The article presents Dartmouth work on Megapixel single photon resolving sensors and compares it with SPADs and EMCCDs:

Wednesday, December 26, 2018

Huawei Honor V20 Smartphone Features Rear ToF Camera

DeviceSpecifications: Huawei Honor V20 smartphone features Sony 48MP IMX586 image sensor combined with a rear 3D ToF camera. Apparently, the ToF camera is used for better AF, AR applications, and games:

Update: PRNewswire: "...rear camera is a TOF 3D camera, which makes the phone capable of creating a new dimension in photography and videography that brings greater usability and fun to users. It can calculate distance based upon the time-of-flight of a light signal, and has functions including depth sensing, skeletal tracking and real-time motion capture.

The TOF 3D camera can turn HONOR View20 into a motion-controlled gaming console, and allow you to play 3D motion games like never before. In addition, it can also let 3D characters dance following your gestures on your phone, and you can share these funny dancing videos with your friends."

Korean Companies Allocate More Resources to CIS Business

BusinessKorea: As memory prices go down, CIS business becomes more important in Korean companies:

- Samsung has selected image sensors as its next-generation growth engine.

- Samsung establishes a new business team to strengthen its sales of sensors for autonomous vehicles.

- Samsung signed a contract with Tesla to supply image sensors for vehicles. Although the immediate sales impact is not significant, it will make it easier for Samsung to expand with other companies in automotive space.

- Samsung CIS activity has been re-organized so that the process development is now done by the foundry section of the Device Solution Division (DS), while the sensor business team focuses on product planning and sales.

- Samsung is converting some of its 11-line DRAM production in Hwaseong plant into image sensor lines.

- LG and SK Hynix invested in LiDAR startup Aeye

- SK Hynix aims to achieve 1 trillion won (US$900 million) in image sensor sales.

- SK Hynix focuses on mid-end products and aims to increase its market share in China.

- SK Hynix leverages its DRAM strength in image sensor marketing by offering a deal on CIS purchases to companies that buy large amounts of DRAM products.

Tuesday, December 25, 2018

Artilux Ge-on-Si IEDM Paper Reports Mass Production Readiness

Taiwan-based Artilux presented its Ge-on-Si paper "High-Performance Germanium-on-Silicon Lock-in Pixels for Indirect Time-of-Flight Applications" by N. Na, S.-L. Cheng, H.-D. Liu, M.-J. Yang, C.-Y. Chen, H.-W. Chen, Y.-T. Chou, C.-T. Lin, W.-H. Liu, C.-F. Liang, C.-L. Chen, S.-W. Chu, B.-J. Chen, Y.-F. Lyu, and S.-L. Chen.

The paper appears to be a process and device development report with statistics on process variations and various optimizations of the device parameters. The conclusion is: "Novel GOS lock-in pixel is investigated at 940nm and 1550nm wavelengths and shown to be a strong contender against conventional Si lock-in pixel. The measured statistical data further demonstrate the technology yields good within wafer and wafer-to-wafer uniformities that may be ready for mass production in the near future."

Monday, December 24, 2018

Etron and eYs3D Develop AI-on-Edge Natural Light 3D Sensing

Digitimes: Etron and its subsidiary eYs3D Microelectronics are set to launch AI-on-edge 3D sensing solutions based on Etron stereo processors. The companies offer "3D natural light deep vision platform technology that can allow one smartphone to unlock 5-6 smartphones via recognizing faces of smartphone users under their consent, and can also connect smart devices to monitor air conditioners, TVs and other smart household electrical appliances."

Sunday, December 23, 2018

Jamming Smartphone Cameras

Journal of Physics publishes an open-access paper "Tracking and Disabling Smartphone Camera for Privacy" by Qurban Memon, Khawla Al Shanqiti, AlYazia Al Falasi, Amna Al Jaberi, and Yasmeen Amer from UAE University.

"With people's easy access to various forms of recent technologies, privacy has decreased immensely. One of the major privacy breaches nowadays is taking pictures and videos using smart phones without seeking permission of those whom it concerns. This work aims to target privacy in the current mobile environment. The main contribution of this work is to block the smart phone camera without damaging the smart phone or harming people around it. The approach is divided into stages: body area detection and then camera detection in the frame. The detection stage follows pointing of laser(s) controlled by a microcontroller. Tests are conducted on built system and results show performance error less than 1%. For Safety, the beams are devised to be harmless to the people, environment and the targeted smart phones."

Saturday, December 22, 2018

3D AI Camera Company Shuts Down

Not everything 3D and AI is automatically successful. Techcrunch, Engadget, and Information report that the home security camera company Lighthouse is shutting down. The startup was founded in 2015 and raised $20m. The final product, while working, shipped late and cost too much to be successful.

The company CEO Alex Teichman writes: "I am incredibly proud of the groundbreaking work the Lighthouse team accomplished - delivering useful and accessible intelligence for our homes via advanced AI and 3D sensing. Unfortunately, we did not achieve the commercial success we were looking for and will be shutting down operations in the near future."

Nikon Invests $25m in Velodyne, Discusses Collaboration

Velodyne Lidar announces Nikon as a new strategic investor with an investment of $25M. The parties further announced they have begun discussions for a multifaceted business alliance.

Aiming to combine Nikon’s optical and precision technologies with Velodyne’s sensor technology, both companies have begun investigating a wide-ranging business relationship, including collaboration in technology development and manufacturing. The companies share a futuristic vision of advanced perception technology for a wide range of applications including robotics, mapping, security, shuttles, drones, and safety on roadways.

Thanks to TG for the link!

Friday, December 21, 2018

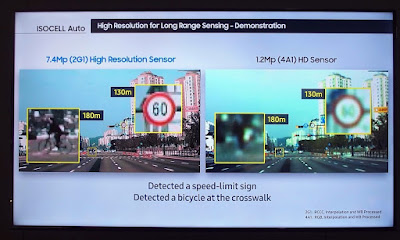

Samsung Exhibits Automotive Image Sensors

Nikkei publishes an overview of Samsung booth at Electronica 2018 exhibition in Germany. Samsung demos 120dB HDR and 7.4MP high resolution automotive-qualified sensors:

Aeye Raises $40m, Unveils iDAR Product

BusinessWire: AEye, the developer of iDAR, announces the second close of its Series B financing, bringing the company’s total funding to over $60 million. AEye Series B round includes Hella Ventures, SUBARU-SBI Innovation Fund, LG Electronics, and SK Hynix. AEye previously announced that the round was led by Taiwania Capital along with existing investors Kleiner Perkins, Intel Capital, Airbus Ventures, R7 Partners, and an undisclosed OEM.

AEye's iDAR physically fuses the 1550nm solid-state LiDAR with a high-resolution camera to create a new data type called Dynamic Vixels. This real-time integration occurs in the iDAR sensor, rather than post-fusing separate camera and LiDAR data after the scan. By capturing both geometric and true color (x,y,z and r,g,b) data, Dynamic Vixels uniquely mimic the data structure of the human visual cortex, capturing better data for vastly superior performance and accuracy.

“This funding marks an inflection point for AEye, as we scale our staff, partnerships and investments to align with our customers’ roadmap to commercialization,” said Luis Dussan, AEye founder and CEO. “The support we have received from major players in the automotive industry validates that we are taking the right approach to addressing the challenges of artificial perception. Their confidence in AEye and iDAR will be borne out by the automotive specific products we'll be bringing to market at scale in Q2 of 2019. These products will help OEMs and Tier 1s accelerate their products and services by delivering market leading performance at the lowest cost.”

AEye's AE110 iDAR fuses a 1550nm solid-state agile MOEMS LiDAR, a low-light HD camera, and embedded AI to intelligently capture data at the sensor level. The AE110’s pseudo-random beam distribution search option makes the system eight times more efficient than fixed pattern LiDARs. The AE110 is said to achieve 16 times greater coverage of the entire FOV at 10 times the frame rate (up to 100 Hz) due to its ability to support multiple regions of interest for both LiDAR and camera.

Thursday, December 20, 2018

Optical AI Processor Company Raises $3.3m

VentureBeat: LightOn, a Paris, France-based AI startup, has closed a $3.3M (€2.9M) seed funding round. LightOn is developing a new optics-based data processing technology for AI. Leveraging compressive sensing, LightOn’s hardware and software can make Artificial Intelligence computations both simpler and orders of magnitude more efficient. The technology, licensed by PSL Research University, was originally developed at several of Paris’ leading research institutions.

For the past few months, LightOn has allowed access to their Optical Processing Units (OPU) to a select group of beta customers through the LightOn Cloud, thanks to a partnership with OVH, Europe’s leading cloud provider. First users from both Academia and Industry have already successfully demonstrated impressive results on this hybrid CPU/GPU/OPU server, outperforming silicon-only computing technology in a variety of large scale Machine Learning tasks. Typical use cases currently include transfer learning, change point detection, or time series prediction.

LightOn’s CEO, Igor Carron, said, “It’s an exciting time as Artificial Intelligence develops rapidly. The requirements as usage scales necessitate improved power efficiency and performance. LightOn’s technology addresses these monumental challenges.”

LightOn's OPU technology was presented in the 2015 arxiv.org paper "Random Projections through multiple optical scattering: Approximating kernels at the speed of light" by Alaa Saade, Francesco Caltagirone, Igor Carron, Laurent Daudet, Angélique Drémeau, Sylvain Gigan, and Florent Krzakala:

"Random projections have proven extremely useful in many signal processing and machine learning applications. However, they often require either to store a very large random matrix, or to use a different, structured matrix to reduce the computational and memory costs. Here, we overcome this difficulty by proposing an analog, optical device, that performs the random projections literally at the speed of light without having to store any matrix in memory. This is achieved using the physical properties of multiple coherent scattering of coherent light in random media. We use this device on a simple task of classification with a kernel machine, and we show that, on the MNIST database, the experimental results closely match the theoretical performance of the corresponding kernel. This framework can help make kernel methods practical for applications that have large training sets and/or require real-time prediction. We discuss possible extensions of the method in terms of a class of kernels, speed, memory consumption and different problems."
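To make the idea of random projections concrete, here is a minimal software simulation of the kind of operation the abstract describes. It assumes the scattering medium acts like a fixed complex Gaussian matrix M and that the detector records the intensity |Mx|^2. Both the matrix model and the squared-modulus nonlinearity are assumptions of this sketch, not details confirmed by the text above. Unlike the optical device, the simulation has to store the matrix, which is exactly the cost the paper aims to avoid.

```python
import random


def make_complex_gaussian_matrix(n_out, n_in, seed=0):
    """Build an n_out x n_in matrix of i.i.d. complex Gaussian entries.

    Real and imaginary parts each have variance 1/2, so E|m|^2 = 1.
    This stands in for the (assumed) transmission matrix of the medium.
    """
    rng = random.Random(seed)
    scale = 2 ** -0.5
    return [
        [complex(rng.gauss(0.0, 1.0) * scale, rng.gauss(0.0, 1.0) * scale)
         for _ in range(n_in)]
        for _ in range(n_out)
    ]


def optical_random_features(matrix, x):
    """Return the simulated intensities |M x|^2, one per output row."""
    features = []
    for row in matrix:
        if len(row) != len(x):
            raise ValueError("input length must match matrix width")
        field = sum(m * xi for m, xi in zip(row, x))  # complex field at one pixel
        features.append(abs(field) ** 2)              # detector sees intensity
    return features


if __name__ == "__main__":
    m = make_complex_gaussian_matrix(n_out=8, n_in=3, seed=42)
    print(optical_random_features(m, [1.0, 0.0, -1.0]))
```

The resulting feature vectors can then be fed to a linear classifier, which is the usual way random features are used to approximate a kernel machine.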

Power Efficient Neural ToF Camera

SenseTime Research and Tsinghua University publish an arxiv.org paper "Very Power Efficient Neural Time-of-Flight" by Yan Chen, Jimmy S. Ren, Xuanye Cheng, Keyuan Qian, and Jinwei Gu:

"Time-of-Flight (ToF) cameras require active illumination to obtain depth information thus the power of illumination directly affects the performance of ToF cameras. Traditional ToF imaging algorithms is very sensitive to illumination and the depth accuracy degenerates rapidly with the power of it. Therefore, the design of a power efficient ToF camera always creates a painful dilemma for the illumination and the performance trade-off. In this paper, we show that despite the weak signals in many areas under extreme short exposure setting, these signals as a whole can be well utilized through a learning process which directly translates the weak and noisy ToF camera raw to depth map. This creates an opportunity to tackle the aforementioned dilemma and make a very power efficient ToF camera possible. To enable the learning, we collect a comprehensive dataset under a variety of scenes and photographic conditions by a specialized ToF camera. Experiments show that our method is able to robustly process ToF camera raw with the exposure time of one order of magnitude shorter than that used in conventional ToF cameras. In addition to evaluating our approach both quantitatively and qualitatively, we also discuss its implication to designing the next generation power efficient ToF cameras. We will make our dataset and code publicly available."
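For context on the "traditional ToF imaging algorithms" the abstract refers to, the sketch below shows a common textbook four-phase depth calculation. It is not the paper's learned method. It assumes correlation samples of the form c_k = B + A cos(phase - k*pi/2), and the function names and parameter values are illustrative.

```python
import math

# Speed of light in vacuum, m/s (exact by SI definition).
C_M_PER_S = 299_792_458.0


def simulate_samples(distance_m, f_mod_hz, amplitude=1.0, offset=2.0):
    """Ideal, noise-free correlation samples at 0, 90, 180 and 270 degrees."""
    phase = 4.0 * math.pi * f_mod_hz * distance_m / C_M_PER_S
    return [offset + amplitude * math.cos(phase - k * math.pi / 2.0)
            for k in range(4)]


def four_phase_depth(samples, f_mod_hz):
    """Recover (depth in metres, signal amplitude) from four phase samples."""
    c0, c90, c180, c270 = samples
    i = c0 - c180    # equals 2*A*cos(phase); the ambient offset B cancels
    q = c90 - c270   # equals 2*A*sin(phase)
    phase = math.atan2(q, i) % (2.0 * math.pi)
    amplitude = 0.5 * math.hypot(i, q)
    depth = C_M_PER_S * phase / (4.0 * math.pi * f_mod_hz)
    return depth, amplitude


if __name__ == "__main__":
    # Assumed example: target at 0.5 m, 100 MHz modulation, weak return signal.
    samples = simulate_samples(0.5, 100e6, amplitude=0.3)
    print(four_phase_depth(samples, 100e6))
```

With weak illumination the amplitude A shrinks relative to the sensor noise, so the phase estimate from `atan2` becomes noisy. This is the power versus accuracy trade-off the abstract describes.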

Wednesday, December 19, 2018

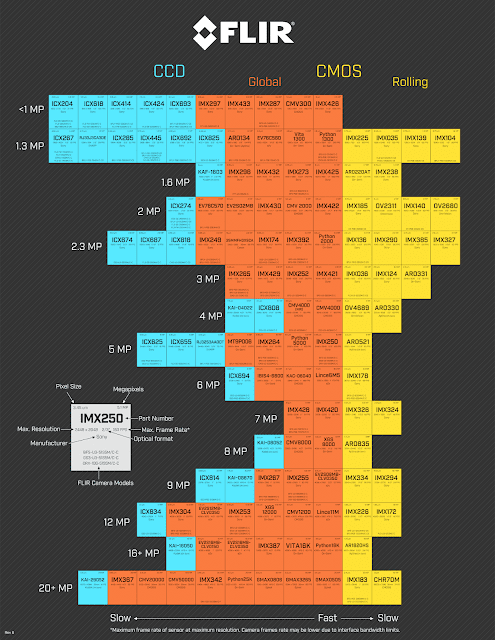

FLIR Periodic Table of Image Sensors

FLIR (formerly Point Grey) publishes a Periodic Table of Image Sensors for industrial and machine vision applications, a nice attempt to bring order to the somewhat messy product lines of different companies. The high-resolution PDF files can be downloaded from the December 2018 issue of the FLIR Insights newsletter:

"With so many sensors coming from different manufacturers, it's hard to remember them all. To help with this, we are giving away a handy guide that organizes 120 sensors, from classic CCDs to the latest CMOS technology, based on resolution, readout method, speed, and FPS. We suggest downloading and laminating it, then pinning it on your wall for easy reference. You will never have to memorize sensor names again. Enjoy, and have a happy holiday!"

Xiaomi Mi 8EE 3D FaceID Module

SystemPlus Consulting publishes a teardown report on the Xiaomi Mi 8 Explorer Edition smartphone, which features a Mantis Vision structured light 3D module on the front side:

"Xiaomi has chosen Mantis Vision’s solution and its coded structured light to provide the 3D sensing capability. The 3D systems comprise a dot projector and a camera module assembly configuration. On the receiver side, the near infrared (NIR) image is captured by a global shutter (GS) NIR camera module.

The front optical hub is packaged in one metal enclosure, featuring several cameras and sensors. The complete system features a red-green-blue (RGB) camera module, a proximity sensor and an ambient light sensor. The 3D depth sensing system comprises the NIR camera module, the flood illuminator and the dot projector.

All components are standard products that can be readily found in the market. That includes a GS image sensor featuring 3µm x 3µm pixels and standard resolution of one megapixel and two vertical cavity surface emitting lasers (VCSELs), one for the dot projector and one for the flood illuminator. Both are from the same supplier. Both the camera and dot projector use standard camera module assemblies with wire bonding and optical modules featuring lenses. In order to provide coded structured light features, a mask is integrated into the dot projector structure."

Tuesday, December 18, 2018

ToF Developers Conference

Espros Photonics announces ToF Developers Conference to be held in San Francisco on January 29–31, 2019:

"A successful design of a 3D TOF camera for example needs a deep understanding of the underlying optical physics - theoretical and practical. In addition, the behavioral model of the imaging system and an excellent understanding of the sensing artifacts in real applications is key knowhow. And further more, thermal management is an issue because these cameras have an active illumination, typically quite powerful. And, as a consequence, eye-safety becomes an issue as well. A TOF camera consist of 9 functional building blocks which have to be understood and fine-tuned carefully to create a powerful but cost effective design.

So, many more disciplines than just electronics and software are in the game. It's not rocket science, but the relevant understanding of these 9 blocks is a must to know if someone gets the duty to design a 3D TOF camera

There is, at least to our knowledge, no engineering school which addresses TOF and LiDAR as an own discipline. We at ESPROS decided to fill the gap with a training program called TOF Developer Conference. The objective is to provide a solid theoretical background, a guideline to working implementations based on examples and practical work with TOF systems. Thus, the TOF Developer Conference shall become the enabler for electronics engineers (BS and MS in EE engineering) to design working TOF systems. It is ideally for engineers who are or will be, involved in the design of TOF system. We hope that our initiative helps to close the gap between the desire of TOF sensors to massively deployed TOF applications.

Course topics:

TOF history; TOF principles; main parts of a TOF camera; relevant optical physics; light detection; receiver physics; noise considerations; SNR; light emission and light sources; eye safety; light power budget calculation; optics basics; optical systems and key requirements; bringing it all together; electronics, PCB layout guidelines; power considerations; calibration and compensation; filtering computing power requirements; interference detection and suppression; artifacts and how to deal with them; practical lab experiments; Q&A; much more…"

The next two ToF conferences are planned to be held in China: Shanghai on April 2, 2019 and Shenzhen on April 9, 2019.

Update: A picture from San Francisco conference:

Sony Announces 5.4MP Automotive Sensor with HDR and LED Flicker Mitigation

Sony announces the 1/1.55-inch, 5.4MP (effective) IMX490 CMOS sensor for automotive cameras. Sony will begin shipping samples in March 2019.

The new sensor simultaneously achieves HDR and LED flicker mitigation at what Sony calls the industry’s highest resolution for automotive cameras, 5.4MP. Sony has also improved the saturation illuminance through a proprietary pixel structure and exposure method. With HDR imaging and LED flicker mitigation both enabled, the sensor offers a wide 120dB DR, measured in accordance with the EMVA 1288 standard (140dB when set to prioritize DR). This DR is said to be three times higher than that of the previous product. As a result, highlight oversaturation can be mitigated even when 100,000 lux sunlight reflects directly off a light-colored car ahead, so the subject is captured more accurately under dramatic lighting contrast, such as when entering or exiting a tunnel.

Moreover, this method is said to prevent the motion artifacts that occur with other HDR technologies when capturing moving subjects. The new sensor also improves sensitivity by about 15% over the previous-generation product, improving its ability to recognize pedestrians and obstacles in low illuminance conditions of 0.1 lux, the equivalent of moonlight.
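To put the quoted figures in perspective, here is a minimal sketch that converts between decibels and linear ratios, assuming the 20·log10 convention commonly used for image sensor dynamic range. The function names are illustrative. Note that the ratio between the two scene illuminances mentioned above (100,000 lux and 0.1 lux) is only a rough indication of scene contrast and is not the same thing as a sensor DR measured under EMVA 1288.

```python
import math

def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to a linear signal ratio (20*log10 convention)."""
    return 10 ** (db / 20)

def ratio_to_db(ratio: float) -> float:
    """Convert a linear signal ratio to dB (20*log10 convention)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20 * math.log10(ratio)

# Quoted sensor figures: 120 dB with HDR + flicker mitigation, 140 dB in DR-priority mode
ratio_120 = db_to_ratio(120)   # about 1,000,000 : 1
ratio_140 = db_to_ratio(140)   # about 10,000,000 : 1

# Scene illuminances mentioned in the text: direct sunlight vs. moonlight
scene_db = ratio_to_db(100_000 / 0.1)   # about 120 dB

print(f"120 dB -> {ratio_120:,.0f}:1")
print(f"140 dB -> {ratio_140:,.0f}:1")
print(f"100,000 lux vs 0.1 lux -> {scene_db:.0f} dB")
```

Under this convention, each additional 20dB corresponds to a tenfold increase in the ratio between the brightest and darkest resolvable signals.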

This product is scheduled to meet the AEC-Q100 Grade 2 reliability standards for automobile electronic components for mass production. Sony has also introduced a development process compliant with ISO 26262 functional safety standards for automobiles to ensure that design quality meets the functional safety requirements for automotive applications, thereby supporting functional safety level ASIL D for fault detection, notification and control. Moreover, the new sensor has security functions to protect the output image from tampering.

Monday, December 17, 2018

Ambarella Announces 8MP ADAS Processor

BusinessWire: Ambarella introduces the CV22AQ automotive camera SoC, featuring the Ambarella CVflow computer vision architecture for powerful Deep Neural Network (DNN) processing. Target applications include front ADAS cameras, electronic mirrors with Blind Spot Detection (BSD), interior driver and cabin monitoring cameras, and Around View Monitors (AVM) with parking assist. Fabricated in advanced 10nm process technology, its low power consumption supports the small form factor and thermal requirements of windshield-mounted forward ADAS cameras.

The CV22AQ’s CVflow architecture provides computer vision processing in 8MP resolution at 30 fps, to enable object recognition over long distances and with high accuracy. CV22AQ supports multiple image sensor inputs for multi-FOV cameras and can also create multiple digital FOVs using a single high-resolution image sensor to reduce system cost.

“To date, front ADAS cameras have been performance-constrained due to power consumption limits inherent in the form factor,” said Fermi Wang, CEO of Ambarella. “CV22AQ provides an industry-leading combination of outstanding neural network performance and very low typical power consumption of below 2.5 watts. This breakthrough in power and performance, coupled with best-in-class image processing, allows tier-1 and OEM customers to greatly increase the performance and accuracy of ADAS algorithms.”

SEMI Forecasts Fab Investment Drop

SEMI: Total fab equipment spending in 2019 is projected to drop 8%, a sharp reversal from the previously forecast increase of 7% as fab investment growth has been revised downward for 2018 to 10% from the 14% predicted in August, according to the latest edition of the World Fab Forecast Report.

However, image sensor fab spending remains a bright spot: "Opto – especially CMOS image sensors – shows strong growth, surging 33 percent to US$3.8 billion in 2019:"

Credit Suisse on Mobile Imaging Market

IFNews: A Credit Suisse report on the mobile phone market analyzes current trends:

"The CIS business continues to see a shift toward higher resolutions and multi-camera phones. Multi-camera phones accounted for 65% of iPhone and 44% of Android phone production in Jul–Sep 2018 in an indication that the trend is accelerating. We now assume a multi-camera weighting of 40% (previously 35%) for 2018 and 50% (45%) for 2019, including an increase from 7% to 10% for triple-camera phones in 2019. This represents a tailwind for profits at Sony (6758), as do improvement in the sales mix accompanying the shift toward higher resolutions and larger sensors.

While the smartphone market is looking sluggish overall, we expect continued profit growth for Sony's CIS business in 2H FY3/19 and out as the number of sensors per smartphone increases, the sales mix improves (higher resolutions, larger sizes), and the company gains market share in supplying Chinese smartphone makers. We think the business could continue to drive companywide profits in FY3/20 and remain generally expectant of its potential.

TOF sensors: In our report on the previous survey, we said that some module makers think the 2019 models are likely to incorporate rear time-of-flight (TOF) sensors, but some device makers regard this as unlikely. However, based on the current survey, we now think TOF sensors will probably be first adopted in the 2020 models rather than the 2019 models.

We said in our previous survey report that Samsung was targeting a multi-camera weighting of 50% (triple camera 20%, dual camera 30%) in 2019, but our latest survey indicates a growing possibility of a 70% target (triple/quadruple camera 20%, dual camera 50%). Samsung appears to be currently planning for around 10 triple- or quadruple-camera models as well as phones with 5x zoom folded optics cameras and pop-up cameras. The company is considering megapixel combinations of 48MP-16MP-13MP and 48MP-10MP-5MP for triple camera models while also looking at 48MP-8MP-5MP, 32MP-8MP-5MP, and 16MP-8MP-5MP.

In CY19, we think the smartphone manufacturers will adopt triple-camera and triple/quadruple-camera systems on a full scale, mainly in their high-end phones, and we expect them to also build large 48MP sensors into their phones. We believe companies like Huawei, Oppo, and Xiaomi will consider adopting 5x-zoom folding optics systems. Regarding OISs, we project that the smartphone manufacturers will use CIS technology in their main cameras and shift to shape memory alloy (SMA) OISs and away from VCM type OISs in response to the increase in lens size.

Sony will likely be the sole supplier of 48MP sensors for the spring models. Samsung LSI 12MP/48MP-sensor re-mosaic technology is lagging, and we project that it will be mid-CY19 at the earliest before it is adopted in high-end phones. Consequently, we think Samsung LSI CISs that are 48MP in the catalog specs but 12MP in actual image quality will probably be used in midrange spring models. We think that in China, demand for 24MP CISs, mainly for phones with two front-side cameras, has reached around 100mn units per year. We think that in CY19, demand for 48MP CISs for rear-side cameras could increase to roughly 150mn units.

CIS supplies remain tight, particularly for 2/5/8MP CISs manufactured using an 8-inch process. Tight supplies of CISs for high-end models in CY19, owing to the transition to large 48MP sensors, are a concern."

Saturday, December 15, 2018

IEDM 2018 HDR and GS Papers Review

This year, IEDM had quite a lot of image sensor papers. Those covering HDR and GS are briefly reviewed below:

1.5µm dual conversion gain, backside illuminated image sensor using stacked pixel level connections with 13ke- full-well capacitance and 0.8e- noise

V. C. Venezia, A. C-W Hsiung, K. Ai, X. Zhao, Zhiqiang Lin, Duli Mao, Armin Yazdani, Eric A. G. Webster, L. A. Grant, OmniVision Technologies

OmniVision presented two stacked designs with pixel-level interconnects. Design A was selected as the better of the two from the DR point of view:
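As a rough cross-check of the headline figures in the paper's title, single-exposure dynamic range is commonly estimated as 20·log10(full well / read noise). The sketch below applies that textbook formula to the 13ke- and 0.8e- values. The function name is illustrative, and pairing the full well with the noise floor in this way is an assumption about how a dual conversion gain pixel would be characterized, not a result taken from the paper.

```python
import math

def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Estimate dynamic range in dB from full-well capacity and read noise, both in electrons."""
    if full_well_e <= 0 or read_noise_e <= 0:
        raise ValueError("full well and read noise must be positive")
    return 20 * math.log10(full_well_e / read_noise_e)

# Title figures: 13ke- full well and 0.8e- noise
# (assumed here to bound the usable signal range of the same pixel)
omnivision_dr = dynamic_range_db(13_000, 0.8)
print(f"Estimated DR: {omnivision_dr:.1f} dB")  # about 84.2 dB
```

The same estimate can be applied to the other papers below whenever both a full-well (or saturation) figure and a noise figure are given.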

A 0.68e-rms Random-Noise 121dB Dynamic-Range Sub-pixel architecture CMOS Image Sensor with LED Flicker Mitigation

S. Iida, Y. Sakano, T. Asatsuma, M. Takami, I. Yoshiba, N. Ohba, H. Mizuno, T. Oka, K. Yamaguchi, A. Suzuki, K. Suzuki, M. Yamada, M. Takizawa, Y. Tateshita, and K. Ohno, Sony Semiconductor

Sony presented its version of Big-Little PDs in a single pixel:

A 24.3Me- Full Well Capacity CMOS Image Sensor with Lateral Overflow Integration Trench Capacitor for High Precision Near Infrared Absorption Imaging

M. Murata, R. Kuroda, Y. Fujihara, Y. Aoyagi, H. Shibata*, T. Shibaguchi*, Y. Kamata*, N. Miura*, N. Kuriyama*, and S. Sugawa, Tohoku University, *LAPIS Semiconductor Miyagi Co., Ltd.

Tohoku University and LAPIS present an evolution of their LOFIC pixel with deeply depleted PDs on a substrate doped at 1e12 cm^-3:

HDR 98dB 3.2µm Charge Domain Global Shutter CMOS Image Sensor (Invited)

A. Tournier, F. Roy, Y. Cazaux*, F. Lalanne, P. Malinge, M. Mcdonald, G. Monnot**, N. Roux**, STMicroelectronics, *CEA Leti, **STMicroelectronics

ST and Leti explain their dual memory pixel architecture:

High Performance 2.5um Global Shutter Pixel with New Designed Light-Pipe Structure

T. Yokoyama, M. Tsutsui, Y. Nishi, I. Mizuno, V. Dmitry, A. Lahav, TPSCo & TowerJazz

TowerJazz and TPSCo show their latest-generation small GS pixel, available to foundry customers:

Back-Illuminated 2.74 µm-Pixel-Pitch Global Shutter CMOS Image Sensor with Charge-Domain Memory Achieving 10k e- Saturation Signal

Y. Kumagai, R. Yoshita, N. Osawa, H. Ikeda, K. Yamashita, T. Abe, S. Kudo, J. Yamane, T. Idekoba, S. Noudo, Y. Ono, S. Kunitake, M. Sato, N. Sato, T. Enomoto, K. Nakazawa, H. Mori, Y. Tateshita, and K. Ohno, Sony Semiconductor

Sony presented its approach to shielding the storage nodes in a BSI GS sensor:
