Canon developing world-first ultra-high-sensitivity ILC equipped with SPAD sensor, supporting precise monitoring through clear color image capture of subjects several km away, even in darkness

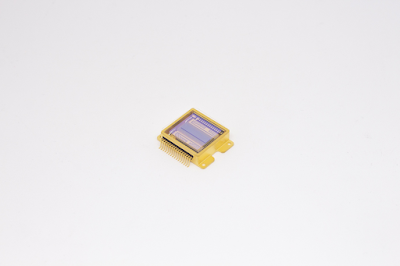

TOKYO, April 3, 2023 - Canon Inc. announced today that the company is developing the MS-500, the world's first¹ ultra-high-sensitivity interchangeable-lens camera (ILC) equipped with a 1.0 inch Single Photon Avalanche Diode (SPAD) sensor² featuring the world's highest pixel count of 3.2 megapixels³. The camera leverages the special characteristics of SPAD sensors to achieve superb low-light performance while also utilizing broadcast lenses that feature high performance at telephoto-range focal lengths. Thanks to these advantages, the MS-500 is expected to be well suited to applications such as high-precision monitoring.

There is a growing need for high-precision monitoring systems at national borders, seaports, airports, train stations, power plants and other key infrastructure facilities. Such systems must quickly identify targets from long distances and under adverse conditions, including darkness in which the human eye cannot see.

The MS-500, currently in development, is equipped with a 1.0 inch SPAD sensor that reduces noise, making possible clear, full-color HD imaging even in extreme low-light environments. When paired with Canon's extensive range of broadcast lenses, which excel at super-telephoto image capture, the camera can capture subjects accurately in extreme low-light environments, even from great distances. For example, the camera may be used for nighttime monitoring of seaports: its ability to spot vessels several km away enables identification and high-precision monitoring of vessels in or around the seaport.

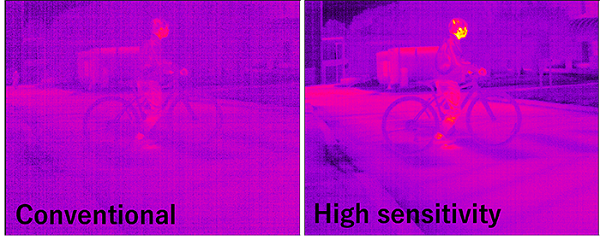

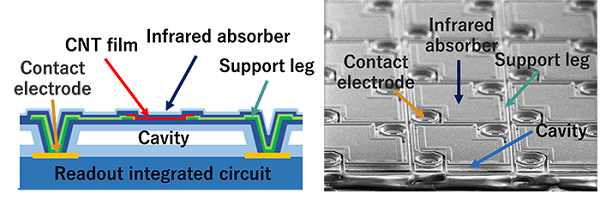

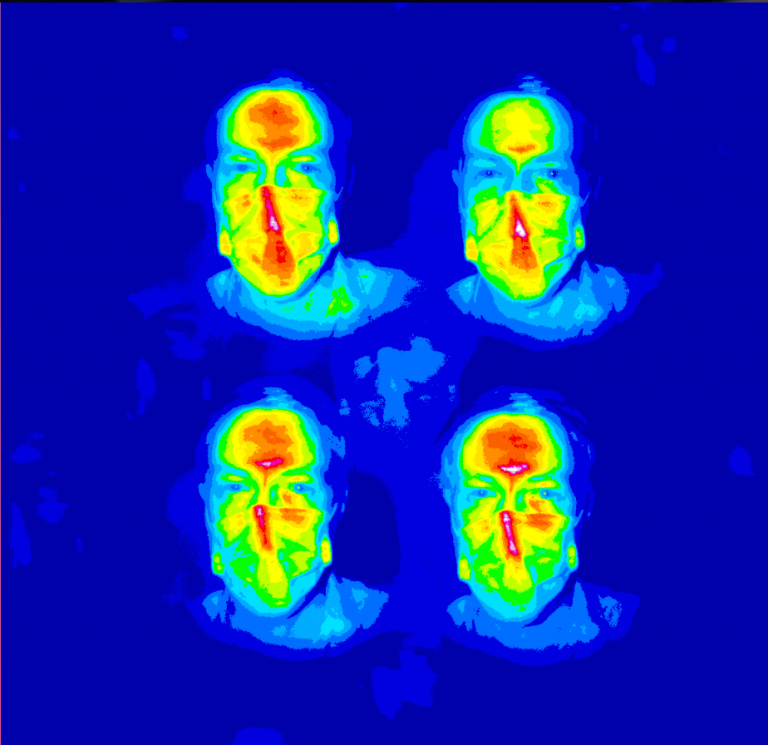

With CMOS sensors, which are commonly used in conventional digital cameras, each pixel measures the amount of light that reaches it within a given time. However, because of the process by which the accumulated light is measured, the readout of the accumulated electronic charge contains electronic noise. This degrades the resulting image, particularly in low-light environments. SPAD sensors, meanwhile, employ a technology known as "photon counting", in which the light particles (photons) that enter each individual pixel are counted. When even a single photon enters a pixel, it is instantly amplified approximately 1 million times and output as an electrical signal. Every one of these photons can be digitally counted, which makes noise-free signal readout possible, a key advantage of SPAD sensors⁴. Because of this technological advantage, the MS-500 is able to operate even in nighttime environments with no ambient starlight⁵, and is also capable of accurately detecting subjects with minimal illumination and capturing clear color images.
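The difference between the two readout approaches can be illustrated with a small simulation. The sketch below is not Canon's model; it assumes photon arrivals follow a Poisson distribution, represents accumulate-and-read readout as the true count plus Gaussian read noise, and treats photon counting as reading the true count exactly. The function names, the mean of 2 photons per pixel and the read noise of 3 electrons are hypothetical values chosen for illustration, and real SPAD effects such as dark counts and detection efficiency are ignored.

```python
import math
import random
import statistics

def sample_photons(mean_photons, rng):
    """Draw a Poisson-distributed photon count (Knuth's method, fine for small means)."""
    threshold = math.exp(-mean_photons)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count

def simulate(mean_photons=2.0, read_noise_e=3.0, n_pixels=20000, seed=0):
    """Return (SNR with analog read noise, SNR with digital photon counting)."""
    rng = random.Random(seed)
    photons = [sample_photons(mean_photons, rng) for _ in range(n_pixels)]
    # Accumulate-and-read model: readout adds Gaussian noise to each pixel value.
    # read_noise_e is an assumed figure, not a specification of any real sensor.
    accumulated = [p + rng.gauss(0.0, read_noise_e) for p in photons]
    # Photon-counting model: each detection is a digital event, so readout adds nothing.
    counted = photons

    def snr(values):
        return statistics.fmean(values) / statistics.pstdev(values)

    return snr(accumulated), snr(counted)

snr_accumulated, snr_counted = simulate()
print(f"accumulate-and-read SNR: {snr_accumulated:.2f}")
print(f"photon-counting SNR:     {snr_counted:.2f}")
```

Under these assumptions, the photon-counting result is limited only by the statistical fluctuation of the light itself, while the accumulate-and-read result is further reduced by the added read noise. The gap widens as the number of photons per pixel falls, which is why the distinction matters most in low light.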

The MS-500 employs the bayonet lens mount (based on BTA S-1005B standards) that is widely used in the broadcast lens industry. This enables the camera to be used with Canon's extensive range of broadcast lenses, which feature superb optical performance. As a result, the camera is able to recognize and capture subjects that are several km away.

Going forward, Canon will continue to pursue R&D and create products capable of surpassing the limits of the human eye while contributing to the safety and security of society by leveraging its long history of comprehensive imaging technologies that include optics, sensors, image processing and image analysis.

Canon plans to commence sales of the MS-500 in 2023.

Reference

The MS-500 will be displayed as a reference exhibit at the Canon booth during the 2023 NAB Show for broadcast and filmmaking equipment, to be held in Las Vegas from Saturday, April 15 to Wednesday, April 19.

¹ Among color cameras. As of April 2, 2023. Based on Canon research.

² Among SPAD sensors for imaging use. As of April 2, 2023. Based on Canon research.

³ Total pixel count: 3.2 million pixels. Effective pixel count: 2.1 million pixels.

⁴ For more information on how SPAD sensors operate and how they differ from CMOS sensors, please visit the following website: https://global.canon/en/technology/spad-sensor-2021.html

⁵ Ambient starlight is equivalent to approximately 0.02 lux. A nighttime environment with no ambient starlight is equivalent to approximately 0.007 lux.