Semiconductor Engineering published an article on dealing with noise in CMOS image sensors: https://semiengineering.com/dealing-with-noise-in-image-sensors/

Dealing With Noise In Image Sensors

The expanding use and importance of image sensors in safety-critical applications such as automotive and medical devices has transformed noise from an annoyance into a life-threatening problem that requires a real-time solution.

In consumer cameras, noise typically results in grainy images, often associated with poor lighting, the speed at which an image is captured, or a faulty sensor. Typically, the image can be cleaned up afterward, for example by reducing glare in a selfie. But in cars, glare in an ADAS imaging system can affect how quickly the brakes are applied. And in vehicles or medical devices, systems are so complex that external factors such as heat, electromagnetic interference, and vibration can degrade images. This can be particularly problematic in AI-enabled computer vision systems, where massive amounts of data must be processed at extremely high speeds. All of this can be further compounded by aging circuits, whether from dielectric breakdown or from changes in signal paths caused by electromigration.

Thresholds for noise tolerance vary by application. “A simple motion-activated security camera or animal-motion detection system at a zoo can tolerate much more noise and operate at much lower resolution than a CT scanner or MRI system used in life-saving medical contexts,” said Brad Jolly, senior applications engineer at Keysight. “[Noise] can mean anything that produces errors in a component or system that acquires any form of image, including visible light, thermal, X-ray, radio frequency (RF), and microwave.”

Tolerance is also determined by human perception, explained Andreas Suess, senior manager for novel image sensor systems in OmniVision’s Office of the CTO. “Humans perceive an image as pleasing with a signal-to-noise ratio (SNR) of >20dB, ideally >40dB. But objects can often be seen at low SNR levels of 1dB or less. For computational imaging, in order to deduce what noise level can be accepted one needs to be aware of their application-level quality metrics and study the sensitivity of these metrics against noise carefully.”
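Image-sensor SNR is usually quoted in decibels as 20·log10 of the ratio of mean signal to noise standard deviation, both in the same units (for example, electrons). A minimal sketch, assuming that amplitude-ratio convention, converts the thresholds Suess quotes into plain ratios:

```python
import math

def snr_db(signal: float, noise_rms: float) -> float:
    """SNR in dB for an amplitude ratio: 20 * log10(signal / noise)."""
    return 20.0 * math.log10(signal / noise_rms)

def ratio_from_db(db: float) -> float:
    """Inverse of snr_db: the signal-to-noise amplitude ratio for a given dB value."""
    return 10.0 ** (db / 20.0)

for db in (1, 20, 40):
    print(f"{db:>2} dB -> signal/noise = {ratio_from_db(db):.2f}")
```

So 20 dB means the mean signal is 10 times the noise, 40 dB means 100 times, and 1 dB means the signal only barely exceeds the noise (about 1.12 times).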

Noise basics for imaging sensors

No noise is ideal, but it’s an unrealistic goal. “With an image sensor, noise is inevitable,” said Isadore Katz, senior marketing director at Siemens Digital Industries Software. “It’s when you’ve got a pixel value that’s currently out of range with respect to what you would have expected at that point. You can’t design it out of the sensor. It’s just part of the way image sensors work. The only thing you can do is post-process it away. You say to yourself, ‘That’s not the expected value. What should it have been?’”
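Katz's question, "What should it have been?", is essentially what a hot-pixel or impulse-noise filter asks. A minimal sketch of one common approach, median-based outlier replacement, assuming a grayscale frame stored as a list of lists and an arbitrary deviation threshold (both illustrative choices, not any vendor's ISP):

```python
from statistics import median

def correct_outliers(frame, threshold):
    """Return a copy of `frame` in which any pixel that differs from the median of
    its 3x3 neighborhood (excluding itself) by more than `threshold` is replaced
    by that median. Border pixels use whatever neighbors exist."""
    rows, cols = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for r in range(rows):
        for c in range(cols):
            neighbors = [
                frame[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            expected = median(neighbors)  # "what should it have been?"
            if abs(frame[r][c] - expected) > threshold:
                out[r][c] = expected
    return out

frame = [
    [10, 11, 10, 12],
    [11, 255, 10, 11],  # 255 is a hot pixel
    [10, 12, 11, 10],
]
print(correct_outliers(frame, threshold=50))
```

Only the 255 value is replaced (by 10.5, the median of its eight neighbors); ordinary pixel-to-pixel variation stays below the threshold and is left alone.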

Noise is primarily categorized as either fixed-pattern noise or temporal noise, and both help explain why engineers must cope with its inevitability. “Temporal noise is a fundamental process based on the quantization of light (photons) and charge (electrons),” said Suess. “When capturing an amount of light over a given exposure, one will observe a varying amount of photons which is known as photon shot noise, which is a fundamental noise process present in all imaging devices.” In fact, even in the absence of light, the dark signal (also known as dark current) exhibits shot noise.
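Photon shot noise follows Poisson statistics, so the variance of the collected charge equals its mean and the shot-noise-limited SNR is the square root of the signal. A minimal stdlib-only sketch checks this numerically; the Knuth Poisson sampler, the seed, and the mean signals of 100 and 400 electrons are illustrative assumptions. The helper also shows how dark-current electrons add their own shot noise:

```python
import math
import random

def poisson(lam: float, rng: random.Random) -> int:
    """Knuth's Poisson sampler; adequate for the modest means used here (lam < ~700)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def shot_limited_snr(signal_e: float, dark_e: float = 0.0) -> float:
    """SNR when the only noise is shot noise on the signal and dark electrons."""
    return signal_e / math.sqrt(signal_e + dark_e)

rng = random.Random(1234)
n = 5_000
for mean_e in (100, 400):
    samples = [poisson(mean_e, rng) for _ in range(n)]
    mu = sum(samples) / n
    var = sum((x - mu) ** 2 for x in samples) / (n - 1)
    print(f"mean={mu:.1f} var={var:.1f} measured SNR={mu / math.sqrt(var):.2f} "
          f"theory={shot_limited_snr(mean_e):.2f}")

print(f"100 e- signal + 21 e- dark: SNR={shot_limited_snr(100, 21):.2f}")
```

Quadrupling the signal only doubles the SNR, which is why shot noise cannot be designed away and dominates in low light.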

Worse, even heat alone can cause noise, which can cause difficulties for ADAS sensors under extreme conditions. “An image sensor has to work over the brightest and darkest conditions; it also has to work at -20 degrees and up to 120 degrees,” said Jayson Bethurem, vice president of marketing and business development at Flex Logix. “All CMOS sensors run slower and get noisier when it’s hotter. They run faster, a little cleaner, when it’s cold, but only up to a certain point. When it gets too cold, they start to have other negative effects. Most of these ICs self-heat when they’re running, so noise gets inserted there too. The only way to get rid of that is to filter it out digitally.”
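Bethurem's point that sensors get noisier when hot can be illustrated with dark current, which rises roughly exponentially with temperature and brings its own shot noise. A commonly quoted rule of thumb for silicon image sensors is that dark current doubles every 6 to 8 °C. The sketch below assumes a 7 °C doubling interval, a reference dark current of 10 e-/s at 25 °C, a 30 ms exposure, and that the quoted temperatures are in Celsius; all four are illustrative assumptions, not figures from the article or any datasheet. It also ignores the cold-temperature effects Bethurem mentions.

```python
import math

def dark_current(temp_c, ref_current=10.0, ref_temp_c=25.0, doubling_c=7.0):
    """Dark current in e-/s, assuming it doubles every `doubling_c` degrees C."""
    return ref_current * 2.0 ** ((temp_c - ref_temp_c) / doubling_c)

def dark_shot_noise(temp_c, exposure_s=0.030, **kwargs):
    """RMS shot noise (e-) from the dark electrons collected in one exposure."""
    return math.sqrt(dark_current(temp_c, **kwargs) * exposure_s)

for t in (-20, 25, 85, 120):
    print(f"{t:>4} C: dark current {dark_current(t):10.1f} e-/s, "
          f"dark shot noise {dark_shot_noise(t):6.2f} e- rms")
```

Under these assumptions, going from 25 °C to 120 °C multiplies dark current by more than 10,000, which is the kind of noise that then has to be filtered out digitally.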

Fixed-pattern noise stems from process non-uniformities as well as design choices, and it can cause offset, gain, or settling artifacts. It can manifest itself as pixel-to-pixel variations in quantum efficiency, offset, or gain, as well as in read noise. Mitigating fixed-pattern noise requires effort at the process, device, circuit design, and signal processing levels.
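On the signal-processing side, a classic mitigation for offset and gain fixed-pattern noise is two-point (dark-frame plus flat-field) correction. A minimal sketch, assuming each pixel is linear (raw = gain × light + offset) and that a dark frame and a uniformly lit flat frame have already been captured and averaged; the per-pixel gains, offsets, and light levels are made up for illustration:

```python
def two_point_correct(raw, dark, flat):
    """Per-pixel offset and gain correction on flat lists of pixel values.

    offset: subtract the dark frame (captured with no light).
    gain:   scale each pixel so the dark-subtracted flat frame becomes uniform
            at its own mean level.
    """
    flat_minus_dark = [f - d for f, d in zip(flat, dark)]
    target = sum(flat_minus_dark) / len(flat_minus_dark)
    return [(r - d) * target / fd for r, d, fd in zip(raw, dark, flat_minus_dark)]

# Four pixels with different (hypothetical) gains and offsets viewing the same scene.
gains = [0.9, 1.0, 1.1, 1.05]
offsets = [12.0, 8.0, 15.0, 10.0]
scene = 100.0
flat_level = 200.0
raw = [g * scene + o for g, o in zip(gains, offsets)]
dark = offsets[:]  # ideal dark frame: offset only
flat = [g * flat_level + o for g, o in zip(gains, offsets)]
print([round(v, 3) for v in two_point_correct(raw, dark, flat)])
```

Every pixel now reports the same value for the same scene, so the fixed pattern is removed. Temporal noise is untouched, and real pixels with non-linear response need more than two calibration points.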

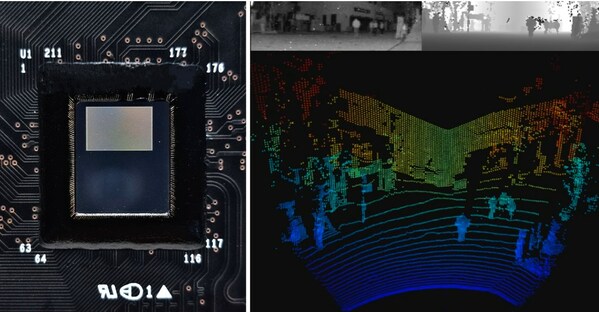

Fig. 1: Noise issues and resolution. Source: Flex Logix

In addition, noise affects both digital and analog systems. “Digital systems always start by digitizing data from some analog source, so digital systems start with all the same noise issues that analog systems do,” Jolly said. “In addition, digital systems must deal with quantization and pixelation issues, which always arise whenever some analog signal value is converted into a bit string. If the bits are then subjected to a lossy compression algorithm, this introduces additional noise. Furthermore, the increase in high-speed digital technologies such as double data rate memory (DDRx), quadrature amplitude modulation (QAM-x), non-return-to-zero (NRZ) line coding, pulse amplitude modulation (PAM), and other complex modulation schemes means that reflections and cross-channel coupling introduce noise into the system, possibly to the point of bit slipping and bit flipping. Many of these issues may be automatically handled by error correcting mechanisms within the digital protocol firmware or hardware.”
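Jolly's point about quantization can be made concrete. For an ideal uniform quantizer with step size Δ (one LSB), the rounding error is roughly uniform over ±Δ/2, so its RMS value is Δ/√12, and an ideal N-bit converter driven by a full-scale sine has an SNR of about 6.02N + 1.76 dB. A minimal sketch, assuming uniformly distributed inputs and an ideal 8-bit quantizer:

```python
import math
import random

def quantize(x: float, lsb: float) -> float:
    """Round x to the nearest multiple of lsb (ideal mid-tread quantizer)."""
    return math.floor(x / lsb + 0.5) * lsb

def ideal_adc_snr_db(bits: int) -> float:
    """SNR of an ideal N-bit ADC for a full-scale sine: 10*log10(1.5 * 4**N)."""
    return 10.0 * math.log10(1.5 * 4 ** bits)

rng = random.Random(0)
bits = 8
lsb = 1.0 / 2 ** bits  # full scale of 1.0
errors = [quantize(x, lsb) - x for x in (rng.random() for _ in range(50_000))]
rms = math.sqrt(sum(e * e for e in errors) / len(errors))
print(f"measured RMS error {rms / lsb:.4f} LSB, theory {1 / math.sqrt(12):.4f} LSB")
print(f"ideal 12-bit ADC SNR: {ideal_adc_snr_db(12):.2f} dB")
```

Each extra bit buys roughly 6 dB, but only if the analog noise ahead of the converter is already below the quantization floor.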

Noise can be introduced anywhere along the imaging chain and create a wide range of problems. “For example, the object being imaged may have shadows, occlusions, internal reflections, non-coplanarity issues, parallax, or even subtle vibrations, especially in a manufacturing environment,” Jolly explained. “In such situations, noise can complicate inspections. For example, a multi-layer circuit board being imaged with X-ray technology could have solder joint shadows if there are overlapping grid array components on the top and bottom of the board.”

Variability in the alignment between the image sensor and the subject of the image, such as rotational or translational offset or planar skew, may add further noise. Thermal gradients in the gap between the subject and the sensor can introduce noise as well, such as heat shimmer on a hot road, and so can low light and overly fast image capture.

There are other issues to consider, as well. “A lens in the imaging chain may introduce noise, including chromatic aberration, spherical aberration, and errors associated with microscopic dust or lens imperfections. The lens controls the focus, depth of field, and focal plane of the image, all of which are key aspects of image acquisition. Finally, the imaging sensing hardware itself has normal manufacturing variability and thermal responses, even when operating in its specified range. A sensor with low resolution or low dynamic range is also likely to distort an image. Power integrity issues in the lines that power the sensor may show up as noise in the image. Finally, the camera’s opto-electronic conversion function (OECF) will play a key role in image quality,” Jolly added.

External sources of noise also can include flicker, which needs to be resolved for clear vision.

Fig. 2: Flicker from LED traffic lights or traffic signs poses a serious challenge for HDR solutions, preventing driver-assistance and autonomous driving systems from being able to correctly detect lighted traffic signs. Source: OmniVision
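Flicker arises because LED signs and signals are typically driven by pulse-width modulation (PWM): the LED is fully on for a fraction of each period and off for the rest. If a short HDR sub-exposure falls entirely inside an off interval, a lit sign can appear dark. A minimal sketch, assuming a single on-pulse per period and an illustrative 90 Hz, 10% duty-cycle drive (not figures from the article), computes the worst-case on-time captured over all exposure start phases:

```python
import math

def on_time_in_window(start, exposure, period, on_time):
    """Seconds of LED 'on' time inside [start, start + exposure).
    The LED is assumed on during [k*period, k*period + on_time) for every integer k."""
    end = start + exposure
    total = 0.0
    k = math.floor(start / period)
    while k * period < end:
        on_start = k * period
        total += max(0.0, min(end, on_start + on_time) - max(start, on_start))
        k += 1
    return total

def worst_case_on_time(exposure, pwm_hz, duty, steps=1000):
    """Minimum captured on-time over `steps` evenly spaced exposure start phases."""
    period = 1.0 / pwm_hz
    on_time = duty * period
    return min(on_time_in_window(i * period / steps, exposure, period, on_time)
               for i in range(steps))

pwm_hz, duty = 90.0, 0.10
off_time = (1 - duty) / pwm_hz  # ~10 ms dark gap per period
for exposure_ms in (1, 5, 11):
    worst = worst_case_on_time(exposure_ms / 1000, pwm_hz, duty)
    print(f"{exposure_ms:>2} ms exposure: worst-case captured on-time {worst * 1000:.2f} ms")
print(f"exposures longer than {off_time * 1000:.1f} ms always overlap an on-pulse")
```

The 1 ms and 5 ms exposures can miss the LED entirely, while an exposure longer than the off interval always captures some light. That is the tension with HDR, which relies on short exposures for bright regions.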

Imaging basics for ADAS

While noise would seem to be a critical problem for ADAS sensors, given the potential for harm or damage, it's actually less of an issue than for something like a consumer camera, where out-of-range pixels can ruin an image. ADAS is not concerned with aesthetics. It focuses on a binary decision: brake or don't brake. In fact, ADAS algorithms are trained on lower-resolution images and can ignore noise that would be a product-killer in a consumer camera.

For example, to find a cat in the middle of an image, first the image is “segmented,” a process in which a bounding box is drawn around a potential object of interest. Then the image is fed into a neural net, and each bounding region is evaluated. The images are labeled, and then an algorithm can train itself to identify what’s salient. “That’s a cat. We should care about it and brake. It’s a skunk. We don’t care about it. Run it over,” said Katz. That may sound like a bad joke, but ADAS algorithms actually are trained to assign lower values to certain animals.

“It is about safety in the end, not so much ethics,” Katz said. “Even if someone does not care about moose, the car still has to brake because of the danger to the passengers. Hitting the brakes in any situation can pose a risk.” Still, cats and dogs are assigned higher values than skunks and squirrels.
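The idea of assigning different values to different object classes can be sketched as a weighted decision over detector outputs. This is a toy illustration only: the class names, priority weights, confidence scores, and threshold are all hypothetical and do not reflect how any production ADAS stack scores objects. The moose gets a high weight because, as Katz notes, hitting one endangers the passengers.

```python
from dataclasses import dataclass

# Hypothetical per-class priorities (0 = ignore, 1 = brake whenever confident).
CLASS_PRIORITY = {"pedestrian": 1.0, "moose": 1.0, "dog": 0.8, "cat": 0.8,
                  "skunk": 0.2, "squirrel": 0.1}

@dataclass
class Detection:
    label: str
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x_min, y_min, x_max, y_max) bounding box in pixels

def should_brake(detections, threshold=0.5):
    """Brake if any detection's confidence x class priority exceeds the threshold.
    Unknown classes are treated conservatively with priority 1.0."""
    return any(d.confidence * CLASS_PRIORITY.get(d.label, 1.0) > threshold
               for d in detections)

frame = [Detection("squirrel", 0.95, (300, 400, 330, 430))]
print(should_brake(frame))  # squirrel: 0.95 * 0.1 = 0.095, below threshold
frame.append(Detection("cat", 0.9, (120, 380, 180, 440)))
print(should_brake(frame))  # cat: 0.9 * 0.8 = 0.72, above threshold
```

Treating unrecognized classes as high priority is one conservative design choice; it errs toward braking when the detector is unsure what it sees.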

If an object is fully or partly occluded by another object or obscured by light flare, it will require more advanced algorithms to correctly discern what it is. After the frame is received from the camera and has gone through basic image signal processing, the image is then presented to a neural net.

“Now you’ve left the domain of image signal processing and entered the domain of computer vision, which starts with a frame or sequence of frames that have been cleaned up and are ready for presentation,” said Katz. “Then you’re going to package those frames up and send them off to an AI algorithm for training, or you’re going to take those images and then process them on a local neural net, which will start by creating bounding boxes around each of the artifacts that are inside the frame. If the AI can’t recognize an object in the frame it’s examining, it will try to recognize it in the following or preceding frames.”

In a risky situation, the automatic braking system has about 120 ms to respond, so all of this processing needs to happen within the car. In fact, there may not even be time to route data from the sensor to the car's own central processor. “Here are some numbers to think about,” said Katz. “At 65 mph, a car is moving at 95 feet per second. At 65 mph, it takes about 500 feet to come to a complete stop. So even at 32.5 mph in a car, it will travel 47 feet in 1 second. If the total round trip from sensor to AI to brake took a half-second, you would be 25 feet down the road and still need to brake. Now keep in mind that the sensor is capturing images at about 30 frames per second. So every 33 milliseconds, the AI has to make another decision.”
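Katz's figures are straightforward unit conversions (1 mph = 5,280 ft / 3,600 s ≈ 1.467 ft/s). A small sketch reproduces them and also shows how far the car travels during the roughly 120 ms response budget; the speeds and latencies come from the text, and only the helper names are hypothetical:

```python
FT_PER_MILE = 5280
S_PER_HOUR = 3600

def mph_to_ft_per_s(mph: float) -> float:
    return mph * FT_PER_MILE / S_PER_HOUR

def distance_ft(mph: float, latency_s: float) -> float:
    """Distance covered before any braking starts, for a given latency."""
    return mph_to_ft_per_s(mph) * latency_s

frame_period_ms = 1000 / 30  # ~33 ms between frames at 30 fps
for mph in (32.5, 65):
    print(f"{mph:>5} mph = {mph_to_ft_per_s(mph):5.1f} ft/s; "
          f"120 ms -> {distance_ft(mph, 0.120):4.1f} ft; "
          f"500 ms -> {distance_ft(mph, 0.5):4.1f} ft")
print(f"frame period at 30 fps: {frame_period_ms:.1f} ms; "
      f"120 ms spans {120 / frame_period_ms:.1f} frames")
```

The half-second case at 32.5 mph comes to about 24 ft, consistent with the roughly 25 ft quoted, and the 120 ms budget covers fewer than four frames at 30 fps.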

In response, companies are using high-level synthesis to develop smart sensors, in which an additional die — with all the traditional functions of an image signal processor (ISP), such as noise reduction, deblurring, and edge detection — is sandwiched directly adjacent to the sensor.

“It’s now starting to include computer vision capability, which can be algorithmic or AI-driven,” said Katz. “You’ll start to see a smart sensor that has a neural net built inside. It could even be a reprogrammable neural net, so you can make updates for the different weights and parameters as soon as it gets smarter.”

If such a scheme succeeds, a sensor could act locally, allowing for real-time decisions. It also could repackage the information to be stored and processed in the cloud or in the car for later training, improving the accuracy and speed of decision-making. In fact, many modern ISPs can already compensate dynamically for changes in image quality. “For example, if there is a sudden change from bright light to low light, or vice-versa, the ISP can detect this and change the sensor settings,” he said. “However, this feedback occurs well before the image gets to the AI and object detection phase, such that subsequent frames are cleaner going into the AI or object detection.”
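The ISP feedback described here, which detects a brightness change and adjusts sensor settings before frames reach the AI, is usually some form of auto-exposure loop. A minimal sketch, assuming a linear sensor, a mean-brightness target of 0.18 of full scale (a common mid-gray choice, used here as an assumption), hypothetical exposure limits, and a simple damped multiplicative update; it is not any vendor's algorithm:

```python
def auto_exposure_step(mean_level, exposure_ms, target=0.18, damping=0.5,
                       min_ms=0.01, max_ms=33.0):
    """Return the next exposure time.

    mean_level: mean pixel value of the last frame, normalized to [0, 1].
    A linear sensor's mean scales with exposure, so target/mean is the ideal
    correction factor; `damping` applies only part of it (in log space) to
    avoid oscillation. The result is clamped to the sensor's exposure range.
    """
    ratio = target / max(mean_level, 1e-6)
    new_ms = exposure_ms * ratio ** damping
    return min(max(new_ms, min_ms), max_ms)

def simulate(scene_brightness, exposure_ms, frames):
    """Toy scene: mean level = brightness * exposure, clipped at full scale."""
    for _ in range(frames):
        level = min(1.0, scene_brightness * exposure_ms)
        exposure_ms = auto_exposure_step(level, exposure_ms)
    return exposure_ms, min(1.0, scene_brightness * exposure_ms)

# Sudden change: exiting a tunnel into sunlight (brightness jumps 100x).
exp_ms, level = simulate(scene_brightness=0.01, exposure_ms=18.0, frames=1)
print(f"in tunnel: exposure {exp_ms:.2f} ms, level {level:.3f}")
exp_ms, level = simulate(scene_brightness=1.0, exposure_ms=exp_ms, frames=12)
print(f"in sunlight after 12 frames: exposure {exp_ms:.3f} ms, level {level:.3f}")
```

The first few frames after the transition are saturated, which illustrates why the adjustment happens in the ISP: later frames reach the object detector already corrected.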

One application that already exists is driver monitoring, which presents another crucial noise issue for designers. “The car can have the sun shining right in your face, saturating everything, or the complete opposite where it’s totally dark and the only light is emitting off your dashboard,” said Bethurem. “To build an analog sensor and the associated analog equipment to have that much dynamic range and the required level of detail, that’s where noise is a challenge, because you can’t build a sensor of that much dynamic range to be perfect. On the edges, where it’s really bright or over-saturated bright, it’s going to lose quality, which has to get made up. And those are sometimes the most dangerous times, when you want to make sure the driver is doing what they’re supposed to be doing.”
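The dynamic-range problem Bethurem describes can be quantified. Sensor dynamic range is commonly expressed as 20·log10 of the largest signal a pixel can hold (its full-well capacity) divided by the noise floor in the dark (read noise). A minimal sketch; the full-well, read-noise, and scene-contrast figures are illustrative assumptions, not values from the article:

```python
import math

def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Single-exposure dynamic range: 20*log10(full well / read noise)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)

def scene_range_db(brightest: float, darkest: float) -> float:
    """Dynamic range a scene demands, from its brightest and darkest luminances."""
    return 20.0 * math.log10(brightest / darkest)

sensor = dynamic_range_db(full_well_e=10_000, read_noise_e=2.0)
scene = scene_range_db(brightest=100_000, darkest=1.0)  # hypothetical 100,000:1 cabin scene
print(f"sensor: {sensor:.1f} dB, scene: {scene:.1f} dB, shortfall: {scene - sensor:.1f} dB")
```

The roughly 26 dB shortfall in this example is the gap that HDR techniques must close, and it shows up as lost quality at the bright and dark extremes.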

AI and noise

The challenges of noise and the increasing intelligence of sensors have also attracted the attention of the AI community.

“There are already AI systems capable of filling in occluded parts of a digital image,” said Tony Chan Carusone, CTO at Alphawave Semi. “This has obvious potential for ADAS. However, to perform this at the edge in real-time will require new dedicated processing elements to provide the immediate feedback required for safety-critical systems. This is a perfect example of an area where we can expect to see new custom silicon solutions.”

Steve Roddy, chief marketing officer at Quadric, notes that this path is already being pioneered. “Look at Android’s/Google’s ‘Magic Eraser’ functionality in phones – quickly deleting photo-bombers and other background objects and filling in the blanks. Doing the same on an automotive sensor to remove occlusions and ‘fill in the blanks’ is a known solved problem. Doing it in real time is a simple compute scaling problem. In 5nm technology today, ~10 mm² can get you a full 40 TOPS of fully programmable GPNPU capability. That’s a tiny fraction of the large (>400 mm²) ADAS chips being designed today. Thus, there’s likely to be more than sufficient programmable GPNPU compute capability to tackle these kinds of use cases.”

Analyzing noise

Analyzing noise in image sensors is a challenging and active area of research that dates back more than 50 years. The general advice from vendors is to talk to them directly to determine if their instrumentation aligns with a project’s specific needs.

“Noise is of a lot of interest to customers,” said Samad Parekh, product manager for analog/RF simulation at Synopsys. “There are many different ways of dealing with it, and some are very well understood. You can represent the noise in a closed form expression, and because of that you can very accurately predict what the noise profile is going to look like. Other mechanisms are not as well understood or are not as linear. Because those are more random, there’s a lot more effort required to characterize the noise or design with that constraint in mind.”
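As an example of the closed-form case, the temporal noise of a linear pixel is often modeled by adding independent sources in quadrature: shot noise on the signal, shot noise on the dark signal, and read noise. EMVA 1288 is built on a linear camera model of this kind. A minimal sketch with illustrative (assumed) electron counts:

```python
import math

def temporal_noise_e(signal_e, dark_e, read_noise_e):
    """Total temporal noise (e- rms) from independent sources added in quadrature:
    photon shot noise (variance = signal), dark shot noise (variance = dark),
    and read noise."""
    return math.sqrt(signal_e + dark_e + read_noise_e ** 2)

def snr(signal_e, dark_e=0.0, read_noise_e=0.0):
    return signal_e / temporal_noise_e(signal_e, dark_e, read_noise_e)

# Illustrative pixel: 5 e- dark signal per frame, 3 e- rms read noise.
for signal in (10, 100, 1_000, 10_000):
    s = snr(signal, dark_e=5.0, read_noise_e=3.0)
    print(f"{signal:>6} e-: SNR {s:7.2f} ({20 * math.log10(s):5.1f} dB), "
          f"shot-noise limit {math.sqrt(signal):7.2f}")
```

At low signal levels the read and dark terms dominate; at high levels the SNR approaches the shot-noise limit √signal. Non-linear or poorly understood mechanisms do not fit this neat form, which is where the extra characterization effort goes.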

Best practices

Keysight’s Jolly offered day-to-day advice for reducing and managing noise in image sensor projects:

- Clearly define the objectives of the sensor as part of the overall system. For example, a slow, low-resolution thermal imager or vector network analyzer may reveal information about subcutaneous or subdural disease or injury that would be invisible to a high-resolution, high-speed visible light sensor. Work with your component and module vendors to understand what noise analysis and denoising they have already done. You will learn a lot and be able to leverage a lot of excellent work that has already been accomplished. Also, consider image noise throughout the total product life cycle and use simulation tools early in your design phase to minimize issues caused by sub-optimal signal integrity or power integrity.

- Analyze the problem from the perspective of the end user. What are their objectives? What are their concerns? What skills do they possess? Can they make appropriate interventions and modifications? What is their budget? It may turn out, for example, that a fully automated system with a higher amount of noise may be more appropriate for some applications than a more complex system that can achieve much lower noise.

- Become familiar with camera, optical, and imaging standards that are available, such as ISO 9358, 12232, 12233, 14524, and 15739, as well as European Machine Vision Association (EMVA) 1288.

- Investigate the latest research on the use of higher mathematics, statistics, and artificial intelligence in de-noising. Some of these techniques include expectation maximization estimation, Bayesian estimation, linear minimum mean square error estimation, higher-order partial differential equations, and convolutional neural networks.
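Of the techniques listed, linear minimum mean square error (LMMSE) estimation is simple enough to sketch. It assumes additive, zero-mean noise of known variance and estimates the signal's mean and variance locally from a sliding window, in the spirit of the classic adaptive Wiener and Lee filters. Each sample is then pulled toward its local mean by a factor that depends on the local signal-to-noise balance. A minimal 1-D sketch; the window size, noise variance, and step-edge test signal are illustrative assumptions:

```python
import random

def lmmse_denoise(y, noise_var, half_window=3):
    """Local LMMSE estimate: x_hat = mu + g * (y - mu), g = var_x / (var_x + noise_var).

    mu and var_y are measured in a sliding window; var_x = max(var_y - noise_var, 0)
    is the estimated signal variance. Flat regions (var_x ~ 0) are smoothed toward mu,
    while edges (var_x >> noise_var) are mostly preserved.
    """
    out = []
    for i in range(len(y)):
        window = y[max(0, i - half_window): i + half_window + 1]
        mu = sum(window) / len(window)
        var_y = sum((v - mu) ** 2 for v in window) / len(window)
        var_x = max(var_y - noise_var, 0.0)
        gain = var_x / (var_x + noise_var) if noise_var > 0 else 1.0
        out.append(mu + gain * (y[i] - mu))
    return out

def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

rng = random.Random(42)
clean = [0.0] * 50 + [10.0] * 50  # a step edge
sigma = 1.0
noisy = [c + rng.gauss(0.0, sigma) for c in clean]
denoised = lmmse_denoise(noisy, noise_var=sigma ** 2)
print(f"MSE noisy: {mse(noisy, clean):.3f}  MSE denoised: {mse(denoised, clean):.3f}")
```

The same formula extends to 2-D windows on images; CNN-based denoisers replace the hand-built local statistics with learned ones.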

Future approaches

While current ADAS systems may tolerate more noise than other forms of imaging, that may not be the case in the future. A greater variety of use cases will push image sensors towards higher resolutions, which in turn will require more localized processing and noise reduction.

“A lot of the image processing in the past was VGA, but applications like internal cabin monitoring, such as eye-tracking the driver and passengers to recognize what’s going on inside the cabin (including monitoring driver alertness or whether someone got left behind in the backseat), are going to start to drive us towards higher-resolution images,” Katz said. “In turn, that’s going to start to mandate increasing levels of noise reduction, dealing with image obstructions, and with being able to process a lot more data locally. When you go from VGA to 720 to 1080 up to 4K, you’re increasing the number of pixels you have to operate with by 4X. Every one of these demands more and more localized processing. That’s where we’ll end up going.”
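For reference, the common frame sizes behind these labels are 640×480 (VGA), 1280×720 (720p), 1920×1080 (1080p), and 3840×2160 (4K UHD). A quick calculation shows that the pixel count grows by roughly 2x to 4x at each step, and by about 27x from VGA to 4K:

```python
RESOLUTIONS = {
    "VGA": (640, 480),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}

previous = None
for name, (w, h) in RESOLUTIONS.items():
    pixels = w * h
    step = f"{pixels / previous:.2f}x previous" if previous else "baseline"
    print(f"{name:>6}: {pixels:>9,} pixels ({step})")
    previous = pixels
print(f"VGA to 4K UHD: {3840 * 2160 / (640 * 480):.0f}x")
```

At a fixed frame rate, per-frame noise reduction and inference work scale at least with these pixel counts, which is the pressure toward local processing.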