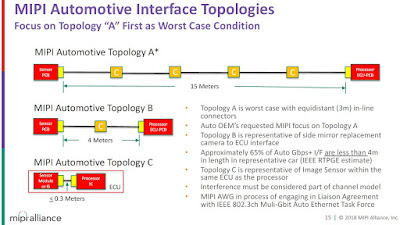

MIPI publishes a Sony presentation on the challenges of automotive applications. Quite a lot of changes are needed in the MIPI link, including support for 15m-long cables:

Monday, April 30, 2018

Sunday, April 29, 2018

Heimann Thermopile Sensor Reverse Engineered

SystemPlus publishes a reverse engineering report on the Heimann 32 x 32-pixel thermopile LWIR sensor with a silicon lens:

"A low-definition, 32 x 32 thermopile sensor, Heimann Sensor’s HTPA32x32d is... cheaper than a microbolometer and easier to integrate, the thermopile offers very good performance for applications that do not require high-resolution images and a high frame rate.

The thermopile array sensor consists only of a 0.5cm³ camera (with lens). The system is made easy for integrators with a digital I²C interface, and includes for the first time a silicon lens for low-cost applications. The 32 x 32 array sensor uses a 90µm pixel based on a thermopile technology for a very compact design."

Innoviz LiDAR Adopted in BMW-Magna Platform

Globenewswire: Magna and Innoviz will supply the BMW Group with solid-state LiDAR for upcoming autonomous vehicle production platforms. This deal is said to be one of the first in the auto industry to include solid-state LiDAR for serial production. This solid-state LiDAR is said to be able to generate a 3D point cloud in real time even in challenging settings such as direct sunlight, varying weather conditions and multi-LiDAR environments.

Saturday, April 28, 2018

Friday, April 27, 2018

Sony Forecasts Decline in CIS Business Profits

Sony reports its results for fiscal year 2017, which ended on March 31, 2018. While the past year's results are very good, the forecast is less so: profits are expected to decline due to "Increase in depreciation and amortization expenses as well as in research and development expenses:"

The SeekingAlpha earnings call transcript gives a few more details on the forecast:

"The rate of growth in demand for image sensors is likely to decline in the short term due to saturation of the smartphone market. But over the medium to long-term we expect further growth to come from expansion of new applications such as 3D sensing, security, factory automation and automotive.

So concerning the increase in capacity of image sensors we will watch the supply and demand situation.

And the forecast of a semiconductor business in fiscal 2018 and improvement of product mix. They immediately - the spread of dual camera on smartphone. The pace is slower than we initially expected, but the sensing demand increase is faster than we thought. And so for fiscal ‘18 we will continue to expand the sales of high end image sensors and at the same time work on the implement our profitability.

In other words, we will come up with the high value added product where we can secure the high margin. And at the same time work on the technology development for the new applications such as autos and sensing.

And another point if they are there the plans for investment for the future, because on your R&D expense the last year 450 billion yen, this year 470 billion yen, increase of about 20 billion yen in investment."

CCDs Get One More Customer

Merck launches its new CellStream benchtop flow cytometry system that uses a camera for detection. Its unique optics system and design provide researchers with unparalleled sensitivity and flexibility when analyzing cells and submicron particles.

The CellStream system’s Amnis time-delay integration (TDI) camera technology rapidly captures low-resolution cell images and converts them to high-throughput intensity data with enhanced fluorescence sensitivity.

"The custom camera within CellStream flow cytometers operates using this TDI technique, whereby a specialized detector readout mode preserves sensitivity and image quality, even with fast relative movement between the detector and the objects being imaged. The TDI detection technology of the CCD camera allows up to 1000 times more signal to be acquired from cells in flow than from conventional frame imaging approaches. Velocity detection and autofocus systems maintain proper camera synchronization and focus during the process of image acquisition."

Thursday, April 26, 2018

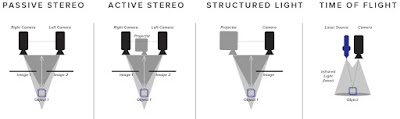

Review of 3D Cameras for AR Glasses

Daqri smart glasses rely on an array of cameras, including 3D Intel RealSense:

The company's Chief Scientist Daniel Wagner publishes a nice overview of 3D camera technologies together with AR glasses requirements for a depth camera:

"First, sensors need to be very small in order to integrate into headsets of comparably restricted size. For AR headsets, small can be defined as “mobile phone class sensors” in size, e.g., a camera module no more than 5mm thick.

Second, the depth camera should use as little power as possible, ideally something noticeably lower than 500 mW, since the overall heat dissipation capability of the average headset is just a few watts.

Third, in order to further save power, the depth camera should not require intensive processing of the sensor output since that would result in further power consumption.

For environmental scanning, the depth camera needs to see as far as possible — in practice roughly a range of around 60 cm to 5 meters.

In contrast, user input needs to work at only arm’s length, hence a range of around 20 to 100 cm.

Lastly, there is the matter of calibration. As automatic built-in self-calibration is not yet available, they rely on the factory calibration to remain valid over their lifetime, which can be a problem."

Are All Sony Sensors Created Equal?

Basler publishes a nice white paper "Sensor Comparison: Are all IMXs equal?" comparing various Sony sensors in different families:

"EMVA1288 standard offers the measured value of the “absolute threshold value for sensitivity”. It states the average number of required photons so that the signal to noise ratio is exactly 1."
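
The threshold described in the quote can be illustrated with a small calculation. The sketch below is not taken from the Basler paper: it assumes the simplified linear camera model in which noise consists only of dark noise and photon shot noise (quantization noise is ignored), and the quantum efficiency and dark noise values are hypothetical.

```python
import math

def snr(mu_p, eta, sigma_d):
    """SNR under a simplified linear camera model (assumption: no quantization noise).

    mu_p    -- mean number of photons hitting a pixel during exposure
    eta     -- quantum efficiency (electrons per photon)
    sigma_d -- temporal dark noise in electrons
    """
    signal = eta * mu_p  # mean number of photo-electrons
    return signal / math.sqrt(sigma_d ** 2 + signal)

def absolute_sensitivity_threshold(eta, sigma_d):
    """Mean photon count at which snr() equals exactly 1.

    With s = eta * mu_p, SNR = 1 means s^2 - s - sigma_d^2 = 0;
    the positive root of that quadratic is used.
    """
    s = 0.5 * (1.0 + math.sqrt(1.0 + 4.0 * sigma_d ** 2))
    return s / eta

# Hypothetical sensor parameters, for illustration only.
eta, sigma_d = 0.6, 2.0
mu_min = absolute_sensitivity_threshold(eta, sigma_d)
print(f"threshold: {mu_min:.2f} photons, SNR there: {snr(mu_min, eta, sigma_d):.3f}")
```

Under this model, lower dark noise or higher quantum efficiency both reduce the threshold, which is why the figure is convenient for comparing low-light performance across sensors.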

4 Generations of Camera Module Testing

Pamtek publishes a video showing the four generations of its automated camera module testing machines:

Wednesday, April 25, 2018

High Speed SERDES Technology Enables High Frame Rates, Potentially

EETimes: With the emergence of 112 Gbps per lane SERDES technology and wide adoption of 56 Gbps per lane, 12.8 Tbps single-chip switches from different companies have reached the market. This enables a data infrastructure for high frame rate and high resolution imaging systems. For instance, an 8K video with 16b per pixel can be transferred at more than 24,000 fps through this data pipe. Now, once the data transfer technology is ready and the wafer stacking technology is mature, we could design image sensors supporting this speed and find an application for them. Or, maybe, find the application first.
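
The frame rate figure above can be checked with simple arithmetic. This is a rough sketch that assumes "8K" means 7680 x 4320 pixels and that the whole 12.8 Tbps carries pixel data with no protocol or encoding overhead; neither assumption comes from the EETimes article.

```python
# Back-of-the-envelope check of the achievable frame rate.
# Assumptions: 8K = 7680 x 4320 pixels, zero link overhead.

SWITCH_BANDWIDTH_BPS = 12.8e12  # 12.8 Tbps single-chip switch
WIDTH, HEIGHT = 7680, 4320      # assumed 8K UHD frame size
BITS_PER_PIXEL = 16

def max_frame_rate(bandwidth_bps, width, height, bits_per_pixel):
    """Upper bound on frames per second for uncompressed video."""
    bits_per_frame = width * height * bits_per_pixel
    return bandwidth_bps / bits_per_frame

fps = max_frame_rate(SWITCH_BANDWIDTH_BPS, WIDTH, HEIGHT, BITS_PER_PIXEL)
print(f"Upper bound: {fps:,.0f} fps")
```

Any real link would land below this bound once encoding and protocol overhead are included.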

Technavio Forecasts Automotive CIS Cost Reductions

BusinessWire: Technavio's global automotive image sensors market report talks about a number of recent trends:

"The global automotive image sensors market has witnessed a reduction in the cost of image sensors. The adoption of image sensors in consumer electronics and smartphones has allowed image sensor manufacturers to experience economies of scale, which further resulted in price reduction. The automotive industry did not benefit just from the reduction in cost but also by improved performance and picture quality.

One of the key trends impacting the growth of the market is the development of high-sensitivity CMOS image sensor with LED flicker mitigation."

ST Reports Weak Sales of Imaging Products for Smartphones

SeekingAlpha publishes the ST Q1 2018 earnings call transcript. The company does not sound happy about its imaging business in smartphones:

"On a sequential basis, AMS [Analog, MEMS and Sensors Group] revenues decreased by 27.4%, principally reflecting the negative impact of smartphone applications to our Imaging business...

As we already anticipated, and now this is well-known by the industry, the second quarter is another quarter of weak sales in smartphones, particularly for our Imaging business."

Tuesday, April 24, 2018

Sony Stacked Vision Chip Paper

MDPI Special Issue on the 2017 International Image Sensor Workshop keeps publishing papers presented at the workshop. Sony paper "Design and Performance of a 1 ms High-Speed Vision Chip with 3D-Stacked 140 GOPS Column-Parallel PEs" by Atsushi Nose, Tomohiro Yamazaki, Hironobu Katayama, Shuji Uehara, Masatsugu Kobayashi, Sayaka Shida, Masaki Odahara, Kenichi Takamiya, Shizunori Matsumoto, Leo Miyashita, Yoshihiro Watanabe, Takashi Izawa, Yoshinori Muramatsu, Yoshikazu Nitta, and Masatoshi Ishikawa presents:

"We have developed a high-speed vision chip using 3D stacking technology to address the increasing demand for high-speed vision chips in diverse applications. The chip comprises a 1/3.2-inch, 1.27 Mpixel, 500 fps (0.31 Mpixel, 1000 fps, 2 × 2 binning) vision chip with 3D-stacked column-parallel Analog-to-Digital Converters (ADCs) and 140 Giga Operation per Second (GOPS) programmable Single Instruction Multiple Data (SIMD) column-parallel PEs for new sensing applications. The 3D-stacked structure and column parallel processing architecture achieve high sensitivity, high resolution, and high-accuracy object positioning."
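
To put the 140 GOPS figure in perspective, one can estimate how many operations are available per pixel per frame. This is only a rough upper bound: it assumes the full PE throughput is spent evenly on every pixel of every frame, which the abstract does not state.

```python
# Rough per-pixel compute budget from the figures in the abstract.
# Assumption: all 140 GOPS are spread evenly over every pixel of every frame.

PE_THROUGHPUT_OPS = 140e9  # 140 GOPS

# mode name -> (pixels per frame, frames per second)
MODES = {
    "full resolution": (1.27e6, 500),
    "2x2 binning": (0.31e6, 1000),
}

def ops_per_pixel(throughput_ops, pixels, fps):
    """Operations available per pixel per frame."""
    return throughput_ops / (pixels * fps)

budget = {
    name: ops_per_pixel(PE_THROUGHPUT_OPS, pixels, fps)
    for name, (pixels, fps) in MODES.items()
}
for name, ops in budget.items():
    print(f"{name}: about {ops:.0f} operations per pixel per frame")
```

A budget of a few hundred operations per pixel is consistent with running simple per-frame tasks, such as the object positioning mentioned in the abstract, on the chip itself.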

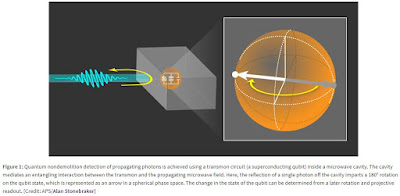

Nondestructive Photon Detection

APS Physics publishes Washington University article "Viewpoint: Single Microwave Photons Spotted on the Rebound" by Kater W. Murch.

"Single optical photon detectors typically absorb an incoming photon and use that energy to generate an electrical signal, or “click,” that indicates the arrival of a single quantum of light. Such a high-precision measurement—at the quantum limit of detection—is a remarkable achievement, but the price of that click is in some cases too high, as the measurement completely destroys the photon. If the photon could be saved, then it could be measured by other detectors or entangled with other photons. Fortunately, there is a way to detect single photons without destroying them.

This quantum nondemolition photon detection was recently demonstrated in the optical domain, and now the feat has been repeated for microwaves. Two research groups—one based at the Swiss Federal Institute of Technology (ETH) in Zurich and the other at the University of Tokyo in Japan—have utilized a cavity-qubit combination to detect a single microwave photon through its reflection off the cavity."

The non-destructive optical photon detection paper was published in 2013 and described in Photonics magazine:

"Andreas Reiserer and colleagues at the Max Planck Institute of Quantum Optics have developed a device that leaves the photon untouched upon detection.

In their experiment, Reiserer, Dr. Stephan Ritter and professor Gerhard Rempe developed a cavity consisting of two highly reflecting mirrors closely facing each other. When a photon is put inside the cavity, it travels back and forth thousands of times before it is transmitted or lost, leading to strong interaction between the light particle and a rubidium atom trapped in the cavity. By reflecting the photon away from the device, the team was able to detect the photon by changing its phase rather than its energy.

The phase shift of the atomic state is detected using a well-known technique."

I'm not sure what the practical use of this is for image sensing. In theory, this opens a way to an invisible image sensor that detects and releases all the incoming photons without absorbing them.

Prophesee Event-Driven Reference Design

EETimes: Prophesee (formerly Chronocam) comes up with an event-driven sensor reference design for potential customers. The Onboard reference system contains a VGA event-driven camera integrated with Prophesee’s ASIC, Qualcomm’s quad-core Snapdragon processor running at 1.5GHz, a 6-axis Inertial Measurement Unit, and interfaces including USB 3.0, Ethernet, micro-HDMI, WiFi (802.11ac), and MIPI CSI-2:

Monday, April 23, 2018

Image Sensor Market is Greater than Lamps

IC Insights' Optoelectronic, Sensor, and Discrete (O-S-D) report gives a nice comparison of the image sensor business with others. It turns out that the world spends more on image sensing than on scene illumination:

LiDAR Patents Review

EETimes publishes Junko Yoshida's article "Who’s the Lidar IP Leader?" A few quotes:

"Pierre Cambou, activity leader for imaging and sensors at market-research firm Yole Développement (Lyon, France), said he can’t imagine a robotic vehicle without lidars.

Qualcomm, LG Innotek, Ricoh and Texas Instruments... contributions are “reducing the size of lidars” and “increasing the speed with high pulse rate” by using non-scanning technologies. Quanergy, Velodyne, Luminar and LeddarTech... focus on highly specific patented technology that leads to product assertion and its application. Active in the IP landscape are Google, Waymo, Uber, Zoox and Faraday Future. Chinese giants such as Baidu and Chery also have lidar IPs.

Notable is the emergence of lidar IP players in China. They include LeiShen, Robosense, Hesai, Bowei Sensor Tech."

Sunday, April 22, 2018

Trinamix and Andanta Company Presentations

Spectronet publishes presentations of two small German image sensor companies - Trinamix and Andanta:

As for 3D imaging, Trinamix complements its initial "chemical 3D imager" idea with a more traditional structured light approach:

Andanta too publishes some info about the company and its products:

Saturday, April 21, 2018

Stretchcam

Columbia University, Northwestern University and University of Tokyo publish a paper "Stretchcam: Zooming Using Thin, Elastic Optics" by Daniel C. Sims, Oliver Cossairt, Yonghao Yu, Shree K. Nayar:

"Stretchcam is a thin camera with a lens capable of zooming with small actuations. In our design, an elastic lens array is placed on top of a sparse, rigid array of pixels. This lens array is then stretched using a small mechanical motion in order to change the field of view of the system. We present in this paper the characterization of such a system and simulations which demonstrate the capabilities of stretchcam. We follow this with the presentation of images captured from a prototype device of the proposed design. Our prototype system is able to achieve 1.5 times zoom when the scene is only 300 mm away with only a 3% change of the lens array's original length."

Friday, April 20, 2018

IHS Markit on Under-Display Fingerprint Sensor Adoption

Thursday, April 19, 2018

Leonardo DRS Launches 10um Pixel Thermal Camera

PRNewswire: The pixel race goes on in microbolometric sensors. Leonardo DRS launches its Tenum 640 thermal imager, the first uncooled 10um pixel thermal camera core for OEMs.

The Tenum 640 thermal camera module combines small pixel structure with its sensitive vanadium oxide micro-bolometer sensor and a 640 x 512 array. It provides exceptional LWIR imaging at up to 60fps. The high-resolution LWIR camera core features image contrast enhancement, called "ICE™", and 24-bit RGB and YUV (4:2:2) output, with an NETD below 50 mK.

"The Tenum 640 represents the most advanced, uncooled and cost-effective infrared sensor design available to OEM's today," said Shawn Black, VP and GM of the Leonardo DRS EO&IS business unit. "Our market-leading innovative technologies, such as the Tenum 640, continue to enable greater affordability while delivering uncompromising thermal imaging performance for our customers."

Face Recognition Startup Raises $600m on $3b Valuation

Bloomberg, Teslarati: A 3-year-old Chinese startup, SenseTime, raises $600m from Alibaba Group, Singaporean state firm Temasek Holdings, retailer Suning.com, and other investors at a valuation of more than $3b ($4.5b, according to Reuters), becoming the world’s most valuable face recognition startup. By the way, the second largest Chinese facial-recognition start-up, Megvii, raised $460m last year.

The Qualcomm-backed company specializes in systems that analyse faces and images on an enormous scale. SenseTime turned profitable in 2017 and wants to grow its workforce to 2,000 by the end of this year. With the latest financing deal, SenseTime has doubled its valuation in a few months.
