Saturday, January 30, 2021

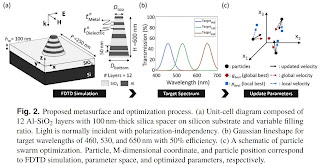

Color Filters for 0.255um Pixels

Reference for Column-Parallel Sigma-Delta ADC

Friday, January 29, 2021

Microsoft Partners with D3 Engineering, Leopard Imaging, and SICK to Deploy its ToF Technology in Industrial Applications

SmartSens Starts IPO Preparations

Thursday, January 28, 2021

Worthy Survey?

- We have conducted the first state-of-the-art comprehensive survey on CIS from an applications’ perspective in different predominant fields, which was not done before.

- A novel taxonomy has been introduced by us in which work is classified in terms of CIS models, applications, and design characteristics, as shown in Figure 1 and Appendix A Table A1.

- We have noted the limitations and future directions, and highlighted related works.

Brigates Cancels its IPO Plans

Wednesday, January 27, 2021

trinamiX Introduces Behind OLED 3D Imaging Solution

Eric Fossum, ON Semi, and Kodak Win Emmy Award

- Eric Fossum

- ON Semiconductor

- Eastman Kodak

Yole on Machine Vision Market

Gpixel Starts a Line of Charge-Domain TDI Sensors

Tuesday, January 26, 2021

ams Announces 13.8MP and 8MP Global Shutter Sensors

BusinessWire: ams introduces the CSG family of image sensors for industrial vision equipment, which achieve higher resolution at very high frame rates. The new CSG14K and CSG8K sensors are supplied in a 1” and a 1/1.1” optical format, respectively.

The CSG14K is a global shutter image sensor that combines a resolution of 13.8MP with high-speed operation: at full resolution, it captures images at up to 140fps in 10-bit mode and 93.6fps in 12-bit mode. The CSG8K achieves even higher speeds at its full resolution of 8MP: 231fps in 10-bit mode and 155fps in 12-bit mode.
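To put these frame rates in perspective, a back-of-the-envelope estimate of the raw pixel payload in each mode is simply pixels × bits per pixel × frames per second. The sketch below uses only the nominal figures quoted above. It assumes rounded megapixel counts and ignores blanking, sync codes and other sub-LVDS interface overhead, so the results are approximate. The names `MODES` and `raw_data_rate_gbps` are hypothetical helpers, not part of any ams software.

```python
# Back-of-the-envelope raw pixel payload for the ams CSG sensors.
# Assumption: rate = pixels x bits per pixel x frames per second,
# using the nominal (rounded) megapixel counts and ignoring all
# interface overhead such as blanking and sync codes.

MODES = {
    # mode name: (megapixels, bits per pixel, max frames per second)
    "CSG14K 10-bit": (13.8, 10, 140.0),
    "CSG14K 12-bit": (13.8, 12, 93.6),
    "CSG8K 10-bit": (8.0, 10, 231.0),
    "CSG8K 12-bit": (8.0, 12, 155.0),
}


def raw_data_rate_gbps(megapixels: float, bits: int, fps: float) -> float:
    """Return the raw pixel payload rate in Gbit/s (1 Gbit = 1e9 bits)."""
    return megapixels * 1e6 * bits * fps / 1e9


# Compute the estimate for every mode listed above.
rates = {name: raw_data_rate_gbps(*mode) for name, mode in MODES.items()}

if __name__ == "__main__":
    for name, rate in rates.items():
        print(f"{name}: {rate:.2f} Gbit/s")
```

By this rough measure, the CSG14K in 10-bit mode carries about 19.3 Gbit/s of pixel data, and the CSG8K in 10-bit mode about 18.5 Gbit/s.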

They are the first products to use a pixel design notable for its low noise, high sensitivity, and HDR mode.

Peter Vandersteegen, Marketing Manager of the CMOS Image Sensors business line at ams, said: “AOI is a vital part of the quality control process in modern factories. By delivering a fast frame rate and higher resolution, the CSG image sensors provide a simple way for industrial camera manufacturers to upgrade the performance of their products, and to enable their customers to raise throughput, productivity and quality – all in a standard optical format.”

The CSG sensors feature a sub-LVDS data interface like that of the ams CMV family of image sensors. Both sensors are supplied in a 20mm x 22mm LGA package, share the same footprint and pinout, and are software-compatible. The CSG14K has a 1:1 aspect ratio, and is ideal for use in C-mount, 29mm x 29mm industrial cameras. The CSG8K has a 16:9 aspect ratio, suitable for video.
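The announcement gives only nominal megapixel counts and aspect ratios, not array dimensions. Approximate dimensions follow from width × height = pixel count and width / height = aspect ratio. The sketch below is an estimate only. Because "13.8MP" and "8MP" are rounded marketing figures, the datasheet resolutions may differ somewhat. The function `approx_dimensions` is a hypothetical helper written for illustration.

```python
import math


def approx_dimensions(megapixels: float, aspect_w: int, aspect_h: int) -> tuple:
    """Estimate (width, height) in pixels from a pixel count and aspect ratio.

    Solves width * height = pixels with width / height = aspect_w / aspect_h.
    Estimate only: nominal megapixel figures are rounded.
    """
    pixels = megapixels * 1e6
    height = math.sqrt(pixels * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    return round(width), round(height)


# Nominal figures from the announcement (hypothetical estimates, not datasheet values).
csg14k = approx_dimensions(13.8, 1, 1)   # square 1:1 array
csg8k = approx_dimensions(8.0, 16, 9)    # 16:9 video-format array

if __name__ == "__main__":
    print("CSG14K approx.", csg14k)
    print("CSG8K approx.", csg8k)
```

This puts the CSG14K at roughly 3715 × 3715 pixels and the CSG8K at roughly 3771 × 2121 pixels. The 16:9 estimate sits close to the common 3840 × 2160 video frame, but the exact figures should be taken from the ams datasheets.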

The CSG14K and CSG8K sensors are available for sampling.