From GlobeNewswire: https://www.globenewswire.com/news-release/2024/05/29/2889639/8267/en/Himax-Announces-Strategic-Investment-in-Obsidian-Sensors-to-Revolutionize-Next-Gen-Thermal-Imagers.html

Himax Announces Strategic Investment in Obsidian Sensors to Revolutionize Next-Gen Thermal Imagers

TAINAN, Taiwan and SAN DIEGO, May 29, 2024 (GLOBE NEWSWIRE) -- Himax Technologies, Inc. (Nasdaq: HIMX) (“Himax” or “Company”), a leading supplier and fabless manufacturer of display drivers and other semiconductor products, today announced its strategic investment in Obsidian Sensors, Inc. (“Obsidian”), a San Diego-based thermal imaging sensor solution manufacturer. Himax led Obsidian’s convertible note financing, motivated by the potential of Obsidian’s proprietary and revolutionary high-resolution thermal sensors to dominate the market through low-cost, high-volume production capabilities. The investment amount was not disclosed. In addition to an ongoing engineering collaboration in which Obsidian leverages Himax’s IC design resources and know-how, the two companies aim to combine the advantages of Himax’s WiseEye ultralow power AI processors with Obsidian’s high-resolution thermal imaging to create an advanced thermal vision solution. This would complement Himax’s existing AI capabilities and ecosystem support, improving detection in challenging environments and boosting accuracy and reliability, thereby opening doors to a wide array of applications, including industrial, automotive safety and autonomy, and security systems. Obsidian’s proprietary thermal imaging camera solutions have already garnered attention in the industry, with notable existing investors including Qualcomm Ventures, Hyundai, Hyundai Mobis, SK Walden and Innolux.

Thermal imaging sensors offer unparalleled versatility: they can detect heat differences in total darkness, measure temperature, and identify distant objects. They are particularly well suited for a wide range of surveillance applications, especially in challenging and life-saving scenarios. Whereas prevailing thermal sensor solutions typically suffer from low resolution, high cost, and limited production volumes, Obsidian is revolutionizing the thermal imaging industry by producing high-resolution thermal sensors with its proprietary Large Area MEMS Platform (“LAMP”), which offers low-cost production at high volumes. Using large glass substrates, LAMP can produce sensors with superior resolution (VGA or higher) at volumes exceeding 100 million units per year, positioning Obsidian to drive mass-market adoption of this unrivaled technology across industries, including automotive, security, surveillance, drones, and more.

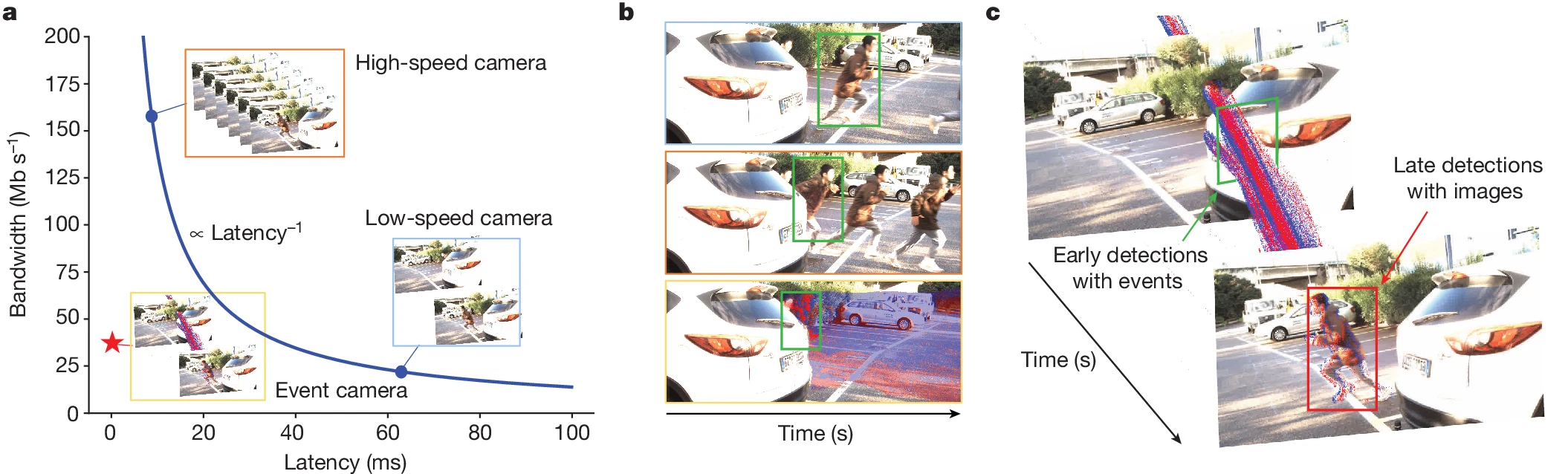

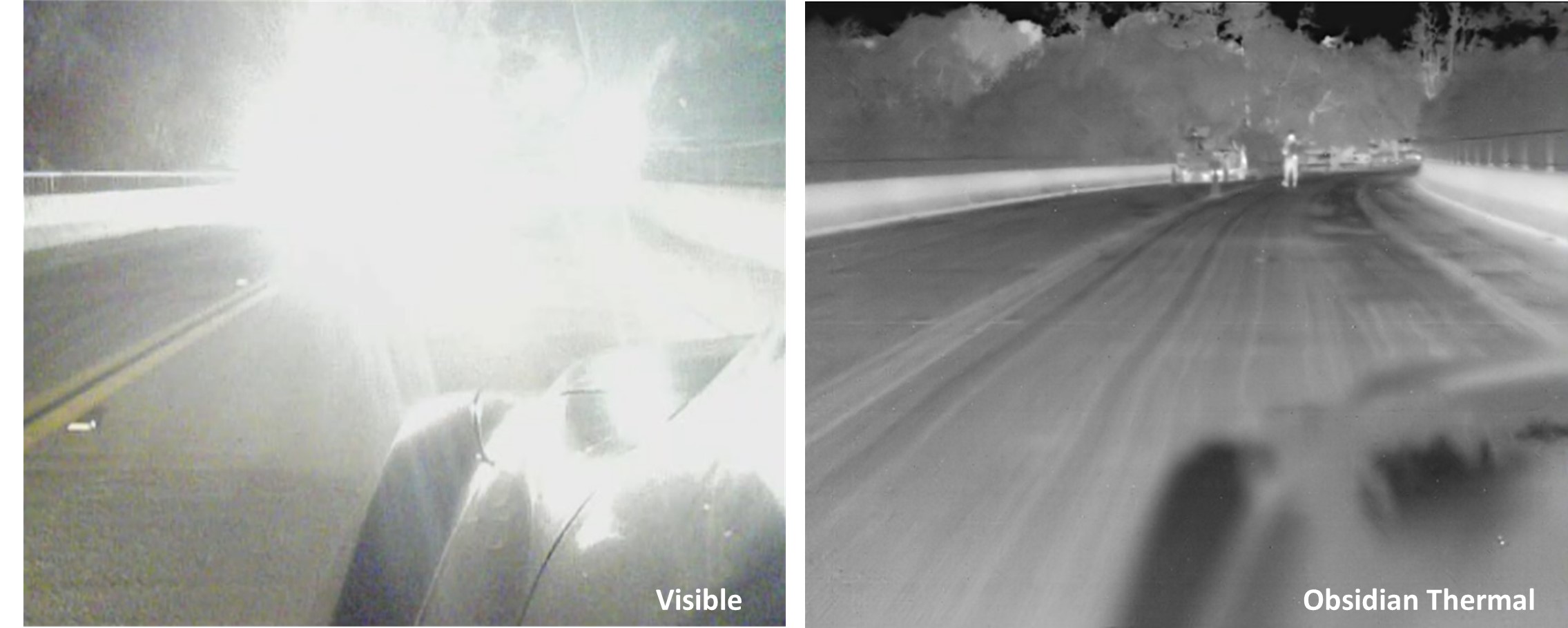

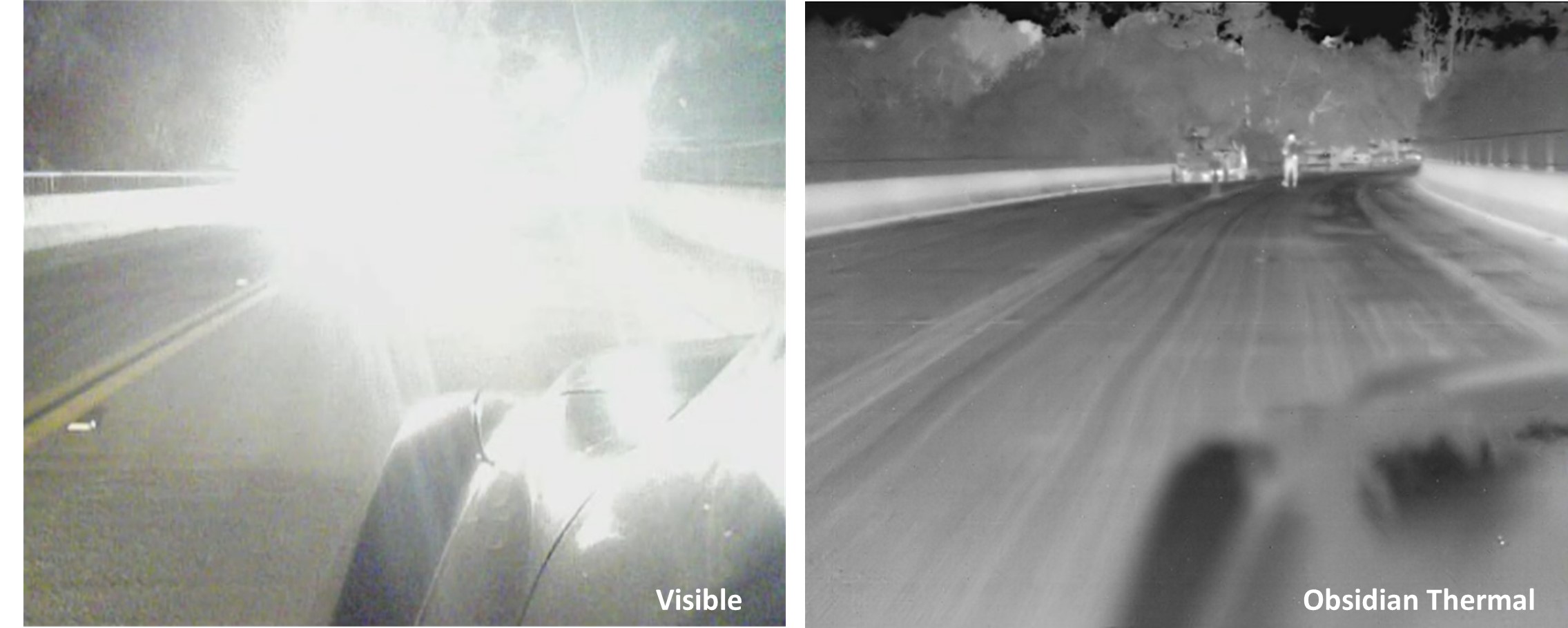

With accelerating interest in both the consumer and defense sectors, Obsidian’s groundbreaking thermal imaging sensor solutions are gaining traction in automotive applications and are poised to play a pivotal role there. Novel ADAS (Advanced Driver Assistance Systems) and AEB (Automatic Emergency Braking) systems integrated with Obsidian’s thermal sensors enable higher-resolution, clearer vision in low-light and adverse weather conditions such as fog, smoke, rain, and snow, substantially improving driving safety and security. This aligns with the final rule issued by the NHTSA (National Highway Traffic Safety Administration) on April 29, 2024, which mandates AEB, including PAEB (Pedestrian AEB) that is effective at night, as a standard feature on all new cars beginning in 2029, recognizing pedestrian safety features as essential components rather than luxury add-ons. This safety standard is expected to significantly reduce rear-end and pedestrian crashes. Traffic safety authorities in other countries are following suit with similar regulations, underscoring the trend and the significant potential demand for Obsidian’s thermal imaging sensors in the years to come.

A dangerous nighttime driving situation can be averted with a thermal camera

“We are pleased to begin our strategic partnership with Himax through this funding round and look forward to a fruitful collaboration to potentially merge our market-leading thermal imaging sensor and camera technologies with Himax’s advanced ultralow power WiseEye™ endpoint AI, leveraging each other’s domain expertise. Furthermore, progress has been made in the engineering projects for mixed-signal integrated circuits, leveraging Himax’s decades of experience in image processing. Given our disruptive cost and scale advantage, this partnership will enable us to better cater to the needs of the rapidly growing thermal imaging market,” said John Hong, CEO of Obsidian Sensors.

“We see great potential in Obsidian Sensors’ revolutionary high-resolution thermal imaging sensor. Himax’s strategic investment in Obsidian further enhances our portfolio and expands our technology reach to cover thermal sensing, which represents a great complement to our WiseEye technology, a world-leading ultralow power image sensing AI total solution. Further, we see tremendous potential for Obsidian’s technology in the automotive sector, where Himax already holds a dominant position in display semiconductors. We also anticipate additional synergies as we expand our partnership, with our combined strength and respective expertise driving future success,” said Mr. Jordan Wu, President and Chief Executive Officer of Himax.