Friday, April 30, 2021

1/f and RTS Noise Theories

Thursday, April 29, 2021

Xiaomi Phone Features Liquid Lens from Nextlens

ToF Flying Pixel Elimination

Princeton University and King Abdullah University of Science and Technology publish an arXiv.org paper "Mask-ToF: Learning Microlens Masks for Flying Pixel Correction in Time-of-Flight Imaging" by Ilya Chugunov, Seung-Hwan Baek, Qiang Fu, Wolfgang Heidrich, and Felix Heide.

Wednesday, April 28, 2021

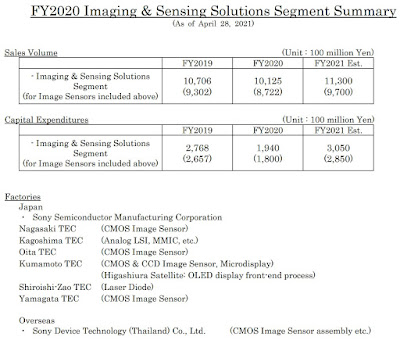

Sony Forecasts Higher Revenue but Lower Profit

- FY20 sales decreased 5% year-on-year to 1 trillion 12.5 billion yen primarily due to lower sales of image sensors for mobile.

- Operating income decreased a significant 89.7 billion yen year-on-year to 145.9 billion yen primarily due to an increase in research and development expenses and depreciation, as well as the impact of the decrease in sales.

- FY21 sales are expected to increase 12% year-on-year to 1 trillion 130 billion yen and operating income is expected to decrease 5.9 billion yen to 140 billion yen.

- In FY21, we expect that our market share on a volume basis will return to a similar level as it was in the fiscal year ended March 31, 2020, thanks to our efforts to expand our customer base in the mobile sensor business, and we will manage the business in a more proactive manner while keeping an eye on risk.

- We plan to increase research expenses in FY21 by approximately 15%, or 25 billion yen, year-on-year to expand the type of products we sell and to shift to higher value-added models from the fiscal year ending March 31, 2023 (“FY22”).

- We expect image sensor capital expenditures to be 285 billion yen, part of which was postponed from the previous fiscal year.

- We plan to shift to higher value-added products that leverage Sony’s stacked technology in preparation for an improvement in the product mix from FY22, and we will concentrate our investment on production capacity necessary to produce them.

- The other day, we held a completion ceremony for our new Fab 5 building at our Nagasaki Factory. Expansion of production capacity is progressing according to plan and we will build, expand and equip facilities in-line with the pace of expansion of our business going forward.

- Shortages of semiconductors have become an issue recently, but, with the cooperation of our partners, we have already secured enough supply of logic semiconductors used in our image sensors to cover our production plan for this fiscal year.

- However, there is a possibility that the semiconductor shortage will be prolonged, so we are accelerating the shift to higher value-added products that we have been advancing heretofore.

- We are also continuing to proactively pursue mid- to long-term initiatives in the automotive and 3D sensing areas and will explain more details at the IR Day scheduled for next month.

THz Imager Uses Photodiode for Scene Illumination (Not a Mistake, for Illumination!)

Noise in Polarization Sensors

Tuesday, April 27, 2021

iPhone 12 Pro Max Rear Camera Reverse Engineering

BBC on Smartphone Optics Innovations

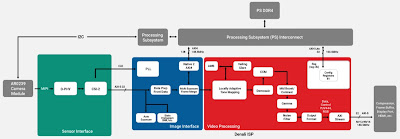

Ambarella Presentation

Monday, April 26, 2021

Counterpoint on Smartphone Camera Trends

- Higher resolution sensors

- Large pixels and sensors

- Multiple cameras

- AI processing integration

Always-On News: Qualcomm, Microsoft, Himax, Cadence

- Moderator: Jeff HENCKELS, Director, Product Management & Business Development, Qualcomm

- Peter BERNARD, Sr. Director, Silicon and Telecom, Azure Edge Devices, Platform & Services, Microsoft

- Lian Jye SU, Principal Analyst, ABI Research

- Edwin PARK, Principal Engineer, QUALCOMM Inc

- Evan PETRIDIS, Chief Product Officer, EVP of Systems Engineering, Eta Compute

- Tony CHIANG, Sr. Director of Marketing, Himax Imaging

- Optimized for always-on applications including smart sensors, AR/VR glasses and IoT/smart home devices

- 128-bit SIMD with 400 giga operations per second (GOPS) offers one-third the power and area plus 20 percent higher frequency compared to the widely deployed Vision P6 DSP

- Architecture optimized for small memory footprint and operation in low-power mode

Sunday, April 25, 2021

Column-Parallel Sigma-Delta ADC Thesis

Saturday, April 24, 2021

Time-Based SPAD HDR Imaging Claimed to be Better than Dull Photon Counting

Omron and Politecnico di Milano Develop SPAD-based Rangefinder

Friday, April 23, 2021

Characterization and Modeling of Image Sensor Helps to Achieve Lossless Image Compression

EETimes-Europe: Swiss startup Dotphoton claims to achieve 10x lossless image compression:

"Dotphoton’s Jetraw software starts before the image is created and uses the information of the image sensor’s noise performance to efficiently compress the image data. The roots of the image compression date back to the research questions of quantum physics. For example, whether effects such as quantum entanglement can be made visible for the human eye.

Bruno Sanguinetti, CTO and co-founder of Dotphoton, explained, “Experimental setups with CCD/CMOS sensors for the quantification of the entropy and the relation between signal and noise showed that even with excellent sensors, the largest part of the entropy consists of noise. With a 16-bit sensor, we typically detected 9-bit entropy, which could be referred back solely to noise, and only 1 bit that came from the signal. It is a finding from our observations that good sensors virtually ‘zoom’ into the noise.”

Dotphoton showed that, with their compression method, image files are not affected by loss of information even with compression by a factor of ten. In concrete terms, Dotphoton uses information about the sensor’s own temporal and spatial noise."
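To make the entropy remark concrete, here is a minimal sketch (not Dotphoton's method) that simulates a perfectly flat scene, so the signal itself carries no information, and measures the empirical Shannon entropy of the resulting 16-bit pixel values. The sensor parameters (10,000 e- mean signal, 2 e- read noise, 2 DN/e- gain) and the function names are assumptions chosen purely for illustration.

```python
import numpy as np

def shannon_entropy_bits(values):
    """Empirical Shannon entropy (bits per sample) of an integer array."""
    _, counts = np.unique(values, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def simulate_flat_frame(mean_electrons, read_noise_e, gain_dn_per_e, shape, rng):
    """Flat scene: Poisson shot noise plus Gaussian read noise, quantized to 16 bits."""
    electrons = rng.poisson(mean_electrons, size=shape).astype(np.float64)
    electrons += rng.normal(0.0, read_noise_e, size=shape)
    dn = np.clip(np.rint(electrons * gain_dn_per_e), 0, 65535)
    return dn.astype(np.uint16)

# Hypothetical sensor parameters, for illustration only.
rng = np.random.default_rng(0)
frame = simulate_flat_frame(
    mean_electrons=10_000.0, read_noise_e=2.0, gain_dn_per_e=2.0,
    shape=(512, 512), rng=rng,
)
noise_entropy = shannon_entropy_bits(frame)
print(f"entropy of a flat frame: {noise_entropy:.2f} bits/pixel")
```

Under these assumptions the noise standard deviation is about 200 DN, so the flat frame alone should come out at roughly 9 to 10 bits per pixel even though the scene contains no detail, which is in line with the figure quoted above. A compressor that has to preserve every one of those bits exactly has very little room to work with.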

The company's Dropbox comparison document, dated January 2020, benchmarks its DPCV algorithm against other approaches:

- per-pixel calibration and linearization. Even for high-end cameras, each pixel may have a different efficiency, offset and noise structure. Our advanced calibration method perfectly captures this information, which then allows both to correct sensor defects and to better evaluate whether an observed feature arises from signal or from noise.

- quantitatively-accurate amplitude noise reduction. Many de-noising techniques produce visually stunning results but affect the quantitative properties of an image. Our noise reduction methods, on the other hand, are targeted at scientific applications, where the quantitative properties of an image are important and where producing no artefacts is critical.

- color noise reduction using amplitude data and spectral calibration data
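The per-pixel offset and efficiency mentioned in the first item can be illustrated with textbook dark-frame and flat-field correction. This is only a sketch of that standard technique, not Dotphoton's calibration, which is not described in the source. The function names and the synthetic data are assumptions, and the gain map is assumed to be strictly positive.

```python
import numpy as np

def estimate_offset_gain(dark_frames, flat_frames):
    """Estimate per-pixel offset and relative gain from calibration stacks.

    dark_frames: stack (n, h, w) captured with no light.
    flat_frames: stack (n, h, w) captured under uniform illumination.
    """
    offset = dark_frames.mean(axis=0)
    flat = flat_frames.mean(axis=0) - offset
    gain = flat / flat.mean()  # relative efficiency, mean of 1 over the array
    return offset, gain

def correct(raw, offset, gain):
    """Remove per-pixel offset and normalize per-pixel efficiency."""
    return (raw - offset) / gain

# Synthetic, noise-free example with made-up pixel non-uniformity.
rng = np.random.default_rng(1)
true_offset = rng.uniform(90.0, 110.0, size=(4, 6))
true_gain = rng.uniform(0.9, 1.1, size=(4, 6))
dark_stack = np.repeat(true_offset[None], 8, axis=0)
flat_stack = np.repeat((true_offset + true_gain * 1000.0)[None], 8, axis=0)

offset, gain = estimate_offset_gain(dark_stack, flat_stack)
raw = true_offset + true_gain * 500.0  # uniform scene seen through the sensor
corrected = correct(raw, offset, gain)
```

After correction, a uniformly lit scene comes out uniform again. In real data the calibration stacks are noisy, so the estimates improve with the number of averaged frames.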

Xilinx Releases AI Vision Starter Kit, Pinnacle Adds HDR ISP

Demosaicing for Quad-Bayer Sensors

arXiv.org paper "Beyond Joint Demosaicking and Denoising: An Image Processing Pipeline for a Pixel-bin Image Sensor" by SMA Sharif, Rizwan Ali Naqvi, and Mithun Biswas from Rigel-IT, Bangladesh, and Sejong University, South Korea, applies a CNN to demosaic Quad-Bayer images:

"Pixel binning is considered one of the most prominent solutions to tackle the hardware limitation of smartphone cameras. Despite numerous advantages, such an image sensor has to appropriate an artefact-prone non-Bayer colour filter array (CFA) to enable the binning capability. Contrarily, performing essential image signal processing (ISP) tasks like demosaicking and denoising, explicitly with such CFA patterns, makes the reconstruction process notably complicated. In this paper, we tackle the challenges of joint demosaicing and denoising (JDD) on such an image sensor by introducing a novel learning-based method. The proposed method leverages the depth and spatial attention in a deep network. The proposed network is guided by a multi-term objective function, including two novel perceptual losses to produce visually plausible images. On top of that, we stretch the proposed image processing pipeline to comprehensively reconstruct and enhance the images captured with a smartphone camera, which uses pixel binning techniques. The experimental results illustrate that the proposed method can outperform the existing methods by a noticeable margin in qualitative and quantitative comparisons. Code available: https://github.com/sharif-apu/BJDD_CVPR21."
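For readers unfamiliar with the non-Bayer CFA the abstract refers to, the sketch below builds a Quad-Bayer pattern, samples an RGB image through it, and bins each 2x2 same-colour block into an ordinary Bayer mosaic at half resolution. It illustrates only the sensor-side layout and binning, not the paper's network. The RGGB ordering, the averaging used for binning, and the function names are assumptions.

```python
import numpy as np

def quad_bayer_pattern(height, width):
    """Channel index map (0=R, 1=G, 2=B) for a Quad-Bayer CFA.

    Each colour site of an RGGB Bayer tile (assumed ordering) is expanded
    into a 2x2 block of the same colour, giving a 4x4 repeating tile.
    """
    tile = np.array([[0, 0, 1, 1],
                     [0, 0, 1, 1],
                     [1, 1, 2, 2],
                     [1, 1, 2, 2]])
    reps = (-(-height // 4), -(-width // 4))  # ceiling division
    return np.tile(tile, reps)[:height, :width]

def mosaic(rgb, pattern):
    """Keep, at every pixel, only the channel selected by the CFA pattern."""
    h, w, _ = rgb.shape
    rows, cols = np.indices((h, w))
    return rgb[rows, cols, pattern]

def bin_2x2(raw):
    """Average each 2x2 same-colour block; height and width must be even."""
    h, w = raw.shape
    return raw.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

# Constant-colour test image: R=10, G=20, B=30 everywhere.
rgb = np.empty((8, 8, 3), dtype=np.float64)
rgb[..., 0], rgb[..., 1], rgb[..., 2] = 10.0, 20.0, 30.0

pattern = quad_bayer_pattern(8, 8)
raw = mosaic(rgb, pattern)   # 8x8 Quad-Bayer mosaic
binned = bin_2x2(raw)        # 4x4 mosaic in a plain RGGB Bayer layout
```

In binned mode the output is a regular Bayer mosaic that a conventional demosaicer can handle. The harder case the paper addresses is reconstructing a full-resolution image directly from the unbinned Quad-Bayer data, where same-colour samples are clustered rather than evenly interleaved.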