Eric Fossum published a portion of his private email exchange with ADVIS founder Mark Bocko (link):

Dear Dr. Fossum -

I read your posting in Digital Photography Review

http://forums.dpreview.com/forums/read.asp?forum=1000&message=17865314

where you made the following comments about our sigma-delta CMOS image sensor technology. I am writing to clear up a few points.

...........

>I have some information about this sensor. I don't think it has any advantages over other technology.

>1. Pixel-level sigma-delta. Every reset (delta) adds kTC noise.

You are correct, there is noise from the DAC. However the appropriate C value is the capacitance of the MOSCAP in the DAC which in our designs is much less than the photodiode capacitance (by a factor of 10 - 20) so the kTC noise is reduced proportionately.

>2. In low light, not even clear how a single pulse will be generated.

When one begins image acquisition we reset the PD to near the sigma-delta modulator threshold voltage. The gain is controlled by the DAC feedback charge, by reducing this (by controlling the voltage to which the DAC charge storage capacitor is charged) you effectively increase the gain. The sigma-delta modulator thus operates in a small range near the threshold voltage.
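To make the mechanism concrete, here is a minimal sketch of an idealised first-order, charge-domain sigma-delta pixel. It is a hypothetical model, not the ADVIS circuit: the comparator is assumed to fire when the collected charge reaches one DAC packet (`delta_e`) above the reset level, and `start_e` stands in for resetting the photodiode part of the way toward that threshold. Shrinking the DAC packet raises the count for the same light, which is the gain control described above.

```python
def sigma_delta_pixel(electrons_per_sample, delta_e, osr, start_e=0.0):
    """Idealised first-order, charge-domain sigma-delta pixel (hypothetical model).

    Each sample period the photodiode collects `electrons_per_sample`. When the
    accumulated charge reaches the comparator threshold (assumed to be `delta_e`
    above the reset level), the DAC removes one packet of `delta_e` electrons and
    the pixel emits a 1. `start_e` models resetting part of the way to threshold.
    """
    charge = start_e
    bits = []
    for _ in range(osr):
        charge += electrons_per_sample
        if charge >= delta_e:
            charge -= delta_e  # DAC feedback subtracts one charge packet
            bits.append(1)
        else:
            bits.append(0)
    return bits, charge  # bitstream and the charge left on the photodiode


def decimate(bits):
    """Simplest possible decimation filter: count the ones (a boxcar filter)."""
    return sum(bits)


# Same light, smaller DAC packet: the count doubles, i.e. the gain doubles.
for delta in (4.0, 2.0):
    bits, _ = sigma_delta_pixel(electrons_per_sample=1.0, delta_e=delta, osr=256)
    print(f"delta = {delta} e- per packet -> count = {decimate(bits)}")
```

With 0.5 e- per sample and `delta_e=4.0`, passing `start_e=3.5` makes the first 1 appear on the first sample instead of the eighth, which is the effect of resetting near the threshold. The model says nothing about noise or about scenes that change between frames.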

>3. No one has seen a low light level image from the prototype sensor that I know, incl. potential investors.

Our noise measurements are on a prototype with a small group of pixels, we currently are making images in a small imaging array 128x128. This will be coming soon.

>4. Unless the sampling rate is high, and threshold low, this sensor is going to see large lag under low light conditions.

As I said above the PD is reset to near the threshold, the lag is then how long it takes for the PD voltage to reach the threshold, but after it does reach the threshold there is no need to reset the PD again. Any lag will occur only when the image sensor is first turned on.

>5. You cannot average out shot noise. Yikes!

What we said has been misunderstood. If one does not reset the PD then of course successive measurements of the accumulated charge are correlated and cannot be averaged out; however, if one resets the PD occasionally, maybe every 30 sigma-delta samples, then the accumulated charge noise is decorrelated and may indeed be averaged out. For example, if we use an oversampling ratio of 256 we could reset the PD every 32 samples and then average together the 8 subframes. However, to assess the potential benefit of doing this one needs to know the relative size of the other sources of noise.
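The shot-noise part of this argument can be checked with a short simulation. This is a minimal sketch assuming pure Poisson shot noise (photon or dark-current electrons) and no read, reset, or quantization noise; the 400 e- total is an arbitrary choice. Splitting the exposure into 8 independent subframes, as in the example above, and averaging them gives the same SNR, about sqrt(400) = 20, as one integration of the same total charge: resetting decorrelates the subframes, but the shot noise of the total collected charge does not change. Any benefit from periodic resets would have to come from the other noise sources mentioned at the end of the reply.

```python
import math
import random


def poisson(rng, lam):
    """Poisson sample via Knuth's multiplication method (fine for the modest means used here)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def snr(samples):
    """Mean divided by the sample standard deviation."""
    mean = sum(samples) / len(samples)
    var = sum((s - mean) ** 2 for s in samples) / (len(samples) - 1)
    return mean / math.sqrt(var)


def compare(total_mean_e=400.0, n_sub=8, trials=2000, seed=0):
    """SNR of one integration vs. the average of n_sub independent subframes of equal total exposure."""
    rng = random.Random(seed)
    single = [poisson(rng, total_mean_e) for _ in range(trials)]
    averaged = [
        sum(poisson(rng, total_mean_e / n_sub) for _ in range(n_sub)) / n_sub
        for _ in range(trials)
    ]
    return snr(single), snr(averaged)


single_snr, averaged_snr = compare()
print(f"one integration:        SNR ~ {single_snr:.1f}")
print(f"average of 8 subframes: SNR ~ {averaged_snr:.1f}")
print(f"shot-noise limit sqrt(400) = {math.sqrt(400.0):.1f}")
```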

------------------------------

(Dear gentle readers - I responded to Prof. Bocko's email directly but he did not reply to me. As promised, here are some initial comments)

> >1. Pixel-level sigma-delta. Every reset (delta) adds kTC noise.

> You are correct, there is noise from the DAC. However the appropriate C value is the capacitance of the MOSCAP in the DAC which in our designs is much less than the photodiode capacitance (by a factor of 10 - 20) so the kTC noise is reduced proportionately.

As I am sure you know, the noise goes like the square root of kTC, so actually the noise does not get reduced proportionately. It is reduced only by a factor of 3 or 4 (the square root of 10 to 20). Second, the PD capacitance is going to be of the order of 10,000 e-/V, or 1.6 fF. That leaves you with about 5 e- rms kTC noise.
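The numbers above can be checked directly. A minimal sketch, assuming room temperature (300 K, not stated in the text) and treating 10,000 e-/V as the photodiode capacitance: rms kTC noise in electrons is sqrt(kTC)/q, so it scales with the square root of C. The photodiode itself comes out at about 16 e- rms, and a 10x smaller capacitor at about 5 e- rms.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C


def ktc_noise_electrons(capacitance_f, temp_k=300.0):
    """RMS reset (kTC) noise in electrons: sqrt(k*T*C) / q."""
    return math.sqrt(K_B * temp_k * capacitance_f) / Q_E


c_pd = 10_000 * Q_E  # 10,000 e-/V expressed as a capacitance: about 1.6e-15 F = 1.6 fF
for ratio in (1, 10, 20):
    c = c_pd / ratio
    print(f"C_pd/{ratio:<2d} = {c * 1e15:.2f} fF -> {ktc_noise_electrons(c):.1f} e- rms")
# Prints roughly 16.1, 5.1 and 3.6 e- rms: a 10-20x smaller C buys only sqrt(10) to sqrt(20).
```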

I have seen a slide of your timing diagram and I have to say, I am not sure that it is even correct for what you are doing. In any case it could be improved quite a bit. You could make the delta circuit kTC noise independent but that is another story.

> >2. In low light, not even clear how a single pulse will be generated.

> When one begins image acquisition we reset the PD to near the sigma-delta modulator threshold voltage. The gain is controlled by the DAC feedback charge, by reducing this (by controlling the voltage to which the DAC charge storage capacitor is charged) you effectively increase the gain. The sigma-delta modulator thus operates in a small range near the threshold voltage.

This does not help. How many electrons is each delta-reset supposed to correspond to? You claim 256 cycles and a large dynamic range. Say the dynamic range is 1000:1 (you claim 1,000,000:1). Each frame period you need to be able to take a full PD of charge out of the PD, or perhaps 20,000 e-. That requires each delta-reset to be 20,000/256, or about 80 e-. Say 10 e- is falling on the sensor per frame period. It seems like it will take another 8 frame periods to trip the comparator. This looks like it will cause lag.

Furthermore, at 1000:1 dynamic range your minimum signal is 20 e- but you need 80 to trigger the comparator. How can this work? It gets worse if we go with the 1,000,000:1 dynamic range you claim. Then you need 0.02 e- to be sensed!
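The arithmetic in the two paragraphs above, written out. As a worst case, it assumes the photodiode starts integrating from the reset level; the reply above says the PD is reset near the threshold, which would shorten the first wait but not change the packet size. 20,000/256 is 78.125 e-, which the text rounds to 80.

```python
import math


def delta_packet_e(full_well_e, osr):
    """Charge each delta-reset must remove so that a full well can be counted in one frame."""
    return full_well_e / osr


def frames_until_first_pulse(packet_e, signal_e_per_frame):
    """Frames needed to collect one packet, assuming integration starts at the reset level."""
    return math.ceil(packet_e / signal_e_per_frame)


def min_signal_e(full_well_e, dynamic_range):
    """Smallest signal implied by a given dynamic range."""
    return full_well_e / dynamic_range


FULL_WELL_E = 20_000  # the example full well used above
OSR = 256

packet = delta_packet_e(FULL_WELL_E, OSR)
print(f"charge per delta-reset: {packet:.1f} e-")
print(f"frames to first pulse at 10 e-/frame: {frames_until_first_pulse(packet, 10)}")
for dr in (1_000, 1_000_000):
    print(f"{dr:,}:1 dynamic range -> minimum signal {min_signal_e(FULL_WELL_E, dr):g} e-")
```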

> >3. No one has seen a low light level image from the prototype sensor that I know, incl. potential investors.

> Our noise measurements are on a prototype with a small group of pixels, we currently are making images in a small imaging array 128x128. This will be coming soon.

OK

> >4. Unless the sampling rate is high, and threshold low, this sensor is going to see large lag under low light conditions.

> As I said above the PD is reset to near the threshold, the lag is then how long it takes for the PD voltage to reach the threshold, but after it does reach the threshold there is no need to reset the PD again. Any lag will occur only when the image sensor is first turned on.

I think you are assuming static scenes. See the above comment regarding lag.

> >5. You cannot average out shot noise. Yikes!

> What we said has been misunderstood. If one does not reset the PD then of course successive measurements of the accumulated charge are correlated and cannot be averaged out; however, if one resets the PD occasionally, maybe every 30 sigma-delta samples, then the accumulated charge noise is decorrelated and may indeed be averaged out. For example, if we use an oversampling ratio of 256 we could reset the PD every 32 samples and then average together the 8 subframes. However, to assess the potential benefit of doing this one needs to know the relative size of the other sources of noise.

I believe that in the post, before it was deleted (I guess by your instructions) you talked about averaging out dark current shot noise. How does this work exactly?

-EF

--------------------------

>6. Decimation filter power and chip area requirements are significant.

The worst case decimation filter power is about ten times the pixel power based on the bus activity in our decimation filter architecture. Also, our prototype, which achieved less than 1 nW per pixel (at 30 fps), is in a 0.35 um CMOS process. In a 0.13 micron process this should be about 6 times lower power. So in a 0.13 um process, including the decimation filter, the sigma-delta design will consume about 1.5 - 2 nW per pixel, which is about 25 times better than the current best Micron APS designs.
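The 1.5 - 2 nW figure can be reproduced from the numbers given, under one assumption the email does not spell out: that the "ten times the pixel power" filter ratio applies to the scaled 0.13 um pixel. The 0.88 nW/pixel input is the figure from the VLSI Symposium abstract quoted further down; the other input is the "less than 1 nW" bound.

```python
def total_power_nw(pixel_power_nw, process_scale, filter_to_pixel_ratio):
    """Scaled pixel power plus a decimation filter drawing a fixed multiple of it."""
    scaled_pixel = pixel_power_nw / process_scale
    return scaled_pixel * (1 + filter_to_pixel_ratio)


for measured in (0.88, 1.0):  # nW/pixel at 0.35 um and 30 fps
    estimate = total_power_nw(measured, process_scale=6, filter_to_pixel_ratio=10)
    print(f"{measured} nW/pixel at 0.35 um -> ~{estimate:.2f} nW/pixel at 0.13 um incl. filter")
```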

>7. You need a frame buffer somewhere to implement the decimation filter.

That is correct and this is the price one has to pay for the performance gains in this approach. There's no free lunch. However, we have developed a decimation filter architecture that performs all operations on the single bit stream format of the data in which all operations may be performed by simple gates. Employing this architecture we may accumulate the image in either raw format or directly as the DCT (for JPEG) or even as a wavelet decomposition (for JPEG2000). We also may perform the color processing steps in this single bit domain, so a lot of computation may be pushed efficiently into the decimation filter.
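One reason a transform can be computed "in the single bit domain" is linearity: the DCT of the decimated counts equals the sum, over all oversampled frames, of the DCT basis values at the pixels that emitted a 1, so every update is a conditional add. The sketch below is a hypothetical 1-D illustration of that idea with a plain counter as the decimation filter, not the ADVIS architecture; random bits stand in for real modulator output, and in hardware the basis values would be fixed-point constants.

```python
import math
import random


def dct_ii_basis(n):
    """Unnormalised DCT-II basis: basis[k][x] = cos(pi * (2x + 1) * k / (2n))."""
    return [[math.cos(math.pi * (2 * x + 1) * k / (2 * n)) for x in range(n)] for k in range(n)]


def decimate_counts(bit_frames):
    """Counter (boxcar) decimation: number of 1s produced by each pixel."""
    counts = [0] * len(bit_frames[0])
    for frame in bit_frames:
        for x, bit in enumerate(frame):
            counts[x] += bit
    return counts


def dct_ii(values):
    """Reference DCT-II of already-decimated pixel values."""
    basis = dct_ii_basis(len(values))
    return [sum(b * v for b, v in zip(row, values)) for row in basis]


def accumulate_dct_from_bits(bit_frames):
    """Build DCT coefficients straight from 1-bit frames: a 1 adds a basis value, a 0 adds nothing."""
    n = len(bit_frames[0])
    basis = dct_ii_basis(n)
    coeffs = [0.0] * n
    for frame in bit_frames:
        for x, bit in enumerate(frame):
            if bit:
                for k in range(n):
                    coeffs[k] += basis[k][x]
    return coeffs


rng = random.Random(7)
frames = [[rng.randint(0, 1) for _ in range(8)] for _ in range(256)]  # 8-pixel row, OSR 256
direct = accumulate_dct_from_bits(frames)
via_counts = dct_ii(decimate_counts(frames))
print(max(abs(a - b) for a, b in zip(direct, via_counts)))  # floating-point round-off only
```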

>8. Most power in sensors is due to the readout. ADVIS conveniently ignores the readout power and pad driver power. They also don't include the decimation filter or frame buffer power.

As estimated above even with the filter the power still is much less than existing designs. We do not know the pad driver power precisely but given the opportunity to build compression into the image sensor chip the amount of data ultimately shipped off chip will be greatly reduced. Of course to read out the entire raw image one will have to pay the full power price.

>9. There are already many viable ways of doing high dynamic range imaging. The problem is not the technology, it is in the market.

The SMaL (now Cypress) approach does not have linear dynamic range, which is required for high quality imaging, and it has severe problems with DC offset variation due to the logarithmic response. The Pixim multisampling approach requires an entire second chip and consumes more power than even CCDs. I'd be happy to hear about any other high dynamic range approaches.
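Why an offset matters more in a logarithmic pixel can be shown in a few lines. A minimal sketch, assuming an ideal log response with a slope of 60 mV/decade (close to the room-temperature thermal limit of about 59.5 mV/decade; real pixels differ): an uncorrected offset turns into a percentage error in the recovered signal rather than a fixed number of electrons, so a 10 mV offset is already an error of nearly 50%.

```python
def log_pixel_gain_error(offset_mv, slope_mv_per_decade=60.0):
    """Factor by which a log pixel's recovered photocurrent is off for a given offset.

    Log pixel model: V = V0 + S * log10(I). An uncorrected offset dV in V0 is read
    back as I_est = I * 10**(dV / S): a multiplicative (gain-like) error.
    """
    return 10 ** (offset_mv / slope_mv_per_decade)


for offset in (1, 5, 10):
    error_pct = 100 * (log_pixel_gain_error(offset) - 1)
    print(f"{offset:>2} mV offset -> photocurrent off by ~{error_pct:.0f}%")
```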

>10. It seems to me that the ADVIS technology was developed by someone who doesn't understand image sensors very well. Look at his track record in imaging. Zero thus far. That would be consistent with the claims being made.

Indeed we are new to CMOS image sensors but we have much relevant experience in many other types of sensors, noise, signal processing and analog IC design. Sometimes a fresh view of a subject is a good thing.

>Hate to be brutal, but that is the way I see it.

>-Eric

There are a couple further points I would like to make.

1. The sigma-delta sensor derives its performance from speed not precision of the transistors. Thus some of the key scaling issues that limit the performance of analog image sensor designs will be ameliorated.

2. The fast oversampling readout of the sigma-delta sensor enables one to come close to a global shutter; we do not have the rolling shutter problem that APS encounters due to the speed limitations of the readout.

My colleague Zeljko Ignjatovic and I would be happy to explain our points to you further if you wish. It also would be really appreciated if you were inclined to share these clarifications with the DPReview.com list and possibly even with any of the potential investors with whom you may have had contact.

Thank you,

Mark Bocko

* Mark F. Bocko - Professor and Chair

* Department of Electrical and Computer Engineering &

* Professor, Department of Physics and Astronomy

* P.O. Box 270126

* University of Rochester

* Rochester, New York 14627 (USA)

* email: bocko@ece.rochester.edu

* Phone: (585) 275-4879

* Fax: (585) 273-4919

* http://mrl.esm.rochester.edu

* http://www.ece.rochester.edu/~sde/

------------------------------------

> >6. Decimation filter power and chip area requirements are significant.

> The worst case decimation filter power is about ten times the pixel power based on the bus activity in our decimation filter architecture. Also, our prototype, which achieved less than 1 nW per pixel (at 30 fps), is in a 0.35 um CMOS process. In a 0.13 micron process this should be about 6 times lower power. So in a 0.13 um process, including the decimation filter, the sigma-delta design will consume about 1.5 - 2 nW per pixel, which is about 25 times better than the current best Micron APS designs.

What is the chip area requirement?

> >7. You need a frame buffer somewhere to implement the decimation filter.

> That is correct and this is the price one has to pay for the performance gains in this approach. There's no free lunch. However, we have developed a decimation filter architecture that performs all operations on the single bit stream format of the data in which all operations may be performed by simple gates. Employing this architecture we may accumulate the image in either raw format or directly as the DCT (for JPEG) or even as a wavelet decomposition (for JPEG2000). We also may perform the color processing steps in this single bit domain, so a lot of computation may be pushed efficiently into the decimation filter.

I AM interested in this part. It sounds innovative and real.

> >8. Most power in sensors is due to the readout. ADVIS conveniently ignores the readout power and pad driver power. They also don't include the decimation filter or frame buffer power.

> As estimated above even with the filter the power still is much less than existing designs. We do not know the pad driver power precisely but given the opportunity to build compression into the image sensor chip the amount of data ultimately shipped off chip will be greatly reduced. Of course to read out the entire raw image one will have to pay the full power price.

I don't see how you are comparing power in your sensor to power in Micron's sensor if you don't add everything in so you can do an apples-to-apples comparison on functionality. If you just compare the pixels, and assume a bias current of a couple of microamps for the CMOS APS readout (divided among 1900 pixels, say), then you wind up with about the same number.
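The back-of-the-envelope comparison above, written out. The text gives only "a couple of microamps" and 1900 pixels per column; the 2 uA current and the 1.8 V and 2.8 V supplies below are assumptions. The result lands at roughly 2-3 nW per pixel, the same order as the 1.5 - 2 nW quoted for the sigma-delta design.

```python
def column_bias_power_per_pixel_nw(bias_current_a, supply_v, pixels_per_column):
    """Column-bias readout power shared among the pixels of one column, in nW per pixel."""
    return bias_current_a * supply_v / pixels_per_column * 1e9


for vdd in (1.8, 2.8):  # hypothetical supply voltages
    p = column_bias_power_per_pixel_nw(2e-6, vdd, 1900)
    print(f"2 uA at {vdd} V shared by 1900 pixels -> {p:.1f} nW/pixel")
```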

-----------------------

Above I posted the email I received from Prof. Bocko, part of which includes my posted comments. Later I will reply to his responses.

Hope it is clear how the exchange went thus far.

-Eric

-------------------------

Saturday, April 29, 2006

Wednesday, April 26, 2006

Image Sensor Trends Article

Don Scansen from reverse engineering company Semiconductor Insights published a market trends overview in Video/Imaging Designline.

Covering a wide range of issues, from camera-module footprint and height to price and ISP integration trade-offs, the article gives a bird's-eye view of many controversial problems in image sensor design.

Some points to note:

- a modern image sensor process is almost twice as expensive as standard CMOS

- sensor-ISP integration is less cost-optimal than baseband-ISP integration in camera-phones

- a 1MP camera-phone module costs about $7-8 to build.

Wednesday, April 19, 2006

Sony Moves High-Speed

Sony Semiconductor CX-News Vol. 43 presents CMOS sensors capable of 60fps at full resolution, with further plans to reach a few hundred frames per second. Not many details are given, apart from the co-development of a supporting DSP which is promised to "take full advantage of this performance". No word on the sensor resolution either.

One of the features is "Multiplane Addition:

Recently, the number of users who want to shoot indoors or evening/night scenes cleanly and preferably without flash has been increasing. Thus cameras that boast camera shake correction or high ISO sensitivity have appeared in the market.

These products adopt either camera shake correction functions in the lens or high-sensitivity image sensors.

However, there is another way to achieve camera shake correction and higher sensitivity (higher signal-to-noise ratio).

This method consists of continuous imaging of the entire frame at 60 frame/s, and using this increased amount of information in the time direction to create a single high-quality image. This can be implemented in a total camera system that includes a camera DSP. For example, if someone applies multiplane addition to several images that were captured at 60 frame/s, an image with a signal-to-noise ratio several times better can be acquired. Also, a high-speed camera shake correction function can be implemented if images are stacked while applying camera shake correction to each image. This function can make it possible to capture bright, camera-shake-free images even without an inlens camera shake correction function even in slightly darker environments where camera shake can easily occur, such as school festivals, children’s plays or presentations, or indoor events."
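The idea of stacking frames while correcting shake can be illustrated with a generic shift-and-add. This is a minimal sketch, not Sony's algorithm: a hypothetical 1-D scene, integer shifts estimated against the first frame by sum of absolute differences, and Gaussian noise standing in for sensor noise. With 8 aligned frames and uncorrelated noise, the noise variance drops by about 8x (SNR up by roughly sqrt(8), about 2.8).

```python
import random


def make_frames(truth, shifts, noise_sigma, seed=0):
    """Noisy copies of a 1-D scene, each circularly shifted to mimic camera shake."""
    rng = random.Random(seed)
    n = len(truth)
    return [[truth[(x - s) % n] + rng.gauss(0.0, noise_sigma) for x in range(n)] for s in shifts]


def estimate_shift(reference, frame, max_shift):
    """Integer shift s minimising the sum of absolute differences of frame[x] vs reference[x - s]."""
    n = len(reference)
    return min(
        range(-max_shift, max_shift + 1),
        key=lambda s: sum(abs(frame[x] - reference[(x - s) % n]) for x in range(n)),
    )


def shift_and_add(frames, max_shift=4):
    """Align every frame to the first one, then average the aligned frames."""
    reference = frames[0]
    n = len(reference)
    acc = [0.0] * n
    shifts = []
    for frame in frames:
        s = estimate_shift(reference, frame, max_shift)
        shifts.append(s)
        for x in range(n):
            acc[x] += frame[(x + s) % n]  # undo the estimated shift
    return [v / len(frames) for v in acc], shifts


def mse(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


scene_rng = random.Random(42)
truth = [scene_rng.uniform(0.0, 200.0) for _ in range(64)]
true_shifts = [0, 1, -2, 3, 0, -1, 2, -3]
frames = make_frames(truth, true_shifts, noise_sigma=10.0, seed=1)
stacked, found = shift_and_add(frames)
print("estimated shifts:", found)
print(f"MSE single frame: {mse(frames[0], truth):.1f}, stacked: {mse(stacked, truth):.1f}")
```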

Here is a demo of the new camera shake reduction feature:

http://www.sony.net/Products/SC-HP/cmos/cmos3.html

Flash Presentation about new high-speed image sensor features:

http://www.sony.net/Products/SC-HP/cmos/index.html

Monday, April 17, 2006

Omnivision People: Dr. Howard E. Rhodes, VP of Process Engineering

Not really news, but this was brought to my attention recently (link):

Dr. Howard E. Rhodes is Vice President of Process Engineering at OmniVision Technologies. Dr. Rhodes earned his B.S., M.S., and PhD degrees in Solid State Physics from the University of Illinois. From 1980 to 1988, Dr. Rhodes worked at Kodak Research Labs where he was in charge of process development and process integration for high speed visible and IR sensitive CCD products. In 1988, Dr. Rhodes joined Micron Technology where he was named a Principal Fellow in 1998. In 2002, Dr. Rhodes became Micron's Director of Imager Engineering, overseeing the development and process technology for all of Micron's imager products. In October 2004, Dr. Rhodes left Micron to join OmniVision as Director of Process Engineering, developing OmniVision's advanced pixel design and process technologies. In August 2005, Dr. Rhodes was promoted to Vice President of Process Engineering. Dr. Rhodes holds 149 patents and has over 15 publications. Since joining OmniVision Dr. Rhodes has filed for 18 patents. In June 2005, Dr. Rhodes received the Walter Kosonocky Award for his published work in CMOS Imaging.

Omnivision understands that CIS process development is important!

Thursday, April 13, 2006

Camera Phone Industry Report

Research In China published a 2005-2006 report on the camera-phone industry. Some interesting quotes from the report:

"The global mobile phone shipment in 2005 was 795 million units, 57% of which (about 455 million units) had the photographing function. It is predicted that 85% of the mobile phones will be camera phones by 2008 with a shipment of 800 million units; and the market scale of camera phone module will accordingly rise from $2 billion in 2005 to $4.8 billion by 2008, showing very promising prospects.

Lens manufacturers are concentrated in Taiwan, Japan and South Korea. Due to its high technology content, the lens industry has a high entry threshold. Taiwan-based enterprises have an obvious cost advantage, with a market share of 57% in 2005 that is expected to reach 65% by 2006.

The four major camera phone lens manufacturers in Taiwan are Genius, Largan, Asia Optical, and Premier, with global market shares of 23%, 25%, 5% and 4%, respectively.

With the pixel upgrade of camera phones, more and more lens manufacturers are engaged in glass lens manufacturing, while manufacturers that only have plastic lens technology are declining. Enplas, for instance, saw its sales revenue fall by 6 billion yen and its profit decline from 8.45 billion yen to 3.7 billion yen. Glass lens manufacturers have an obvious advantage especially in the 2-megapixel camera phone lens market, which optical giants like Fujinon, Konica Minolta, and Largan almost monopolize.

Market shares of major 1.3-megapixel lens manufacturers, 2005Q2:

As for the assembly industry, Flextronics has become the largest camera phone module manufacturer in the world after it acquired the CMOS image sensor department of Agilent and the image sensor testing plant of ASE; however, it does not have very efficient R&D. Altus, affiliated with Foxconn Group, has grown fast, and its market share has risen from 4% in 2004 to 9% in the second half of 2005 with a shipment of 41 million sets. Meanwhile, domestic manufacturers are declining sharply. During the first half of 2005, the operating revenue of Macat’s major businesses was 157 million Yuan, a 40% decrease year-on-year; the profit of its major businesses was 3.55 million Yuan, an 82% decrease year-on-year; and its net losses were 14 million Yuan, a 286% increase year-on-year. This is mainly caused by the loss of its major client Agilent, which turned to Flextronics."

Market shares of major camera module assembly manufacturers, second half of 2005:

Table: Relationship between CMOS sensor IC makers and wafer plants

| Manufacturer | Maximum resolution (megapixels) | Manufacturing plant |
|---|---|---|
| OmniVision | 5.17 | TSMC |
| Micron | 5.04 | Has its own wafer plant |
| Magnachip | 3.2 | Has its own wafer plant |
| ST | 2 | Has its own wafer plant |
| ESST | 1.3 | Has its own wafer plant |
| Transchip | 2 | TSMC |
| Agilent | 5 | TSMC |
| Pixelplus | 3.2 | Dongbu Anam |
| Kodak | 30 | IBM |
| Toshiba | 3.2 | Has its own wafer plant |
| Cypress | 3 | Has its own wafer plant |
| Samsung | 7 | Has its own wafer plant |
| Pixart | 1.3 | Subsidiary of UMC |
| TASC | 2.1 | TSMC |
| ElecVision | 0.3 | TSMC |
| Galaxycore | 3 | SMIC |

Tuesday, April 11, 2006

VLSI Symposium Image Sensor Papers

The 2006 VLSI Circuits Symposium on June 15-17 presents quite a few papers on image sensors:

The most interesting paper naturally came from the market leader Micron:

A High-Performance, 5-Megapixel CMOS Image Sensor for Mobile and Digital Still Camera Applications, A. Zadeh, M. Malone, A. Bahukhandi, S. Ay, P. Amini, M. Buckley, J. Gleason, Micron Technology Inc., Pasadena, CA

This is a 5 Mega-pixel CMOS imager targeting mobile and digital still camera. The imager includes 12-bit ADC, PLL, and a serializer with 650 mega-sample-per-second throughput. The imaging cell is 2.2μm, 4-way shared architecture. The chip uses two double-data-rate readout channels to perform 12 frames per second at full resolution. Its power consumption is less than 260mW and its standby current is less than 10μA. It is fabricated in 0.18μm mixed-signal CMOS process with 8.4mm x 7.9mm chip area.

If this is not a typo, this is the first announced sensor with a 2.2um pixel in a 0.18um process, quite a significant achievement. So far, a 0.15um or finer process generation has been needed to achieve such a small pixel pitch.

Another sensor might take high-end DSLR capabilities to the next level in terms of low-ISO dynamic range. However, its low-light properties remain to be seen: photogate structures usually suffer from high dark current, and it will be interesting to see how they control it. Also, a 6-inch wafer is quite a strange choice for such a large sensor.

A 76 x 77mm2, 16.85 Million Pixel CMOS APS Image Sensor, S.U. Ay, E.R. Fossum*, Micron Technology Inc., Pasadena, CA, *University of Southern California, Los Angeles, CA

A 16.85 million pixel (4,096 x 4,114), single die (76mmx77mm) CMOS active pixel sensor (APS) image sensor with 1.35Me- pixel well-depth was designed, fabricated, and tested in a 0.5μm CMOS process with a stitching option. A hybrid photodiode-photogate (HPDPG) APS pixel technology was developed. Pixel pitch was 18μm. The developed image sensor was the world’s largest single-die CMOS image sensor fabricated on a 6-inch silicon wafer.

Photron is rapidly becoming the name in very high-speed image sensors:

A 3500fps High-Speed CMOS Image Sensor with 12b Column-Parallel Cyclic A/D Converters, M. Furuta, T. Inoue*, Y. Nishikawa, S. Kawahito, Shizuoka Univ., Hamamatsu, Japan, *Photron Ltd., Tokyo, Japan

This paper presents a high-speed CMOS image sensor with a global electronic shutter and 12bit column parallel cyclic A/D converters. The fabricated chip in 0.25um CMOS imager technology achieves the full frame rate in excess of 3500 frames per second. The in-pixel charge amplifier achieves the optical sensitivity of 19.9V/lx-s with on-chip microlens.

The controversial University of Rochester sigma-delta sensor is presented too:

A 0.88nW/pixel, 99.6 dB Linear-Dynamic-Range Fully-Digital Image Sensor Employing a Pixel-Level Sigma-Delta ADC, Z. Ignjatovic, M.F. Bocko, University of Rochester, Rochester, NY

We describe a CMOS image sensor employing pixel-level sigma-delta analog to digital conversion. The design has high fill factor (31%), zero DC offset fixed pattern noise and reduced reset and transistor readout noise in comparison to other analog and digital imager readout techniques. The sigma-delta pixel design also has low power consumption: 0.88 nW/pixel at 30 fps, high dynamic range of 16 bits, intrinsic linearity, and relative insensitivity to process variations.
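The title's 99.6 dB and the abstract's "16 bits" describe the same quantity in different units. A small conversion sketch, using the 20·log10 convention for signal ratios: 99.6 dB is about 95,500:1, or roughly 16.5 bits, while a full 16 bits corresponds to 96.3 dB. For comparison, the 1,000,000:1 figure debated in the ADVIS email exchange would be 120 dB.

```python
import math


def db_to_ratio(db):
    """Dynamic range in dB (20*log10 of a signal ratio) to a linear ratio."""
    return 10 ** (db / 20)


def ratio_to_db(ratio):
    return 20 * math.log10(ratio)


def ratio_to_bits(ratio):
    return math.log2(ratio)


r = db_to_ratio(99.6)
print(f"99.6 dB     -> {r:,.0f}:1 -> {ratio_to_bits(r):.1f} bits")
print(f"16 bits     -> {ratio_to_db(2 ** 16):.1f} dB")
print(f"1,000,000:1 -> {ratio_to_db(1e6):.0f} dB")
```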

KAIST professor Euisik Yoon is a known generator of interesting ideas. This time it's a high-DR sensor:

A High Dynamic Range CMOS Image Sensor with In-Pixel Floating-Node Analog Memory for Pixel Level Integration Time Control, S.-W. Han, S.-J. Kim, J.-H. Choi*, C.-K. Kim, E. Yoon*, KAIST, Daejeon, Korea, *University of Minnesota, Minneapolis, MN

In this paper we report a high dynamic range CMOS image sensor (CIS) with in-pixel floating-node analog memory for pixel level integration time control. Each pixel has different integration time based upon the amount of its previous frame illumination. There is no significant additional hardware because we use a floating-node parasitic capacitor as an analog memory. Moreover, there is no significant sacrificing of any other CIS characteristics. In the fabricated test sensor, we could achieve the extended dynamic range by more than 42dB.
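Per-pixel integration time control can be sketched in software. This is a hypothetical illustration, not the authors' circuit: each pixel picks the longest of a set of integration times that its previous-frame signal predicts will not saturate, and the output is normalised by that time. The full well and the eight power-of-two steps are assumptions; a 128:1 span of integration times corresponds to about 42 dB of extra dynamic range, the order of the extension reported in the abstract.

```python
import math

FULL_WELL_E = 10_000.0                  # hypothetical full-well capacity
TIMES = [2.0 ** -k for k in range(8)]   # hypothetical integration times: 1 down to 1/128 of a frame


def choose_integration_time(prev_rate_e_per_frame, times, full_well_e):
    """Longest allowed integration time whose predicted charge stays below full well.

    The rate estimate comes from the previous frame; how it is stored (the paper
    uses a floating-node analog memory) is not modelled here.
    """
    for t in sorted(times, reverse=True):
        if prev_rate_e_per_frame * t < full_well_e:
            return t
    return min(times)  # brightest pixels: shortest time, may still clip


def reconstruct_rate(charge_e, t, full_well_e):
    """Normalise the (clipped) charge by the pixel's own integration time."""
    return min(charge_e, full_well_e) / t


def dr_extension_db(times):
    return 20 * math.log10(max(times) / min(times))


for rate in (500.0, 50_000.0, 1_000_000.0):  # e- per full frame time
    t = choose_integration_time(rate, TIMES, FULL_WELL_E)
    estimate = reconstruct_rate(rate * t, t, FULL_WELL_E)
    print(f"rate {rate:>11,.0f} -> t = {t:g} frame, reconstructed {estimate:,.0f}")
print(f"dynamic range extension: {dr_extension_db(TIMES):.1f} dB")
```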

The most interesting paper naturally came from the market leader Micron:

A High-Performance, 5-Megapixel CMOS Image Sensor for Mobile and Digital Still Camera Applications, A. Zadeh, M. Malone, A. Bahukhandi, S. Ay, P. Amini, M. Buckley, J. Gleason, Micron Technology Inc., Pasadena, CA

This is a 5 Mega-pixel CMOS imager targeting mobile and digital still camera. The imager includes 12-bit ADC, PLL, and a serializer with 650 mega-sample-per-second throughput. The imaging cell is 2.2μm, 4-way shared architecture. The chip uses two double-date rate readout channels to perform 12 frames per second at full resolution. Its power consumption is less than 260mW and its standby current is less tha 10μA. It is fabricated in 0.18μm mixed-signal CMOS process with 8.4mm x 7.9mm chip area.

If not a typo, this is the first announced sensor having 2.2um pixel in 0.18um process - quite a significant achievement. So far, at least 0.15um process generation has been used to achieve that small pixel pitch.

Another sensor might advance the high-end DSLR capabilities to the next level in terms of low-ISO dynamic range. However, its low light properties remain to be seen. Photogate structures usualy suffer from high dark current. It's interesting to see how they control it. Also, 6-inch wafer is quite a strange choice for that large sensor.

A 76 x 77mm2, 16.85 Million Pixel CMOS APS Image Sensor, S.U. Ay, E.R. Fossum*, Micron Technology Inc., Pasadena, CA, *University of Southern California, Los Angeles, CA

A 16.85 million pixel (4,096 x 4,114), single die (76mmx77mm) CMOS active pixel sensor (APS) image sensor with 1.35Me- pixel well-depth was designed, fabricated, and tested in a 0.5μm CMOS process with a stitching option. A hybrid photodiode-photogate (HPDPG) APS pixel technology was developed. Pixel pitch was 18μm. The developed image sensor was the world’s largest single-die CMOS image sensor fabricated on a 6-inch silicon wafer.

Photron is rapidly becoming the name in very high-speed image sensors:

A 3500fps High-Speed CMOS Image Sensor with 12b Column-Parallel Cyclic A/D Converters, M. Furuta, T. Inoue*, Y. Nishikawa, S. Kawahito, Shizuoka Univ., Hamamatsu, Japan, *Photron Ltd., Tokyo, Japan

This paper presents a high-speed CMOS image sensor with a global electronic shutter and 12bit column parallel cyclic A/D converters. The fabricated chip in 0.25um CMOS imager technology achieves the full frame rate in excess of 3500 frames per second. The in-pixel charge amplifier achieves the optical sensitivity of 19.9V/lx-s with on-chip microlens.
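A cyclic (algorithmic) ADC is compact enough to place one per column because a single stage is reused for every bit. Below is a minimal idealized sketch, assuming the simplest 1-bit-per-cycle form with a perfect x2 residue gain and an input range of [0, v_ref). Practical designs, possibly including the one in this paper, often use a redundant 1.5-bit-per-cycle variant to tolerate comparator offset, which is not modeled here.

```python
def cyclic_adc(v_in: float, v_ref: float = 1.0, bits: int = 12) -> int:
    """Idealized 1-bit-per-cycle cyclic ADC (illustrative, not the paper's circuit).

    Each cycle compares the residue with v_ref/2, emits one bit, and
    reuses the same stage to form the next residue: 2*residue - bit*v_ref.
    """
    residue = v_in
    code = 0
    for _ in range(bits):
        bit = 1 if residue >= v_ref / 2 else 0
        code = (code << 1) | bit          # MSB first
        residue = 2 * residue - bit * v_ref
    return code

# Column-parallel operation: every column converts its own sample at once.
row = [0.1, 0.3, 0.5, 0.77]
print([cyclic_adc(v) for v in row])
```

With ideal components the result equals floor(v_in / v_ref * 2^bits), so 12 conversion cycles give a 12-bit code per column.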

A controversial University of Rochester sigma-delta sensor is presented too:

A 0.88nW/pixel, 99.6 dB Linear-Dynamic-Range Fully-Digital Image Sensor Employing a Pixel-Level Sigma-Delta ADC, Z. Ignjatovic, M.F. Bocko, University of Rochester, Rochester, NY

We describe a CMOS image sensor employing pixel-level sigma-delta analog to digital conversion. The design has high fill factor (31%), zero DC offset fixed pattern noise and reduced reset and transistor readout noise in comparison to other analog and digital imager readout techniques. The sigma-delta pixel design also has low power consumption: 0.88 nW/pixel at 30 fps, high dynamic range of 16 bits, intrinsic linearity, and relative insensitivity to process variations.
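To make the pixel-level sigma-delta idea concrete, here is a minimal noise-free sketch of a first-order modulator whose output bits are counted over the frame. The parameter names and the use of a plain counter as the decimation filter are illustrative assumptions, not the published circuit. The count is roughly n_cycles x (photo charge per cycle / DAC packet), so a smaller DAC packet means higher gain, and the count saturates at n_cycles.

```python
def sigma_delta_pixel(photo_e_per_cycle: float, dac_packet_e: float,
                      n_cycles: int, threshold_e: float = 0.0) -> int:
    """Idealized first-order sigma-delta pixel without noise (hypothetical model).

    Each clock the integrating node collects photo_e_per_cycle electrons.
    When the accumulated charge exceeds threshold_e, the modulator outputs
    a 1 and the in-pixel DAC removes one packet of dac_packet_e electrons.
    Counting the 1s over the frame acts as a simple decimation filter.
    """
    integ = 0.0
    ones = 0
    for _ in range(n_cycles):
        integ += photo_e_per_cycle
        if integ > threshold_e:
            integ -= dac_packet_e
            ones += 1
    return ones

print(sigma_delta_pixel(0.25, 1.0, 1000))  # 250
print(sigma_delta_pixel(0.25, 0.5, 1000))  # 500: halving the packet doubles the gain
```

With a plain counter the number of output levels cannot exceed n_cycles + 1, so a 16-bit range would need on the order of 65,536 modulator clocks per frame. Whether the actual design uses a counter or a more elaborate decimation filter is not stated in the abstract.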

KAIST professor Euisic Yoon is a known generator of interesting ideas. This time it's a high-DR sensor:

A High Dynamic Range CMOS Image Sensor with In-Pixel Floating-Node Analog Memory for Pixel Level Integration Time Control, S.-W. Han, S.-J. Kim, J.-H. Choi*, C.-K. Kim, E. Yoon*, KAIST, Daejeon, Korea, *University of Minnesota, Minneapolis, MN

In this paper we report a high dynamic range CMOS image sensor (CIS) with in-pixel floating-node analog memory for pixel level integration time control. Each pixel has different integration time based upon the amount of its previous frame illumination. There is no significant additional hardware because we use a floating-node parasitic capacitor as an analog memory. Moreover, there is no significant sacrificing of any other CIS characteristics. In the fabricated test sensor, we could achieve the extended dynamic range by more than 42dB.
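The general idea of per-pixel integration time control can be sketched as follows. This is a hypothetical model: the set of allowed times, the selection rule, and the normalization are assumptions for illustration, and the paper's analog floating-node memory is replaced by plain numbers. A time ratio of 128 (seven binary steps) extends the dynamic range by about 42 dB; the actual time set used in the paper is not given here.

```python
import math

def choose_integration_time(prev_signal, prev_time, times, full_scale):
    """Pick this frame's integration time for one pixel (hypothetical rule).

    prev_signal / prev_time estimates the photocurrent from the previous
    frame; the longest allowed time that keeps the expected signal below
    full scale is chosen. A saturated previous frame underestimates the
    photocurrent, so a very bright pixel may need a few frames to settle.
    """
    flux = prev_signal / prev_time
    for t in sorted(times, reverse=True):
        if flux * t < full_scale:
            return t
    return min(times)

def normalized_output(signal, t, t_max):
    # Scale each pixel to the longest integration time so that pixels with
    # different times share one linear output scale.
    return signal * t_max / t

def dr_extension_db(times):
    # Dynamic range grows by the ratio of longest to shortest time.
    return 20 * math.log10(max(times) / min(times))

TIMES = [2.0 ** -k for k in range(8)]  # hypothetical: 1, 1/2, ..., 1/128 of the frame
print(round(dr_extension_db(TIMES), 2))  # 42.14
```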

Saturday, April 08, 2006

Open Discussion on ADVIS Technology

An interesting discussion of ADVIS is ongoing in the dpreview.com forums. Mark Bocko, the founder of ADVIS, responds to Eric Fossum's comments (link):

"I am the idiot (or possibly the Charlatan) to whom this comment was directed.

We never claimed to be be able to average out photon shot noise, I do not know where this came from. Any comments we have ever made about averaging out shot noise pertained to leakage current related shot noise, which most of the time is not the limiting factor anyway. I don't know how this became an issue but since it was brought up here's the explanation.

The photodiode integrates current, thus successive samples (in time) of the random charge on the photodiode are correlated and can not be averaged together to reduce the noise. However by resetting the PD periodically one destroys this correlation and the decorrelated samples may be averaged to reduce the noise. This all becomes a tradoff between the reset noise and the leakage current related shot noise."

Eric Fossum replies:

"So, given that you can't tell the difference if electrons collected by the PD are from leakage (Aka dark current) or photon generation, and given that shot noise is shot noise, how exactly will you average out the dark current shot noise if you agree you cannot average out photon shot noise?"

So far Eric's position looks much more logical to me.
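Eric's argument can be checked with a quick Monte Carlo sketch using NumPy. The mean charge per frame and the reset noise value are arbitrary illustrative assumptions. Electron arrivals are modeled as a Poisson process, whether they come from photons or from dark current. Splitting one frame into several reset-and-read sub-integrations and summing them (or averaging, which only rescales) leaves the shot noise unchanged, because a sum of independent Poisson counts is itself Poisson with the same total mean. The only thing the extra resets change is the added reset noise.

```python
import math
import numpy as np

def relative_noise(mean_e_per_frame, n_resets, reset_noise_e,
                   trials=200_000, seed=0):
    """Simulated noise/signal of the total charge collected in one frame.

    The frame is split into n_resets sub-integrations; each one gets a
    Poisson number of electrons plus Gaussian reset noise when read out.
    """
    rng = np.random.default_rng(seed)
    mean_per_sub = mean_e_per_frame / n_resets
    counts = rng.poisson(mean_per_sub, size=(trials, n_resets))
    reset = rng.normal(0.0, reset_noise_e, size=(trials, n_resets))
    total = (counts + reset).sum(axis=1)
    return total.std() / total.mean()

def theory(mean_e_per_frame, n_resets, reset_noise_e):
    # Shot noise variance = mean; each reset adds reset_noise_e**2.
    return math.sqrt(mean_e_per_frame + n_resets * reset_noise_e ** 2) / mean_e_per_frame
```

With 100 electrons per frame and no reset noise the relative noise comes out at about 0.1 (1/sqrt(100)) for any number of resets; adding 5 e- of reset noise per read makes ten resets worse than one.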

Update Apr. 13, 2006: The thread has mysteriously disappeared from the dpreview.com forums. As of today, traces of the thread are still in Google's cache, though probably not for long.

Update Apr. 14, 2006: Eric Fossum posted his remarks about the behind-the-scenes history:

"So, not so long ago there was a thread on the press release by Advis-inc (www.advis-inc.com) about their new (proposed) sensor technology.

The thread disappeared. Was it yanked for some reason? A search for "advis" yields an error.

I posted a series of comments on this technology. The principal subsequently contacted me privately with comments on my comments. I advis-ed him to post them directly himself but I don't think he ever did, and instead the thread is gone gone gone.

I am new enough to DPreview to not know if this is common or rare.

Any ideas?

I would like to post his comments and my comments on his comments, if anyone is still interested in this image sensor technology...

-Eric"

Tuesday, April 04, 2006

Fujifilm's Organic Image Sensor

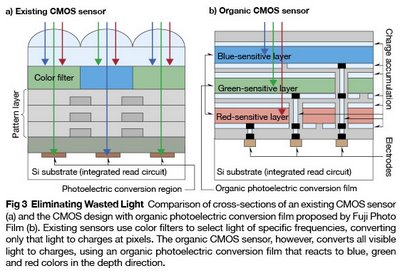

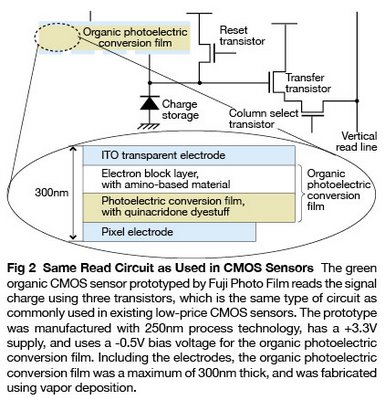

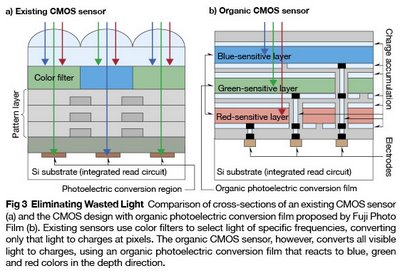

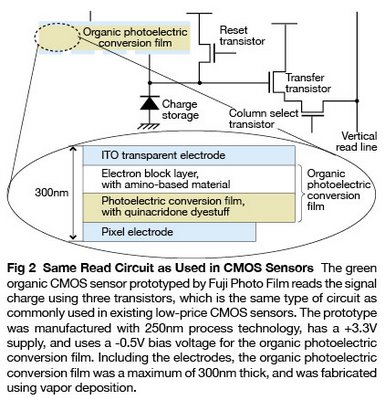

NE Asia Online published an article about a Fujifilm prototype organic image sensor.

The sensor is touted to be more sensitive because (a) it does not discard color signals as a Bayer sensor does, (b) it has a nearly 100% aperture ratio, and (c) it minimizes color crosstalk because the sensitive layers are very thin.
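Claim (a) can be quantified for the idealized case. The sketch assumes equal-energy light, perfect non-overlapping R/G/B filter bands, and a fully stacked sensor that absorbs every band at every pixel site. Real color filters overlap and real organic layers do not absorb all incident light, and Fujifilm's exact layer structure is not modeled here.

```python
BAYER = [["G", "R"],
         ["B", "G"]]  # one 2x2 Bayer tile

def sampling_density(tile):
    # Fraction of pixel sites that record each color.
    cells = [c for row in tile for c in row]
    return {color: cells.count(color) / len(cells) for color in "RGB"}

# Idealized light use: a filtered pixel keeps one band out of three,
# while an ideal stacked sensor keeps all three at every site.
BAYER_LIGHT_USE = 1 / 3
STACKED_LIGHT_USE = 1.0
STACKED_DENSITY = {"R": 1.0, "G": 1.0, "B": 1.0}

print(sampling_density(BAYER))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

In this ideal picture a Bayer sensor throws away about two thirds of the incoming light and samples red and blue at only a quarter of the pixel sites.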

The following two pictures explain the sensor principle:

Saturday, April 01, 2006

Advis - New CMOS Imager Company

Not really very recent news, but Dr. Mark F. Bocko from the University of Rochester has started a new image sensor company: ADVantage Imaging Systems Inc. (ADVIS).

The company pitches the wide dynamic range and low power of its sensors:

"ADVIS’s Direct Photodigital Conversion (DPC) technology turns photons into a single bit digital stream at each pixel site to create the highest dynamic range and lowest power image sensor in the world."

"ADVIS DPC technology has demonstrated a dynamic range of 100dB at a power consumption of 0.88 nanoWatt/per pixel (30 frames per second). This is one-thousandth the power required by typical CCD’s and less than one percent of the power used by typical CMOS Active Pixel Sensors (APS)."
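The claimed power is easier to compare when normalized to energy per pixel per frame. The sketch below does that for the ADVIS figure and, for scale, for the Micron 5-megapixel sensor listed elsewhere on this blog (assuming exactly 5 million pixels and using its "less than 260mW" as an upper bound). The two are not like-for-like: the Micron figure covers the whole chip including ADC, PLL and serializer, while according to Eric Fossum's comment below the ADVIS figure leaves out readout, pad drivers, decimation filter and frame buffer.

```python
def energy_per_pixel_frame_j(power_w, pixels, fps):
    # Energy spent per pixel per frame: total power / (pixels * frames per second).
    return power_w / (pixels * fps)

advis = energy_per_pixel_frame_j(0.88e-9, 1, 30)   # claimed in-pixel figure only
micron = energy_per_pixel_frame_j(0.26, 5e6, 12)   # whole-chip upper bound, ~5 MP assumed

print(f"ADVIS  ~{advis * 1e12:.1f} pJ per pixel per frame")
print(f"Micron <{micron * 1e9:.2f} nJ per pixel per frame")
```

For a hypothetical 1-megapixel array at 30 fps, the ADVIS figure corresponds to about 0.88 mW for the pixels alone, before any readout.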

The basic building block is a distributed sigma-delta converter. On the surface it looks very similar to Amain's approach, which failed to gain market acceptance.

Another product is an image sensor with an integrated image compression engine, which is also supposed to be very low power.

Below is Eric Fossum's opinion on ADVIS technology (link):

"I have some information about this sensor. I don't think it has any advantages over other technology.

1. Pixel-level sigma-delta. Every reset (delta) adds kTC noise.

2. In low light, not even clear how a single pulse will be generated.

3. No one has seen a low light level image from the prototype sensor that I know, incl. potential investors.

4. Unless the sampling rate is high, and threshold low, this sensor is going to see large lag under low light conditions.

5. You cannot average out shot noise. Yikes!

6. Decimation filter power and chip area requirements are significant.

7. You need a frame buffer somewhere to implement the decimation filter.

8. Most power in sensors is due to the readout. ADVIS conveniently ignores the readout power and pad driver power. They also don't include the decimation filter or frame buffer power.

9. There are already many viable ways of doing high dynamic range imaging. The problem is not the technology, it is in the market.

10. It seems to me that the advis technology was developed by someone who doesnt understand image sensors very well. Look at his track record in imaging. Zero thus far. That would be consistent with the claims being made.

Hate to be brutal, but that is the way I see it.

-Eric"
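Point 1 can be put into numbers with the standard reset-noise formula: the charge noise left on a capacitor C after a reset is sqrt(kTC). The sketch converts that to electrons and adds independent resets in quadrature; the capacitance values and reset counts are illustrative assumptions, not ADVIS design data.

```python
import math

K_B = 1.380649e-23     # Boltzmann constant, J/K
Q_E = 1.602176634e-19  # elementary charge, C

def ktc_noise_electrons(c_farads, temp_k=300.0):
    # RMS charge noise sqrt(kTC) after one reset, expressed in electrons.
    return math.sqrt(K_B * temp_k * c_farads) / Q_E

def accumulated_reset_noise(c_farads, n_resets, temp_k=300.0):
    # Independent resets add in quadrature: sqrt(N) times the single-reset noise.
    return math.sqrt(n_resets) * ktc_noise_electrons(c_farads, temp_k)

print(round(ktc_noise_electrons(1e-15), 1))  # 12.7 e- for 1 fF at 300 K
```

Under these assumptions a 10x smaller capacitor lowers the per-reset charge noise only by sqrt(10), about 3.2x, while 100 independent resets in one frame raise the total by 10x.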
