The MIPI Alliance, an international organization that develops interface specifications for mobile and mobile-influenced industries, today announced a major update to its high-performance, low-power and low electromagnetic interference (EMI) C-PHY interface specification for connecting cameras and displays. Version 3.0 introduces support for an 18-Wirestate mode encoding option, increasing the maximum performance of a C-PHY lane by approximately 30 to 35 percent. This enhancement delivers up to 75 Gbps over a short channel, meeting the rapidly growing bandwidth demands of ultra-high-resolution, high-fidelity image sensors.

The new, more efficient encoding option, 32b9s, transports 32 bits over nine symbols while maintaining MIPI C-PHY’s industry-leading low-EMI and low-power properties. For camera applications, the new mode enables lower symbol rates or fewer lanes for existing use cases, or higher throughput with current lane counts to support new use cases involving very high-end image sensors, such as:

- Next-generation prosumer video content creation on smartphones, with high dynamic range (HDR), smart region-of-interest detection and advanced motion vector generation

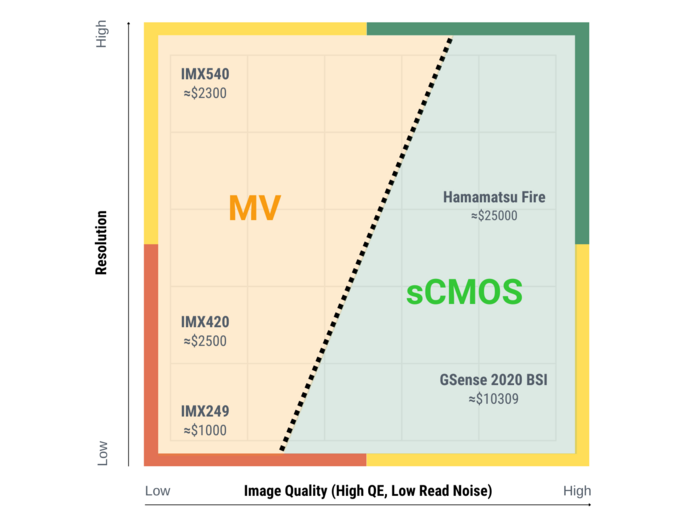

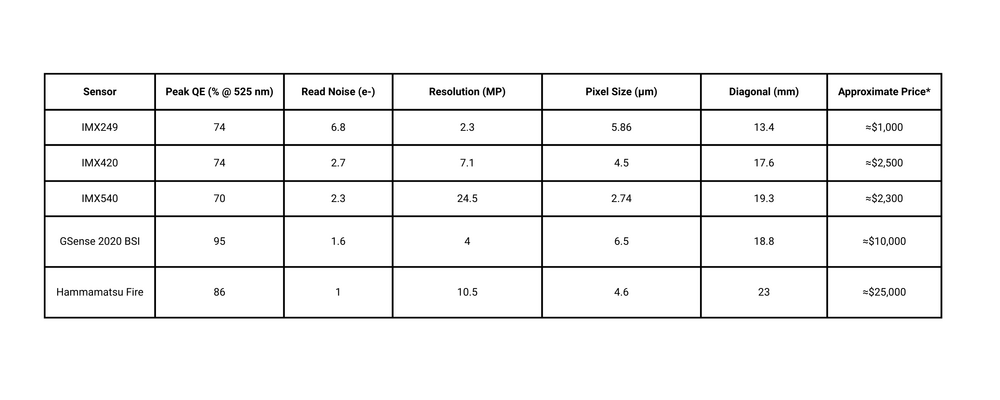

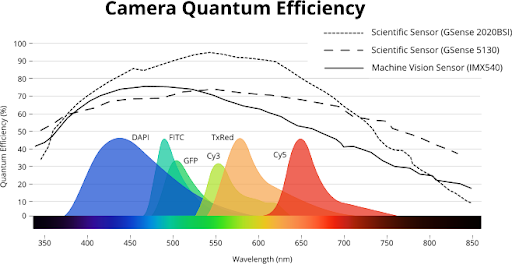

- Machine vision quality-control systems that can detect the smallest of defects in fast-moving production lines

- Advanced driver assistance systems (ADAS) in automotive that can analyze the trajectory and behavior of fast-moving objects in the most challenging lighting conditions
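The 32b9s arithmetic described above can be checked with a short Python sketch. It computes the average payload per symbol (32/9, about 3.56 bits), the smallest number of choices per symbol that any 32-bit-to-9-symbol block code would need, and the payload rate for a symbol rate you supply. Two inputs are assumptions rather than figures from the specification: that an 18-Wirestate mode offers 17 choices per symbol (on the premise that every symbol must move the lane to a different wire state), and the 9 Gsym/s rate, which is purely illustrative. The helper names are hypothetical.

```python
# 32b9s, as described in the announcement: 32 payload bits carried by 9 symbols.
BITS_PER_BLOCK = 32
SYMBOLS_PER_BLOCK = 9

# ASSUMPTION (not from the spec text): as in standard C-PHY signaling, each symbol
# moves the lane to a *different* wire state, so 18 wire states give 17 choices.
ASSUMED_CHOICES_PER_SYMBOL = 18 - 1


def bits_per_symbol(bits: int = BITS_PER_BLOCK, symbols: int = SYMBOLS_PER_BLOCK) -> float:
    """Average payload bits carried by each symbol of a bits-to-symbols block code."""
    return bits / symbols


def min_alphabet_size(bits: int = BITS_PER_BLOCK, symbols: int = SYMBOLS_PER_BLOCK) -> int:
    """Smallest number of choices per symbol q such that q**symbols >= 2**bits.

    A decodable block code needs at least 2**bits distinct symbol sequences
    (pigeonhole principle), so this is a lower bound on the symbol alphabet.
    """
    q = 2
    while q ** symbols < 2 ** bits:
        q += 1
    return q


def payload_gbps(symbol_rate_gsps: float, lanes: int = 1) -> float:
    """Payload throughput in Gbps for a hypothetical symbol rate (Gsym/s) and lane count."""
    # Multiply before dividing so that round numbers stay exact in floating point.
    return symbol_rate_gsps * lanes * BITS_PER_BLOCK / SYMBOLS_PER_BLOCK


if __name__ == "__main__":
    print(f"{bits_per_symbol():.3f} bits per symbol")
    print("minimum choices per symbol:", min_alphabet_size())
    print("17 choices suffice:",
          ASSUMED_CHOICES_PER_SYMBOL ** SYMBOLS_PER_BLOCK >= 2 ** BITS_PER_BLOCK)
    print("hypothetical 9 Gsym/s lane:", payload_gbps(9.0), "Gbps")
```

Bits per symbol alone does not fix a lane's throughput: the payload rate is the product of bits per symbol and the symbol rate the channel can sustain, which is why the sketch takes the symbol rate as an input rather than assuming one.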

MIPI C-PHY supports the MIPI Camera Serial Interface 2 (MIPI CSI-2) and MIPI Display Serial Interface 2 (MIPI DSI-2) ecosystems in low-power, high-speed applications for the typical interconnect lengths found in mobile, PC compute and IoT applications. The specification:

- Provides high throughput, a minimized number of interconnect signals and superior power efficiency to connect cameras and displays to an application processor. These benefits stem from C-PHY’s efficient three-phase coding, which reduces the number of system interconnects and minimizes electromagnetic emissions that could interfere with sensitive RF receiver circuitry often co-located with C-PHY interfaces.

- Offers flexibility to reallocate lanes within a link, because C-PHY embeds the clock in each lane’s data stream rather than using a dedicated clock lane

- Enables low-latency transitions between high-speed and low-power modes

- Includes an alternate low power (ALP) feature, which enables link operation using only C-PHY’s high-speed signaling levels. An optional fast lane turnaround capability uses ALP and supports asymmetrical data rates, allowing implementers to match transfer rates to system needs.

- Can coexist on the same device pins as MIPI D-PHY, so designers can develop dual-mode devices
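The three-phase coding and embedded clock described in the list above can be illustrated with a simplified Python model of one lane, or trio, of wires. It treats a wire state as an assignment of three distinct signal levels (high, mid, low) to wires A, B and C, which gives six states, and it requires every symbol to move to a different state. That rule leaves five possible transitions per symbol and guarantees a change at every symbol boundary, which is what allows the receiver to recover the clock from the data. The level names and helper functions are hypothetical illustrations, not definitions from the specification. The final check shows that seven symbols is the minimum for 16 bits with five choices each, consistent with the 16-bit-to-7-symbol mapping commonly cited for standard C-PHY.

```python
from itertools import permutations

# Simplified, illustrative model of one C-PHY trio (wires A, B, C).
# In each wire state the three wires sit at three distinct levels, so a
# wire state is one permutation of the levels: (level_A, level_B, level_C).
LEVELS = ("high", "mid", "low")
WIRE_STATES = list(permutations(LEVELS))  # 3! = 6 wire states


def next_states(state: tuple) -> list:
    """Wire states a symbol may move to: every state except the current one.

    Forcing a state change on every symbol guarantees a transition per symbol,
    the property that lets the receiver recover an embedded clock.
    """
    return [s for s in WIRE_STATES if s != state]


def min_symbols(bits: int, choices: int) -> int:
    """Fewest symbols n such that choices**n >= 2**bits."""
    n = 1
    while choices ** n < 2 ** bits:
        n += 1
    return n


if __name__ == "__main__":
    print(len(WIRE_STATES), "wire states")
    print(len(next_states(WIRE_STATES[0])), "possible transitions per symbol")
    print("symbols needed for 16 bits:", min_symbols(16, 5))
```

The same counting argument shows why a mode with more choices per symbol can carry more bits in each symbol.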

“C-PHY is MIPI's ternary-based PHY for smartphones, IoT, drones, wearables, PCs, and automotive cameras and displays,” said Hezi Saar, chair of MIPI Alliance. “It supports low-cost, low-resolution image sensors with fewer wires and high-performance image sensors in excess of 100 megapixels. The updated specification enables forward-looking applications like cinematographic-grade video on smartphones, machine vision quality-control systems and ADAS applications in automotive.”

Forthcoming MIPI D-PHY Updates

Significant development work is continuing on MIPI's other primary shorter-reach physical layer, MIPI D-PHY. D-PHY v3.5, released in 2023, includes an embedded clock option for display applications, while the forthcoming v3.6 specification will expand embedded clock support for camera applications, targeting PC/client computing platforms. The next full version, v4.0, will further expand D-PHY’s embedded clock support for use in mobile and beyond-mobile machine vision applications, and further increase D-PHY’s data rate beyond its current 9 Gbps per lane.

Last year, MIPI Alliance also conducted a comprehensive channel signal analysis to document the longer channel lengths supported by both C-PHY and D-PHY. The resulting member application note, "Application Note for MIPI C-PHY and MIPI D-PHY IT/Compute," demonstrated that both C-PHY and D-PHY can be used in larger end products, such as laptops and all-in-ones, with minimal or no changes to the specifications as originally deployed in mobile phones and tablets, and over even longer lengths when operated at reduced bandwidth.