A recent paper in Nature introduces a new technique called "HADAR": heat-assisted detection and ranging. https://www.nature.com/articles/s41586-023-06174-6

Abstract: Machine perception uses advanced sensors to collect information about the surrounding scene for situational awareness. State-of-the-art machine perception [8] using active sonar, radar and LiDAR to enhance camera vision faces difficulties when the number of intelligent agents scales up. Exploiting omnipresent heat signal could be a new frontier for scalable perception. However, objects and their environment constantly emit and scatter thermal radiation, leading to textureless images famously known as the ‘ghosting effect’. Thermal vision thus has no specificity limited by information loss, whereas thermal ranging, crucial for navigation, has been elusive even when combined with artificial intelligence (AI). Here we propose and experimentally demonstrate heat-assisted detection and ranging (HADAR) overcoming this open challenge of ghosting and benchmark it against AI-enhanced thermal sensing. HADAR not only sees texture and depth through the darkness as if it were day but also perceives decluttered physical attributes beyond RGB or thermal vision, paving the way to fully passive and physics-aware machine perception. We develop HADAR estimation theory and address its photonic shot-noise limits depicting information-theoretic bounds to HADAR-based AI performance. HADAR ranging at night beats thermal ranging and shows an accuracy comparable with RGB stereovision in daylight. Our automated HADAR thermography reaches the Cramér–Rao bound on temperature accuracy, beating existing thermography techniques. Our work leads to a disruptive technology that can accelerate the Fourth Industrial Revolution (Industry 4.0) with HADAR-based autonomous navigation and human–robot social interactions.

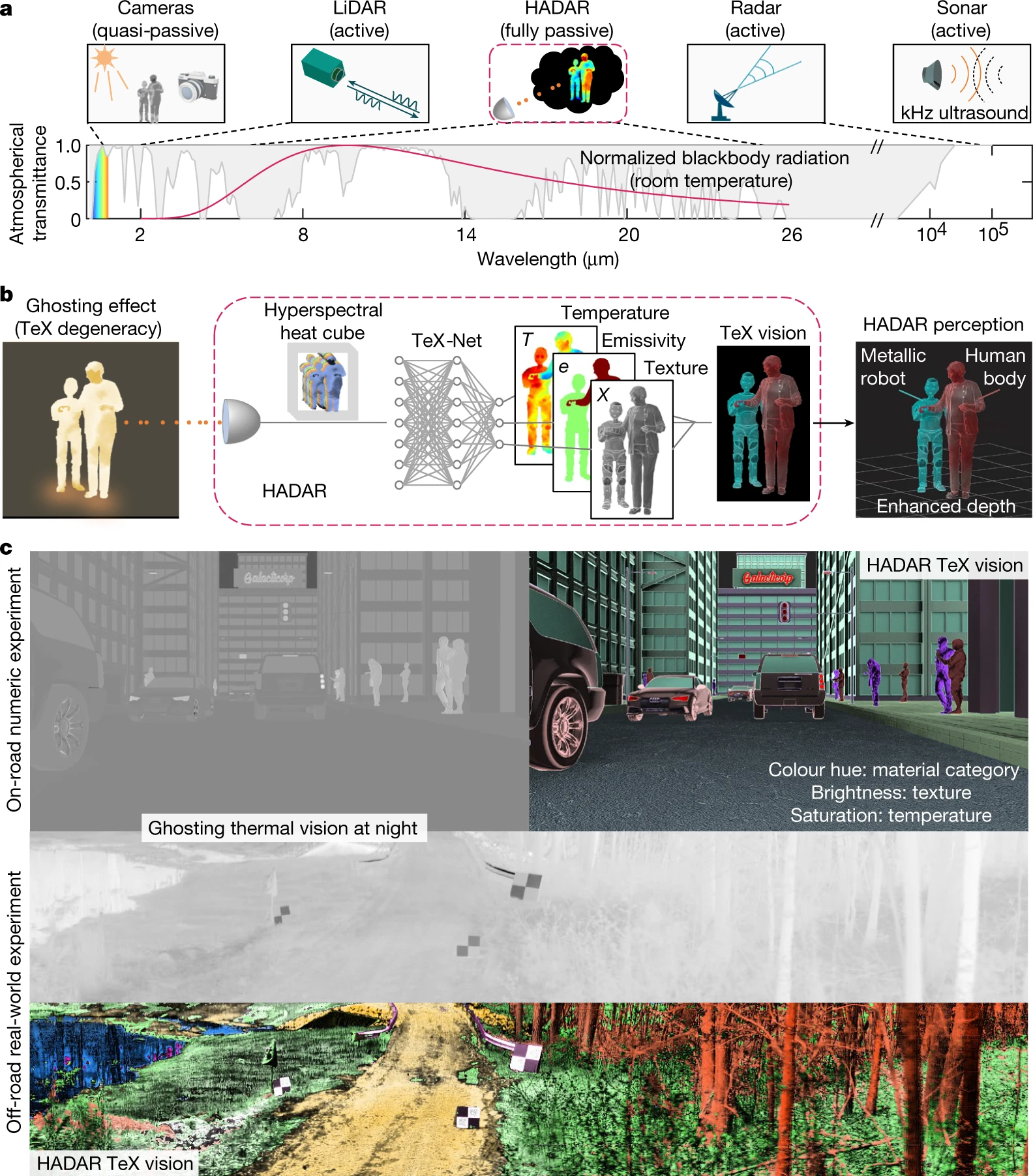

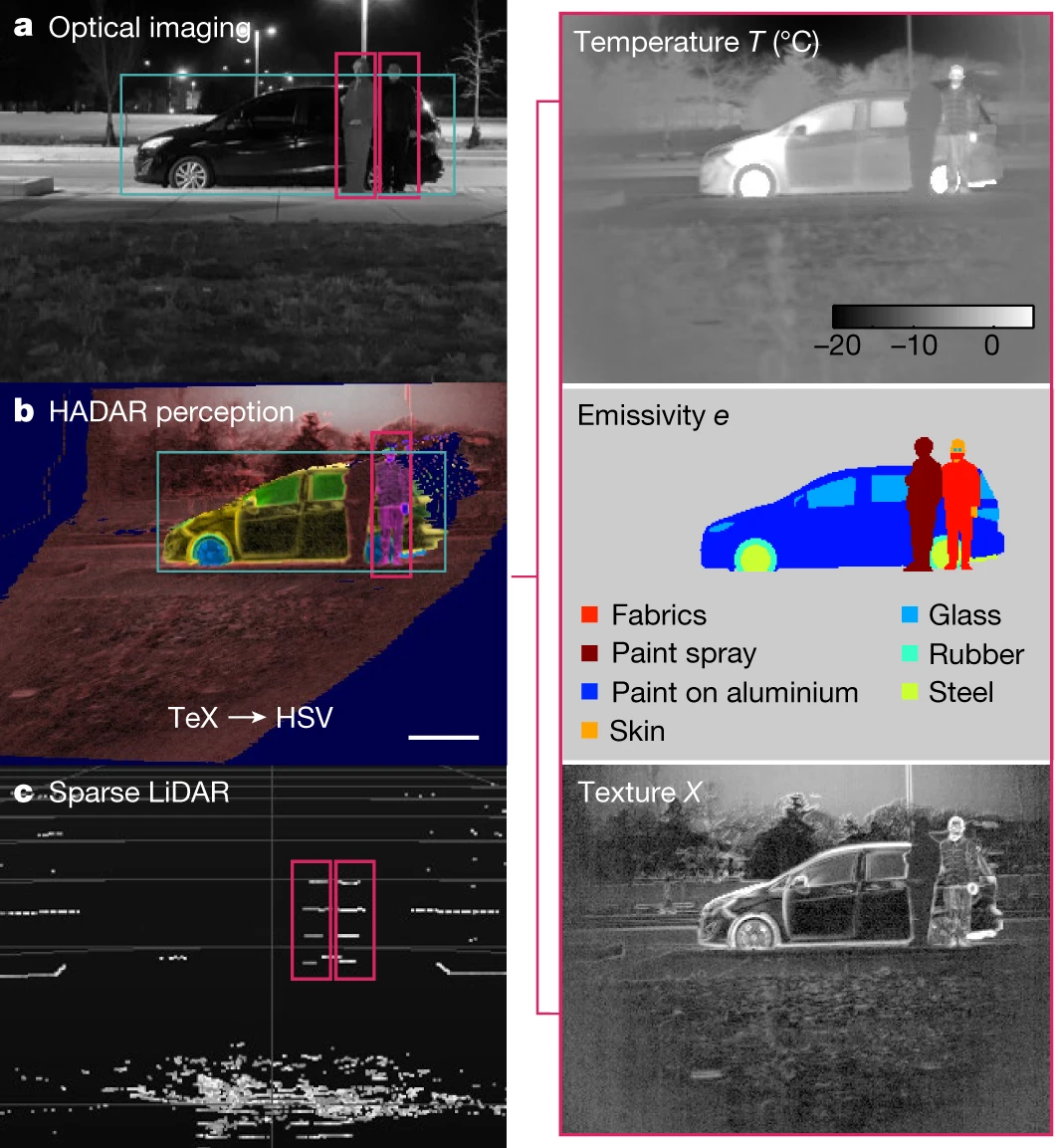

a, Fully passive HADAR makes use of heat signals, as opposed to active sonar, radar, LiDAR and quasi-passive cameras. Atmospherical transmittance window (white area) and temperature of the scene determine the working wavelength of HADAR. b, HADAR takes thermal photon streams as input, records hyperspectral-imaging heat cubes, addresses the ghosting effect through TeX decomposition and generates TeX vision for improved detection and ranging. c, TeX vision demonstrated on our HADAR database and outdoor experiments clearly shows that HADAR sees textures through the darkness with comprehensive understanding of the scene.

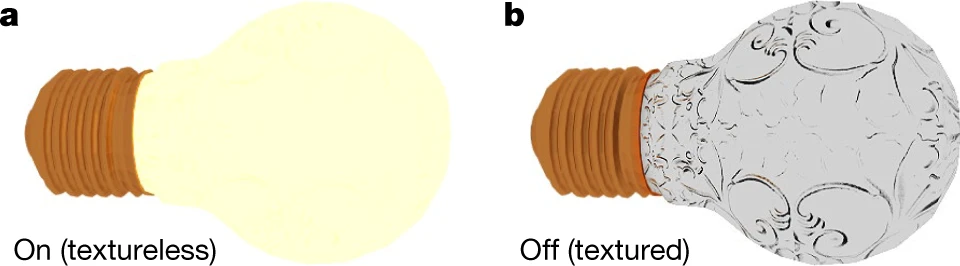

Geometric texture on a light bulb can only be seen when the bulb is off, whereas this texture is completely missing when it is glowing. The blackbody radiation can never be turned off, leading to loss of texture for thermal images. This ghosting effect presents the long-standing obstruction for heat-assisted machine perception.

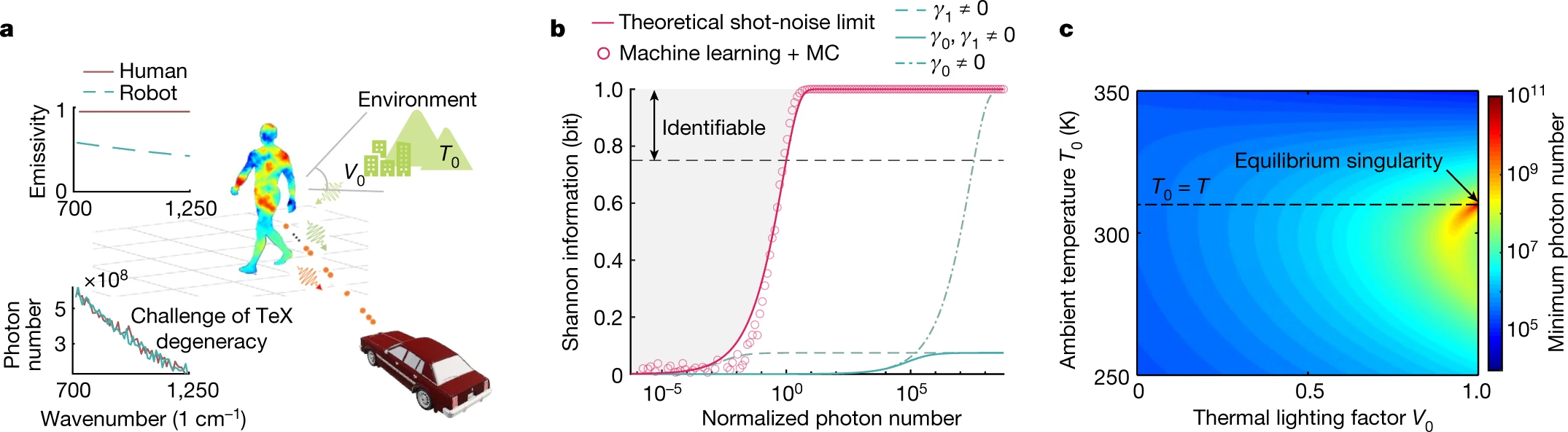

a, TeX degeneracy limits HADAR identifiability, as in the illustrative human–robot identification problem. Top inset, distinct emissivity of human (grey body) and robot (aluminium). Bottom inset, near-identical incident spectra for human (37 °C, red) and robot (72.5 °C, blue). b, HADAR identifiability (Shannon information) as a function of normalized photon number Nd₀². We compare the theoretical shot-noise limit of HADAR (solid red line) and machine-learning performance (red circles) on synthetic spectra generated by Monte Carlo (MC) simulations. We also consider realistic detectors with Johnson–Nyquist noise (γ₀ = 3.34e5), flicker noise (γ₁N = 3.34e5) or mixed noise (γ₁N = γ₀ = 3.34e5). Identifiability criterion (dashed grey line) is Nd₀² = 1. c, The minimum photon number 1/d₀² required to identify a target is usually large because of the TeX degeneracy, dependent on the scene as well as the thermal lighting factor, as shown for the scene in a. Particularly, it diverges at singularity V₀ = 1 and T₀ = T when the target is in thermal equilibrium with the environment.
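To make the degeneracy in panel a more concrete, here is a minimal single-wavelength sketch in Python. It assumes the signal reaching the camera is the object's own emission plus reflected environmental radiation, `e * B(T) + (1 - e) * B(T_env)`. The 20 °C environment, the 10 µm wavelength and the human emissivity of 0.98 are illustrative assumptions, not values from the paper, and the emissivity it solves for is not a measured value for aluminium.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance in W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * KB * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def observed(emissivity, temp_k, env_k, wavelength_m):
    """Emitted plus reflected radiance (assumed opaque surface, blackbody surroundings)."""
    return (emissivity * planck(wavelength_m, temp_k)
            + (1.0 - emissivity) * planck(wavelength_m, env_k))


def matching_emissivity(e_a, t_a, t_b, env_k, wavelength_m):
    """Emissivity that makes object B (at t_b) look identical to object A at one wavelength."""
    x = planck(wavelength_m, env_k)
    return e_a * (planck(wavelength_m, t_a) - x) / (planck(wavelength_m, t_b) - x)


# Illustrative (assumed) scene: 37 C human, 72.5 C robot, 20 C surroundings, 10 um.
LAM = 10e-6
T_HUMAN, T_ROBOT, T_ENV = 310.15, 345.65, 293.15
E_HUMAN = 0.98  # assumed, not from the paper

e_robot = matching_emissivity(E_HUMAN, T_HUMAN, T_ROBOT, T_ENV, LAM)
s_human = observed(E_HUMAN, T_HUMAN, T_ENV, LAM)
s_robot = observed(e_robot, T_ROBOT, T_ENV, LAM)
print(f"robot emissivity giving the same signal: {e_robot:.3f}")
print(f"human signal {s_human:.4e}, robot signal {s_robot:.4e}")
```

Under these assumptions a hotter, less emissive surface produces exactly the same single-band signal as the cooler grey body, so one band cannot tell them apart. When the object sits at the environment temperature, `observed` returns the same value for any emissivity, which is a simple version of the equilibrium singularity described in panel c.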

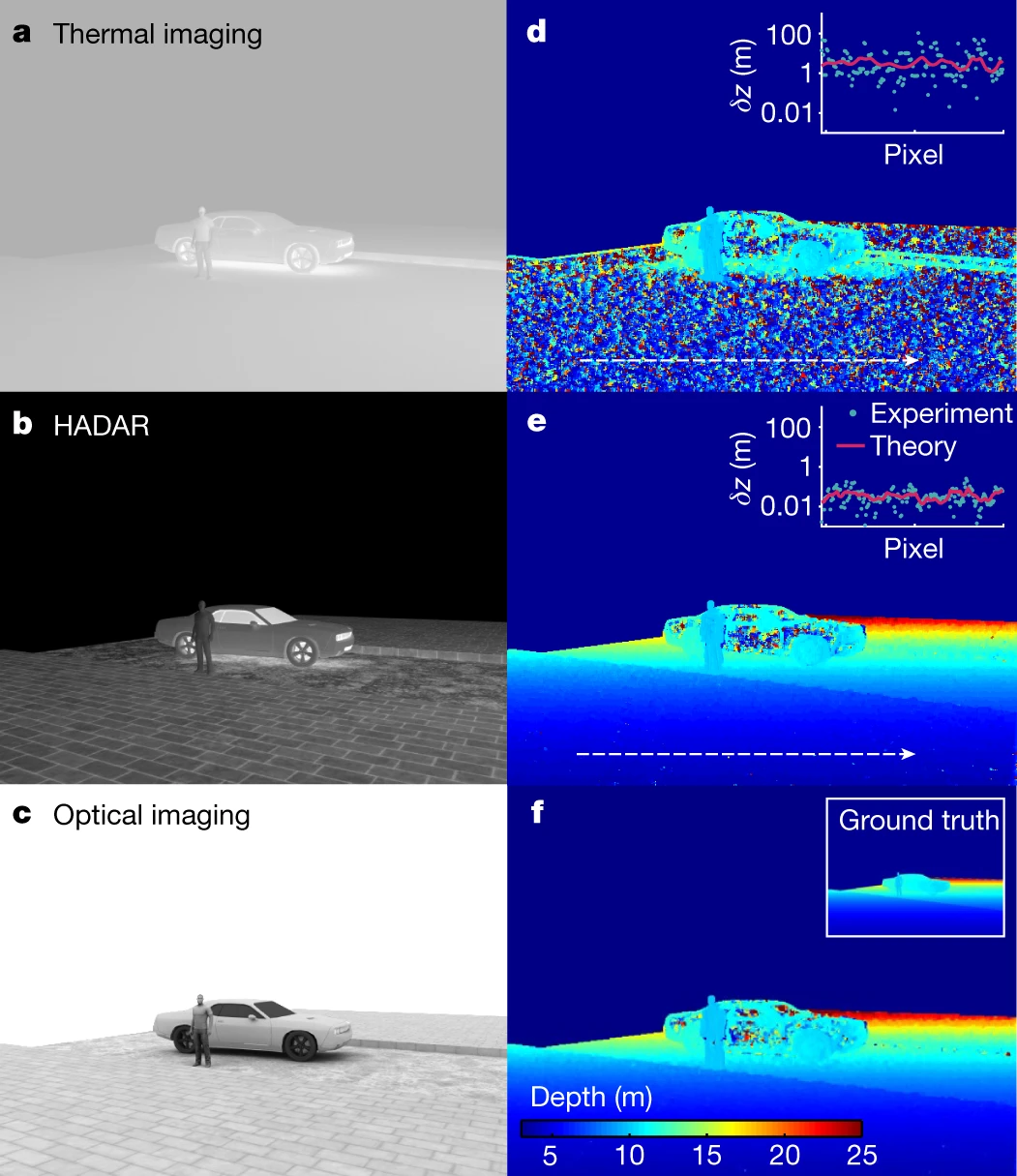

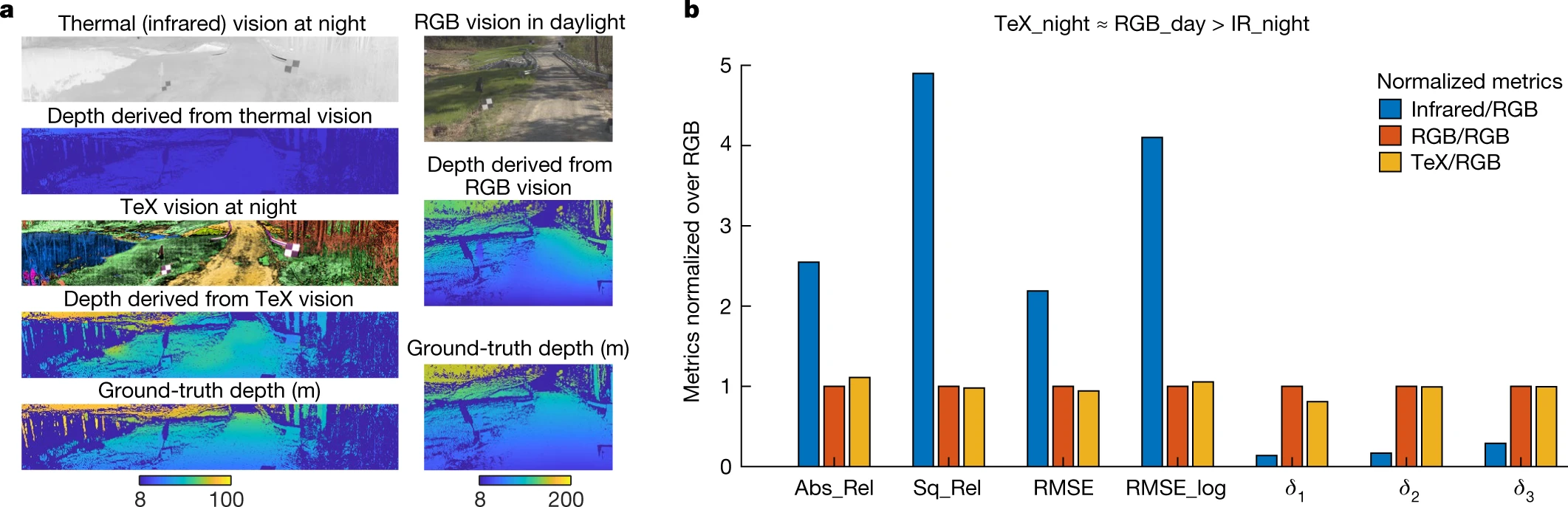

a,d, Ranging on the basis of raw thermal images shows poor accuracy owing to ghosting. b,e, Recovered textures and enhanced ranging accuracy (approximately 100×) in HADAR as compared with thermal ranging. c,f, We also show the optical imaging (c) and RGB stereovision (f) for comparison. Insets in d and e show the depth error δz in Monte Carlo experiments (cyan points) in comparison with our theoretical bound (red curve), along the dashed white lines.

For an outdoor scene of a human body, an Einstein cardboard cutout and a black car at night, vision-driven object detection yields two human bodies (error) and one car from optical imaging (a) and two human bodies and no car (error) from LiDAR point cloud (c). HADAR perception based on TeX physical attributes has comprehensive understanding of the scene and accurate semantics (b; one human body and one car) for unmanned decisions. Scale bar, 1 m.

a, It can be clearly seen that thermal imaging is impeded by the ghosting effect, whereas HADAR TeX vision overcomes the ghosting effect, providing a fundamental route to extracting thermal textures. This texture is crucial for AI algorithms to function optimally. To prove the HADAR ranging advantage, we used GCNDepth (pre-trained on the KITTI dataset) [36] for monocular stereovision, as the state-of-the-art AI algorithm. Ground-truth depth is obtained through a high-resolution LiDAR. Depth metrics are listed in Table 1. We normalized the depth metrics over that of RGB stereovision. b, The comparison of normalized metrics clearly demonstrates that ‘TeX_night ≈ RGB_day > IR_night’, that is, HADAR sees texture and depth through the darkness as if it were day.

This writeup and the Nature abstract are a bit confusing -- they never describe what HADAR actually is beyond a thermal imaging camera and some AI that provides additional texture detail and depth estimation. The graphic shows a 'hyperspectral heat cube', and their reference for the 'famous ghosting effect' is about polarimetric image measurements of human faces.

Hopefully the Nature publication includes these details, but my guess is they are using a cryogenically cooled LWIR camera+filterset that cycles through multiple spectral and potentially polarization filters. Multiple spectral bands make greybody emissivity+temperature estimates trivial (i.e. 2-color pyrometer), and the polarization provides additional surface texture based on their polarimetric camera reference.
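For readers unfamiliar with the two-color pyrometer idea mentioned above, here is a minimal Python sketch. For a greybody the emissivity cancels in the ratio of two band radiances, so the ratio fixes the temperature and the emissivity follows afterwards. The wavelengths (9 µm and 12 µm), the temperature and the emissivity are illustrative assumptions, and the sketch deliberately ignores reflected environmental radiation and atmospheric absorption, which is the part the paper is concerned with.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance in W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * KB * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def two_color_temperature(l1, l2, lam1, lam2, t_lo=200.0, t_hi=2000.0):
    """Solve B(lam1, T) / B(lam2, T) = l1 / l2 for T by bisection.

    Requires lam1 < lam2, for which the ratio increases monotonically with T.
    """
    target = l1 / l2
    for _ in range(100):
        mid = 0.5 * (t_lo + t_hi)
        if planck(lam1, mid) / planck(lam2, mid) < target:
            t_lo = mid
        else:
            t_hi = mid
    return 0.5 * (t_lo + t_hi)


# Synthetic greybody measurement (assumed values, for illustration only).
LAM1, LAM2 = 9e-6, 12e-6
T_TRUE, EPS_TRUE = 310.15, 0.6
meas1 = EPS_TRUE * planck(LAM1, T_TRUE)
meas2 = EPS_TRUE * planck(LAM2, T_TRUE)

t_est = two_color_temperature(meas1, meas2, LAM1, LAM2)
eps_est = meas1 / planck(LAM1, t_est)   # emissivity from either band once T is known
print(f"estimated T = {t_est:.2f} K, estimated emissivity = {eps_est:.3f}")
```

The estimate is only this clean because the synthetic data are noise-free and exactly grey; with a wavelength-dependent emissivity or a reflected background, two bands are no longer enough.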

The problem here is that this type of camera is pretty impractical for a moving terrestrial system (or target) since it would require multiple expensive (and low resolution) thermal cameras. Cheap microbolometer thermal cameras are also not very useful since their SNR suffers greatly with narrow spectral bands -- they typically operate by receiving the full 8-14 micron atmospheric transmission band.
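As a rough illustration of the narrow-band signal penalty described above, the following Python sketch integrates Planck's law for a 300 K blackbody over the full 8-14 micron window and over a single narrow band. The 10.0-10.5 micron filter is a hypothetical choice, and the sketch assumes an ideal filter and a detector whose noise does not depend on the filter, so it shows only how much signal is lost, not a real camera's SNR.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance in W / (m^2 * sr * m)."""
    x = H * C / (wavelength_m * KB * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def band_radiance(lo_m, hi_m, temp_k, steps=2000):
    """Midpoint-rule integral of Planck radiance over [lo_m, hi_m], in W / (m^2 * sr)."""
    d = (hi_m - lo_m) / steps
    return sum(planck(lo_m + (i + 0.5) * d, temp_k) for i in range(steps)) * d


SCENE_K = 300.0                                     # assumed scene temperature
full = band_radiance(8e-6, 14e-6, SCENE_K)          # full LWIR window
narrow = band_radiance(10.0e-6, 10.5e-6, SCENE_K)   # hypothetical narrow filter
fraction = narrow / full
print(f"narrow band carries {100 * fraction:.1f}% of the 8-14 micron radiance")
```

The fraction comes out close to the simple bandwidth ratio (0.5 / 6), because the 300 K Planck curve is fairly flat across this window. In practice what matters for imaging is the radiance contrast per kelvin rather than the absolute radiance, but the same kind of scaling applies.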

Perhaps I'm missing something here?

You're not, I don't get it either
