From GlobeNewswire: https://www.globenewswire.com/news-release/2026/03/05/3249875/0/en/Teledyne-e2v-Introduces-Perciva-5D-Camera-Occlusion-free-3D-Vision-for-Industrial-Retail-and-Robotic-Imaging.html

Teledyne e2v Introduces Perciva™ 5D Camera: Occlusion-free 3D Vision for Industrial, Retail, and Robotic Imaging

GRENOBLE, France, March 05, 2026 (GLOBE NEWSWIRE) -- Teledyne e2v, a Teledyne Technologies [NYSE: TDY] company and global innovator of imaging solutions, announces the launch of the Perciva™ 5D camera, a breakthrough imaging innovation designed to make high-quality short-range 3D vision cost-effective, reliable, and easy to integrate.

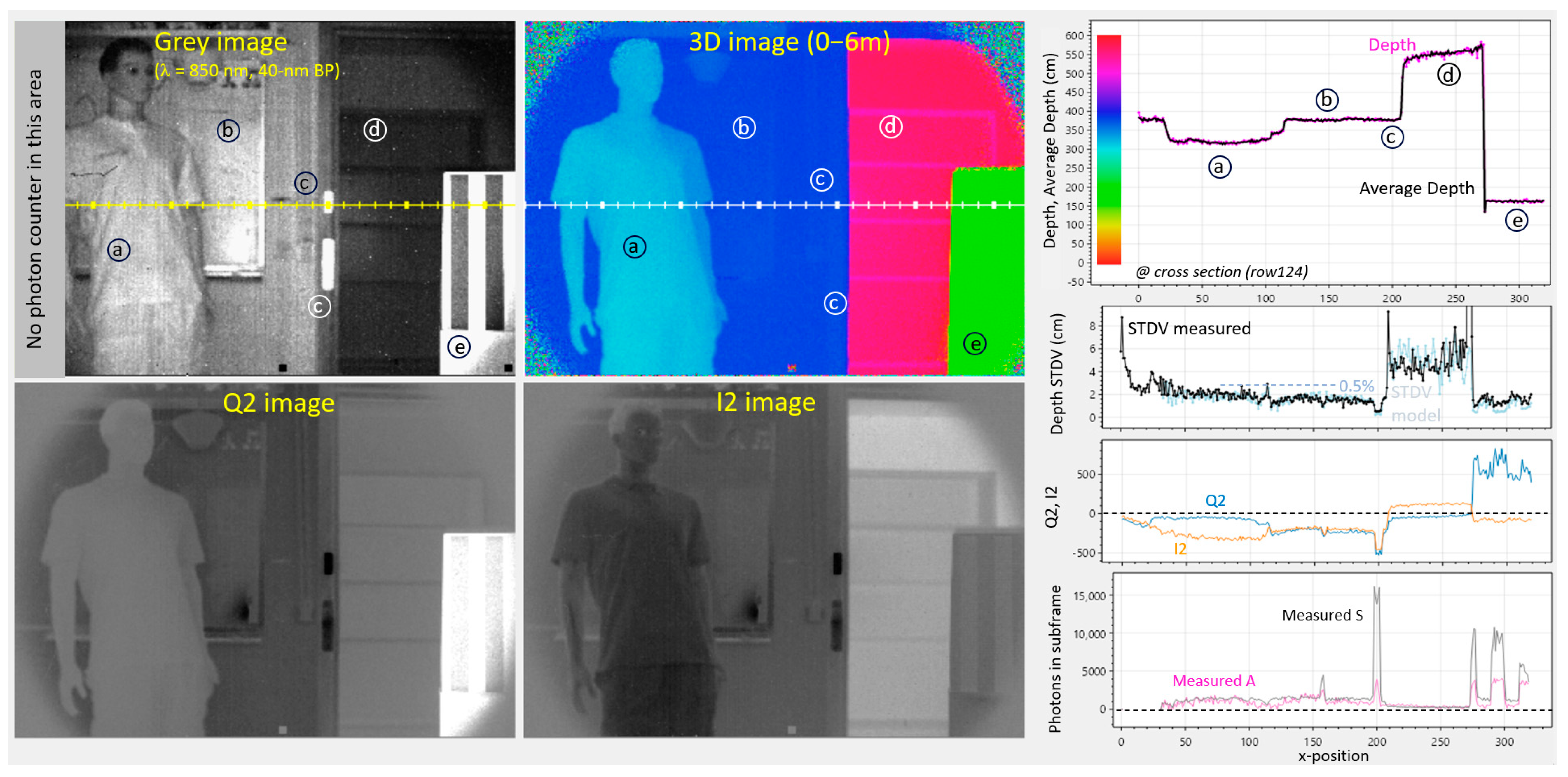

Most industrial cameras capture only 2D images, yet many applications increasingly require depth perception at close and very close distances. Perciva 5D delivers this capability through unique Angular Sensitive Pixel technology and advanced on-board processing, enabling real-time 2D and 3D image fusion across the calibrated working-distance range. Perciva 5D also features a powerful Neural Processing Unit (NPU), enabling Artificial Intelligence models to run on-device and be customized to each customer’s specific requirements.

Perciva 5D generates 2D and 3D data from a single CMOS sensor, free from optical occlusion, producing time-aligned 2D frames alongside pixel-aligned 3D depth maps. With comprehensive 3D processing built directly into the camera, users benefit from immediate depth maps or point-cloud outputs. Perciva 5D operates using ambient light, indoors or outdoors, eliminating the need for an external NIR source while maintaining reliable performance and minimizing overall system costs. Designed for challenging environments, it offers plug-and-play integration through its GenICam-compliant, GigE Vision interface and robust IP6x-rated housing with industrial M12 connectors.
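For readers less familiar with how a depth map relates to a point cloud, the following is a minimal, generic sketch of pinhole back-projection. It is not Teledyne's on-camera processing, which the release does not describe. The function name `depth_map_to_point_cloud` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are illustrative assumptions, and the sketch assumes depth values are expressed in the same unit as the desired output.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_map_to_point_cloud(
    depth: Sequence[Sequence[Optional[float]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map into 3D points with a pinhole model.

    Hypothetical helper: fx/fy are focal lengths in pixels and cx/cy is
    the principal point. Pixels with missing or non-positive depth are
    skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z is None or z <= 0:
                continue                     # no valid depth at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth map with one invalid pixel (0.0).
cloud = depth_map_to_point_cloud(
    [[1.0, 0.0], [2.0, 2.0]], fx=2.0, fy=2.0, cx=0.5, cy=0.5
)
```

Because the depth map is pixel-aligned with the 2D frame, each resulting point can be paired with the colour or intensity at the same `(u, v)` position, which is the practical benefit of getting both outputs from a single sensor.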

Factory calibrated and weighing just 230 grams, Perciva 5D operates at less than 5 W and is ideal for robotics (arms, cobots and humanoids), retail self-checkout solutions, and 3D industrial process monitoring. It supports user-adjustable frame rates or triggered acquisition and multiple power options. Using GenDC / GenTL, the camera integrates seamlessly with Teledyne’s Spinnaker® 4 API and SpinView® for 2D / 3D visualisation, as well as with leading machine-vision software platforms.

Perciva 5D will be showcased at Embedded World in Nuremberg, Germany, from 10 to 12 March 2026. Visit Teledyne at stand 2-541 in Hall 2 or contact us online for more information.

Documentation, samples, and software for evaluation or development are available upon request.