Monday, January 31, 2022

Hynix Begins Mass Production of 0.7µm Pixels

Chinese CIS Companies Report All-Time High Profits

SecuritiesTimes: Omnivision's net profit for 2021 is expected to be in the range of 4.468 billion to 4.868 billion yuan, a year-on-year increase of 65.13% to 79.91%. Net profit after deducting non-recurring gains and losses is expected to be 3.918 billion to 4.268 billion yuan, a year-on-year increase of 74.51% to 90.10%.

Galaxycore also expects 2021 operating income of 6.652 billion to 7.492 billion yuan and net profit of 1.135 billion to 1.35 billion yuan, a year-on-year increase of 46.83% to 74.57%. Profits are expected to range from 1.086 billion to 1.291 billion yuan, a year-on-year increase of 41.75% to 68.52%. Both growth rates are expected to exceed the revenue growth rate over the same period.

Indeed, Strategy Analytics reports show that Omnivision and other Chinese companies are increasing their smartphone market share at the expense of Sony and Samsung.

Sunday, January 30, 2022

Panasonic Develops Low-Cost LWIR Lens

Panasonic has developed a mass-production technology for low-cost far-IR aspherical lenses. These lenses are made of chalcogenide glass, which has excellent transmission characteristics in the far-IR. In addition to cutting cost to approximately half that of the company’s conventional method through a newly developed glass molding method and mold processing technology, Panasonic is now able to offer a variety of lenses, such as diffractive lenses and the world's first* highly hermetic frame-integrated lens made without adhesive (leak detection accuracy of less than 1×10⁻⁹ Pa·m³/sec in a helium leak test).

Low-cost silicon, which has been commonly used as the lens material for far-IR sensors, is not suitable for high pixel counts due to its low transmittance, so germanium spherical lenses with high transmittance are widely used as the number of pixels increases. However, as the pixel count increases further, the aberration caused by a spherical lens becomes more pronounced. Reducing this effect requires a combination of many spherical lenses and an aspherical lens, which increases cost and size.

To resolve this problem, Panasonic has developed a new technology for the low-cost production of high-performance aspherical lenses suitable for far-infrared optical systems, based on the glass molding technology the company cultivated through the production of visible light aspherical lenses for cameras.

Hermetic sealing is important for thermal imaging camera modules. A low-cost lens solution makes thermal cameras more accessible for general consumer applications.

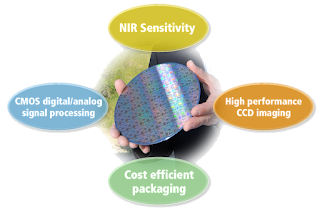

SmartSens News

Saturday, January 29, 2022

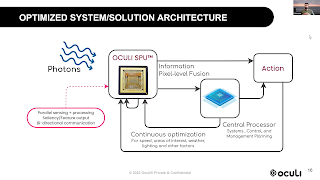

Recent Videos: CEA-Leti, Himax, Gigajot, Trieye, Pixart, Oculi

Friday, January 28, 2022

ESPROS Presents OHC15L Process

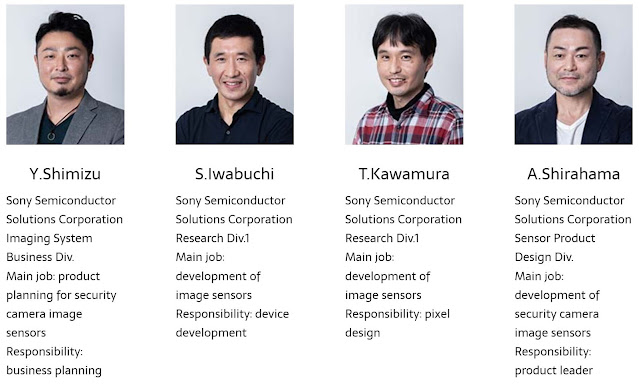

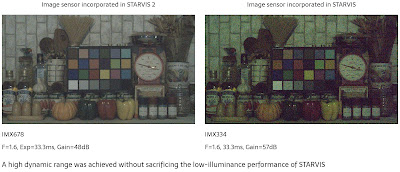

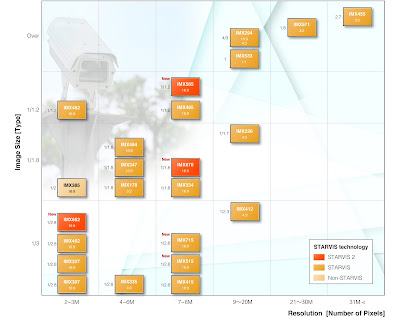

Interview with Sony STARVIS 2 Designers

Sony Stacked Sensor Inventions

Thursday, January 27, 2022

EET-China: For the First Time, Sony Outsources to TSMC Pixel Layer Manufacturing for iPhone 14 Pro Sensor

iToF: Comparison of Different Multipath Resolve Methods

IEEE Sensors publishes a video presentation "Multi-Layer ToF: Comparison of Different Multipath Resolve Methods for Indirect 3D Time-of-Flight" by Jonas Gutknecht and Teddy Loeliger from ZHAW School of Engineering, Switzerland.

Abstract: Multipath Interferences (MPI) represent a significant source of error for many 3D indirect time-of-flight (iToF) applications. Several approaches for separating the individual signal paths in case of MPI are described in literature. However, a direct comparison of these approaches is not possible due to the different parameters used in these measurements. In this article, three approaches for MPI separation are compared using the same measurement and simulation data. Besides the known procedures based on the Prony method and the Orthogonal Matching Pursuit (OMP) algorithm, the Particle Swarm Optimization (PSO) algorithm is applied to this problem. For real measurement data, the OMP algorithm has achieved the most reliable results and reduced the mean absolute distance error up to 96% for the tested measurement setups. However, the OMP algorithm limits the minimal distance between two objects with the setup used to approximately 2.7 m. This limitation cannot be significantly reduced even with a considerably higher modulation bandwidth.
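For readers unfamiliar with how OMP can be applied to multipath separation, here is a minimal, generic sketch. It is not the authors' implementation. It assumes an idealized multi-frequency iToF model in which each path at distance d contributes a phasor exp(-j·4π·f·d/c) at modulation frequency f. The frequency set (10 to 200 MHz), the distance grid, and the names `steering_matrix` and `omp_multipath` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s


def steering_matrix(freqs_hz, distances_m):
    """Ideal iToF phasors exp(-j*4*pi*f*d/c): one row per frequency, one column per distance."""
    f = np.asarray(freqs_hz, dtype=float)[:, None]
    d = np.asarray(distances_m, dtype=float)[None, :]
    return np.exp(-1j * 4.0 * np.pi * f * d / C)


def omp_multipath(measurements, freqs_hz, grid_m, n_paths=2):
    """Greedy OMP: select n_paths grid distances that best explain the measurements."""
    y = np.asarray(measurements, dtype=complex)
    grid = np.asarray(grid_m, dtype=float)
    atoms = steering_matrix(freqs_hz, grid)  # dictionary of candidate paths
    residual = y.copy()
    support = []
    amps = np.zeros(0, dtype=complex)
    for _ in range(n_paths):
        # Pick the candidate distance most correlated with the current residual.
        corr = np.abs(atoms.conj().T @ residual)
        if support:
            corr[support] = 0.0  # never select the same atom twice
        support.append(int(np.argmax(corr)))
        # Re-fit all selected amplitudes jointly (the "orthogonal" step).
        amps, *_ = np.linalg.lstsq(atoms[:, support], y, rcond=None)
        residual = y - atoms[:, support] @ amps
    order = np.argsort(grid[support])
    return grid[support][order], amps[order]


# Hypothetical noise-free example: a direct path at 2 m plus a weaker one at 6 m.
freqs = np.arange(1, 21) * 10e6  # assumed modulation frequencies, 10..200 MHz
y = steering_matrix(freqs, [2.0, 6.0]) @ np.array([1.0, 0.5])
grid = np.linspace(0.0, 7.0, 141)  # candidate distances, 5 cm steps
distances, amplitudes = omp_multipath(y, freqs, grid)
print(distances, np.abs(amplitudes))
```

Because neighbouring grid distances produce nearly identical phasors, the greedy selection can land slightly off the true distance, and two paths that are too close together cannot be told apart. This is consistent in spirit with the minimum-separation limitation the abstract reports, although the specific 2.7 m figure depends on the authors' setup.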

Wednesday, January 26, 2022

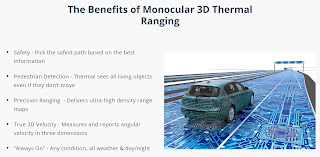

3D Thermal Imaging Startup Owl Autonomous Imaging Raises $15M in Series-A Round

Tuesday, January 25, 2022

Facebook Proposes Image Sensing for More Accurate Voice Recognition

Meta (Facebook) publishes a research post "AI that understands speech by looking as well as hearing":

"People use AI for a wide range of speech recognition and understanding tasks, from enabling smart speakers to developing tools for people who are hard of hearing or who have speech impairments. But oftentimes these speech understanding systems don’t work well in the everyday situations when we need them most: Where multiple people are speaking simultaneously or when there’s lots of background noise. Even sophisticated noise-suppression techniques are often no match for, say, the sound of the ocean during a family beach trip or the background chatter of a bustling street market.

To help us build these more versatile and robust speech recognition tools, we are announcing Audio-Visual Hidden Unit BERT (AV-HuBERT), a state-of-the-art self-supervised framework for understanding speech that learns by both seeing and hearing people speak. It is the first system to jointly model speech and lip movements from unlabeled data — raw video that has not already been transcribed. Using the same amount of transcriptions, AV-HuBERT is 75 percent more accurate than the best audio-visual speech recognition systems (which use both sound and images of the speaker to understand what the person is saying)."

Sony Holds “Sense the Wonder Day”

Sony Semiconductor Solutions Corporation (SSS) held "Sense the Wonder Day," an event to share with a wide range of stakeholders, including employees, the concept behind the company's new corporate slogan, "Sense the Wonder."

At the event, SSS President and CEO Terushi Shimizu introduced SSS as "a company driven by technology and the curiosity of each individual," and explained that SSS's technology "will create the social infrastructure of the future, and will no doubt lead to a 'sensing society' in which image sensors play an active role in all aspects of life." In addition, he said, "The imaging and sensing technologies we create will allow us to uncover new knowledge that makes us question the common sense of the world and discover new richness hidden in our daily lives."