Globes: Israeli 3D sensing company Mantis Vision completes the acquisition of Jackson Hole, Utah-based Alces Technology. The newspaper's sources suggest the acquisition was for about $10m. Earlier this month Mantis Vision raised $55m bringing its total investment to $84m. Alces is a depth sensing startup developing a high-resolution structured light technology.

Mantis Vision CEO Gur Bitan says, "Mantis Vision is leading innovation in 3D acquisition and sharing. Alces is a great match and we look forward to bringing their innovations to market. Alces will be rebranded Mantis Vision, Inc. and operate as an R&D center and serve as a base for commercial expansion in the US."

Alces CEO Rob Christensen says, “Our combined knowledge in hardware and optics, along with Mantis Vision’s expertise in algorithms and applications, will enable an exciting new class of products employing high-performance depth sensing.”

Here is how Alces used to compare its technology with Microsoft Kinect:

Tuesday, July 31, 2018

Samsung Expects 10% of Smartphones to Have Triple Camera Next Year

SeekingAlpha: Samsung reports an increase in image sensor sales in Q2 2018 and a strong forecast both for its own image sensors and for the image sensors manufactured at Samsung Foundry. Regarding the triple camera trend, Samsung says:

"First of all, regarding the triple camera, the triple camera offers various advantages, such as optical zoom or ultra-wide angle, also extreme low-light imaging. And that's why we're expecting more and more handsets to adopt triple cameras not only in 2018 but next year as well.

But by next year, about 10% of handsets are expected to have triple cameras. And triple camera adoption will continue to grow even after that point. Given this market outlook, actually, we've already completed quite a wide range of sensor line-up that can support the key features, such as optical zoom, ultra-wide viewing angle, bokeh and video support so that we're able to supply image sensors upon demand by customers.

At the same time, we will continue to develop higher performance image sensors that would be able to implement even more differentiating and sophisticated features based on triple camera.

To answer your second part of your question about capacity plan from the Foundry business side, given the expected increase of sensor demand going forward, we are planning additional investments to convert Line 11 from Hwaseong DRAM to image sensors with the target of going into mass production during first half of 2019. The actual size of that capacity will be flexibly managed depending on the customers' demand."

Monday, July 30, 2018

4th International Workshop on Image Sensors and Imaging Systems (IWISS2018)

4th International Workshop on Image Sensors and Imaging Systems (IWISS2018) is to be held on November 28-29 at Tokyo Institute of Technology, Japan. The invited and plenary part of the Workshop program has many interesting presentations:

- [Plenary] Time-of-flight single-photon avalanche diode imagers by Franco Zappa (Politecnico di Milano (POLIMI), Italy)

- [Invited] Light transport measurement using ToF camera by Yasuhiro Mukaigawa (Nara Institute of Science and Technology, Japan)

- [Invited] A high-speed, high-sensitivity, large aperture avalanche image intensifier panel by Yasunobu Arikawa, Ryosuke Mizutani, Yuki Abe, Shohei Sakata, Jo. Nishibata, Akifumi Yogo, Mitsuo Nakai, Hiroyuki Shiraga, Hiroaki Nishimura, Shinsuke Fujioka, Ryosuke Kodama (Osaka Univ., Japan)

- [Invited] A back-illuminated global-shutter CMOS image sensor with pixel-parallel 14b subthreshold ADC by Shin Sakai, Masaki Sakakibara, Tsukasa Miura, Hirotsugu Takahashi, Tadayuki Taura, and Yusuke Oike (Sony Semiconductor Solutions, Japan)

- [Invited] RTS noise characterization and suppression for advanced CMOS image sensors (tentative) by Rihito Kuroda, Akinobu Teramoto, and Shigetoshi Sugawa (Tohoku Univ., Japan)

- [Invited] Snapshot multispectral imaging using a filter array (tentative) by Kazuma Shinoda (Utsunomiya Univ., Japan)

- [Invited] Multiband imaging and optical spectroscopic sensing for digital agriculture (tentative) by Takaharu Kameoka, Atsushi Hashimoto (Mie Univ., Japan), Kazuki Kobayashi (Shinshu Univ., Japan), Keiichiro Kagawa (Shizuoka Univ., Japan), Masayuki Hirafuji (UTokyo, Japan), and Jun Tanida (Osaka Univ., Japan)

- [Invited] Humanistic intelligence system by Hoi-Jun Yoo (KAIST, Korea)

- [Invited] Lensless fluorescence microscope by Kiyotaka Sasagawa, Ayaka Kimura, Yasumi Ohta, Makito Haruta, Toshihiko Noda, Takashi Tokuda, and Jun Ohta (Nara Institute of Science and Technology, Japan)

- [Invited] Medical imaging with multi-tap CMOS image sensors by Keiichiro Kagawa, Keita Yasutomi, and Shoji Kawahito (Shizuoka Univ., Japan)

- [Invited] Image processing for personalized reality by Kiyoshi Kiyokawa (Nara Institute of Science and Technology, Japan)

- [Invited] Pixel aperture technique for 3-dimensional imaging (tentative) by Jang-Kyoo Shin, Byoung-Soo Choi, Jimin Lee (Kyungpook National Univ., Korea), Seunghyuk Chang, Jong-Ho Park, and Sang-Jin Lee (KAIST, Korea)

- [Invited] Computational photography using programmable sensor by Hajime Nagahara (Osaka Univ., Japan)

- [Invited] Image sensing for human-computer interaction by Takashi Komuro (Saitama Univ., Japan)

Now that the invited and plenary presentations have been announced, IWISS2018 calls for posters:

"We are accepting approximately 20 poster papers. Submission of papers for the poster presentation starts in July, and the deadline is on October 5, 2018. Awards will be given to the selected excellent papers presented by ITE members. We encourage everyone to submit latest original work. Every participant needs registration by November 9, 2018. On-site registration is NOT accepted. Only poster session is an open session organized by ITE."

Thanks to KK for the link to the announcement!

ON Semi Renames Image Sensor Group, Reports Q2 Results

ON Semi renames its Image Sensor Group to the "Intelligent Sensing Group," suggesting that other businesses might be added to it in search of revenue growth:

The company reports:

"During the second quarter, we saw strong demand for our image sensors for ADAS applications. Our traction in ADAS image sensors continues to accelerate. With a complete line of image sensors, including 1, 2, and 8 Megapixels, we are the only provider of complete range of pixel densities on a single platform for next generation ADAS and autonomous driving applications. We believe that a complete line of image sensors on a single platform provides us with significant competitive advantage, and we continue working to extend our technology lead over our competitors.

As we have indicated earlier, according to independent research firms, ON Semiconductor is the leader in image sensors for industrial applications. We continue to leverage our expertise in automotive market to address most demanding applications in industrial and machine vision markets. Both of these markets are driven by artificial intelligence and face similar challenges, such as low light conditions, high dynamic range and harsh operating environment."

Cepton to Integrate its LiDAR into Koito Headlights

BusinessWire: Cepton, a developer of 3D LiDAR based on a stereo scanner, announces that it will provide Koito with its miniaturized LiDAR solution for autonomous driving. The compact design of Cepton’s LiDAR sensors enables direct integration into a vehicle’s lighting system. Its Micro-Motion Technology (MMT) platform is said to be free of mechanical rotation and frictional wear, producing high-resolution imaging of a vehicle’s surroundings and detecting objects at distances of up to 300 meters.
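For a sense of the timing involved, a pulsed time-of-flight LiDAR derives range from the round-trip time t = 2d/c, so the 300 m detection distance corresponds to echoes arriving about 2 µs after the pulse. The sketch below is generic ToF arithmetic, not a model of Cepton's MMT hardware; the pulse-rate limit assumes, for illustration, that only one pulse is in flight at a time.

```python
# Generic pulsed time-of-flight arithmetic (not a model of Cepton's MMT design).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for a pulse to reach a target at distance_m and come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


def range_from_time_m(round_trip_s: float) -> float:
    """Invert the round-trip relation: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def max_unambiguous_pulse_rate_hz(max_range_m: float) -> float:
    """Highest pulse rate at which each echo from max_range_m returns before
    the next pulse is fired (assumes one pulse in flight at a time)."""
    return 1.0 / round_trip_time_s(max_range_m)


t = round_trip_time_s(300.0)
print(f"round trip at 300 m: {t * 1e6:.3f} us")
print(f"max single-pulse rate: {max_unambiguous_pulse_rate_hz(300.0) / 1e3:.1f} kHz")
```

Real scanners may interleave pulses or code them to go faster; this only shows the basic time-to-range relation.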

“We are excited to bring advanced LiDAR technology to vehicles to improve safety and reliability,” said Jun Pei, CEO and co-founder of Cepton. “With the verification of our LiDAR technology, we hope to advance the goals of Koito, a global leader within the automotive lighting industry producing over 20 percent of headlights globally and 60 percent of Japanese OEM vehicles.”

A year ago, before Cepton, Koito announced a cooperation with Quanergy with similar claims. Cepton’s technology is based on mechanical scanning, a step away from Quanergy’s optical phased array scanning.

Cepton’s ToF scanning solution is described in a number of patent applications, in which 110a,b are the laser sources and 160a,b are the ToF photodetectors:

Sunday, July 29, 2018

SensibleVision Disagrees with Microsoft Proposal of Facial Recognition Regulation

BusinessWire: SensibleVision, a developer of 3D face authentication solutions, criticized Microsoft President Brad Smith's call for government regulation of facial recognition technology:

“Why would Smith single out this one technology for external oversight and not all biometrics methods?” asks George Brostoff, CEO and Co-Founder of SensibleVision. “In fact, unlike fingerprints or iris scans, a person's face is always in view and public. I would suggest it’s the use cases, ownership and storage of biometric data (in industry parlance “templates”) that are critical and should be considered for regulation. Partnerships between private companies and the public sector have always been key to the successful adoption of innovative technologies. We look forward to contributing to this broader discussion.”

Saturday, July 28, 2018

Column-Parallel ADC Architectures Comparison

Japanese IEICE Transactions on Electronics publishes Shoji Kawahito's paper "Column-Parallel ADCs for CMOS Image Sensors and Their FoM-Based Evaluations."

"The defined FoM are applied to surveyed data on CISs reported and the following conclusions are obtained:

- The performance of CISs should be evaluated with different metrics to high pixel-rate regions (≳ 1000 MHz) from those to low or middle pixel-rate regions.

- The conventional FoM (commonly-used FoM) calculated by (noise) × (power) / (pixel-rate) is useful for observing entirely the trend of performance frontline of CISs.

- The FoM calculated by (noise)² × (power) / (pixel-rate) which considers a model on thermal noise and digital system noise well explain the frontline technologies separately in low/middle and high pixel-rate regions.

- The FoM calculated by (noise) × (power) / (intrascene dynamic range) / (pixel-rate) well explains the effectiveness of the recently-reported techniques for extending dynamic range.

- The FoM calculated by (noise) × (power) / (gray-scale range) / (pixel-rate) is useful for evaluating the value of having high gray-scale resolution, and cyclic-based and delta-sigma ADCs are on the frontline for high and low pixel-rate regions, respectively."
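The first three figures of merit in the list are plain products and ratios, so they are easy to evaluate for any surveyed sensor. The sketch below assumes noise in e⁻ rms, power in watts, pixel rate in pixels per second, and intrascene dynamic range given in dB and converted to a linear ratio with the 20·log10 convention; the paper's exact units and normalizations may differ, and the sensor numbers in the usage lines are hypothetical.

```python
# Figures of merit from the list above (lower is better for all of them).
# Assumed units: noise in e- rms, power in W, pixel rate in pixels/s,
# so fom_conventional() is in e- x J per pixel.


def fom_conventional(noise_e: float, power_w: float, pixel_rate: float) -> float:
    """(noise) x (power) / (pixel-rate)."""
    return noise_e * power_w / pixel_rate


def fom_noise_squared(noise_e: float, power_w: float, pixel_rate: float) -> float:
    """(noise)^2 x (power) / (pixel-rate)."""
    return noise_e ** 2 * power_w / pixel_rate


def fom_dynamic_range(noise_e: float, power_w: float, pixel_rate: float,
                      dr_db: float) -> float:
    """(noise) x (power) / (intrascene DR) / (pixel-rate).

    The DR is converted from dB to a linear ratio (assumed 20*log10 convention).
    """
    dr_linear = 10 ** (dr_db / 20)
    return fom_conventional(noise_e, power_w, pixel_rate) / dr_linear


# Hypothetical sensor: 2 e- rms noise, 0.5 W, 500 Mpixel/s, 80 dB intrascene DR.
noise, power, rate = 2.0, 0.5, 500e6
print(f"conventional FoM:  {fom_conventional(noise, power, rate) * 1e9:.3g} e- nJ")
print(f"noise-squared FoM: {fom_noise_squared(noise, power, rate) * 1e9:.3g} e-^2 nJ")
print(f"DR-normalized FoM: {fom_dynamic_range(noise, power, rate, 80.0) * 1e9:.3g} e- nJ")
```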

Friday, July 27, 2018

TowerJazz CIS Update

SeekingAlpha publishes TowerJazz Q2 2018 earnings call transcript with an update on its CIS technology progress:

"We had announced the new 25 megapixel sensor using our state-of-the-art and record smallest 2.5 micron global shutter pixels with Gpixel, a leading industrial sensor provider in China.

The product is achieving very high traction in the market with samples having been delivered to major end customers. Another leading provider in this market, who has worked with us for many years will soon release a new global shutter sensor based on the same platform. Both of the above mentioned sensors are the first for families of sensors with different pixel count resolutions for each of those customers next generation industrial sensor offering ranging from 1 megapixel to above 100-megapixel.

We expect this global shutter with this outstanding performance based on our 65-nanometer 300-millimeter wafers to drive high volumes in 2019 and the years following. We see this as a key revenue driver from our industrial sensor customers. In parallel, e2v is ramping to production with its very successful Emerald sensor family on our 110-nanometer global shutter platform using state-of-the-art 2.8 micron pixel with best in class shutter efficiency and noise level performance. We recently released our 200-millimeter backside illumination for selected customers.

We are working with them on new products based on this technology, as well as on upgrading existing products from our front side illumination version to a BSI version, increase in the quantum efficiency of the pixels by using BSI, especially for the near IR regime within the industrial and surveillance markets, enabling our customers improve performance of their existing products. As a bridge to the next generation family of sensors in our advanced 300-millimeter platform.

The medical X-ray market, we are continually gaining momentum and are working with several market leaders on large panel dental and medical CMOS detectors based on our one die per wafer sensor technology using our well established and high margin stitching with best in class high dynamic range pixels providing customers with extreme value creation and high yield both in 200-millimeter and 300-millimeter wafer technology.

We presently have a strong business with market leadership in this segment and expect substantial growth in 2019 on 200-millimeter with 300 millimeter initial qualifications that will drive an incremental growth over the next multiple years.

For mid to long-term accretive market growth, we are progressing well with a leading DSLR camera supplier and have as well begun a second project with this customer, using state-of-the-art stacked wafer technology on 300-millimeter wafers. For this DSLR supplier, the first front side illumination project is progressing according to plan, expecting to ramp the volume production in 2020, while the second stacked wafer based project with industry leading alignment accuracy and associated performance benefits is expected to ramp to volume production a year after.

In addition, we are progressing on two very exciting programs in the augmented and virtual reality markets, one for 3D time of flight-based sensors and one for silicon-based screens for virtual reality head-mounted displays."
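The call quotes a pixel count (25 megapixels) and a pitch (2.5 µm) for the Gpixel sensor but not its array format. Assuming, purely for illustration, a square 5000 × 5000 array, a few lines of geometry give the approximate active-area size; dummy pixels, periphery and the real aspect ratio are ignored.

```python
import math


def array_size_mm(width_px: int, height_px: int, pitch_um: float):
    """Return (width, height, diagonal) of a pixel array's active area in mm."""
    width_mm = width_px * pitch_um / 1000.0
    height_mm = height_px * pitch_um / 1000.0
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


# Hypothetical square layout for a 25-megapixel array of 2.5 um global-shutter pixels.
w, h, d = array_size_mm(5000, 5000, 2.5)
print(f"{w:.2f} mm x {h:.2f} mm, diagonal {d:.2f} mm")
```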

Thursday, July 26, 2018

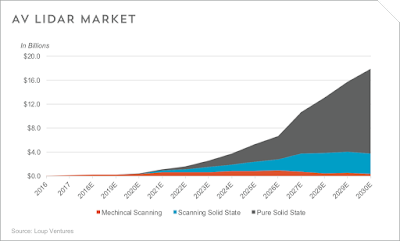

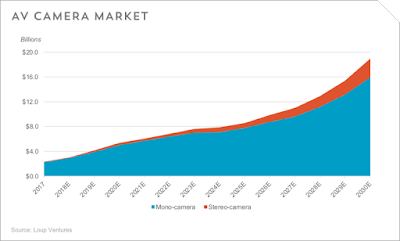

Loup Ventures LiDAR Technologies Comparison

Loup Ventures publishes its analysis of LiDAR technologies and how they compete with each other on the market:

There is also a comparison of camera, LiDAR and Radar technologies of autonomous vehicles:

Another Loup Ventures article tries to answer the question "If a human can drive a car based on vision alone, why can’t a computer?"

"While we believe Tesla can develop autonomous cars that “resemble human driving” primarily driven by cameras, the goal is to create a system that far exceeds human capability. For that reason, we believe more data is better, and cars will need advanced computer perception technologies such as RADAR and LiDAR to achieve a level of driving far superior than humans. However, since cameras are the only sensor technology that can capture texture, color and contrast information, they will play a key role in reaching level 4 and 5 autonomy and in-turn represent a large market opportunity."

Synaptics Under-Display Fingerprint Scanner Reverse Engineering

SystemPlus Consulting publishes a reverse engineering report of Synaptics’ under-display fingerprint scanner found inside the VIVO X21 UD Smartphone:

"This scanner uses optical fingerprint technology that allows integration under the display. With a stainless steel support and two flexible printed circuit layers, the Synaptics fingerprint sensor’s dimensions are 6.46 mm x 9.09 mm, with an application specific integrated circuit (ASIC) driver in the flex module. This image sensor is also assembled with a glass substrate where filters are deposited.

The sensor has a resolution of 30,625 pixels, with a pixel density of 777ppi. The module’s light source is provided by the OLED display. The fingerprint module uses a collimator layer corresponding to the layers directly deposited on the die sensor and composed of organic, metallic and silicon layers. This only allows light rays reflected at normal incidence to the collimator filter layer to pass through and reach the optical sensing elements. The sensor is connected by wire bonding to the flexible printed circuit and uses a CMOS process."
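The reported figures are self-consistent: 30,625 is exactly 175², and 777 ppi corresponds to a pitch of 25.4 mm / 777 ≈ 32.7 µm. Assuming a square 175 × 175 array (the quoted report does not give the format), the sketch below estimates the active area at roughly 5.7 mm on a side, which fits inside the quoted 6.46 mm × 9.09 mm sensor dimensions.

```python
import math

MM_PER_INCH = 25.4


def pitch_um_from_ppi(ppi: float) -> float:
    """Convert a pixel density in pixels per inch to a pixel pitch in micrometres."""
    return MM_PER_INCH * 1000.0 / ppi


pixels = 30_625
side_px = math.isqrt(pixels)          # assumes a square array
assert side_px * side_px == pixels    # 175 * 175 = 30,625

pitch = pitch_um_from_ppi(777)
side_mm = side_px * pitch / 1000.0
print(f"{side_px} x {side_px} pixels, pitch {pitch:.1f} um, active side {side_mm:.2f} mm")
```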

| Sensor die |

| ASIC die |

| Optical filter deposition on glass substrate |

Wednesday, July 25, 2018

Microsoft Proposes Government Regulation of Facial Recognition Use

Microsoft President Brad Smith writes in the company blog: "Advanced technology no longer stands apart from society; it is becoming deeply infused in our personal and professional lives. This means the potential uses of facial recognition are myriad.

Some emerging uses are both positive and potentially even profound. But other potential applications are more sobering. Imagine a government tracking everywhere you walked over the past month without your permission or knowledge. Imagine a database of everyone who attended a political rally that constitutes the very essence of free speech. Imagine the stores of a shopping mall using facial recognition to share information with each other about each shelf that you browse and product you buy, without asking you first.

Perhaps as much as any advance, facial recognition raises a critical question: what role do we want this type of technology to play in everyday society?

This in fact is what we believe is needed today – a government initiative to regulate the proper use of facial recognition technology, informed first by a bipartisan and expert commission."

Automotive News: Kyocera, Asahi Kasei

Nikkei: Kyocera presents a "Camera-LiDAR Fusion Sensor" to be commercialized in 2022 or 2023. The sensor combines the data collected by its camera and its LiDAR to improve the recognition rate of surrounding objects. The LiDAR uses MEMS-based beam scanning and has a resolution of 0.05°.
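An angular resolution translates into a lateral spacing between adjacent LiDAR samples that grows with distance. The sketch below assumes the 0.05° figure is the angular step between neighboring samples (the report does not say whether it is horizontal, vertical or both); at 100 m that step puts neighboring points about 8.7 cm apart.

```python
import math


def point_spacing_m(distance_m: float, angular_resolution_deg: float) -> float:
    """Lateral spacing between adjacent LiDAR samples at a given distance."""
    return distance_m * math.tan(math.radians(angular_resolution_deg))


for d in (10, 50, 100):
    print(f"{d:>4} m: {point_spacing_m(d, 0.05) * 100:.1f} cm between adjacent points")
```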

"When only LiDAR is used, it is difficult to detect the locations of objects," Kyocera said. "When only the camera is used, there is a possibility that shadows on the ground are mistakenly recognized as three-dimensional objects."

| Kyocera Camera-LiDAR |

Nikkei: Asahi Kasei develops a technology to measure a driver's pulse from video shot by a NIR camera. It relies on the fact that hemoglobin in the blood strongly absorbs green light, so the brightness of the face in the video changes with each heartbeat and the pulse rate can be calculated from those changes.

It takes about 8 s to measure the driver's pulse rate, including face authentication. The technology helps monitor the driver's physical condition.
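The principle described above, reading a pulse from periodic brightness changes of the face, is often called remote photoplethysmography. The sketch below is a generic illustration of it, not Asahi Kasei's method: it assumes a per-frame mean face brightness has already been extracted (face detection and authentication are omitted), removes the average level, and picks the strongest frequency in an assumed 0.7 to 3.0 Hz heart-rate band by evaluating the discrete-time Fourier transform on a fine frequency grid. The 8 s, 30 fps trace is synthetic.

```python
import math


def estimate_pulse_bpm(brightness, fps, f_lo=0.7, f_hi=3.0, step=0.01):
    """Return the dominant frequency of a brightness trace within a plausible
    heart-rate band (default 0.7-3.0 Hz, i.e. 42-180 bpm), in beats per minute."""
    n = len(brightness)
    mean = sum(brightness) / n
    x = [b - mean for b in brightness]  # drop the average skin brightness (DC)
    best_f, best_power = f_lo, -1.0
    for k in range(int(round((f_hi - f_lo) / step)) + 1):
        f = f_lo + k * step
        re = sum(v * math.cos(2 * math.pi * f * i / fps) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * f * i / fps) for i, v in enumerate(x))
        power = re * re + im * im  # spectral power at frequency f
        if power > best_power:
            best_f, best_power = f, power
    return best_f * 60.0


# Synthetic 8 s clip at 30 fps: brightness 120 with a small 1.25 Hz (75 bpm) ripple.
fps = 30
trace = [120 + 0.5 * math.sin(2 * math.pi * 1.25 * i / fps) for i in range(8 * fps)]
print(f"estimated pulse: {estimate_pulse_bpm(trace, fps):.0f} bpm")
```

With only 8 s of data the spectral peak is broad, which is one reason real systems add filtering and motion compensation on top of this basic idea.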

SiOnyx Launches Aurora Day/Night Video Camera

BusinessWire: SiOnyx announces the official launch of the SiOnyx Aurora action video camera with true day and night color imaging. Aurora is based on the SiOnyx Ultra Low Light technology that is protected by more than 40 patents and until now was only available in the highest-end night vision optics costing tens of thousands of dollars. This identical technology has now been cost-reduced for use in Aurora and other upcoming devices from SiOnyx and its partners.

SiOnyx backgrounder says: "SiOnyx XQE CMOS image sensors provide superior night vision, biometrics, eye tracking, and a natural human interface through our proprietary Black Silicon semiconductor technology. XQE image sensors deliver unprecedented performance advantages in infrared imaging, including sensitivity enhancements as high as 10x today’s sensor solutions.

XQE enhanced IR sensitivity takes advantage of the naturally occurring IR ‘nightglow’ to enable imaging under extreme (0.001 lux) conditions. XQE sensors provide high-quality daytime color as well as nighttime imaging capabilities that offer new levels of performance and threat detection.

As a result, SiOnyx’s Black Silicon platform represents a significant breakthrough in the development of smaller, lower cost, higher performance photonic devices.

The SiOnyx XQE technology is based on a proprietary laser process that creates the ultimate light trapping pixel, which is capable of increased quantum efficiency across the silicon band gap without damaging artifacts like dark current, non-uniformities, image lag or bandwidth limitations.

Compared to today’s CCD and CMOS image sensors, SiOnyx XQE CMOS sensors provide increased IR responsivity at the critical 850nm and 940nm wavelengths that are used in IR illumination. SiOnyx has more than 1,000 claims to the technology used in Black Silicon.

Surface modification of silicon enables SiOnyx to achieve the theoretical limit in light trapping, which results in extremely high absorption of both visible and infrared light.

The result is the industry’s best uncooled low light CMOS sensor that can be used in bright light (unlike standard night vision goggles) and can see and display color and display in high resolution (unlike thermal sensors)."
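
Why light trapping matters at 850 nm and 940 nm can be seen from a simple Beer-Lambert estimate: silicon absorbs weakly in the NIR, so a thin pixel lets most photons pass through unless the optical path is lengthened. The sketch below treats light trapping as a path-length multiplier. The absorption coefficients (roughly 500 and 200 cm⁻¹), the 3 μm thickness, the 10x enhancement and the refractive index are rough assumed values for illustration, not SiOnyx data. SiOnyx does not say which "theoretical limit" it means; the classical 4n² (Yablonovitch) bound on path enhancement is shown only as a reference point.

```python
import math

def absorbed_fraction(alpha_per_cm, thickness_um, path_enhancement=1.0):
    """Fraction of entering light absorbed in a silicon layer (reflection ignored).

    Simplified Beer-Lambert model: light trapping is represented as an
    effective optical path of thickness * path_enhancement.
    """
    path_cm = thickness_um * 1e-4 * path_enhancement   # um -> cm
    return 1.0 - math.exp(-alpha_per_cm * path_cm)

N_SI = 3.6                   # approximate NIR refractive index of silicon (assumed)
LIMIT_4N2 = 4 * N_SI ** 2    # classical light-trapping bound on path enhancement

ALPHA_PER_CM = {850: 500.0, 940: 200.0}   # rough absorption coefficients (assumed)
THICKNESS_UM = 3.0                          # hypothetical absorbing-layer thickness

for wavelength_nm, alpha in ALPHA_PER_CM.items():
    single = absorbed_fraction(alpha, THICKNESS_UM)
    trapped = absorbed_fraction(alpha, THICKNESS_UM, path_enhancement=10.0)
    print(f"{wavelength_nm} nm: single pass {single:.2f}, 10x path {trapped:.2f}")
print(f"4n^2 bound for n = {N_SI}: {LIMIT_4N2:.1f}x")
```

Under these assumptions a 3 μm layer absorbs only about 14% of 850 nm light and about 6% of 940 nm light in a single pass, which is why lengthening the optical path pays off most at 940 nm.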

Tuesday, July 24, 2018

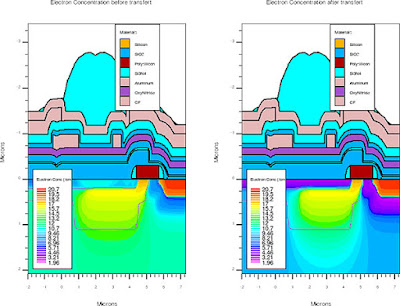

Toward the Ultimate High-Speed Image Sensor

MDPI Sensors publishes a paper "Toward the Ultimate-High-Speed Image Sensor: From 10 ns to 50 ps" by Anh Quang Nguyen, Vu Truong Son Dao, Kazuhiro Shimonomura, Kohsei Takehara, and Takeharu Goji Etoh from Hanoi University of Science and Technology (Vietnam), Vietnam National University HCMC, Ritsumeikan University (Japan), and Kindai University (Japan).

"The paper summarizes the evolution of the Backside-Illuminated Multi-Collection-Gate (BSI MCG) image sensors from the proposed fundamental structure to the development of a practical ultimate-high-speed silicon image sensor. A test chip of the BSI MCG image sensor achieves the temporal resolution of 10 ns. The authors have derived the expression of the temporal resolution limit of photoelectron conversion layers. For silicon image sensors, the limit is 11.1 ps. By considering the theoretical derivation, a high-speed image sensor designed can achieve the frame rate close to the theoretical limit. However, some of the conditions conflict with performance indices other than the frame rate, such as sensitivity and crosstalk. After adjusting these trade-offs, a simple pixel model of the image sensor is designed and evaluated by simulations. The results reveal that the sensor can achieve a temporal resolution of 50 ps with the existing technology."
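
To put these time scales in more familiar terms, if the temporal resolution is read as the interval between consecutive frames (an assumption; the paper's exact definition may differ), it converts to an equivalent frame rate as sketched below.

```python
def equivalent_frame_rate_fps(temporal_resolution_s):
    """Frame rate implied by one frame per temporal-resolution interval."""
    return 1.0 / temporal_resolution_s

CASES = [
    ("BSI MCG test chip", 10e-9),
    ("simulated pixel model", 50e-12),
    ("derived silicon limit", 11.1e-12),
]

for label, dt_s in CASES:
    print(f"{label}: {dt_s * 1e12:g} ps -> {equivalent_frame_rate_fps(dt_s):.3g} fps")
```

On that reading, 10 ns corresponds to 100 million frames per second, 50 ps to 20 billion, and the 11.1 ps limit to roughly 90 billion.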

Andor Reveals Details of its New BSI sCMOS Sona Camera

Andor publishes a video with some details of its new BSI sCMOS Sona camera: 4.2MP, 32 mm diagonal, up to 95% QE (up from 82% in the non-BSI version), vacuum sealed and cooled to -45°C:

There is also a recording of a webinar explaining the new camera features here.
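
A rough way to see what the QE jump from 82% to 95% buys is a single-pixel SNR model with shot noise and read noise only. The read-noise value below is a hypothetical placeholder, not an Andor specification, and dark current is ignored on the assumption that the deep-cooled sensor makes it negligible for short exposures.

```python
import math

def pixel_snr(photons, qe, read_noise_e):
    """Single-pixel SNR with photon shot noise and read noise only."""
    signal_e = qe * photons                            # photoelectrons collected
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)

READ_NOISE_E = 1.6   # hypothetical read noise (electrons rms), not an Andor spec

for photons in (10, 100, 1000):
    old = pixel_snr(photons, 0.82, READ_NOISE_E)     # non-BSI QE
    new = pixel_snr(photons, 0.95, READ_NOISE_E)     # BSI QE
    print(f"{photons:5d} photons: SNR {old:.2f} -> {new:.2f} (+{100 * (new / old - 1):.1f}%)")
```

In the shot-noise limit the gain is √(0.95/0.82), about 7.6%; when read noise dominates it approaches 0.95/0.82, about 16%, so the BSI upgrade matters most at the lowest light levels.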

Update: Andor publishes an official PR on its Sona camera:

AIRY3D Raises $10m in Series A Funding

PRNewswire: AIRY3D, a Montreal-based 3D vision start-up, raises $10m in an oversubscribed Series A funding round led by Intel Capital, including all Seed round investors CRCM Ventures, Nautilus Venture Partners, R7 Partners, Robert Bosch Venture Capital (RBVC), and WI Harper Group along with several angel investors. This financing will allow AIRY3D to advance its licensing roadmap for the first commercial adoptions of its DepthIQ 3D sensor platform with top-tier mobile OEMs in 2019.

"The simplicity and cost-efficiency of AIRY3D's 3D sensor technology, which does not require multiple components, helps position AIRY3D's technology as a potential enabler for many target markets", said Dave Flanagan, VP and group managing director at Intel Capital.

"AIRY3D's DepthIQ platform is a cost-effective technology that can accelerate the adoption of several new applications such as facial, gesture and object recognition, portrait modes, professional 'Bokeh' effects, and image segmentation in mobile markets and beyond," said Ingo Ramesohl, Managing Director at Robert Bosch Venture Capital GmbH. "We see tremendous opportunities in one of the largest business areas of Bosch - the automotive industry. The adoption of 3D cameras inside the cabin and outside with ADAS can drive significant improvements in safety and facilitate the industry's shift towards autonomous vehicles."

"After concluding our Seed round in March 2017, we fabricated DepthIQ 3D sensors on state-of-the-art CMOS wafers in collaboration with a leading sensor OEM. AIRY3D has since demonstrated 3D camera prototypes and numerous use cases. With the support of Intel, Bosch and our other investors, we now are formalizing partnerships with camera sensor OEMs and design-in collaborations with industry leading end customers in our strategic markets," said Dan Button, CEO of AIRY3D.

AIRY3D's DepthIQ platform can convert any single 2D imaging sensor into one that generates a 2D image and 3D depth data. It combines simple optical physics (in situ Transmissive Diffraction Mask technology) with proprietary algorithms to deliver versatile 3D sensing solutions while preserving 2D performance:

Thanks to GP for the link!

Monday, July 23, 2018

Sony Announces 48MP 0.8um Pixel Sensor

Sony announces the 1/2-inch IMX586 stacked CMOS sensor for smartphones, featuring 48MP, the industry’s highest pixel count. The new product uses the world's first 0.8 μm pixel size.

The new sensor uses the Quad Bayer color filter array, where adjacent 2x2 pixels come in the same color. During low light shooting, the signals from the four adjacent pixels are added, raising the sensitivity to a level equivalent to that of 1.6 μm pixels (12MP).
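
The binning step can be sketched in a few lines. This assumes an RGGB Bayer order with each color expanded to a 2x2 block, and plain digital summation; Sony's actual on-chip implementation is not described in the announcement. Summing each same-color 2x2 block turns the Quad Bayer mosaic into an ordinary Bayer mosaic with half the width and height, which is how 48MP of 0.8 μm pixels behaves like 12MP of 1.6 μm (2 × 0.8 μm) pixels.

```python
import numpy as np

def quad_bayer_bin(raw):
    """Sum each same-color 2x2 block of a Quad Bayer mosaic.

    The result is a conventional Bayer mosaic with half the width and height.
    """
    h, w = raw.shape
    if h % 2 or w % 2:
        raise ValueError("mosaic dimensions must be even")
    # Split rows and columns into (block, position-in-block) and sum the positions.
    return raw.reshape(h // 2, 2, w // 2, 2).sum(axis=(1, 3))

def quad_bayer_colors(h, w):
    """Per-pixel color labels (h, w multiples of 4), assuming an RGGB base tile."""
    bayer = np.array([["R", "G"], ["G", "B"]])
    tile = np.repeat(np.repeat(bayer, 2, axis=0), 2, axis=1)   # 4x4 Quad Bayer tile
    return np.tile(tile, (h // 4, w // 4))

raw = np.arange(16).reshape(4, 4)   # toy 4x4 mosaic
print(quad_bayer_colors(4, 4))
# The binned 2x2 output follows the Bayer layout R, G / G, B.
print(quad_bayer_bin(raw))
```

Each output pixel collects the signal of four input pixels, which is where the 1.6 μm-equivalent low-light sensitivity comes from; in bright light the full 48MP mosaic would instead need remosaicing to a standard Bayer pattern.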

12MP sensor (left) vs IMX586 (right)

Sunday, July 22, 2018

Review of Ion Implantation Technology for Image Sensors

MDPI Sensors publishes "A Review of Ion Implantation Technology for Image Sensors" by Nobukazu Teranishi, Genshu Fuse, and Michiro Sugitani from Shizuoka and Hyogo Universities and Sumitomo.

"Image sensors are so sensitive to metal contamination that they can detect even one metal atom per pixel. To reduce the metal contamination, the plasma shower using RF (radio frequency) plasma generation is a representative example. The electrostatic angular energy filter after the mass analyzing magnet is a highly effective method to remove energetic metal contamination. The protection layer on the silicon is needed to protect the silicon wafer against the physisorbed metals. The thickness of the protection layer should be determined by considering the knock-on depth. The damage by ion implantation also causes blemishes. It becomes larger in the following conditions if the other conditions are the same; a. higher energy; b. larger dose; c. smaller beam size (higher beam current density); d. longer ion beam irradiation time; e. larger ion mass. To reduce channeling, the most effective method is to choose proper tilt and twist angles. For P+ pinning layer formation, the low-energy B+ implantation method might have less metal contamination and damage, compared with the BF2+ method."
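
Several of the listed factors (dose, beam current density, irradiation time) are tied together by the standard dose relation D = I·t / (n·q·A), where I is the beam current, t the implant time, n the ion charge state, q the elementary charge and A the implanted area. The sketch below is a textbook calculation, not taken from the paper; the uniform circular spot model and the example numbers are hypothetical.

```python
import math

ELEMENTARY_CHARGE_C = 1.602176634e-19  # exact SI value

def implant_dose_per_cm2(beam_current_a, time_s, area_cm2, charge_state=1):
    """Areal dose: ions delivered (I*t / (n*q)) divided by the implanted area."""
    ions = beam_current_a * time_s / (charge_state * ELEMENTARY_CHARGE_C)
    return ions / area_cm2

def beam_current_density_a_per_cm2(beam_current_a, spot_diameter_cm):
    """Current density for a hypothetical uniform circular beam spot."""
    return beam_current_a / (math.pi * (spot_diameter_cm / 2.0) ** 2)

# Hypothetical example: 1 mA of singly charged ions for 10 s over a 300 mm wafer.
wafer_area_cm2 = math.pi * 15.0 ** 2
print(f"dose: {implant_dose_per_cm2(1e-3, 10.0, wafer_area_cm2):.2e} ions/cm^2")

# Same current, smaller spot: higher current density (condition c in the abstract).
for d_cm in (2.0, 1.0):
    print(f"{d_cm} cm spot: {beam_current_density_a_per_cm2(1e-3, d_cm):.2e} A/cm^2")
```

Halving the spot diameter at the same current quadruples the local current density even though the total dose delivered to the wafer is unchanged.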

Saturday, July 21, 2018

e2v on CCD vs CMOS Sensors

AZO Materials publishes a Teledyne e2v article, "The Development of CMOS Image Sensors," with a table comparing CCD and CMOS sensors. Although I do not agree with some of the statements in the table, here it is:

| Characteristic | CCD | CMOS |
|---|---|---|
| Signal from pixel | Electron packet | Voltage |
| Signal from chip | Analog voltage | Bits (digital) |
| Readout noise | Low | Lower at equivalent frame rate |
| Fill factor | High | Moderate or low |
| Photo-response | Moderate to high | Moderate to high |
| Sensitivity | High | Higher |
| Dynamic range | High | Moderate to high |
| Uniformity | High | Slightly lower |
| Power consumption | Moderate to high | Low to moderate |
| Shuttering | Fast, efficient | Fast, efficient |
| Speed | Moderate to high | Higher |
| Windowing | Limited | Multiple |
| Anti-blooming | High to none | High, always |
| Image artefact | Smearing, charge transfer inefficiency | FPN, motion (ERS), PLS |
| Biasing and clocking | Multiple, higher voltage | Single, low-voltage |
| System complexity | High | Low |
| Sensor complexity | Low | High |
| Relative R&D cost | Lower | Lower or higher depending on series |

Valeo XtraVue Demos

Valeo publishes a video showing use cases for its XtraVue system based on a camera, laser scanner, and vehicle-to-vehicle networking:

And another, somewhat dated, video has been re-posted on the Valeo channel:

Friday, July 20, 2018

Andor Teases BSI sCMOS Sensors

Andor publishes a teaser for its upcoming Sona BSI sCMOS sensor-based cameras, calling them "the world's most sensitive," to be officially unveiled on July 24, 2018:

Thursday, July 19, 2018

Nobukazu Teranishi Receives Emperor Medal of Honor with Purple Ribbon

Nobukazu Teranishi, inventor of the pinned photodiode, receives the Medal of Honor with Purple Ribbon from the Emperor of Japan. The Medal of Honor with Purple Ribbon is awarded for great achievements in science, engineering, sports and the arts.

3D-enabled Smartphone Shipments to Exceed 100M in 2018

Digitimes quotes a forecast by China-based Sigmaintell that global shipments of smartphones with 3D sensing cameras will top 100m units in 2018, with Apple as the leading vendor, followed by Xiaomi, Oppo and Vivo.

Light Raises $121m from SoftBank and Leica

Light Co. announces a Series D funding round of $121m, led by the SoftBank Vision Fund. Leica Camera AG also participated in the financing.

The new funding will allow Light to expand its platform into security, robotic, automotive, aerial and industrial imaging applications. Later this year, the first mobile phone incorporating Light’s technology will be available to consumers around the world.

“Light’s technology is a revelation, showing that several small, basic camera modules, combined with highly powerful software, can produce images that rival those produced by cameras costing and weighing orders of magnitude more. We’re just getting started. Having the support of SoftBank Vision Fund as a strategic investor means this technology will see more of the world sooner than we could have ever imagined,” said Dave Grannan, Light CEO and Co-founder.

“With the rapid development of the computational photography, partnering with the innovators at Light ensures Leica to extend its tradition of excellence into the computational photography era,” said Dr. Andreas Kaufmann, Leica Chairman of the Supervisory Board.

“It is humbling to have the support of the most iconic camera brand in the world. We are excited by the overwhelming potential of the collaboration with Leica,” said Dr. Rajiv Laroia, Light CTO and Co-founder.

The SoftBank Vision Fund investment will be made in tranches, subject to certain conditions.

Light’s technologies, including its Lux Capacitor camera control chip and its Polar Fusion Engine for multi-image processing, are available for licensing for use in applications like smartphones, security, automotive, robotics, drones, and more.
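
The claim that several small modules plus software can rival a bigger camera rests partly on a simple statistical effect: averaging N aligned frames with independent noise cuts the noise standard deviation by about √N. The sketch below shows only that effect on synthetic data. It is not Light's Polar Fusion Engine, whose algorithms are not described, and it assumes the frames are already registered, which is the hard part with physically offset modules. The noise level and module counts are hypothetical.

```python
import numpy as np

def fuse_by_averaging(frames):
    """Average N already-registered frames of the same scene, pixel by pixel."""
    return np.mean(np.stack(frames), axis=0)

rng = np.random.default_rng(1)
scene = np.full((64, 64), 100.0)   # flat synthetic scene
sigma = 10.0                         # hypothetical per-module noise (std)

for n_modules in (1, 4, 16):
    frames = [scene + sigma * rng.standard_normal(scene.shape) for _ in range(n_modules)]
    residual = fuse_by_averaging(frames) - scene
    print(f"{n_modules:2d} modules: noise {residual.std():.2f} "
          f"(sqrt(N) prediction {sigma / np.sqrt(n_modules):.2f})")
```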

Tuesday, July 17, 2018

Imaging Resource on Nikon Image Sensor Design

Imaging Resource publishes a very nice article "Pixels for Geeks: A peek inside Nikon’s super-secret sensor design lab" by Dave Etchells. It contains quite a lot of Nikon-internal information that has been publicly released for the first time. Just a few interesting quotes out of many:

"Nikon actually designs their own sensors, to a fairly minute level of detail. I think this is almost unknown in the photo community; most people just assume that “design” in Nikon’s case simply consists of ordering-up different combinations of specs from sensor manufacturers.

In actuality, they have a full staff of sensor engineers who design cutting-edge sensors like those in the D5 and D850 from the ground up, optimizing their designs to work optimally with NIKKOR lenses and Nikon's EXPEED image-processor architecture.

As part of matching their sensors to NIKKOR optics, Nikon’s sensor designers pay a lot of attention to the microlenses and the structures between them and the silicon surface. Like essentially all camera sensors today, Nikon’s microlenses are offset a variable amount relative to the pixels beneath, to compensate for light rays arriving at oblique angles near the edges of the array."
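
The microlens offset described above can be approximated to first order with simple geometry: a chief ray arriving at angle θ refracts into the optical stack, and the microlens is shifted toward the array center by roughly the stack height times the tangent of the refracted angle. This is a textbook sketch, not Nikon's design method; the linear chief-ray-angle profile, the stack height, the refractive index and the function name are all hypothetical.

```python
import math

def microlens_shift_um(x_mm, y_mm, half_diag_mm, cra_max_deg,
                       stack_height_um, n_stack):
    """First-order microlens offset for a pixel at (x, y) mm from the array center.

    Hypothetical model: the chief ray angle (CRA) rises linearly with image
    height to cra_max_deg at the corner, refracts once into a uniform optical
    stack of index n_stack, and travels stack_height_um to the photodiode.
    Returns (dx, dy) in um, pointing toward the array center.
    """
    r_mm = math.hypot(x_mm, y_mm)
    if r_mm == 0.0:
        return 0.0, 0.0                                   # on-axis pixel: no offset
    cra = math.radians(cra_max_deg) * min(r_mm / half_diag_mm, 1.0)
    theta_in = math.asin(math.sin(cra) / n_stack)         # Snell's law into the stack
    shift_um = stack_height_um * math.tan(theta_in)       # lateral walk of the chief ray
    return -shift_um * x_mm / r_mm, -shift_um * y_mm / r_mm

# Corner pixel of a hypothetical 24 x 18 mm array, 20 deg corner CRA, 3 um stack, n = 1.5.
dx, dy = microlens_shift_um(12.0, 9.0, 15.0, 20.0, 3.0, 1.5)
print(f"shift: dx = {dx:.3f} um, dy = {dy:.3f} um")
```

With these made-up numbers the corner microlens moves by about 0.7 μm; real designs also account for lens-specific CRA curves, which is presumably where matching to NIKKOR optics comes in.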

Apparently, Nikon uses Silvaco tools for pixel device and process simulations:

Taiwanese CIS Makers Look for New Opportunities

Digitimes reports that CIS makers Silicon Optronics and Pixart, along with backend houses Xintec, VisEra, King Yuan Electronics (KYEC) and Tong Hsing Electronic Industries, are shifting their focus to niche markets as they fail to find growth in mainstream smartphone cameras.

Kingpak manages to survive in the smartphone market thanks to its contract with Sony to provide backend services for CIS. Other Taiwan-based CIS suppliers are unable to grow in smartphone applications, despite promising demand for multi-camera phones.

Meanwhile, Silicon Optronics (SOI) held its IPO on the Taiwan Stock Exchange (TWSE) on July 16. Its share price rose 44.55% on its first day of trading.

Mantis Vision Raises $55m in Round D

PRNewswire: Mantis Vision announces the closing of its Series D round of $55m with a total investment of $83m to date. New funds will serve to extend the company's technological edge, accelerate Mantis Vision's go-to-market strategy, expand its international workforce and support external growth opportunities. The Series D investment was led by Luenmei Quantum Co. Ltd., a new investor in Mantis Vision, and Samsung Catalyst Fund, an existing shareholder of the company.

Mantis Vision and Luenmei Quantum also announced the formation of a new joint venture, "MantisVision Technologies", to further strengthen Mantis Vision's position and growth in the Greater China Market.

Mantis Vision is planning to double its global workforce, adding 140 employees in Israel, the U.S., China and the Slovak Republic by the end of 2020. As part of the latest funding round, Mantis Vision will expand its talent pool: engineers for advanced R&D algorithmic research in computer vision and deep learning, advanced optics experts, mobile camera engineers, 3D app developers and 3D volumetric studio experts, among other open positions in program management and business development.

According to So Chong Keung, Luenmei Quantum Co. Ltd. President and GM: "Luenmei Quantum is closely following the Israeli high-tech industry, which creates outstanding technology. Mantis Vision's versatile and advanced 3D technologies is well positioned and suited for mobile, secure face ID applications and entertainment industries in China. We found that Mantis Vision is the right match for Luenmei Quantum, combining hi-tech, innovation and passion."

According to Gur Arie Bitan, Founder and CEO of Mantis Vision: "This latest announcement is another proof of Mantis Vision's meteoric advancements in this recent period, technologically and business-wise. We regard our continued partnership with Samsung Catalyst Fund and Luenmei Quantum Co. as a strategic partnership and thanks to our new joint venture, we will be able to further strengthen our grip in the Greater Chinese market."

NIST Publishes LiDAR Characterization Standard

Spar3D: NIST's Physical Measurement Laboratory develops an international performance evaluation standard for LiDARs.

An invitation was sent to all leading manufacturers of 3D laser scanners to visit NIST and run the approximately 100 tests specified in the draft standard. Four of the manufacturers traveled to NIST (one each from Germany and France) to participate in the runoff. Another sent an instrument during the week for testing. Two other manufacturers who could not attend have expressed interest in visiting NIST soon to try out the tests. These seven manufacturers represent about four-fifths of the entire market for large volume laser scanners.

The new standard ASTM E3125 is available here.

Monday, July 16, 2018

PMD Presentation at AWE 2018

PMD VP Business Development Mitchel Reifel presents the company's ToF solutions for AR and VR applications at Augmented World Expo:

Sunday, July 15, 2018

Saturday, July 14, 2018

Thursday, July 12, 2018

Magic Leap Gets Investment from AT&T

Techcrunch reports that AT&T makes a strategic investment in Magic Leap, a developer of AR glasses. Magic Leap's latest round, Series D, valued the startup at $6.3b, and the companies have confirmed that AT&T's investment completes the $963m Series D round.

So far, Magic Leap has raised $2.35b from a number of strategic backers including Google, Alibaba and Axel Springer.

AutoSens Announces its Awards Finalists

AutoSens Awards reveals the shortlisted finalists for 2018, some of them related to imaging:

Most Engaging Content:

- Mentor Graphics, Andrew Macleod

- videantis, Marco Jacobs

- Toyota Motor North America, CSRC, Rini Sherony

- 2025AD, Stephan Giesler

- EE Times, Junko Yoshida

Hardware Innovation:

- NXP Semiconductors

- Cepton

- Renesas

- OmniVision

- Velodyne Lidar

- Robert Bosch

Software Innovation:

- Dibotics

- Algolux

- Brodmann17

- Civil Maps

- Dataspeed

- Immervision

- Prophesee

Most Exciting Start-Up:

- Hailo

- Metamoto

- May Mobility

- AEye

- Ouster

- Arbe Robotics

Game Changer:

- Siddartha Khastgir, WMG, University of Warwick, UK

- Marc Geese, Robert Bosch

- Kalray

- Prof. Nabeel Riza, University College Cork

- Intel

- NVIDIA and Continental partnership

Greatest Exploration:

- Ding Zhao, University of Michigan

- Prof Philip Koopman, Carnegie Mellon University

- Prof Alexander Braun, University of Applied Sciences Düsseldorf

- Cranfield University Multi-User Environment for Autonomous Vehicle Innovation (MUEAVI)

- Professor Natasha Merat, Institute for Transport Studies

- Dr Valentina Donzella, WMG University of Warwick

Best Outreach Project:

- NWAPW

- Detroit Autonomous Vehicle Group

- DIY Robocars

- RobotLAB

- Udacity

Image Sensors America Agenda

Image Sensors America, to be held on October 11-12, 2018 in San Francisco, announces its agenda with many interesting papers:

State of the Art Uncooled InGaAs Short Wave Infrared Sensors

Dr. Martin H. Ettenberg | President of Princeton Infrared Technologies

Super-Wide-Angle Cameras- The Next Smartphone Frontier Enabled by Miniature Lens Design and the Latest Sensors

Patrice Roulet Fontani | Vice President, Technology and Co-Founder of ImmerVision

SPAD vs. CMOS Image Sensor Design Challenges – Jitter vs. Noise

Dr. Daniel Van Blerkom | CTO & Co-Founder of Forza Silicon

sCMOS Technology: The Most Versatile Imaging Tool in Science

Dr. Scott Metzler | PCO Tech

Image Sensor Architecture

Presentation By Sub2R

Using Depth Sensing Cameras for 3D Eye Tracking

Kenneth Funes Mora | CEO and Co-founder of Eyeware

Autonomous Driving: The Development of Image Sensors?

Ronald Mueller | CEO of Vision Markets and Associate Consultant of Smithers Apex

SPAD Arrays for LiDAR Applications

Carl Jackson | CTO and Founder of SensL Division, OnSemi

Future Image Sensors for SLAM and Indoor 3D Mapping

Vitaliy Goncharuk | CEO & Founder of Augmented Pixels

Future Trends in Imaging Beyond the Mobile Market

Amos Fenigstein | Senior Director of R&D for Image Sensors of TowerJazz

Presentation by Gigajot

Wednesday, July 11, 2018

Four Challenges for Automotive LiDARs

DesignNews publishes a list of four challenges that LiDARs have to overcome on the way to wide acceptance in vehicles:

Price reduction:

“Every technology gets commoditized at some point. It will happen with LiDAR,” said Angus Pacala, co-founder and CEO of LiDAR startup Ouster. “Automotive radars used to be $15,000. Now, they are $50. And it did take 15 years. We’re five years into a 15-year lifecycle for LiDAR. So, cost isn’t going to be a problem.”

Increase detection range:

“Range isn’t always range,” said John Eggert, director of automotive sales and marketing at Velodyne. “[It’s] dynamic range. What do you see and when can you see it? We see a lot of ‘specs’ around 200 meters. What do you see at 200 meters if you have a very reflective surface? Most any LiDAR can see at 100, 200, 300 meters. Can you see that dark object? Can you get some detections off a dark object? It’s not just a matter of reputed range, but range at what reflectivity? While you’re able to see something very dark and very far away, how about something very bright and very close simultaneously?”
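Eggert's point about reflectivity can be made concrete with a first-order LiDAR link budget. Assuming an extended Lambertian target that fills the beam, and ignoring atmospheric loss and optics (simplifications for illustration, not any vendor's model), received power scales as reflectivity divided by range squared. That answers two questions: at what range does a dark target return as little light as a bright one at 200 m, and how wide a signal span must the receiver cover to see a bright, close object and a dark, far one at the same time? The 10%, 80%, 90% and 5 m values below are assumed examples, and the helper names are hypothetical:

```python
import math


def relative_return(reflectivity: float, range_m: float) -> float:
    """Relative received power for an extended Lambertian target: P ~ rho / R^2.

    Constant factors (laser power, aperture, optics, atmosphere) are dropped,
    so only ratios between calls are meaningful.
    """
    return reflectivity / range_m ** 2


def equivalent_range(ref_rho: float, ref_range_m: float, target_rho: float) -> float:
    """Range at which a target_rho target returns the same power as ref_rho at ref_range_m."""
    return ref_range_m * math.sqrt(target_rho / ref_rho)


# Assumed example: a sensor that just detects an 80% reflective target at 200 m
dark_range = equivalent_range(0.80, 200.0, 0.10)
print(f"Same sensor on a 10% target: about {dark_range:.0f} m")

# Span needed to see a 90% target at 5 m and a 10% target at 200 m simultaneously
ratio = relative_return(0.90, 5.0) / relative_return(0.10, 200.0)
print(f"Signal ratio, bright/close vs dark/far: {ratio:,.0f}x")
```

The square-root dependence is why a "200 m" rating on a bright target shrinks to roughly 70 m on a dark one under these assumptions, and the four-orders-of-magnitude signal ratio is the "very bright and very close simultaneously" problem Eggert describes. Detector noise, sunlight and beam divergence are omitted from this sketch.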

Improve robustness:

“It comes down to vibration and shock, wear and tear, cleaning—all the aspects that we see on our cars,” said Jada Smith, VP engineering and external affairs at Aptiv, a Delphi spin-off. “LiDAR systems have to be able to withstand that. We need perfection in the algorithms. We have to be confident that the use cases are going to be supported time and time again.”

Withstand the environment and different weather conditions:

Jim Schwyn, CTO of Valeo North America, said, “What if the LiDAR is dirty? Are we in a situation where we are going to take the gasoline tank from a car and replace it with a windshield washer reservoir to be able to keep these things clean?”

The potentially fatal LiDAR flaws that need to be corrected:

- Bright sun against a white background

- A blizzard that causes whiteout conditions

- Early morning fog

SmartSens Unveils GS BSI VGA Sensor

PRNewswire: SmartSens launches SC031GS, calling it "the world's first commercial-grade 300,000-pixel Global Shutter CMOS image sensor based on BSI pixel technology." Other companies have announced GS BSI sensors, but those have higher-than-VGA resolutions.

The SC031GS is aimed at a wide range of commercial products, including smart barcode readers, drones, smart modules (Gesture Recognition/vSLAM/Depth Information/Optical Flow) and other image recognition-based AI applications, such as facial recognition and gesture control.

SC031GS uses large 3.75um pixels (1/6" optical format) and SmartSens' single-frame HDR technology, combined with a global shutter. The maximum frame rate is 240fps.
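The quoted specs are consistent with each other. Assuming the active array is exactly 640 x 480 (standard VGA; the exact array size is not stated in the announcement), 3.75um pixels give a 2.4 mm x 1.8 mm array with a 3.0 mm diagonal, roughly the diagonal conventionally associated with a 1/6" optical format. At 240fps each frame gets about 4.2 ms, and the readout must deliver about 74 Mpixel/s:

```python
import math

PIXEL_PITCH_UM = 3.75      # from the announcement
COLS, ROWS = 640, 480      # assumed: standard VGA array
MAX_FPS = 240              # from the announcement

# Physical array size from pixel count and pitch
width_mm = COLS * PIXEL_PITCH_UM / 1000
height_mm = ROWS * PIXEL_PITCH_UM / 1000
diagonal_mm = math.hypot(width_mm, height_mm)

# Timing and throughput at the maximum frame rate
frame_time_ms = 1000 / MAX_FPS
pixel_rate_mps = COLS * ROWS * MAX_FPS / 1e6  # megapixels per second

print(f"Array: {width_mm:.2f} x {height_mm:.2f} mm, diagonal {diagonal_mm:.2f} mm")
print(f"Frame time at {MAX_FPS} fps: {frame_time_ms:.2f} ms")
print(f"Pixel throughput: {pixel_rate_mps:.1f} Mpixel/s")
```

The 640 x 480 = 307,200 pixel count also matches the "300,000-pixel" figure in the press release.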

Leo Bai, GM of SmartSens' AI Image Sensors Division, stated: "SmartSens is not only a new force in the global CMOS image sensor market, but also a company that commits to designing and developing products that meet the market needs and reflect industry trends. We partnered with key players in the AI field to integrate AI functions into the product design. SC031GS is such a revolutionary product that is powered by our leading Global Shutter CMOS image sensing technology and designed for trending AI applications."

SC031GS is now in mass production.
