Monday, February 28, 2022

Article about Peter Noble and his Early Image Sensors

Friday, February 25, 2022

PreAct Announces Software-Definable Automotive Flash LiDAR

Oregon-based PreAct Technologies announces the T30P flash LiDAR, said to be the industry’s first software-definable LiDAR. Vehicles with software-defined architectures require sensor technology that can support over-the-air updates throughout the life of the vehicle, allowing OEMs to generate ongoing revenue by offering powerful new features and functionality.

“We are excited to bring our software-definable flash LiDAR to market, furthering the advancement of autonomous mobility across multiple industries,” said Paul Drysch, CEO of PreAct Technologies. “We’ve spent the last three years creating a solution that fulfills the need of software-defined vehicles, providing the most value for Tier 1s and OEMs over the long term by making any ADAS application relevant for the entire life of the vehicle.”

PreAct’s flash LiDAR architecture is based on modulated waveforms that can be optimized for different applications via over-the-air updates, along with an application stack that resides on the edge. The flexibility of a software-defined LiDAR allows Tier 1 suppliers and OEMs to package one sensor for multiple use cases – everything from true curb detection and gesture recognition to self-parking and automatic door actuation – and to update it to meet their changing needs as more user and sensor data become available.

“Near field automotive sensors have either been low-precision and low-cost, or high-precision and high-cost,” said Ian Riches, VP for the Global Automotive Practice at Strategy Analytics. “By bringing a high-precision, low-cost sensor to market, PreAct is enabling a huge range of safety and convenience features. The software-defined characteristics of the T30P will allow these features to improve during the lifetime of the vehicle, unlocking new revenue streams for automakers.”

The T30P, with a frame rate of 200 fps and QVGA resolution, is also the fastest flash LiDAR on the market, making it well suited for ground and air robotics or industrial applications – systems that all share a need for fast, accurate and high-resolution sensors that can reliably define and track objects in all environmental conditions.

PreAct’s T30P Flash LiDAR sensor suite will be available in July 2022.

Thursday, February 24, 2022

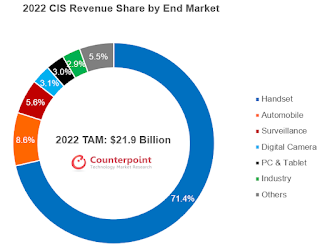

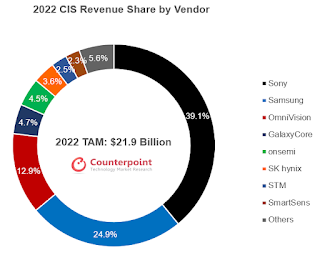

Counterpoint Forecasts Sony Market Share to Shrink to 39% in 2022

Wednesday, February 23, 2022

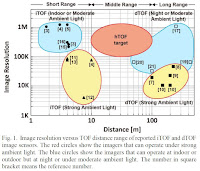

Sony in Search for Killer Applications for its ToF Sensor

Tuesday, February 22, 2022

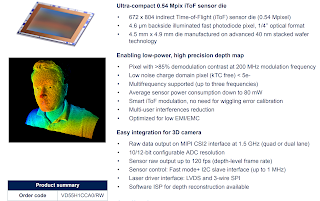

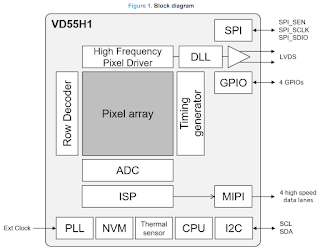

ST Unveils its First iToF Sensor with 0.5MP Resolution

Monday, February 21, 2022

Sigma Updates on the Next Generation Foveon Sensor Development

- Stage 1: Repeated design simulations of the new three-layer structure to confirm that it will function as intended.

- Stage 2: Prototype evaluation using a small image sensor with the same pixel size as the product specifications but with a reduced total pixel count to verify the performance characteristics of the image sensor in practice.

- Stage 3: Final prototype evaluation using a full-frame image sensor with the same specifications as the mass-production product, including the AD converter, etc.

Vision Sensor-Processor with In-Pixel Memory

Sunday, February 20, 2022

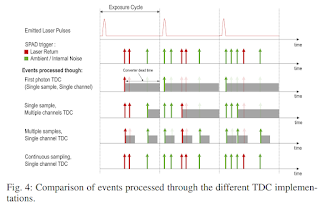

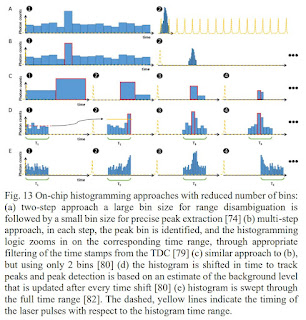

High-Throughput SPAD Signal Processing

Saturday, February 19, 2022

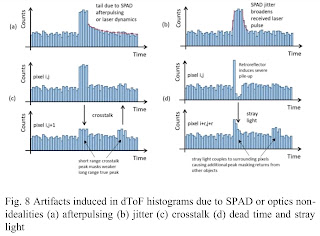

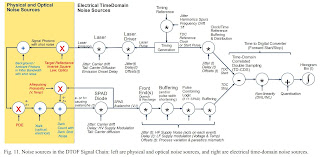

dToF Tutorial from Edinburgh University and ST

Friday, February 18, 2022

Recent Videos: IIT Delhi, ADI, Omnivision, FLIR, Hamamatsu

Himax Reports 2021 Results

Thursday, February 17, 2022

Intel Heritage in Image Sensors

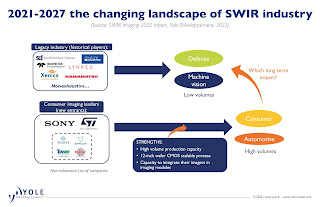

Yole Predicts that Sony and ST Will Capture 95% of SWIR Imagers Market

Yole Développement believes that ST and Sony could disrupt the technological landscape with their SWIR imagers:

"In 2021, the SWIR industry’s leading players were SCD, Sensors Unlimited, and Teledyne FLIR, sharing more than 50% of the 11,000 units shipped in the year. These leaders are subsidiaries of leading defense companies that started developing SWIR technology with the support of governments for strategic purposes. They constitute the legacy side of the SWIR industry.

On the other side, STMicroelectronics and Sony, two leaders in the consumer imaging industry started being active players in SWIR with new technologies including quantum dots. Their entrance might be explained by the growing demand from consumer OEM for new integration designs such as under-display 3D sensing in smartphones. If SWIR imagers reach a low price point, shipments could skyrocket to hundreds of millions within a few years. The SWIR industry could emulate the current 3D imaging industry, where STMicroelectronics and Sony share nearly 95% of the 225 million shipments (2020 data)."

Wednesday, February 16, 2022

Peter Noble, Marvin White, and Northrop Grumman Win 2021 Emmy Awards

- Correlated Double Sampling for Image Sensors

- Marvin H. White

- Northrop Grumman Mission Systems Group