Press release: https://metalenz.com/metalenz-launches-polar-id-enabling-simple-secure-face-unlock-for-smartphones/

Metalenz Launches Polar ID, Enabling Simple, Secure Face Unlock for Smartphones

- The world’s first polarization sensor for smartphones, Polar ID provides ultra-secure facial authentication in a condensed footprint, lowering implementation cost and complexity.

- Now demonstrated on Qualcomm Technologies’ latest Snapdragon mobile platform, Polar ID is poised to drive large-scale adoption of secure face unlock across the Android ecosystem.

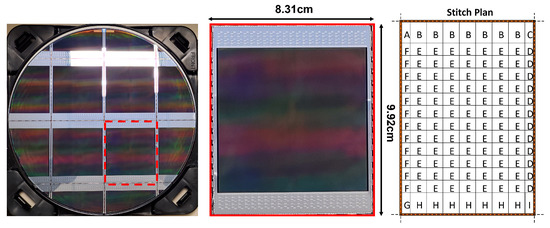

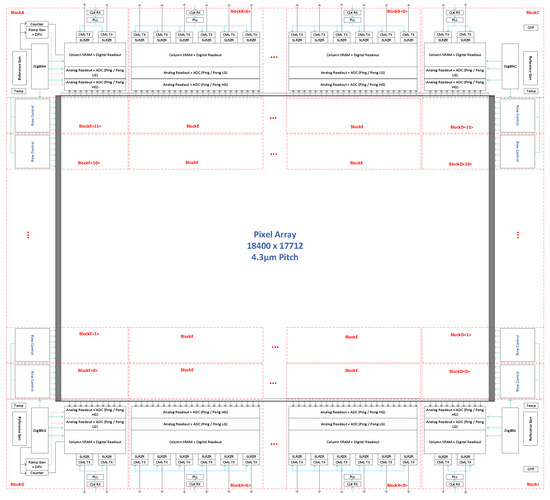

Boston, MA – October 26, 2023 – Meta-optics industry leader Metalenz unveiled Polar ID, a revolutionary new face unlock solution, at Qualcomm Technologies’ annual Snapdragon Summit this week. As the world’s only consumer-grade imaging system that can sense the full polarization state of light, Polar ID enables the next level of biometric security. Using breakthrough advances in meta-optic capability, Polar ID accurately captures the unique “polarization signature” of a human face. With this additional layer of information, even the most sophisticated 3D masks and spoof instruments are immediately detected as non-human.

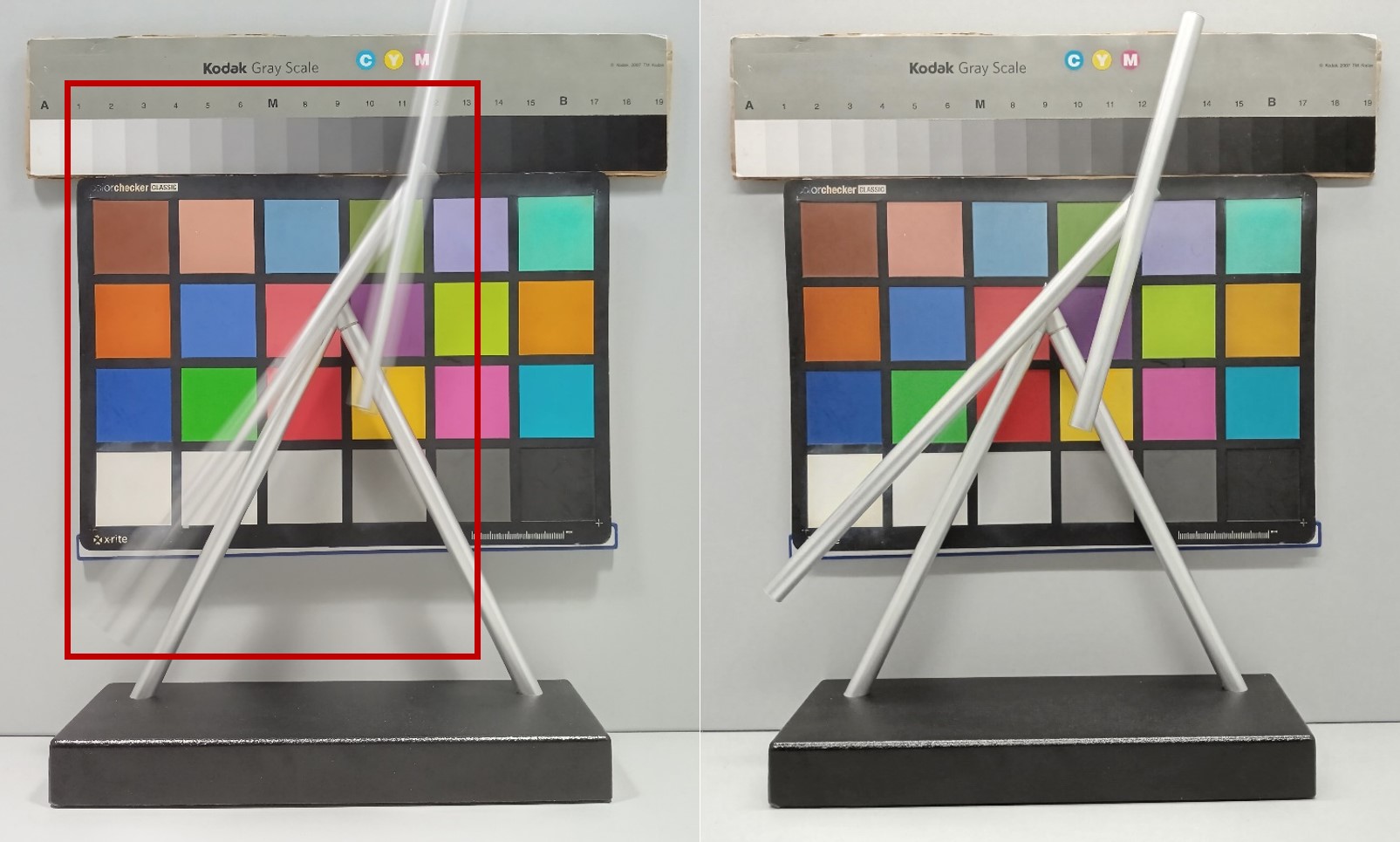

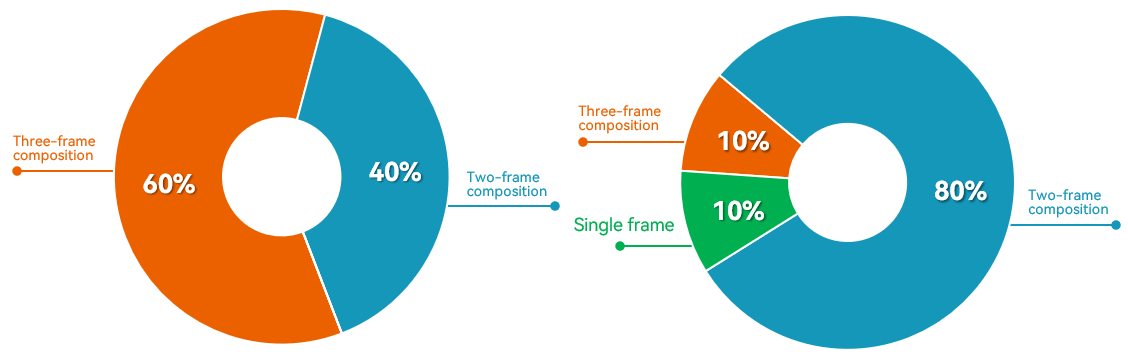

Facial authentication provides a seamless method for unlocking phones and enabling digital payments. However, making the solution sufficiently secure requires expensive, bulky, and often power-hungry optical modules. Historically, this has limited face unlock to only a few high-end phone models. Polar ID harnesses meta-optic technology to extract additional information, such as facial contour details, and to detect human tissue liveness, all from a single image. It is significantly more compact and cost-effective than incumbent “Structured Light” face authentication solutions, which require an expensive dot-pattern projector and multiple images.

Now demonstrated on a smartphone reference design powered by the new Snapdragon® 8 Gen 3 Mobile Platform, Polar ID has the efficiency, footprint, and price point to enable any Android smartphone OEM to bring the convenience and security of face unlock to the hundreds of millions of mobile devices that currently use fingerprint sensors.

“Size, cost, and performance: those are the key metrics in the consumer industry,” said Rob Devlin, Metalenz CEO & Co-founder. “Polar ID offers an advantage in all three. It’s small enough to fit in the most challenging form factors, eliminating the need for a large notch in the display. It’s secure enough that it doesn’t get fooled by the most sophisticated 3D masks. It’s substantially higher resolution than existing facial authentication solutions, so even if you’re wearing sunglasses and a surgical mask, the system still works. As a result, Polar ID delivers secure facial recognition at less than half the size and cost of incumbent solutions.”

“With each new generation of our flagship Snapdragon 8 series, our goal is to deliver the next generation of cutting-edge smartphone imaging capabilities to consumers. Our advanced Qualcomm® Spectra™ ISP and Qualcomm® Hexagon™ NPU were specifically designed to enable complex new imaging solutions, and we are excited to work with Metalenz to support their new Polar ID biometric imaging solution on our Snapdragon mobile platform for the first time,” said Judd Heape, VP of Product Management, Qualcomm Technologies, Inc.

“Polar ID is a uniquely powerful biometric imaging solution that combines our polarization image sensor with post-processing algorithms and sophisticated machine learning models to reliably and securely recognize and authenticate the phone’s registered user. Working closely with Qualcomm Technologies to implement our solution on their reference smartphone powered by Snapdragon 8 Gen 3, we were able to leverage the advanced image signal processing capabilities of the Qualcomm Spectra ISP while also implementing mission-critical aspects of our algorithms in the secure framework of the Qualcomm Hexagon NPU, to ensure that the solution is not only spoof-proof but also essentially unhackable,” said Pawel Latawiec, CTO of Metalenz. “The result is an extremely fast and compute-efficient face unlock solution ready for OEMs to use in their next generation of Snapdragon 8 Gen 3-powered flagship Android smartphones.”

Polar ID is under early evaluation with several top smartphone OEMs, and additional evaluation kits will be made available in early 2024. Metalenz will exhibit its revolutionary Polar ID solution at MWC Barcelona and is now booking meetings to showcase a live demo of the technology to mobile OEMs.

Contact sales@metalenz.com to reserve your demo.