A paper by Dodda et al. from a research group in the Material Science and Engineering department at Pennsylvania State University was recently published in Nature Materials.

Link: https://www.nature.com/articles/s41563-022-01398-9

Active pixel sensor matrix based on monolayer MoS2 phototransistor array

Abstract:

In-sensor processing, which can reduce the energy and hardware burden for many machine vision applications, is currently lacking in state-of-the-art active pixel sensor (APS) technology. Photosensitive and semiconducting two-dimensional (2D) materials can bridge this technology gap by integrating image capture (sense) and image processing (compute) capabilities in a single device. Here, we introduce a 2D APS technology based on a monolayer MoS2 phototransistor array, where each pixel uses a single programmable phototransistor, leading to a substantial reduction in footprint (900 pixels in ∼0.09 cm²) and energy consumption (100s of fJ per pixel). By exploiting gate-tunable persistent photoconductivity, we achieve a responsivity of ∼3.6 × 10⁷ A W⁻¹, specific detectivity of ∼5.6 × 10¹³ Jones, spectral uniformity, a high dynamic range of ∼80 dB and in-sensor de-noising capabilities. Further, we demonstrate near-ideal yield and uniformity in photoresponse across the 2D APS array.

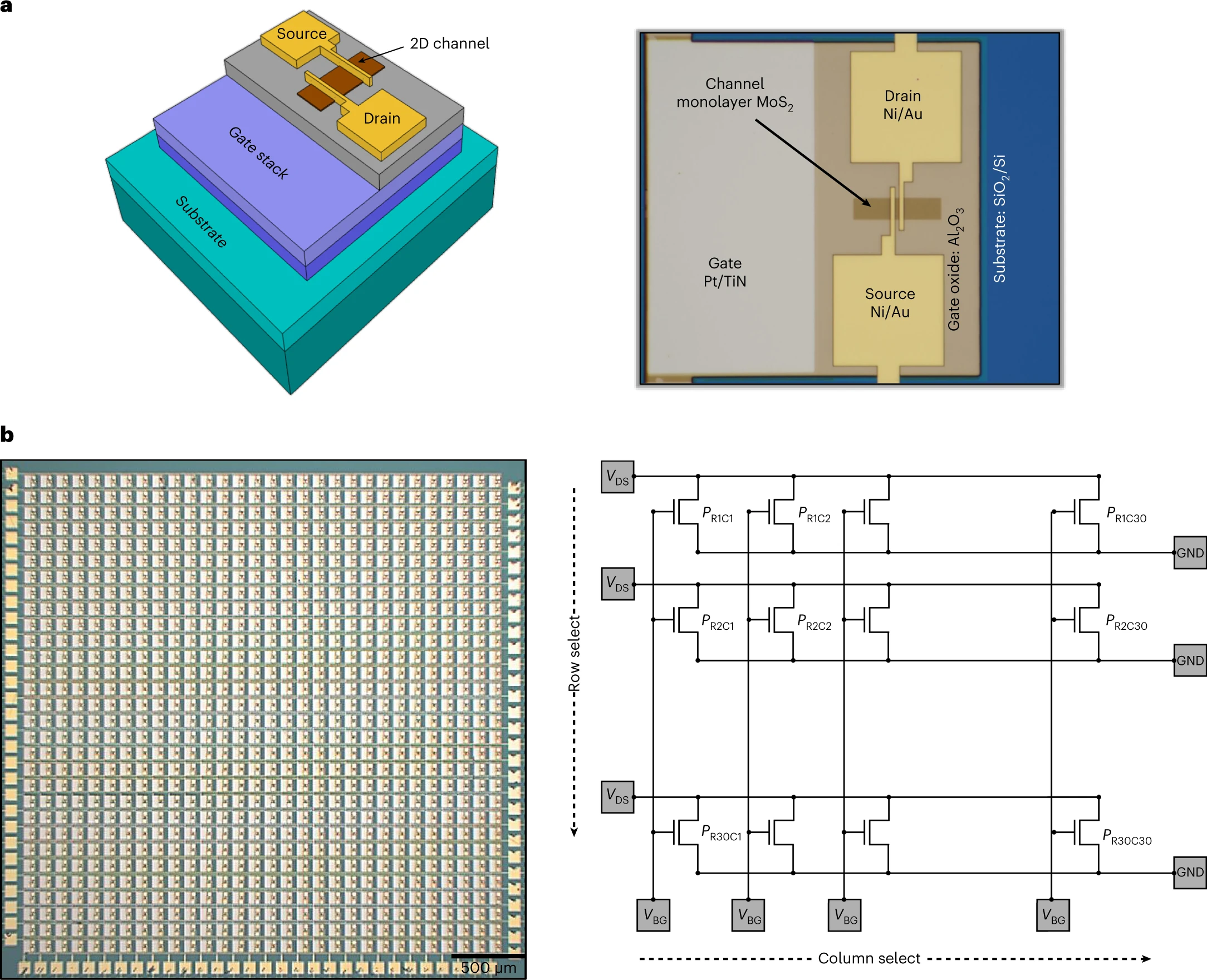

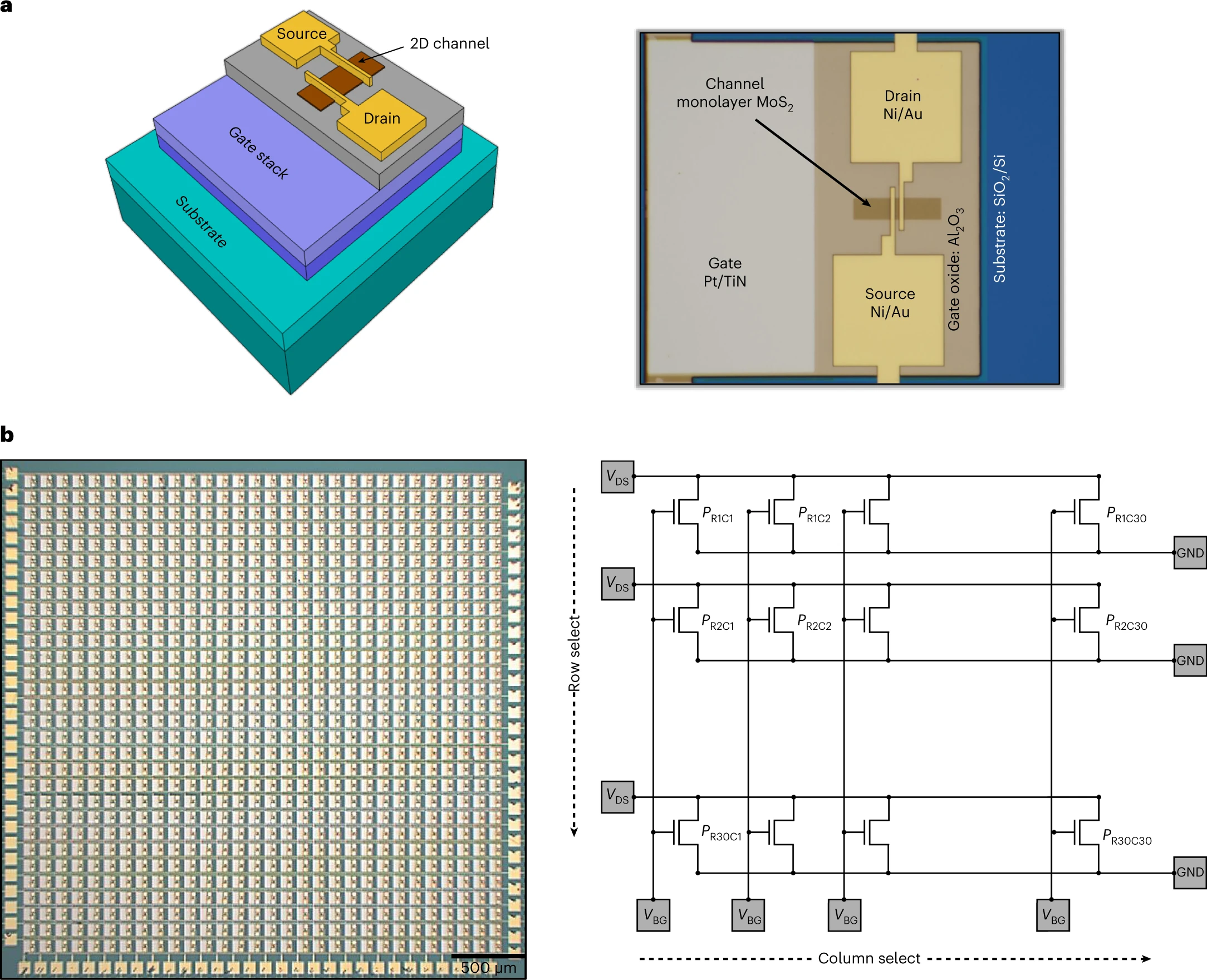

Fig. 1: 2D APS. a, 3D schematic (left) and optical image (right) of a monolayer MoS2 phototransistor integrated with a programmable gate stack. The local back-gate stacks, comprising atomic layer deposition grown 50 nm Al2O3 on sputter-deposited Pt/TiN, are patterned as islands on top of an Si/SiO2 substrate. The monolayer MoS2 used in this study was grown via an MOCVD technique using carbon-free precursors at 900 °C on an epitaxial sapphire substrate to ensure high film quality. Following the growth, the film was transferred onto the TiN/Pt/Al2O3 back-gate islands and subsequently patterned, etched and contacted to fabricate phototransistors for the multipixel APS platform. b, Optical image of a 900-pixel 2D APS sensor fabricated in a crossbar architecture (left) and the corresponding circuit diagram showing the row and column select lines (right).

Fig. 2: Characterization of monolayer MoS2. a, Structure of MoS2 viewed down its c axis with atomic-resolution HAADF-STEM imaging at an accelerating voltage of 80 kV. Inset: the atomic model of 2H-MoS2 overlaid on the STEM image. b, SAED of the monolayer MoS2, which reveals a uniform single-crystalline structure. c,d, XPS of Mo 3d (c) and S 2p (d) core levels of monolayer MoS2 film. e,f, Raman spectra (e) and corresponding spatial colourmap of peak separation between the two Raman active modes, E¹₂g and A₁g, measured over a 40 µm × 40 µm area, for as-grown MoS2 film (f). g,h, PL spectra (g) and corresponding spatial colourmap of the PL peak position (h), measured over the same area as in f. The mean peak separation was found to be ~20.2 cm⁻¹ with a standard deviation of ~0.6 cm⁻¹ and the mean PL peak position was found to be at ~1.91 eV with a standard deviation of ~0.002 eV. i, Map of the relative crystal orientation of the MoS2 film obtained by fitting the polarization-dependence of the SHG response shown in j, which is an example polarization pattern obtained from a single pixel of i by rotating the fundamental polarization and collecting the harmonic signal at a fixed polarization.

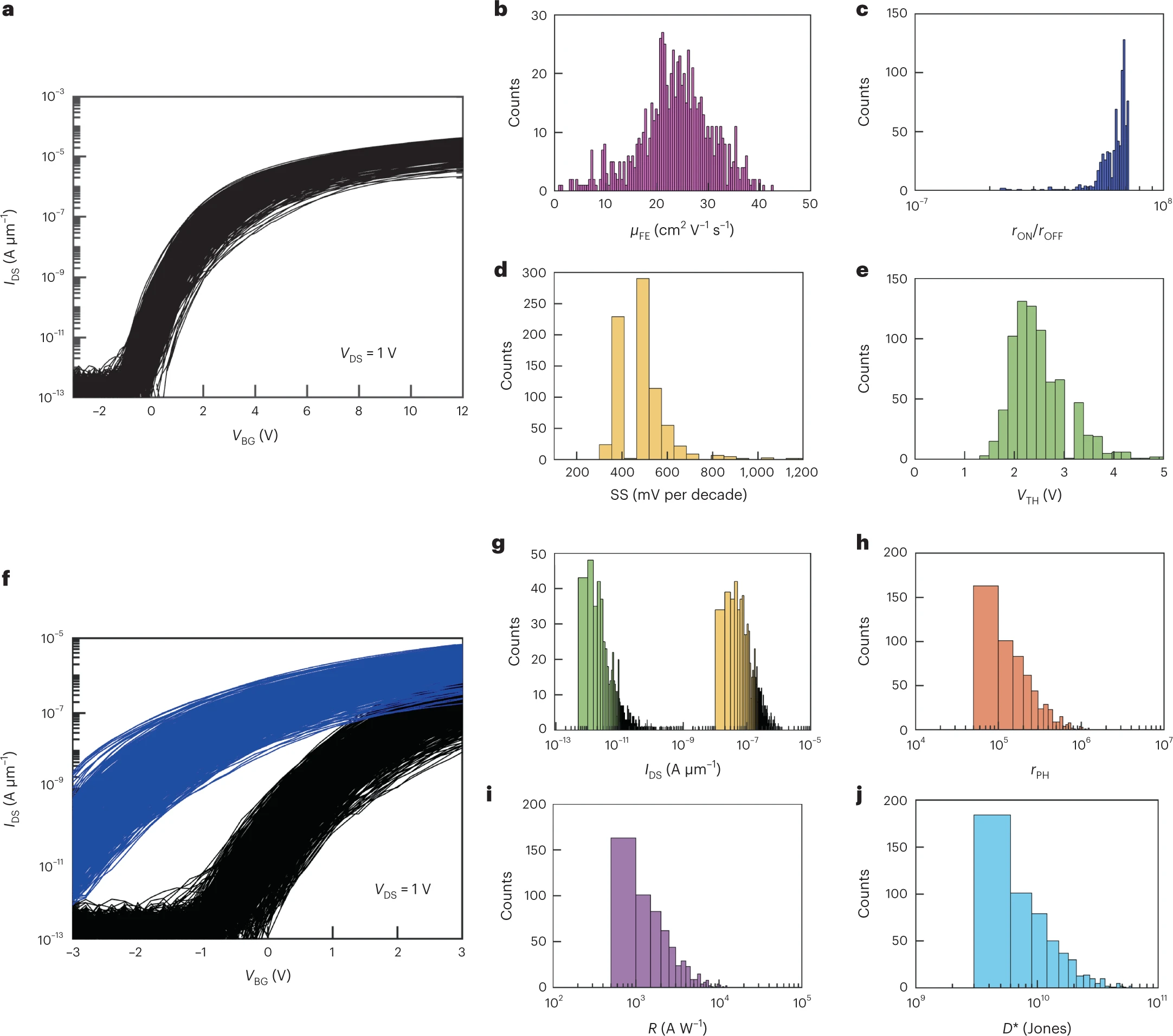

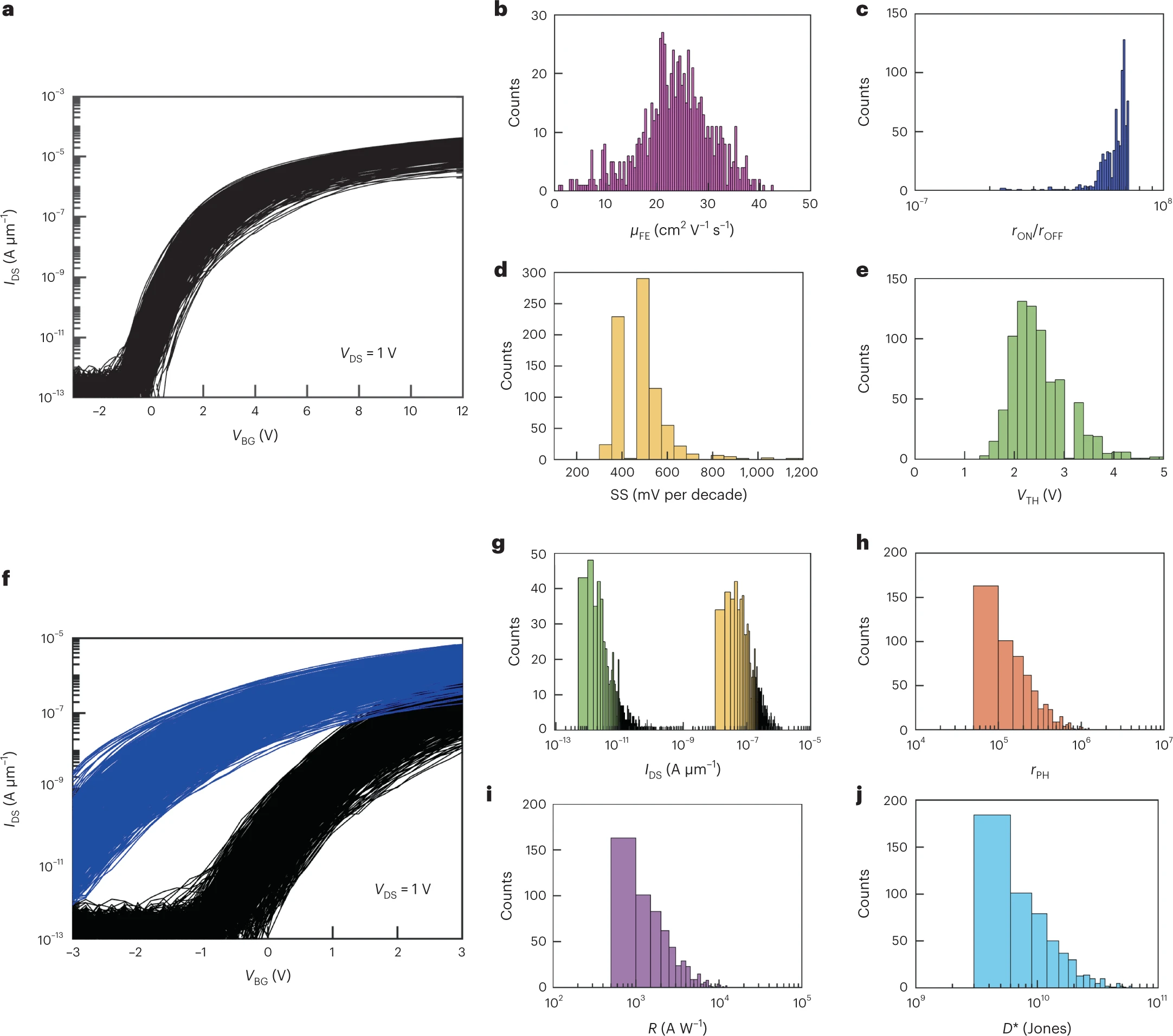

Fig. 3: Device-to-device variation in the characteristics of MoS2 phototransistors. a, Transfer characteristics, that is, source to drain current (IDS) as a function of the local back-gate voltage (VBG), at a source-to-drain voltage (VDS) of 1 V and measured in the dark for 720 monolayer MoS2 phototransistors (80% of the devices that constitute the vision array) with channel lengths (L) of 1 µm and channel widths (W) of 5 µm. b–e, Device-to-device variation is represented using histograms of electron field-effect mobility values (μFE) extracted from the peak transconductance (b), current on/off ratios (rON/OFF) (c), subthreshold slopes (SS) over three orders of magnitude change in IDS (d) and threshold voltages (VTH) extracted at an isocurrent of 500 nA µm⁻¹ for 80% of devices in the 2D APS array (e). f, Pre- and post-illumination transfer characteristics of 720 monolayer MoS2 phototransistors after exposure to white light with Pin = 20 W m⁻² at Vexp = −3 V for τexp = 1 s. g–j, Histograms of dark current (IDARK) (green) and photocurrent (IPH) (yellow) (g), the ratio of post-illumination photocurrent to dark current (rPH) (h), responsivity (R) (i) and detectivity (D*) (j), all measured at VBG = −1 V.
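To make the isocurrent threshold extraction mentioned in the Fig. 3 caption concrete, here is a minimal sketch. It assumes the threshold voltage is read off as the gate voltage where the transfer curve first crosses the target current, with log-linear interpolation between measured points; the paper does not spell out its procedure, so the function name `threshold_at_isocurrent` and the synthetic transfer curve are purely illustrative. Only the 500 nA µm⁻¹ isocurrent and the 5 µm channel width come from the caption.

```python
import math


def threshold_at_isocurrent(vbg, ids, i_target):
    """Return the gate voltage at which ids first rises through i_target.

    Interpolates linearly in log10(ids) between the two bracketing points.
    vbg must be sorted in increasing order and ids must be positive.
    (Hypothetical helper, not the authors' extraction code.)
    """
    for k in range(1, len(vbg)):
        lo, hi = ids[k - 1], ids[k]
        if lo < i_target <= hi:
            frac = (math.log10(i_target) - math.log10(lo)) / (
                math.log10(hi) - math.log10(lo)
            )
            return vbg[k - 1] + frac * (vbg[k] - vbg[k - 1])
    raise ValueError("transfer curve never crosses the target current")


# Isocurrent from the caption: 500 nA per um of width, times W = 5 um.
W_UM = 5.0
I_TARGET = 500e-9 * W_UM  # 2.5 uA total drain current

# Synthetic transfer curve (assumption): one decade of current per volt.
vbg = [float(v) for v in range(0, 7)]
ids = [1e-9 * 10 ** v for v in vbg]

vth = threshold_at_isocurrent(vbg, ids, I_TARGET)
print(f"V_TH at isocurrent: {vth:.3f} V")
```

Interpolating in log space rather than linear space is a design choice: near threshold the current changes exponentially with gate voltage, so a straight line in log10(IDS) tracks the curve more closely between sparse sweep points.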

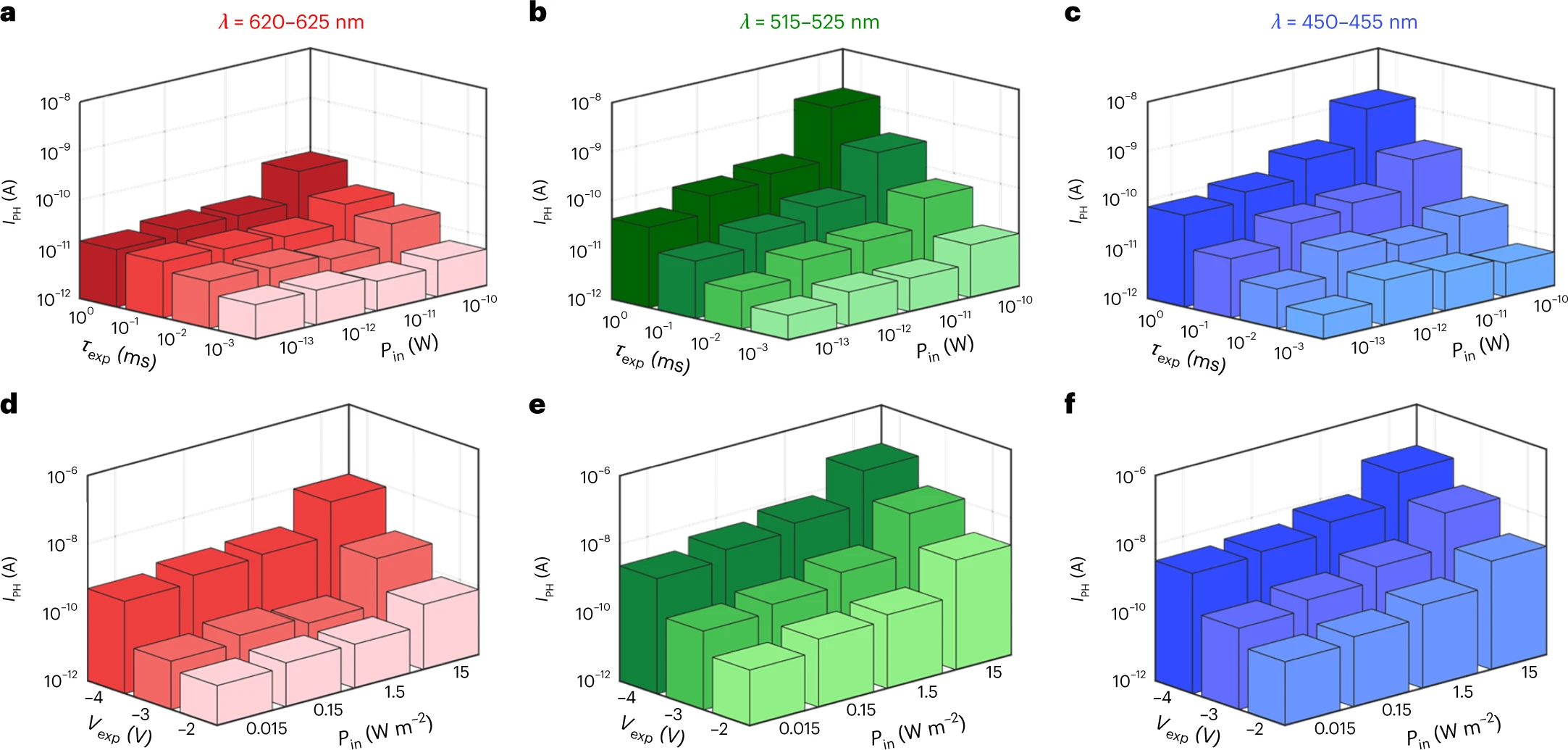

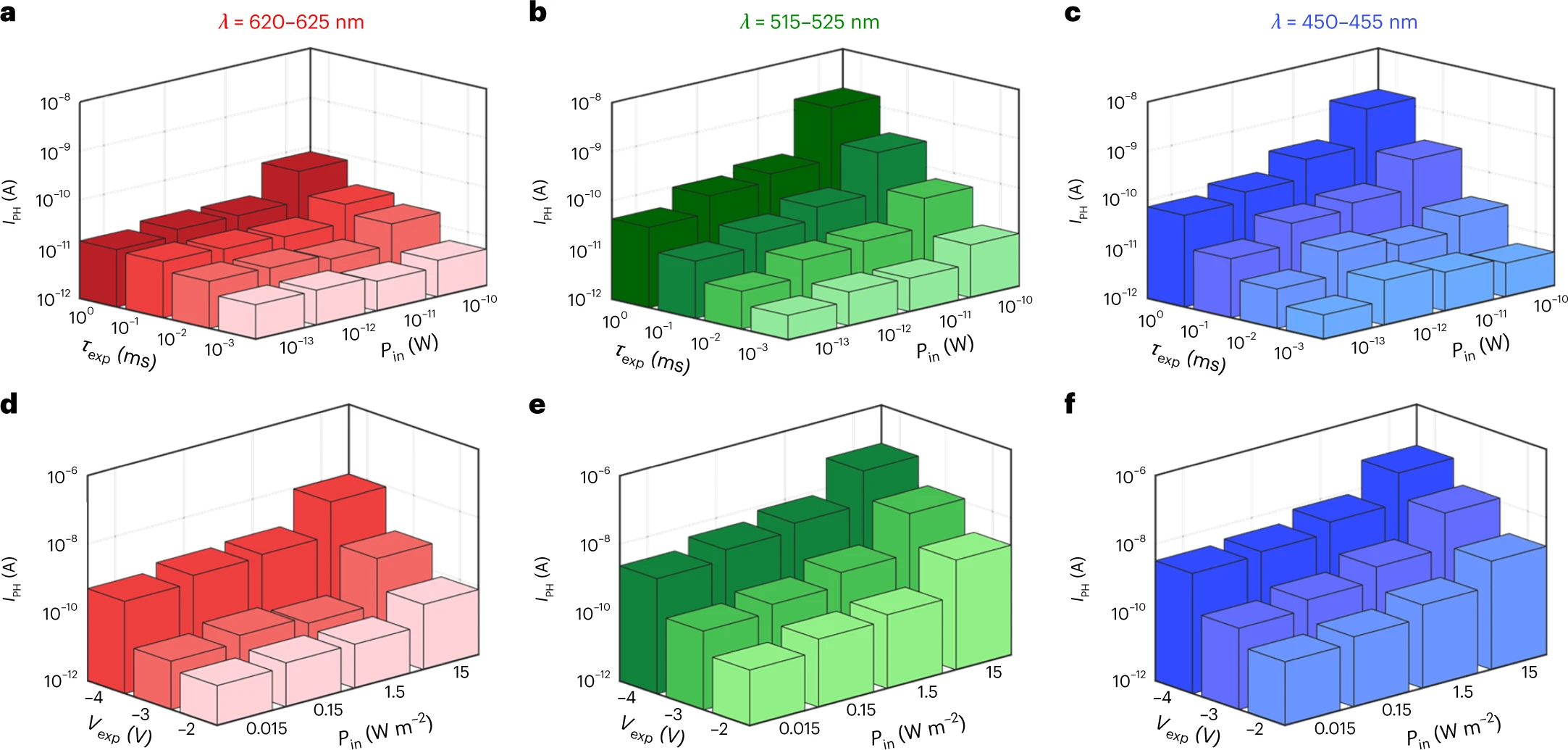

Fig. 4: HDR and spectral uniformity. a–c, The post-illumination persistent photocurrent (IPH) read out using VBG = 0 V and VDS = 1 V under different exposure times (τexp) is plotted against Pin for Vexp = −2 V at red (a), green (b) and blue (c) wavelengths. Clearly, the 2D APS demonstrates HDR for all wavelengths investigated. d–f, However, the 2D APS displays spectral non-uniformity in the photoresponse, which can be adjusted by exploiting gate-tunable persistent photoconductivity, that is, by varying Vexp. This is shown by plotting IPH against Pin for different Vexp at red (d), green (e) and blue (f) wavelengths.
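For a sense of scale, the ~80 dB dynamic range quoted in the abstract can be converted into a ratio between the brightest and dimmest usable light levels. The sketch below assumes the common image-sensor convention of 20·log10(max/min); the paper may define dynamic range differently, so treat the conversion as illustrative. The function names are hypothetical.

```python
import math


def dynamic_range_db(level_max, level_min):
    """Dynamic range in dB, assuming the 20*log10(max/min) convention."""
    if level_max <= 0 or level_min <= 0:
        raise ValueError("levels must be positive")
    return 20.0 * math.log10(level_max / level_min)


def ratio_from_db(dr_db):
    """Inverse of dynamic_range_db: max/min ratio for a given dB value."""
    return 10.0 ** (dr_db / 20.0)


ratio = ratio_from_db(80.0)
print(f"80 dB corresponds to a max/min ratio of about {ratio:.0e}")
```

Under this convention, 80 dB spans four orders of magnitude between the smallest and largest detectable signal.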

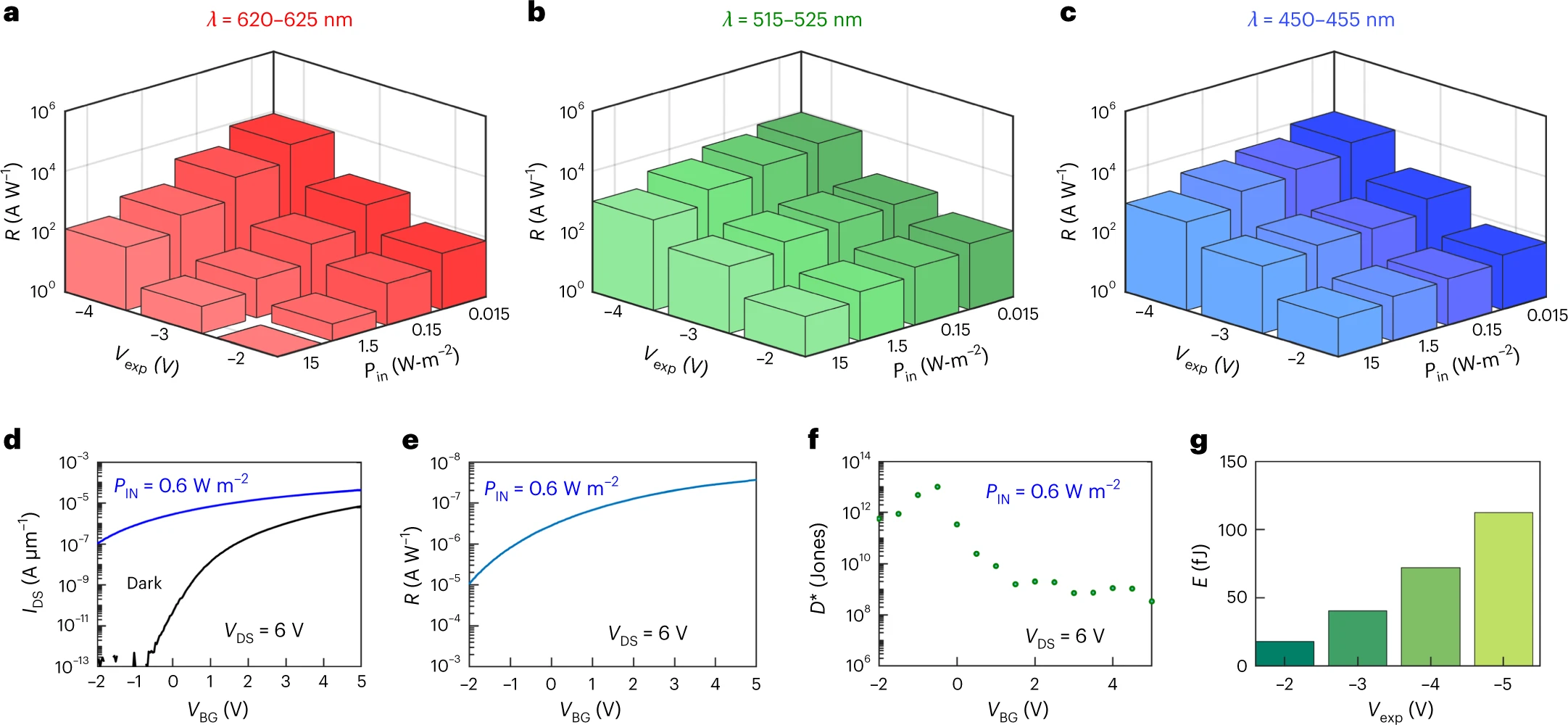

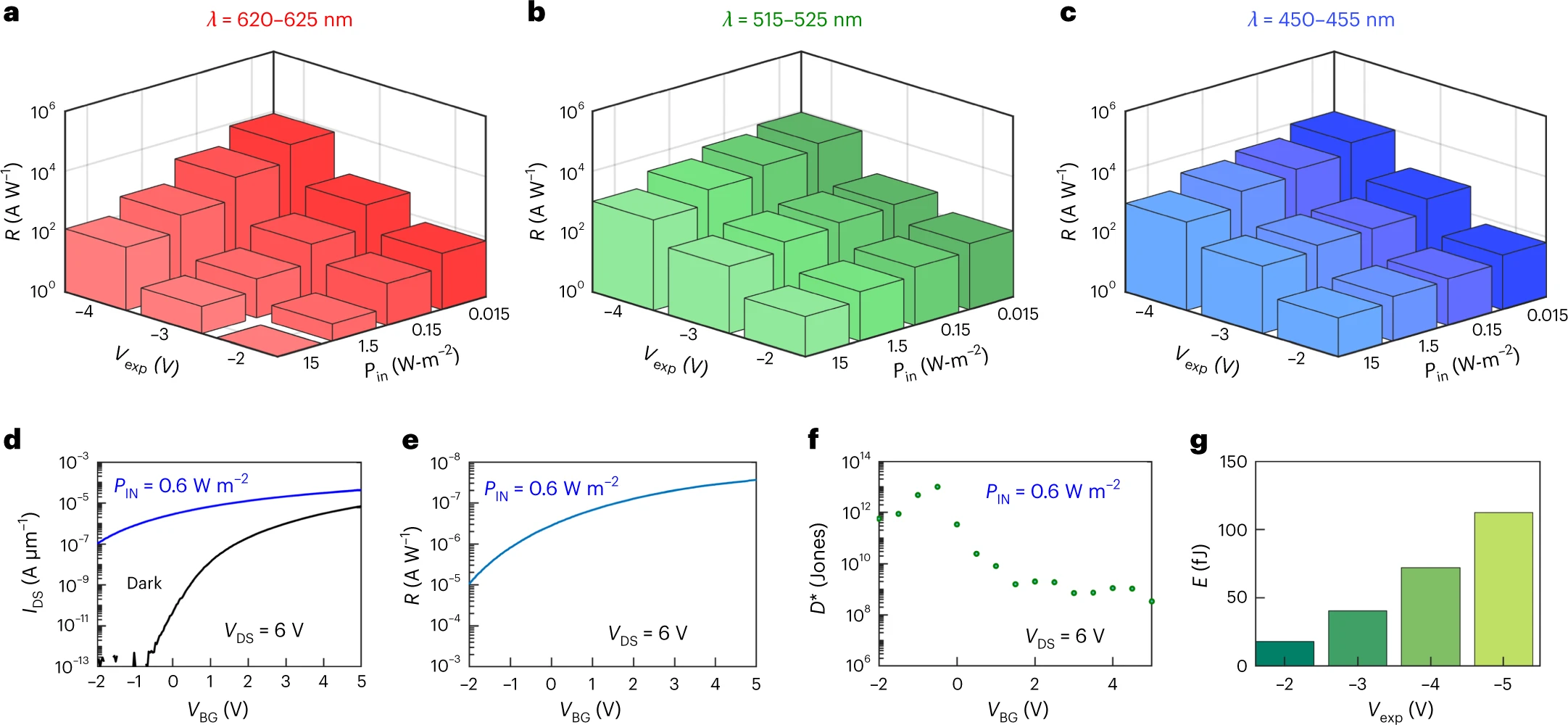

Fig. 5: Photodetection metrics. a–c, Responsivity (R) as a function of Vexp and Pin for τexp = 100 ms for red (a), green (b) and blue (c) wavelengths. R increases monotonically with the magnitude of Vexp. d, Transfer characteristics of a representative 2D APS in the dark and post-illumination at Vexp = −6 V with Pin = 0.6 W m⁻² for τexp = 200 s and VDS = 6 V. e, R as a function of VBG. For VDS = 6 V and VBG = 5 V we extract an R value of ~3.6 × 10⁷ A W⁻¹. f, Specific detectivity (D*) as a function of VBG at different VDS. At lower VBG, both R and Inoise, that is, the dark current obtained from d, are low, leading to lower D*, whereas at higher VBG both R and Inoise are high, also leading to lower D*. Peak D* can reach as high as ~5.6 × 10¹³ Jones. g, Energy consumption per pixel (E) as a function of Vexp.
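The trade-off described in the Fig. 5 caption (D* drops when the noise current grows faster than R) can be illustrated with the textbook definitions of responsivity and specific detectivity. This is a sketch under stated assumptions, not the paper's calculation: it takes the active area to be the channel area L × W (1 µm × 5 µm, from the Fig. 3 caption), models the noise as shot noise of the dark current, and uses made-up photocurrent and dark-current values. Only the 0.6 W m⁻² illumination comes from the caption.

```python
import math

Q_E = 1.602176634e-19  # elementary charge in coulombs


def responsivity(i_ph, p_in_w_m2, area_m2):
    """R = I_ph / (P_in * A), in A/W."""
    return i_ph / (p_in_w_m2 * area_m2)


def specific_detectivity(r_a_w, area_cm2, i_dark, bandwidth_hz=1.0):
    """D* = R * sqrt(A * df) / I_noise, in Jones (cm Hz^0.5 / W).

    Assumption: I_noise is the shot noise of the dark current,
    sqrt(2 * q * I_dark * df). The paper may use a different noise model.
    """
    i_noise = math.sqrt(2.0 * Q_E * i_dark * bandwidth_hz)
    return r_a_w * math.sqrt(area_cm2 * bandwidth_hz) / i_noise


# Channel area from L = 1 um, W = 5 um (assumed to be the active area).
AREA_M2 = 1e-6 * 5e-6   # 5e-12 m^2
AREA_CM2 = AREA_M2 * 1e4  # 5e-8 cm^2

# Hypothetical currents chosen for illustration only.
I_PH = 1e-6    # photocurrent, A
I_DARK = 1e-9  # dark current, A

r = responsivity(I_PH, 0.6, AREA_M2)
d_star = specific_detectivity(r, AREA_CM2, I_DARK)
print(f"R = {r:.2e} A/W, D* = {d_star:.2e} Jones")
```

With this noise model, quadrupling the dark current at fixed R halves D*, which is one way to see why D* peaks at an intermediate gate voltage rather than where R is largest.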

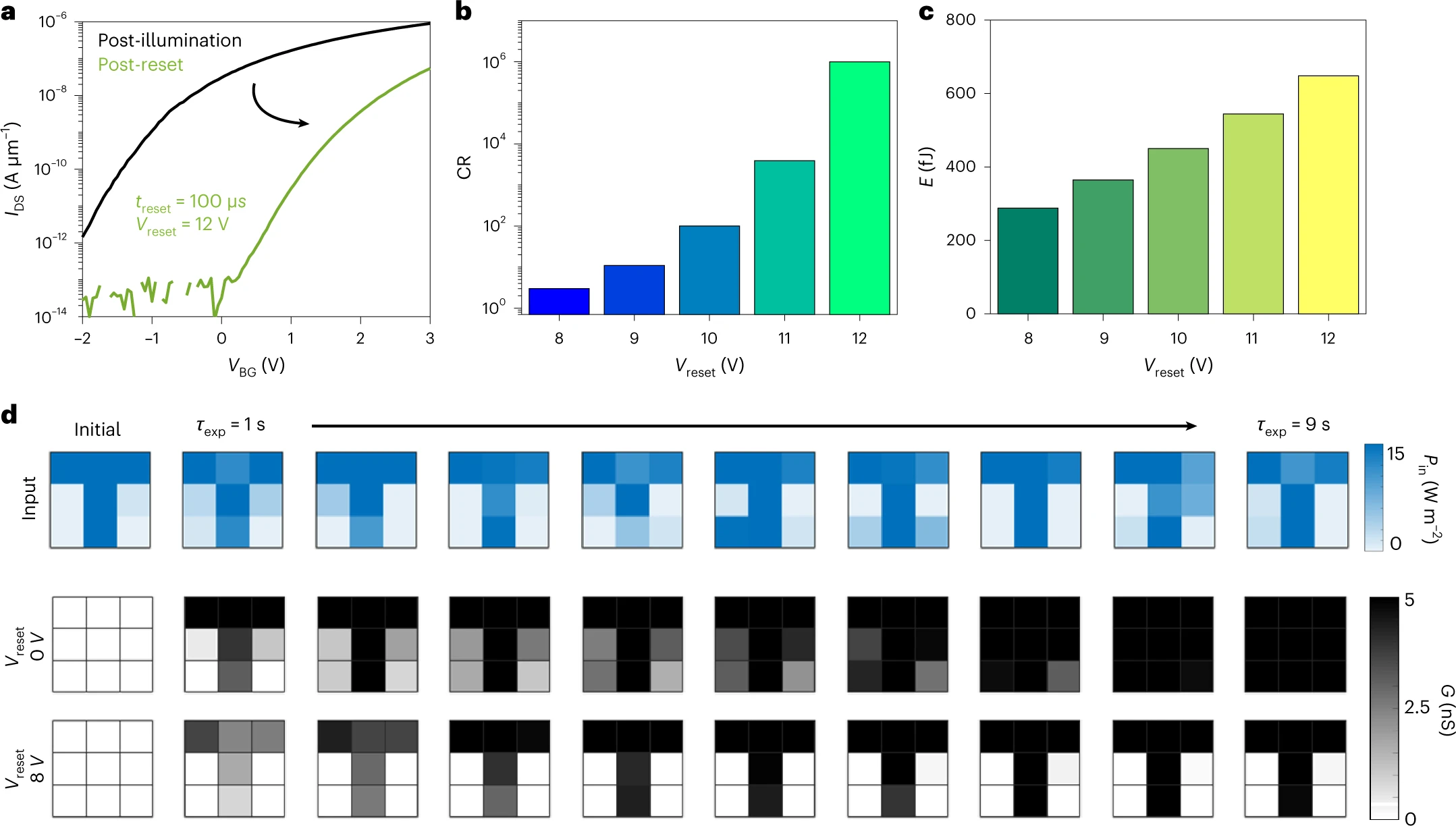

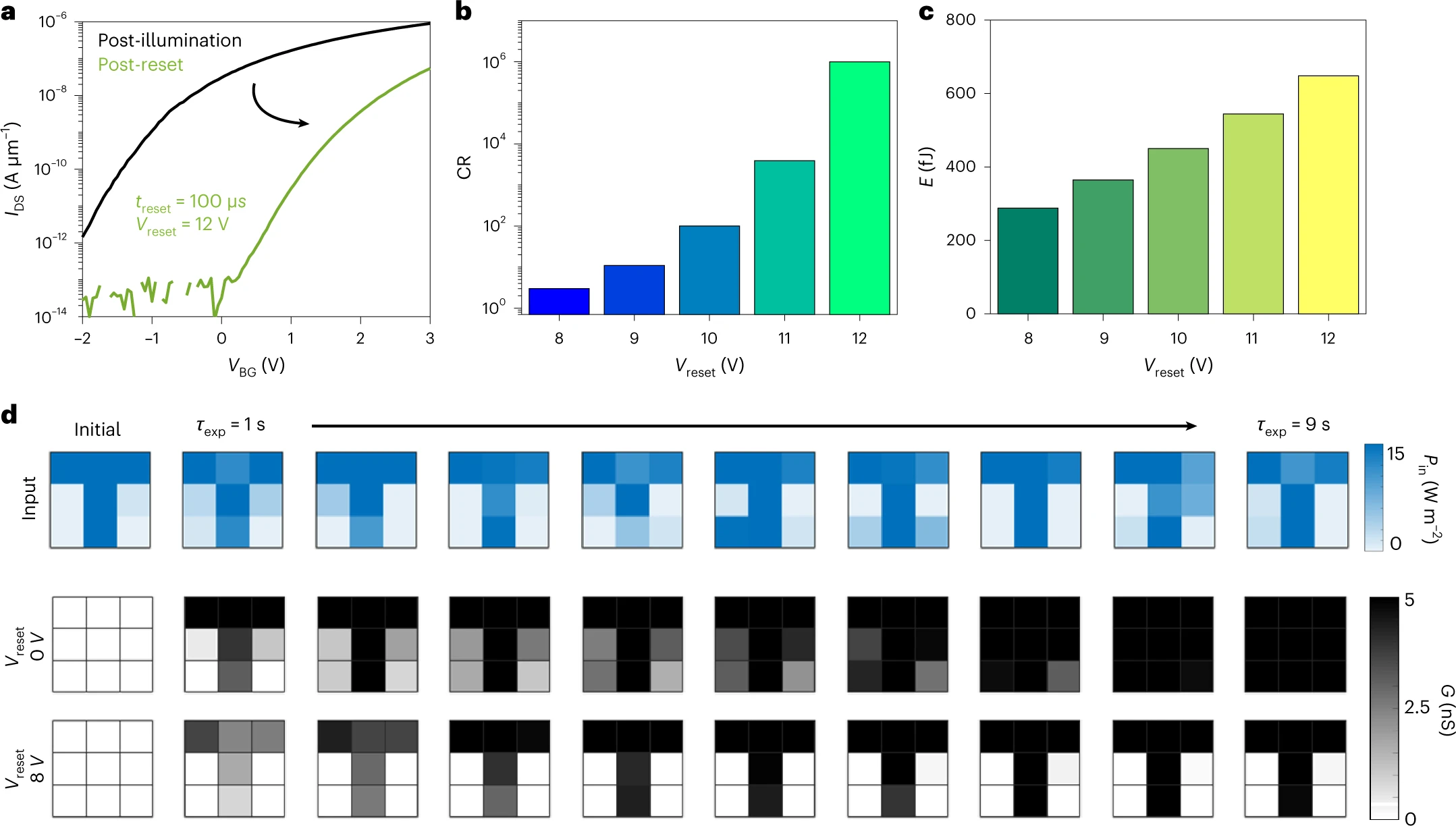

Fig. 6: Fast reset and de-noising. a, After the read out, each pixel can be reset by applying a reset voltage (Vreset) for time periods as low as treset = 100 µs. b, The conductance ratio (CR), defined as the ratio between the conductance values before and after the application of a reset voltage, is plotted against different Vreset. c, Energy expenditure for reset operations under different Vreset. d, Heatmaps of conductance (G) measured at VBG = 0 V from the image sensor with and without Vreset when exposed to images under noisy conditions. Clearly, application of Vreset helps in de-noising image acquisition.

This work was covered by IEEE Spectrum in an article titled "New Pixel Sensors Bring Their Own Compute: Atomically thin devices that combine sensing and computation also save power".

Link: https://spectrum.ieee.org/active-pixel-sensor

In the new study, the researchers sought to add in-sensor processing to active pixel sensors to reduce their energy and size. They experimented with the 2D material molybdenum disulfide, which is made of a sheet of molybdenum atoms sandwiched between two layers of sulfur atoms. Using this light-sensitive semiconducting material, they aimed to combine image-capturing sensors and image-processing components in a single device.

The scientists developed a 2D active pixel sensor array in which each pixel possessed a single programmable phototransistor. These light sensors can each perform their own charge-to-voltage conversion without needing any extra transistors.

The prototype array contained 900 pixels in 9 square millimeters, with each pixel about 100 micrometers large. In comparison, state-of-the-art CMOS sensors from Omnivision and Samsung have reached about 0.56 µm in size. However, commercial CMOS sensors also require additional circuitry to detect low light levels, increasing their overall area, which the new array does not...
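The footprint numbers above are easy to cross-check. The sketch below assumes square pixels tiled on a square grid with no dead area between them, which is a simplification; the function name `pixel_pitch_um` is hypothetical.

```python
import math


def pixel_pitch_um(array_area_mm2, n_pixels):
    """Side length of one pixel in micrometers, assuming square pixels
    that tile the array area with no gaps."""
    area_per_pixel_mm2 = array_area_mm2 / n_pixels
    return math.sqrt(area_per_pixel_mm2) * 1000.0  # mm -> um


pitch = pixel_pitch_um(9.0, 900)  # 900 pixels in 9 mm^2 (0.09 cm^2)
pixels_per_side = math.isqrt(900)  # pixels along one edge of a square array
cmos_ratio = pitch / 0.56  # relative to the 0.56 um CMOS pixels cited above

print(f"pitch ~ {pitch:.0f} um, {pixels_per_side} x {pixels_per_side} grid, "
      f"~{cmos_ratio:.0f}x the CMOS pixel size")
```

The result agrees with the roughly 100 µm pixel size quoted in the article and shows how far the prototype is from commercial CMOS pixel dimensions.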