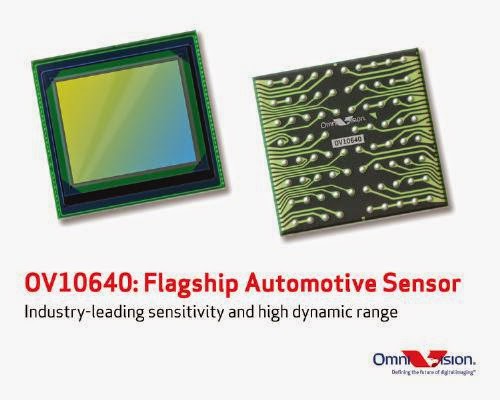

PR Newswire: OmniVision announces the automotive industry's first BSI image sensor and a companion processing chip. The 1.3MP/60fps OV10640 delivers high sensitivity and HDR of up to 120 dB in highly compact automotive-grade packages, while the OV490 enables image and video processing for next-generation ADAS systems.

"With the consumer predilection for advanced automotive features such as 360-degree surround view, lane departure warning and pedestrian detection, there is a tremendous demand among automobile manufacturers for imaging and processing solutions that deliver exceptionally clear, low-noise images and video," said Inayat Khajasha, senior product marketing manager for automotive products at OmniVision. "The announcement of the OV10640 and OV490 underscores OmniVision's position within the automotive industry as a trendsetter in developing sensors for next-generation ADAS applications."

The OV10640 is the first automotive OmniBSI image sensor, said to have the industry's highest sensitivity. The OV490 is a companion processor that enables simultaneous output of fully processed YUV or RGB data for display-based applications and RAW data for machine-vision downstream processing. The OV10640 and OV490 are now available for sampling, and are expected to complete AEC-Q100 Grade-2 qualification and enter volume production in Q4 2014.

Hi,

How do they get 120dB of dynamic range? If I'm right, this is 46.5 f-stops! To my knowledge, the best cameras achieve 14-16 f-stops. What exactly does 120 dB mean, and how on earth is it possible to get there? Does anyone have information?

120dB of dynamic range is roughly equal to 20 stops. They most likely reach that number with exposure bracketing (using at least 3 exposures).
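The conversion behind the "roughly 20 stops" figure can be sketched as follows. This assumes the usual image-sensor convention of 20·log10 of the signal ratio; the function name `db_to_stops` and its `divisor` parameter are illustrative only. For comparison, 140 dB under a 10·log10 convention gives about 46.5 stops.

```python
import math

def db_to_stops(db, divisor=20.0):
    """Convert a dynamic range in dB to stops (factors of two).

    divisor=20 is the amplitude-style convention normally used for
    image sensor DR; divisor=10 is the power-style convention.
    """
    decades = db / divisor            # number of factors of ten
    return decades * math.log2(10.0)  # one decade is about 3.32 stops

print(round(db_to_stops(120), 2))              # 20*log10 convention
print(round(db_to_stops(140, divisor=10), 2))  # 10*log10 convention
```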

OK, I made a double mistake: I took 140dB instead of 120 dB and, moreover, assumed amplitude instead of power formula for the conversion from dB to f-stops. Thanks for the reply. If the 20 stops come from multiple exposures, then it is of no use; they had better state the native dynamic range of the imager.

"no use". Uh oh, stop the fab.

Of course it is useful, and if you are thinking stops you are thinking photography and not thinking video and associated HDR tricks.

HDR is not limited to video, you can do it for stills. I've not said the device is of no use but that this spec is of no use. I'd prefer to know the native basic dynamic range; it is kind of obvious that you can extend it by combining multiple exposures, all the more so if the process is automated by the companion ISP. But if it is not useful to me, it is good that it is to you. Thank God for the variety of interests out there....

Check the official product description: http://www.ovt.com/products/sensor.php?id=151

There are more details there.

Some of the dynamic range comes from split-pixel diode. Strictly speaking it is two exposures, but they are simultaneous. Does it then really make sense to complain that the DR comes from two exposures?

No, it's ok, thank you

How is it possible to reach 120dB with only a few steps in the response? This should violate the dynamic range gaps (SNR holes) formula... This seems to be yet another marketing number that will in practice never exceed 90dB.

Details and maths here: http://spie.org/Publications/Book/903927 or in the e-book here: http://www.aphesa.com/documents.php

Guys, note that multiple exposures is not possible in automotive because of the relative motion. The processing required to make multiple exposures with good results is too costly for the automotive market.

Depends on inherent DR of the sensor. Ideally, you get 120dB DR with 2 exposures and with inherent DR of 60dB. Of course the SNR plot would look like a sawtooth and the SNR at the middle would reach 0 dB, hardly acceptable by automotive industry.

If you start with ~75dB inherent DR, which seems possible given the large pixel size, the SNR dip shouldn't be a big concern.
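A rough sketch of the SNR at the hand-over point between two exposures, under a deliberately simple model that is an assumption and not OmniVision's method: both exposures share the same full well and read noise, and the exposure ratio is chosen so that the combined DR hits the target. In the read-noise-only case the SNR at the switch is simply 2 × inherent DR minus total DR (in dB). The `full_well_e` value is a hypothetical parameter used only to include shot noise.

```python
import math

def stitch_snr_db(inherent_dr_db, total_dr_db, full_well_e=None):
    """SNR (dB) of the short exposure at the point where the long one saturates.

    full_well_e=None ignores shot noise (idealized, read-noise-only model).
    """
    inherent_ratio = 10 ** (inherent_dr_db / 20.0)                   # FWC / read noise
    exposure_ratio = 10 ** ((total_dr_db - inherent_dr_db) / 20.0)  # long / short
    if full_well_e is None:
        snr = inherent_ratio / exposure_ratio
    else:
        read_noise_e = full_well_e / inherent_ratio
        signal_e = full_well_e / exposure_ratio   # short-exposure signal at hand-over
        snr = signal_e / math.sqrt(signal_e + read_noise_e ** 2)
    return 20.0 * math.log10(snr)

for dr in (60.0, 75.0):
    ideal = stitch_snr_db(dr, 120.0)
    with_shot = stitch_snr_db(dr, 120.0, full_well_e=10_000.0)  # assumed full well
    print(f"inherent {dr:.0f} dB: ideal {ideal:.1f} dB, with shot noise {with_shot:.1f} dB")
```

With 60 dB inherent DR the idealized dip sits at 0 dB, and with 75 dB it sits at 30 dB; adding shot noise lowers both figures.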

I do agree that multiple exposures introduce motion artefacts but here the effect is reduced since both long and short exposures start integrating at the same time through alternate rows.

This is exactly what I mean. The advertised DR should be the largest one with no SNR dip.

To be correct, the SNR dip is not just reaching 0dB. The SNR hole is defined as SNR below 1, i.e. below 0dB.

The DR is simply the ratio of the largest signal that can be accommodated to the noise floor. There is nothing in this definition that says the SNR has to increase monotonically. Maybe you want a new metric, like dipless DR (like linear DR). That is ok. But the traditional DR is what is meant here and most people understand the meaning and limitations.

Eric, I agree with you, but I would like to add to this: DR is the ratio of the largest signal that can be accommodated to the noise floor, WITHIN ONE IMAGE.

I agree with Albert: I'd like to see people stop calculating DR as the ratio of max signal in low-gain to noise floor in high-gain. It should be DR in one image, using the max signal and noise floor of that one image and its settings (i.e. gain). DR should then be spec'd out per gain setting.

-DP

Albert, yes of course, somehow thought that it was understood that it was always intrascene, not interscene DR. Personally I prefer to use light level so it is the ratio of maximum light level accommodated to light-equivalent noise floor but that really does not make a difference.

The EMVA1288 definition of DR (intrascene DR) is the ratio of the light level that corresponds to the maximum of the photon transfer curve to the light level that gives a signal equal to the noise floor.

The relation with PTC makes sure that the "saturation" is somewhat limited to useful imaging close to saturation and not when the very last pixel saturates.

"Maximum of PTC" is a fuzzy thing and also not always useful. In my QIS analysis paper I set it to 99% of the full value due to the saturation characteristics of the QIS. This is also pretty arbitrary.

But, a way to be less arbitrary is to set it to where the SNR=1 during saturation since SNR always plummets as the sensor saturates. Thus DR spans the range from SNR=1 to SNR=1...which also helps with your concerns about SNR dips. I wonder how this would change the reported DR values compared to this EMVA1288 machine vision spec?

Can you explain SNR=1 at saturation? Because I'm used to SNR going to infinity as saturation cancels the variance.

Sorry about that, first take a look at sections IV, V, and VI of the QIS modeling paper:

http://ericfossum.com/Publications/Papers/2013%20Modeling%20Single%20Bit%20and%20Multi%20Bit%20QIS.pdf

Second, for SNR I am referring to light-referred SNR. So the signal part is normal, but the light-referred noise has to do with how much delta light is equal to the noise level. Since it takes a lot of light to raise the voltage signal level, this differential definition causes the light equivalent noise to grow to a large value. Thus SNR plummets at saturation. You are correct, just taking the straight voltage signal to voltage noise as a ratio does go to infinity.

My definition is consistent with using light as the reference for DR and I think it is more appropriate for non-linear regimes since SNR getting huge at saturation really makes no physical sense from an information theory point of view. The light-referred SNR plummets which does make more sense, at least to me.

Coming back to SNR holes, it is not true that the dynamic range is just the ratio of max over min. This is only valid if the SNR is always above 0dB. I know that this is the DR definition but it has to be extended for HDR sensors with piecewise linear response.

If the SNR dips below 0dB then the range where SNR>1 and the sensor is not saturated is split over several segments. You could have for example a useful range between 10 photons and 1000 photons and another useful range between 5000 photons and 100000 photons. The DR is not 100000/10 because a range of 4000 photons is wasted in the middle of the response.

I had published a proposal to always publish dynamic range values together with the SNR plot and a "dynamic range gap presence function" that is 0 when SNR<0dB and 1 otherwise.
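A minimal sketch of such a gap presence function, using the 10 to 1000 and 5000 to 100000 photon example. The sample levels and SNR values below are made up for illustration, and the helper names are hypothetical; this is not the published proposal's exact formulation.

```python
import math

def gap_presence(snr_db):
    """Return 0 where SNR < 0 dB (inside an SNR hole) and 1 otherwise."""
    return [0 if s < 0.0 else 1 for s in snr_db]

def usable_segments(levels, snr_db):
    """Group consecutive light levels with SNR >= 0 dB into (low, high) ranges."""
    segments, start, prev = [], None, None
    for level, ok in zip(levels, gap_presence(snr_db)):
        if ok and start is None:
            start = level                      # a usable segment begins
        elif not ok and start is not None:
            segments.append((start, prev))     # the segment ended at the previous level
            start = None
        prev = level
    if start is not None:
        segments.append((start, prev))
    return segments

def to_db(ratio):
    return 20.0 * math.log10(ratio)

# Hypothetical measurements: light level in photons, SNR in dB.
levels = [10, 100, 1000, 2000, 4000, 5000, 20000, 100000]
snr_db = [0.0, 10.0, 15.0, -2.0, -1.0, 1.0, 20.0, 30.0]

print("nominal DR (dB):", to_db(levels[-1] / levels[0]))
for low, high in usable_segments(levels, snr_db):
    print(f"usable {low}..{high} photons: {to_db(high / low):.1f} dB")
```

Reporting the per-segment ranges next to the nominal max/min figure makes the hole visible instead of hiding it inside a single number.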

Having SNR holes (DR gaps) can make an image look really bad, even if the dynamic range itself looks great. This was very common in the first HDR piecewise linear image sensors that were pushing the DR value to a maximum but could never give decent images at high DR settings because of the too strong compression and abrupt changes in response's slope.

Indeed, it would be useful to have the number of SNR holes (can be 2 if 3 exposures are used) and their SNR value for multi-exposure and other piecewise HDR sensors. I guess they provide this info in their detailed datasheet.

I have never seen any manufacturer providing this info. The best you can do is to guess the pixel design architecture and to calculate it using Yang&Gamal formulas based on some realistic noise measurements that you will make yourself.

The EMVA1288 working group is currently working on a procedure for this and most of the noise measurements already used in EMVA1288 can be used to get an approximated SNR curve.

You can of course also measure your SNR curve but it is difficult, especially at low SNR or for very low and very high illumination levels.

Hi Arnaud,

You can find at http://image-sensors-world.blogspot.fi/2014/03/pixpolar-patent-case-study.html#comment-form a question by an anonymous blogger on April 9, 2014 at 3:35 PM about the FWC of MIG technology. In my (Artto Aurola) answers on April 15, 2014 at 10:47 AM, on April 15, 2014 at 10:50 AM, on April 15, 2014 at 10:52 AM, and on April 15, 2014 at 10:54 AM I have explained how it is possible to have simultaneous multiple exposure times even with small FWC without either sacrificing low light image quality (accumulation of read noise is avoided) or having any dips or saw-tooth like features in the SNR curve. The SNR curve will actually be a totally natural, monotonically increasing function whose maximum value is not in any way limited by the FWC.

These benefits can be obtained with a 3D integrated MIG sensor featuring Non-Destructive CDS (NDCDS) readout. My two other subsequent posts on April 20, 2014 at 10:59 AM and on April 22, 2014 at 9:47 AM are also related to the matter. All aforesaid 7 posts are at the moment the last of the present 55 posts.

Actually it is not anymore about multiple distinctive integration times but about adding signals together - read the posts and you'll get the point.

This is then similar to multiple sampling, this is another branch of technology.

In present CCDs and CMOS image sensors you can obtain a natural SNR vs. intensity curve by performing multi-sampling and by adding the signals together. The problem is, however, that the more often you read out the sensor, the higher the overall read noise is, because read noise accumulates with destructive CDS readout. This means that you can clearly discern between a single exposure time image and a multi-sampled image having similar overall exposure time even if there is no over-exposure in the single exposure time image (the single exposure time image would be better due to lower read noise). This means that one cannot compensate lower FWC with higher readout rate, i.e., the Full Well Capacity (FWC) in present CCDs and CMOS image sensors is a fundamentally important parameter since it unavoidably affects the image quality.

In 3D integrated image sensors (face to face bonded separate image sensor and readout chips) provided either with

1) ability to perform instantaneous Non-Destructive CDS (NDCDS) readout as well as pixel level selective reset; or with

2) ability to perform non-destructive non-CDS readout as well as pixel level selective instantaneous Destructive CDS (DCDS) readout;

the readout rate does not increase the overall noise even at substantially small FWC levels. The reason is that one can perform instantaneous CDS readout and reset whenever the pixel approaches saturation (e.g. at FWC/2 level), which means that the overall accumulated read noise will be always considerably smaller than accumulated photon shot noise (this applies when read noise R << sqrt(FWC/2) ). Another point is that by adding together all the CDS readout results obtained when reset is performed one would obtain a perfectly natural SNR vs. intensity curve. Consequently, in image sensors according to either case 1) or 2) one can compensate lower FWC against higher readout rate in order to enable similar image quality and Dynamic Range (DR).
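The noise argument can be illustrated with a small sketch. It assumes shot noise plus uncorrelated read noise that adds in quadrature once per CDS read, and all numeric values (full well, read noise, number of reads) are hypothetical. It compares a single exposure in a hypothetical large-well pixel, fixed-rate multi-sampling where every pixel is read every time, and reading and resetting a pixel only when it reaches FWC/2.

```python
import math

def snr(signal_e, n_reads, read_noise_e):
    """SNR with shot noise plus n_reads uncorrelated CDS reads added in quadrature."""
    return signal_e / math.sqrt(signal_e + n_reads * read_noise_e ** 2)

def reads_with_conditional_reset(signal_e, fwc_e):
    """Reads needed if a pixel is read and reset only when it reaches FWC/2."""
    return max(1, math.ceil(signal_e / (fwc_e / 2.0)))

FWC_E = 1000.0       # assumed small full well, electrons
READ_NOISE_E = 2.0   # assumed read noise per CDS read, electrons rms
FIXED_READS = 200    # assumed read count for fixed-rate multi-sampling

for signal_e in (10.0, 100_000.0):   # a faint pixel and a bright pixel
    n_cond = reads_with_conditional_reset(signal_e, FWC_E)
    print(
        f"signal {signal_e:>8.0f} e: "
        f"single {snr(signal_e, 1, READ_NOISE_E):7.2f}, "
        f"fixed-rate {snr(signal_e, FIXED_READS, READ_NOISE_E):7.2f}, "
        f"conditional ({n_cond} reads) {snr(signal_e, n_cond, READ_NOISE_E):7.2f}"
    )
```

In this model the faint pixel suffers badly under fixed-rate sampling but is read only once with conditional reset, while the bright pixel loses very little because each read adds R² against roughly FWC/2 of shot-noise variance.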

Thus one cannot discern between a single exposure time image obtained with a sensor having a large FWC and an image obtained with an otherwise similar sensor but having relatively small FWC and utilizing multi-sampling provided that the overall exposure time is the same in both cases (and that there is no over-exposure in the single exposure time image). In other words, in both cases 1) and 2) FWC is not a fundamentally important parameter since it does not unavoidably affect the image quality. So the question is that if one cannot discern between multi-sampled and single exposure time images does it really matter if multi-sampling is utilized?

Actually, if the intensities in some image area were so small that the signal were still very small when the overall exposure ends, and if in case 1) intermediate NDCDS readout values (i.e. values that are read out when reset is not performed immediately afterwards) were stored to memory and utilized to perform e.g. regression analysis (i.e. fitting a straight line to the NDCDS readout results), one could reduce the overall read noise in multi-sampling below the read noise of a single exposure time image. Thus case 1) would actually enable detection of fainter signals than the single exposure time image (in this case one could naturally also discern between multi-sampled and single exposure time images). Another benefit of the stored intermediate NDCDS readouts is that one can remove image blur due to subject or camera movements by afterwards performing suitable translations and/or rotations on the whole image area and/or on a sub-image area.
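The line-fitting idea can be sketched with ordinary least squares. The assumptions here are mine: equally spaced non-destructive samples, independent read noise on every sample, and shot noise ignored (the faint-signal limit). The function name and the per-sample noise value are illustrative.

```python
import math

def ramp_fit_noise(n_samples, sample_noise_e):
    """Read-noise-limited uncertainty (e- rms) of the total signal estimated
    from a least-squares line fit through n equally spaced samples."""
    times = range(n_samples)
    mean_t = sum(times) / n_samples
    sxx = sum((t - mean_t) ** 2 for t in times)
    slope_sigma = sample_noise_e / math.sqrt(sxx)   # standard OLS slope error
    return slope_sigma * (n_samples - 1)            # scale slope to the full ramp

SAMPLE_NOISE_E = 2.0  # assumed noise of one non-destructive sample, electrons rms

two_point = ramp_fit_noise(2, SAMPLE_NOISE_E)   # first/last difference only
for n in (2, 4, 16, 64):
    sigma = ramp_fit_noise(n, SAMPLE_NOISE_E)
    print(f"{n:3d} samples: {sigma:.3f} e- rms ({sigma / two_point:.2f} x two-point)")
```

With two samples the fit reduces to a plain difference with sqrt(2) times the per-sample noise; more samples along the ramp pull the estimate below that, which is the effect described above.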

One should note that the higher frame rate does not necessarily mean shorter readout time per pixel if the amount of pixels per Analog to Digital Converter is reduced accordingly.

This blog item has been taken over by the "Aurola Manifesto" - a wild rambling and repetition of MIGS (say, DEPFET or CMD) alleged benefits and how great life would have been if only someone had actually recognized how good of a technology it really is, even though it has not ever been demonstrated.

The last entry discussion looks a lot like the Berezin patent - subsequently called digital integration. US 7,139,025 filed in Oct 1998. But, Berezin probably stole that too from Aurola.